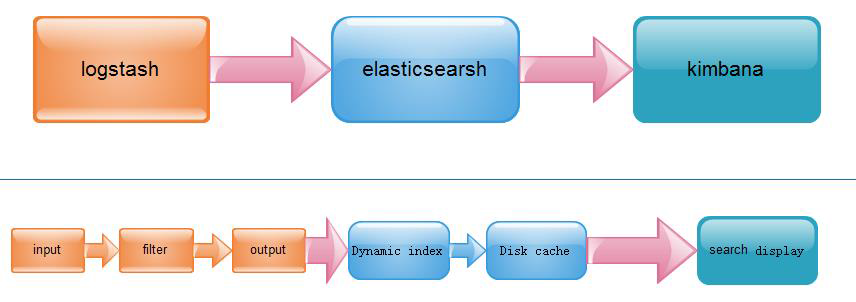

ELK is a complete log analysis system.

ELK = Logstash + Elasticsearch + Kibana

Official website for all three products: https://www.elastic.co/products

Logstash

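– Processes incoming logs: it collects, filters, and writes out log data.

Logstash is a log collection and processing tool, similar to Flume but somewhat more capable. Flume has three components: source, channel, and sink. Logstash likewise has three: input, filter, and output. The filter stage can do many things, such as parsing and formatting log data.

Installing Logstash

Logstash is ready to use as soon as it is unpacked: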
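To make the input / filter / output split concrete, here is a minimal Python sketch of the same three-stage flow. It is only an analogy, not how Logstash is implemented; the function names (`input_stage`, `filter_stage`, `output_stage`, `run_pipeline`) and the sample lines are hypothetical.

```python
import json
from typing import Iterable, Iterator, List


def input_stage(lines: Iterable[str]) -> Iterator[dict]:
    """Input: wrap each raw line in an event with a `message` field."""
    for line in lines:
        yield {"message": line.rstrip("\n")}


def filter_stage(events: Iterable[dict]) -> Iterator[dict]:
    """Filter: reshape events; here we split off a leading log level."""
    for event in events:
        level, _, text = event["message"].partition(" ")
        event["level"] = level
        event["text"] = text
        yield event


def output_stage(events: Iterable[dict]) -> List[str]:
    """Output: serialize events; a real output would write to a store."""
    return [json.dumps(event, sort_keys=True) for event in events]


def run_pipeline(lines: Iterable[str]) -> List[str]:
    """Chain the three stages in order: input, then filter, then output."""
    return output_stage(filter_stage(input_stage(lines)))


if __name__ == "__main__":
    for row in run_pipeline(["INFO service started", "WARN disk almost full"]):
        print(row)
```

Each stage only sees the events handed over by the previous one, which is why parsing and enrichment belong in the filter stage.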

tar -zxvf logstash-2.0.0.tar.gz

Try it out:

bin/logstash -e "input{stdin{}}output{stdout{codec=>rubydebug}}"
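With this command, every line typed on stdin is printed back as a structured event. As a rough illustration of the shape of that event, the Python sketch below builds a comparable dictionary. The helper `make_event` is hypothetical, and the field set (`message`, `@version`, `@timestamp`, `host`) is what the `rubydebug` codec typically shows for stdin input in Logstash 2.x; treat it as an assumption for other versions.

```python
import socket
from datetime import datetime, timezone
from typing import Optional


def make_event(line: str, host: Optional[str] = None) -> dict:
    """Build a dict shaped like the event Logstash prints for one stdin line."""
    now = datetime.now(timezone.utc)
    # ISO 8601 timestamp in UTC with millisecond precision
    timestamp = now.strftime("%Y-%m-%dT%H:%M:%S.") + f"{now.microsecond // 1000:03d}Z"
    return {
        "message": line.rstrip("\n"),
        "@version": "1",
        "@timestamp": timestamp,
        "host": host or socket.gethostname(),
    }


if __name__ == "__main__":
    print(make_event("hello world\n"))
```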

List the supported plugins:

bin/plugin list

logstash-codec-collectd

logstash-codec-dots

logstash-codec-edn

logstash-codec-edn_lines

logstash-codec-es_bulk

logstash-codec-fluent

logstash-codec-graphite

logstash-codec-json

logstash-codec-json_lines

logstash-codec-line

logstash-codec-msgpack

logstash-codec-multiline

logstash-codec-netflow

logstash-codec-oldlogstashjson

logstash-codec-plain

logstash-codec-rubydebug

logstash-filter-anonymize

logstash-filter-checksum

logstash-filter-clone

logstash-filter-csv

logstash-filter-date

logstash-filter-dns

logstash-filter-drop

logstash-filter-fingerprint

logstash-filter-geoip

logstash-filter-grok

logstash-filter-json

logstash-filter-kv

logstash-filter-metrics

logstash-filter-multiline

logstash-filter-mutate

logstash-filter-ruby

logstash-filter-sleep

logstash-filter-split

logstash-filter-syslog_pri

logstash-filter-throttle

logstash-filter-urldecode

logstash-filter-useragent

logstash-filter-uuid

logstash-filter-xml

logstash-input-beats

logstash-input-couchdb_changes

logstash-input-elasticsearch

logstash-input-eventlog

logstash-input-exec

logstash-input-file

logstash-input-ganglia

logstash-input-gelf

logstash-input-generator

logstash-input-graphite

logstash-input-heartbeat

logstash-input-http

logstash-input-imap

logstash-input-irc

logstash-input-kafka

logstash-input-log4j

logstash-input-lumberjack

logstash-input-pipe

logstash-input-rabbitmq

logstash-input-redis

logstash-input-s3

logstash-input-snmptrap

logstash-input-sqs

logstash-input-stdin

logstash-input-syslog

logstash-input-tcp

logstash-input-twitter

logstash-input-udp

logstash-input-unix

logstash-input-xmpp

logstash-input-zeromq

logstash-output-cloudwatch

logstash-output-csv

logstash-output-elasticsearch

logstash-output-email

logstash-output-exec

logstash-output-file

logstash-output-ganglia

logstash-output-gelf

logstash-output-graphite

logstash-output-hipchat

logstash-output-http

logstash-output-irc

logstash-output-juggernaut

logstash-output-kafka

logstash-output-lumberjack

logstash-output-nagios

logstash-output-nagios_nsca

logstash-output-null

logstash-output-opentsdb

logstash-output-pagerduty

logstash-output-pipe

logstash-output-rabbitmq

logstash-output-redis

logstash-output-s3

logstash-output-sns

logstash-output-sqs

logstash-output-statsd

logstash-output-stdout

logstash-output-tcp

logstash-output-udp

logstash-output-xmpp

logstash-output-zeromq

logstash-patterns-core

Commonly used filter plugins

logstash-filter-date

logstash-filter-geoip

logstash-filter-grok

Commonly used input plugin

logstash-input-beats

Beats is a newer component that has become very capable, which is why the stack is sometimes called ELKB.

bin/logstash -e 'input{stdin{}}filter{geoip{source => "message"}}output{stdout{codec => rubydebug}}'

Try writing data to Elasticsearch with a config file

vim logstash.conf

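input {
  file {
    path => "/usr/local/hadoop-2.5.1/logs/hadoop-root-namenode-node01.out"
    # Read the file from the start instead of only picking up new lines
    start_position => "beginning"
  }
}

filter {
  grok {
    # GREEDYDATA is used for the last field because a trailing non-greedy DATA would capture an empty string
    match => {
      "message" => "(?m)%{TIMESTAMP_ISO8601:date} %{WORD:log_type} %{DATA:classPath}:%{GREEDYDATA:data}"
    }
    overwrite => ["message"]
  }
  date {
    # Parse the extracted "date" field and use it as the event timestamp
    match => [ "date", "YYYY-MM-dd HH:mm:ss,SSS" ]
  }
}

output {
  elasticsearch {
    hosts => ["node01", "node02", "node03"]
  }
  # Also print each event to the console for debugging
  stdout { codec => rubydebug }
}

Run it: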

bin/logstash -f conf/logstash.conf

Then check the results in the web page.
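To see what the grok and date filters in the config above are meant to extract, the following Python sketch applies a comparable regular expression to one log line. The regex pieces are simplified stand-ins for the grok patterns (the real `TIMESTAMP_ISO8601` accepts more variants), the helper `parse_line` is hypothetical, and the sample line is made up for illustration. The last group is greedy here because a trailing non-greedy capture would match the empty string.

```python
import re
from datetime import datetime
from typing import Optional

# Simplified equivalents of the grok patterns (assumptions, not the real definitions):
#   TIMESTAMP_ISO8601 -> fixed "YYYY-MM-dd HH:mm:ss,SSS" layout only
#   WORD              -> \w+
#   DATA              -> .*?  (non-greedy)
LINE_RE = re.compile(
    r"(?P<date>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3}) "
    r"(?P<log_type>\w+) "
    r"(?P<classPath>.*?):"
    r"(?P<data>.*)",
    re.DOTALL,  # like (?m) in the grok expression: "." also matches newlines
)


def parse_line(line: str) -> Optional[dict]:
    """Return the extracted fields, or None when the line does not match."""
    match = LINE_RE.search(line)
    if match is None:
        return None
    event = match.groupdict()
    # Counterpart of the date filter: parse the captured text into a timestamp
    event["@timestamp"] = datetime.strptime(event["date"], "%Y-%m-%d %H:%M:%S,%f")
    return event


if __name__ == "__main__":
    sample = ("2015-11-20 10:12:13,456 INFO "
              "org.apache.hadoop.hdfs.server.namenode.NameNode: STARTUP_MSG: Starting NameNode")
    print(parse_line(sample))
```

Lines that do not fit the layout return `None` in this sketch; in Logstash, events that fail to match are by default tagged `_grokparsefailure` rather than dropped.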
