I. Shell operations

1. List all topics

kafka-topics --zookeeper zk01:2181/kafka --list

2. Create a topic (specifying partitions and replicas)

kafka-topics --zookeeper zk01:2181/kafka --create --topic test --partitions 1 --replication-factor 1

3. Show the details of a topic

kafka-topics --zookeeper zk01:2181/kafka --describe --topic test

4. Change the number of partitions: partitions can only be added, never removed

kafka-topics --zookeeper zk01:2181/kafka --alter --topic utopic --partitions 15

5. Delete a topic

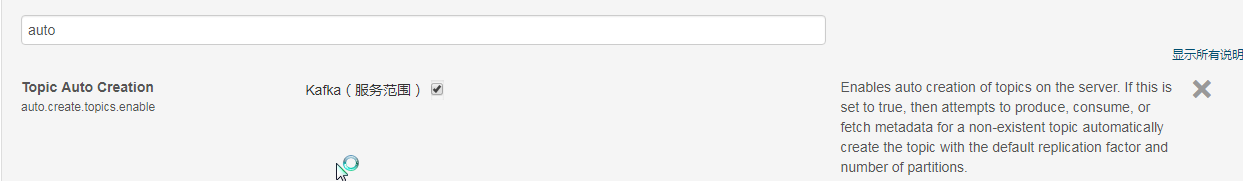

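kafka-topics --zookeeper zk01:2181/kafka --delete --topic test

This requires delete.topic.enable=true in server.properties; otherwise the topic is only marked for deletion, or a direct restart is needed. The allow-deletion option also has to be ticked on the configuration page, otherwise the delete is only a "pseudo" delete.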

6. Send messages (manually, one line at a time)

kafka-console-producer --broker-list kafka01:9092 --topic test

7. Send messages (through a pipe, pushing the content of a file in bulk)

tail -F /root/log.log | kafka-console-producer --broker-list kafka01:9092 --topic test

8. Consume messages

kafka-console-consumer --zookeeper zk01:2181/kafka --topic test --from-beginning

9. Check the consumer position

kafka-run-class kafka.tools.ConsumerOffsetChecker --zookeeper zk01:2181/kafka --group testgroup
kafka-run-class kafka.tools.ConsumerOffsetChecker --zookeeper zk01:2181/kafka --group testgroup --topic test

or

kafka-consumer-offset-checker --zookeeper zk01/kafka --group testgroup --topic test
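For each partition, the offset checker reports the group's committed offset, the log size, and the lag between the two. The arithmetic behind that lag column can be sketched in plain Java as below. This is only an illustration: the class `LagSketch`, its methods, and the sample numbers are made up for this example and are not part of Kafka. Treating a partition without a committed offset as offset 0, and never reporting a negative lag, are assumptions of the sketch.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper, not a Kafka class: mirrors the lag arithmetic only.
final class LagSketch {
    private LagSketch() {}

    // Lag = log size minus committed offset, never negative (assumption of this sketch).
    static long lag(long committedOffset, long logSize) {
        return Math.max(0L, logSize - committedOffset);
    }

    // Computes the lag per partition id; a partition with no committed
    // offset is treated as committed at 0 (assumption of this sketch).
    static Map<Integer, Long> lagByPartition(Map<Integer, Long> committed,
                                             Map<Integer, Long> logSizes) {
        Map<Integer, Long> result = new LinkedHashMap<>();
        for (Map.Entry<Integer, Long> entry : logSizes.entrySet()) {
            long offset = committed.getOrDefault(entry.getKey(), 0L);
            result.put(entry.getKey(), lag(offset, entry.getValue()));
        }
        return result;
    }

    public static void main(String[] args) {
        // Sample numbers invented for the demo.
        Map<Integer, Long> committed = new LinkedHashMap<>();
        committed.put(0, 120L);
        committed.put(1, 80L);
        Map<Integer, Long> logSizes = new LinkedHashMap<>();
        logSizes.put(0, 150L);
        logSizes.put(1, 80L);
        System.out.println(lagByPartition(committed, logSizes));
    }
}
```

A lag of 0 means the group has read everything currently in that partition; a lag that keeps growing means the consumers are falling behind the producers.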

II. Java API

1. Maven dependency

<dependency>
    <groupId>org.apache.kafka</groupId>
    <artifactId>kafka_2.10</artifactId>
    <version>0.9.0.0</version>
    <exclusions>
        <exclusion>
            <groupId>junit</groupId>
            <artifactId>junit</artifactId>
        </exclusion>
    </exclusions>
</dependency>

2. Custom producer partitioner

package com.bigdata.hadoop.kafka.producer;

import kafka.producer.DefaultPartitioner;
import kafka.utils.VerifiableProperties;

/**
 * Custom partitioner for the old producer API.
 */
public class KafkaPartitioner extends DefaultPartitioner {

    public KafkaPartitioner(VerifiableProperties props) {
        super(props);
    }

    public int partition(Object obj, int numPartitions) {
        // Hash-based alternative:
        // return Math.abs(obj.hashCode() % numPartitions);
        // Demo only: every message goes to partition 2, so the topic needs at least 3 partitions.
        return 2;
    }
}
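The commented-out line in the partitioner above picks a partition from the key's hash code. As a minimal sketch of that idea without any Kafka dependency, the class below applies the same expression to plain objects. The names `HashPartitionSketch` and `partitionFor` are hypothetical and exist only for this illustration.

```java
// Hypothetical stand-alone illustration of hash-based partition selection.
final class HashPartitionSketch {
    private HashPartitionSketch() {}

    // Same expression as the commented-out line in KafkaPartitioner.
    // The remainder lies strictly between -numPartitions and numPartitions,
    // so its absolute value is always a valid partition index.
    static int partitionFor(Object key, int numPartitions) {
        if (numPartitions <= 0) {
            throw new IllegalArgumentException("numPartitions must be positive");
        }
        return Math.abs(key.hashCode() % numPartitions);
    }

    public static void main(String[] args) {
        int numPartitions = 3;
        int[] counts = new int[numPartitions];
        // Spread some sample keys and count how many land on each partition.
        for (int i = 0; i < 1000; i++) {
            counts[partitionFor("key-" + i, numPartitions)]++;
        }
        for (int p = 0; p < numPartitions; p++) {
            System.out.println("partition " + p + ": " + counts[p]);
        }
    }
}
```

Taking the remainder first and the absolute value second matters: `Math.abs(hash) % n` would break for a hash of `Integer.MIN_VALUE`, whose absolute value is still negative. The same key always maps to the same partition as long as the partition count does not change.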

3. Old and new producer APIs (mapping the Kafka hostnames in the machine's hosts file is not mandatory)

package com.bigdata.hadoop.kafka.producer;

import kafka.javaapi.producer.Producer;
import kafka.producer.KeyedMessage;
import kafka.producer.ProducerConfig;
import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.ProducerRecord;
import org.apache.kafka.common.serialization.StringSerializer;

import java.util.Properties;

/**
 * Test of the old and new Kafka producer APIs.
 */
public class ProducerTest {
    public static void main(String[] args) {
        // Old API
        Properties oldProps = new Properties();
        oldProps.setProperty("metadata.broker.list", "cloudera:9092");
        oldProps.setProperty("request.required.acks", "-1");
        oldProps.setProperty("partitioner.class", KafkaPartitioner.class.getName());
        Producer<byte[], byte[]> oldProducer = new Producer<byte[], byte[]>(new ProducerConfig(oldProps));
        for (int i = 0; i < 1000; i++) {
            oldProducer.send(new KeyedMessage<>("one", ("msg one:" + i).getBytes()));
            oldProducer.send(new KeyedMessage<>("two", ("msg two:" + i).getBytes()));
        }
        oldProducer.close();

        // New API ==> Kafka 0.9 and later
        Properties newProps = new Properties();
        newProps.setProperty("bootstrap.servers", "cloudera:9092");
        newProps.setProperty("key.serializer", StringSerializer.class.getName());
        newProps.setProperty("value.serializer", StringSerializer.class.getName());
        // Note: the new producer expects an implementation of
        // org.apache.kafka.clients.producer.Partitioner here. KafkaPartitioner above
        // extends the old-API DefaultPartitioner, so a separate new-API class is needed.
        newProps.put("partitioner.class", KafkaPartitioner.class);
        KafkaProducer<String, String> newProducer = new KafkaProducer<String, String>(newProps);
        for (int i = 0; i < 100000; i++) {
            newProducer.send(new ProducerRecord<>("one", "msg one:" + i));
            newProducer.send(new ProducerRecord<>("two", "msg two:" + i));
        }
        // send() is asynchronous; close() flushes the buffered records before exiting
        newProducer.close();
    }
}

4. Custom consumer "partition assignor" => the default kafka.consumer.RangeAssignor is fine

package com.bigdata.hadoop.kafka.consumer;

import kafka.common.TopicAndPartition;
import kafka.consumer.AssignmentContext;
import kafka.consumer.ConsumerThreadId;
import kafka.consumer.RangeAssignor;
import kafka.utils.Pool;

/**
 * Custom partition assignor; rarely needed in practice.
 */
public class PartitionAssignor extends RangeAssignor {
    public Pool<String, scala.collection.mutable.Map<TopicAndPartition, ConsumerThreadId>> assign(final AssignmentContext ctx) {
        // Placeholder: a real implementation must return the assignment
        return null;
    }
}

5. Old and new consumer APIs (the broker hostnames must be mapped in the hosts file)

package com.bigdata.hadoop.kafka.consumer;

import kafka.consumer.Consumer;
import kafka.consumer.ConsumerConfig;
import kafka.consumer.ConsumerIterator;
import kafka.consumer.KafkaStream;
import kafka.javaapi.consumer.ConsumerConnector;
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.clients.consumer.ConsumerRecords;
import org.apache.kafka.clients.consumer.KafkaConsumer;
import org.apache.kafka.clients.consumer.OffsetAndTimestamp;
import org.apache.kafka.common.PartitionInfo;
import org.apache.kafka.common.TopicPartition;
import org.apache.kafka.common.serialization.StringDeserializer;

import java.util.*;

/**
 * Test of the old and new Kafka consumer APIs.
 */
public class ConsumerTest {
    public static void main(String[] args) {
        /**
         * Old consumer API, version 0.8
         */
        Properties oldProps = new Properties();
        oldProps.setProperty("zookeeper.connect", "192.168.50.10:2181");
        oldProps.setProperty("group.id", "testGroup");
        oldProps.setProperty("auto.offset.reset", "smallest"); // smallest or largest
        if (false) { // Kerberos authentication switch
            oldProps.setProperty("security.protocol", "SASL_PLAINTEXT");
        }
        HashMap<String, Integer> topicPartitionsMap = new HashMap<String, Integer>();
        topicPartitionsMap.put("one", 1);
        ConsumerConnector oldConsumer = Consumer.createJavaConsumerConnector(new ConsumerConfig(oldProps));
        Map<String, List<KafkaStream<byte[], byte[]>>> topicAndParStream = oldConsumer.createMessageStreams(topicPartitionsMap);
        for (Map.Entry<String, List<KafkaStream<byte[], byte[]>>> entry : topicAndParStream.entrySet()) {
            for (KafkaStream<byte[], byte[]> kafkaStream : entry.getValue()) {
                // Handle each stream in its own thread, so that every thread reads
                // from one partition directly and read throughput improves
                ConsumerIterator<byte[], byte[]> it = kafkaStream.iterator();
                new Thread(() -> {
                    while (it.hasNext()) {
                        System.out.println(new String(it.next().message()));
                    }
                }).start();
            }
        }

        /**
         * New API ==> Kafka 0.9 and later, much more capable
         */
        Map<String, Object> newProps = new HashMap<>();
        newProps.put("bootstrap.servers", "hosts1:9092,hosts2:9092");
        newProps.put("group.id", "testGroup1");
        newProps.put("auto.offset.reset", "earliest"); // earliest or latest
        newProps.put("key.deserializer", StringDeserializer.class);
        newProps.put("value.deserializer", StringDeserializer.class);
        if (false) { // Kerberos authentication switch
            newProps.put("security.protocol", "SASL_PLAINTEXT");
        }
        KafkaConsumer<String, String> newConsumer = new KafkaConsumer<>(newProps);

        // Subscribe to topics
        LinkedList<String> topics = new LinkedList<>();
        topics.add("wl_008");
        topics.add("fj_008");
        newConsumer.subscribe(topics);

        // Get the information of every partition, including its leader
        Map<String, List<PartitionInfo>> topicMap = newConsumer.listTopics();
        List<PartitionInfo> onePartitions = newConsumer.partitionsFor("wl_008");

        // Collect all partitions
        LinkedList<TopicPartition> topicPartitions = new LinkedList<>();
        for (String topic : topics) {
            for (PartitionInfo parTemp : newConsumer.partitionsFor(topic)) {
                topicPartitions.add(new TopicPartition(topic, parTemp.partition()));
            }
        }

        // Get the earliest and latest offset of each partition => version 0.10 and later
        Map<TopicPartition, Long> beginningOffsets = newConsumer.beginningOffsets(topicPartitions);
        Map<TopicPartition, Long> endOffsets = newConsumer.endOffsets(topicPartitions);

        // If the version does not support the calls above, use consumer.assign().
        // Note: consumer.unsubscribe() must be called first, otherwise the two conflict
        newConsumer.unsubscribe();
        newConsumer.assign(topicPartitions);

        newConsumer.seekToBeginning(topicPartitions); // move to the start to read the beginning offsets
        HashMap<TopicPartition, Long> beginOffsetsMap = new HashMap<>();
        for (TopicPartition temp : topicPartitions) {
            beginOffsetsMap.put(temp, newConsumer.position(temp));
        }

        newConsumer.seekToEnd(topicPartitions); // move to the end to read the end offsets
        HashMap<TopicPartition, Long> endOffsetsMap = new HashMap<>();
        for (TopicPartition temp : topicPartitions) {
            endOffsetsMap.put(temp, newConsumer.position(temp));
        }

        // Jump to the beginning and consume from there
        newConsumer.seekToBeginning(topicPartitions);

        // Jump to the latest position and consume from there
        newConsumer.seekToEnd(topicPartitions);

        // Consume from a specific position
        newConsumer.seek(new TopicPartition("wl_008", 0), 100L);
        newConsumer.seek(new TopicPartition("fj_008", 0), 100L);
        newConsumer.seek(new TopicPartition("fj_008", 1), 100L);

        // Other features
        Set<TopicPartition> assignment = newConsumer.assignment();
        // offsetsForTimes expects timestamps in milliseconds, not offsets;
        // "one hour ago" is used here purely as an example value
        Map<TopicPartition, Long> timestampsToSearch = new HashMap<>();
        long oneHourAgo = System.currentTimeMillis() - 60 * 60 * 1000L;
        for (TopicPartition temp : topicPartitions) {
            timestampsToSearch.put(temp, oneHourAgo);
        }
        Map<TopicPartition, OffsetAndTimestamp> timestampMap = newConsumer.offsetsForTimes(timestampsToSearch);
        newConsumer.pause(topicPartitions);  // pause the partitions
        newConsumer.resume(topicPartitions); // resume the partitions

        // Consume data
        while (true) {
            ConsumerRecords<String, String> records = newConsumer.poll(100L);
            for (ConsumerRecord<String, String> temp : records) {
                System.out.println(temp.value());
            }
        }
    }
}

6. Getting the earliest and latest Kafka offsets with the old API

package com.bigdata.hadoop.kafka.utils;

import kafka.api.PartitionOffsetRequestInfo;
import kafka.common.TopicAndPartition;
import kafka.javaapi.*;
import kafka.javaapi.consumer.SimpleConsumer;
import org.apache.kafka.common.TopicPartition;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

import java.util.*;
import java.util.Map.Entry;

/**
 * Gets the readable offset range (minimum and maximum) of every topic partition on the Kafka brokers.
 * 1. First find the leader of each topic partition.
 * 2. Then connect to that leader to get the minimum and maximum.
 * Note: the clientID value is arbitrary, but a meaningful value makes troubleshooting easier.
 */
public class KafkaHeplerOldAPI {
    private static Logger logger = LoggerFactory.getLogger(KafkaHeplerOldAPI.class);

    /**
     * Finds the partition leaders.
     * key: topic
     * value: all partition metadata of the topic, including the leader
     * The last SimpleConsumer argument, clientID, is arbitrary; a meaningful value is recommended.
     */
    public static HashMap<String, List<PartitionMetadata>> findLeader(String brokerHost, int port, List<String> topicList) {
        HashMap<String, List<PartitionMetadata>> map = new HashMap<>();
        SimpleConsumer consumer = null;
        try {
            consumer = new SimpleConsumer(brokerHost, port, 100000, 64 * 1024, "findLeader" + System.currentTimeMillis());
            TopicMetadataRequest req = new TopicMetadataRequest(topicList);
            TopicMetadataResponse resp = consumer.send(req);
            List<TopicMetadata> metaData = resp.topicsMetadata();
            for (TopicMetadata item : metaData) {
                map.put(item.topic(), item.partitionsMetadata());
            }
        } catch (Exception e) {
            logger.error("Failed to find the leader via brokerHost:{}, brokerPort:{}, topic:{}", brokerHost, port, String.join("|", topicList), e);
        } finally {
            if (consumer != null) {
                consumer.close();
            }
        }
        return map;
    }

    /**
     * Gets the offset of the given topic and partition from the leader's host and port.
     * clientName is arbitrary; a meaningful value is recommended.
     * Note: requestInfo could carry every partition hosted on this leader, so that all
     * offsets come back in one request and fewer leader connections are needed. That
     * would mean returning a list and mapping the results back, though.
     * The current approach is more direct. Its drawback is one connection per
     * partition, but in practice each call takes very little time.
     */
    public static long getOffsetByLeader(String leadHost, int leadPort, String topic, int partition, boolean isLast) {
        String clientName = "Client_" + topic + "_" + partition;
        long indexFlag = isLast ? kafka.api.OffsetRequest.LatestTime() : kafka.api.OffsetRequest.EarliestTime();
        Map<TopicAndPartition, PartitionOffsetRequestInfo> requestInfo = new HashMap<>();
        requestInfo.put(new TopicAndPartition(topic, partition), new PartitionOffsetRequestInfo(indexFlag, 1));
        kafka.javaapi.OffsetRequest request = new kafka.javaapi.OffsetRequest(requestInfo, kafka.api.OffsetRequest.CurrentVersion(), clientName);
        SimpleConsumer consumer = new SimpleConsumer(leadHost, leadPort, 100000, 64 * 1024, clientName);
        OffsetResponse response;
        try {
            response = consumer.getOffsetsBefore(request);
        } finally {
            // Close the connection even if the request throws
            consumer.close();
        }
        if (response.hasError()) {
            logger.error("getOffsetByLeader failed for topicAndPartition:[{}]", topic + "_" + partition);
            return 0;
        }
        long[] offsets = response.offsets(topic, partition);
        return offsets[0];
    }

    /**
     * Gets the offsets, either the earliest or the latest.
     * First finds the leader of every partition of every topic.
     */
    private static Map<TopicPartition, Long> getOffsetOneBroker(String kafkaHost, int kafkaPort, List<String> topicList, boolean isLast) {
        Map<TopicPartition, Long> partionAndOffset = new HashMap<>();
        // Get the partition metadata of every topic; PartitionMetadata contains the leader
        HashMap<String, List<PartitionMetadata>> metadatas = findLeader(kafkaHost, kafkaPort, topicList);
        for (Entry<String, List<PartitionMetadata>> entry : metadatas.entrySet()) {
            String topic = entry.getKey();
            for (PartitionMetadata temp : entry.getValue()) {
                long offset = getOffsetByLeader(temp.leader().host(), temp.leader().port(), topic, temp.partitionId(), isLast);
                partionAndOffset.put(new TopicPartition(topic, temp.partitionId()), offset);
            }
        }
        return partionAndOffset;
    }

    /**
     * Tries the brokers in the given bootstrapServers setting one by one.
     * Passing a single reachable broker is enough, because any broker that can be
     * connected to can report all the leaders.
     */
    private static Map<TopicPartition, Long> getOffsetAllHosts(String bootstrapServers, List<String> topicList, boolean isLast) {
        String[] servers = bootstrapServers.split(",");
        List<String> kafkaHosts = new ArrayList<String>();
        List<Integer> kafkaPorts = new ArrayList<Integer>();
        for (String server : servers) {
            String[] hostAndPort = server.split(":");
            kafkaHosts.add(hostAndPort[0]);
            kafkaPorts.add(Integer.parseInt(hostAndPort[1]));
        }
        Map<TopicPartition, Long> partionAndOffset = null;
        for (int i = 0, size = kafkaHosts.size(); i < size; i++) {
            partionAndOffset = getOffsetOneBroker(kafkaHosts.get(i), kafkaPorts.get(i), topicList, isLast);
            if (partionAndOffset.size() > 0) {
                break;
            }
        }
        return partionAndOffset;
    }

    /**
     * Works around kafka.common.OffsetOutOfRangeException.
     * The exception occurs when the Kafka offset that the streaming job recorded in
     * ZooKeeper is smaller than the earliest valid offset in Kafka.
     * <p>
     * The earliest offset starts at 0 and slowly grows as expired data
     * (retained for 7 days by default) is deleted.
     * If Kafka holds no readable data, the latest and earliest values are the same.
     *
     * @param bootstrapServers Kafka setting, e.g. hosts1:9092,hosts2:9092,hosts3:9092
     */
    public static Map<TopicPartition, Long> getEarliestOffset(String bootstrapServers, List<String> topicList) throws Exception {
        return getOffsetAllHosts(bootstrapServers, topicList, false);
    }

    /**
     * Initializes to the latest data.
     *
     * @param bootstrapServers Kafka setting, e.g. hosts1:9092,hosts2:9092,hosts3:9092
     */
    public static Map<TopicPartition, Long> getLastestOffset(String bootstrapServers, List<String> topicList) throws Exception {
        return getOffsetAllHosts(bootstrapServers, topicList, true);
    }

    public static void main(String[] args) throws Exception {
        List<String> topicList = Arrays.asList("one,two".split(","));
        Map<TopicPartition, Long> lastestOffset = getLastestOffset("cloudera:9092", topicList);
        Map<TopicPartition, Long> earliestOffset = getEarliestOffset("cloudera:9092", topicList);
    }
}
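The Javadoc on getEarliestOffset above explains that OffsetOutOfRangeException appears when a stored offset has fallen below the earliest valid offset. One common way to use the two values returned by this helper is to clamp the stored offset into the readable range before consuming. The sketch below shows that step in plain Java, under stated assumptions: the class `OffsetClampSketch` and its method are hypothetical, and clamping an offset that is ahead of the latest value back to the latest value is a design choice of this sketch, not something the original code does.

```java
// Hypothetical helper: clamps a stored offset into the readable range
// [earliest, latest] reported by the broker.
final class OffsetClampSketch {
    private OffsetClampSketch() {}

    static long clamp(long storedOffset, long earliest, long latest) {
        if (earliest > latest) {
            throw new IllegalArgumentException("earliest must not exceed latest");
        }
        if (storedOffset < earliest) {
            // The data at the stored offset has expired: restart from the earliest readable offset.
            return earliest;
        }
        if (storedOffset > latest) {
            // The stored offset points past the log end: fall back to the latest offset.
            return latest;
        }
        return storedOffset;
    }

    public static void main(String[] args) {
        // Sample values invented for the demo: offsets 0..499 have already expired.
        long earliest = 500L;
        long latest = 2000L;
        System.out.println(clamp(120L, earliest, latest));
        System.out.println(clamp(1500L, earliest, latest));
        System.out.println(clamp(9999L, earliest, latest));
    }
}
```

In a real job the `earliest` and `latest` arguments would come from getEarliestOffset and getLastestOffset for the same topic partition, and the clamped value would replace the stored offset before the consumer starts reading.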

7. Getting the earliest and latest Kafka offsets with the new API

package com.bigdata.hadoop.kafka.utils;

import org.apache.kafka.clients.consumer.KafkaConsumer;
import org.apache.kafka.common.PartitionInfo;
import org.apache.kafka.common.TopicPartition;
import org.apache.kafka.common.serialization.StringDeserializer;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

import java.util.*;

/**
 * Gets the readable offset range (minimum and maximum) of every topic partition on the Kafka brokers.
 */
public class KafkaHeplerNewAPI {
    private static Logger logger = LoggerFactory.getLogger(KafkaHeplerNewAPI.class);

    /**
     * Gets the latest offsets.
     *
     * @param consumer
     * @param topics
     * @return
     */
    public static Map<TopicPartition, Long> getLastOffsets(KafkaConsumer<String, String> consumer, List<String> topics) {
        // Collect all partitions
        LinkedList<TopicPartition> topicPartitions = new LinkedList<>();
        for (String topic : topics) {
            for (PartitionInfo parTemp : consumer.partitionsFor(topic)) {
                topicPartitions.add(new TopicPartition(topic, parTemp.partition()));
            }
        }
        // Get the latest offset of each partition => version 0.10 and later
        Map<TopicPartition, Long> result = consumer.endOffsets(topicPartitions);
        // If the API does not support the call above, use the following instead
        // Map<TopicPartition, Long> result = new HashMap<>();
        // consumer.assign(topicPartitions);
        // consumer.seekToEnd(topicPartitions); // important: seek to the end first, only then is the latest offset available
        // for (TopicPartition temp : topicPartitions) {
        //     result.put(temp, consumer.position(temp));
        // }
        return result;
    }

    /**
     * Gets the earliest offsets.
     *
     * @param consumer
     * @param topics
     * @return
     */
    public static Map<TopicPartition, Long> getEarliestOffsets(KafkaConsumer<String, String> consumer, List<String> topics) {
        // Collect all partitions
        LinkedList<TopicPartition> topicPartitions = new LinkedList<>();
        for (String topic : topics) {
            for (PartitionInfo parTemp : consumer.partitionsFor(topic)) {
                topicPartitions.add(new TopicPartition(topic, parTemp.partition()));
            }
        }
        // Get the earliest offset of each partition => version 0.10 and later
        Map<TopicPartition, Long> result = consumer.beginningOffsets(topicPartitions);
        // If the API does not support the call above, use the following instead
        // Map<TopicPartition, Long> result = new HashMap<>();
        // consumer.assign(topicPartitions);
        // consumer.seekToBeginning(topicPartitions); // important: seek to the beginning first, only then is the earliest offset available
        // for (TopicPartition temp : topicPartitions) {
        //     result.put(temp, consumer.position(temp));
        // }
        return result;
    }

    public static void main(String[] args) throws Exception {
        List<String> topicList = Arrays.asList("one,two".split(","));
        Map<String, Object> props = new HashMap<>();
        props.put("bootstrap.servers", "cloudera:9092");
        props.put("group.id", "testGroup1");
        props.put("auto.offset.reset", "earliest"); // earliest or latest
        props.put("key.deserializer", StringDeserializer.class);
        props.put("value.deserializer", StringDeserializer.class);
        if (false) { // Kerberos authentication switch
            props.put("security.protocol", "SASL_PLAINTEXT");
        }
        KafkaConsumer<String, String> consumer = new KafkaConsumer<String, String>(props);
        Map<TopicPartition, Long> lastestOffset = getLastOffsets(consumer, topicList);
        Map<TopicPartition, Long> earliestOffset = getEarliestOffsets(consumer, topicList);
        consumer.close();
    }
}

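This article walks through basic Kafka operations, both through shell commands and through the Java API. It covers topic management, producing and consuming messages, and concrete implementations for fetching the earliest and latest offsets.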
