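Purpose: run a MapReduce job without relying on the Hadoop Eclipse plugin; only the relevant MapReduce parameters need to be configured.

Result: job details can be viewed in the YARN web UI at http://hostname:8088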

```java
package com.zhiwei.mapreduce;

import java.io.IOException;
import java.util.Map;
import java.util.Map.Entry;
import java.util.StringTokenizer;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

/**
 * Counts word frequencies in text.
 *
 * Before running:
 * 1. start-all.sh starts the HDFS/YARN services (or: start-dfs.sh, start-yarn.sh)
 * 2. mr-jobhistory-daemon.sh start historyserver starts the MR job history service
 *
 * @author Zerone1993
 */
public class WordCount {

    static class WordMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

        @Override
        protected void map(LongWritable key, Text value, Mapper<LongWritable, Text, Text, IntWritable>.Context context)
                throws IOException, InterruptedException {
            // Split the line on whitespace and emit (word, 1) for every token
            StringTokenizer st = new StringTokenizer(value.toString());
            while (st.hasMoreTokens()) {
                context.write(new Text(st.nextToken()), new IntWritable(1));
            }
        }
    }

    static class WordReducer extends Reducer<Text, IntWritable, Text, IntWritable> {

        @Override
        protected void reduce(Text key, Iterable<IntWritable> values,
                Reducer<Text, IntWritable, Text, IntWritable>.Context context) throws IOException, InterruptedException {
            // Sum all counts emitted for this word
            int sum = 0;
            for (IntWritable value : values) {
                sum += value.get();
            }
            context.write(new Text(key), new IntWritable(sum));
        }
    }

    /**
     * Manual configuration of the MR job parameters:
     * 1. fs.defaultFS: if not set, the job may fail because its temporary files cannot be created
     * 2. mapreduce.framework.name: the resource scheduling framework that runs the MR job
     * 3. yarn.resourcemanager.address: the entry point for submitting applications to YARN
     * 4. mapreduce.app-submission.cross-platform: enables cross-platform job submission;
     *    if not set, script execution errors occur
     */
    public static Configuration initMRJobConfig(Map<String, String> props) {
        Configuration conf = new Configuration();
        if (props == null || props.size() == 0) {
            return conf;
        }
        // Copy the manually supplied properties into the Configuration
        for (Entry<String, String> entry : props.entrySet()) {
            conf.set(entry.getKey(), entry.getValue());
        }
        return conf;
    }

    /**
     * Shortcut configuration (recommended, done in one step):
     * load all of Hadoop's core configuration files into the Configuration,
     * which parses the properties automatically.
     *
     * Note: a Hadoop cluster is usually configured with host names rather than IPs;
     * if the client has no host-name mapping, name resolution errors may occur.
     */
    public static Configuration initMRJobConfig(String... resources) {
        Configuration conf = new Configuration();
        if (resources == null || resources.length == 0) {
            return conf;
        }
        // Register each configuration file; a String name is looked up on the classpath
        for (String res : resources) {
            conf.addResource(res);
        }
        return conf;
    }

    /**
     * Runs as a plain Java application through main; no Hadoop Eclipse plugin is needed.
     *
     * hadoop.home.dir is set manually (see the Hadoop source), so the environment
     * variables HADOOP_HOME and PATH do not have to be configured.
     *
     * Key setup: place winutils.exe in the $HADOOP_HOME/bin directory.
     */
    public static void main(String[] args) {
        System.setProperty("hadoop.home.dir", "D:\\Tools\\hadoop-2.7.3");

        // Manual configuration (needs an extra import of java.util.HashMap):
        // Map<String,String> props = new HashMap<String, String>();
        // props.put("fs.defaultFS", "hdfs://192.168.204.129:9090");
        // props.put("mapreduce.framework.name","yarn");
        // props.put("yarn.resourcemanager.address","192.168.204.129:8032");
        // props.put("mapreduce.app-submission.cross-platform", "true");
        // Configuration conf = initMRJobConfig(props);

        // Shortcut configuration (one step): load the Hadoop configuration resources.
        // Properties with the same name are overridden; watch out for host-name vs. IP settings.
        Configuration conf = initMRJobConfig("core-site.xml", "hdfs-site.xml", "mapred-site.xml", "yarn-site.xml");

        try {
            Job job = Job.getInstance(conf, "wordCount");

            // Absolute path of the MR job jar (wordcount.jar must be on the classpath)
            String jobJarPath = WordCount.class.getClassLoader().getResource("wordcount.jar").getPath();

            // job.setJarByClass does not work when the job is launched from main
            // job.setJarByClass(WordCount.class);
            job.setJar(jobJarPath);

            job.setMapperClass(WordMapper.class);          // Mapper class
            job.setReducerClass(WordReducer.class);        // Reducer class
            job.setMapOutputKeyClass(Text.class);          // key type emitted by the mapper
            job.setMapOutputValueClass(IntWritable.class); // value type emitted by the mapper
            job.setOutputKeyClass(Text.class);             // key type of the final (reducer) output
            job.setOutputValueClass(IntWritable.class);    // value type of the final (reducer) output

            // Number of reduce tasks: determines how many output files the reduce phase produces
            job.setNumReduceTasks(1);

            // Input and output directories of the MapReduce job
            FileInputFormat.addInputPath(job, new Path("hdfs://192.168.204.129:9090/data/mapreduce/input"));
            FileOutputFormat.setOutputPath(job, new Path("hdfs://192.168.204.129:9090/data/mapreduce/output"));

            // Wait for the job to finish; waitForCompletion returns true on success.
            // The exit code follows the Linux process convention: 0 means success.
            System.exit(job.waitForCompletion(true) ? 0 : 1);
        } catch (Exception e) {
            e.printStackTrace();
            System.exit(1); // report failure instead of falling through with exit code 0
        }
    }
}
```
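To make it easier to see what the Mapper and Reducer above compute, here is a minimal plain-Java sketch of the same logic that runs without a cluster. It is only an illustration: the class name `LocalWordCountSketch` and the sample lines are assumptions, and no Hadoop classes are involved, so shuffling, partitioning, and HDFS I/O are not modeled.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

// Hypothetical helper, not part of the original project.
final class LocalWordCountSketch {

    // Mirrors WordMapper + WordReducer: split each line on whitespace,
    // then add 1 to the running total of every token.
    static Map<String, Integer> count(List<String> lines) {
        Map<String, Integer> counts = new TreeMap<>(); // keeps words in sorted order
        for (String line : lines) {
            StringTokenizer st = new StringTokenizer(line);
            while (st.hasMoreTokens()) {
                counts.merge(st.nextToken(), 1, Integer::sum);
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList("hello hadoop", "hello yarn");
        // Print one "word<TAB>count" pair per line
        count(lines).forEach((word, n) -> System.out.println(word + "\t" + n));
    }
}
```

With the two sample lines, the sketch counts `hello` twice and `hadoop` and `yarn` once each, which is the kind of result the real job should write to its output directory for the same input.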

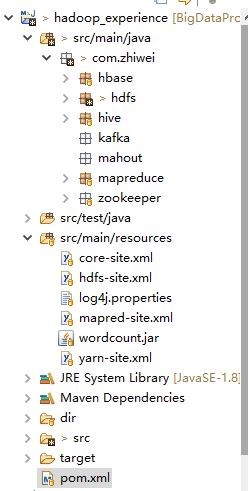

项目结构图:
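The code comments warn that the cluster is usually configured with host names, so the client needs a matching host-name mapping. One way to check this before submitting a job is a small resolution test like the sketch below. The class name `HostCheckSketch` and the default host `hadoop-master` are placeholders, not values from the original setup; pass the host names that actually appear in your own `*-site.xml` files.

```java
import java.net.InetAddress;
import java.net.UnknownHostException;

// Hypothetical helper, not part of the original project.
final class HostCheckSketch {

    // Returns true if the client can resolve the given host name or IP literal.
    static boolean resolves(String host) {
        try {
            InetAddress.getByName(host);
            return true;
        } catch (UnknownHostException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // "hadoop-master" is an assumed placeholder host name
        String[] hosts = args.length > 0 ? args : new String[] {"hadoop-master"};
        for (String host : hosts) {
            String status = resolves(host)
                    ? "resolves"
                    : "NOT resolvable; add a mapping to the client's hosts file";
            System.out.println(host + " -> " + status);
        }
    }
}
```

If a name is reported as not resolvable, adding an IP-to-host-name entry to the client's hosts file is the usual fix.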

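This article describes a way to run MapReduce jobs without the Hadoop Eclipse plugin: by configuring the relevant parameters, it implements a word-frequency count over text. It provides a complete code example, including the Mapper and Reducer implementations and how to configure and submit the MapReduce job from a local environment.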
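For the manual configuration route, the four properties listed in the code comments can be collected in one place. The sketch below is a plain-Java illustration under stated assumptions: the class `ManualJobPropsSketch` and the method `clusterProps` are hypothetical names, and the resulting map is what one would pass to the article's `initMRJobConfig(Map<String,String>)`.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper, not part of the original project.
final class ManualJobPropsSketch {

    // Builds the four properties the article sets by hand.
    static Map<String, String> clusterProps(String host, int fsPort, int rmPort) {
        Map<String, String> props = new LinkedHashMap<>();
        props.put("fs.defaultFS", "hdfs://" + host + ":" + fsPort);
        props.put("mapreduce.framework.name", "yarn");
        props.put("yarn.resourcemanager.address", host + ":" + rmPort);
        props.put("mapreduce.app-submission.cross-platform", "true");
        return props;
    }

    public static void main(String[] args) {
        // Host and ports are the ones used in the article's commented-out example
        Map<String, String> props = clusterProps("192.168.204.129", 9090, 8032);
        props.forEach((k, v) -> System.out.println(k + " = " + v));
    }
}
```

Keeping the host and ports as parameters avoids repeating the address in several strings, which is where mismatches between `fs.defaultFS` and the input/output paths tend to creep in.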
