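Oracle is a general-purpose product refined over many years of development, so its quality is very high. An application, by contrast, is custom-built for one customer as a one-off, so quality problems are more likely to come from the application.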

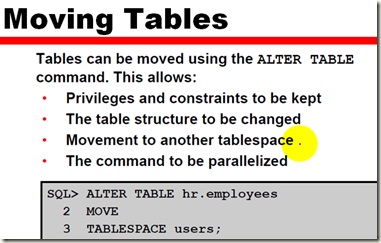

In the database, a move means copying the old table into a new table and then renaming the new table to the old table's name.

Why move a table? Its physical storage may be in poor shape (for example, heavily fragmented), or its PCTFREE and PCTUSED settings may need to change.
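A toy Python model can make the move idea concrete. It is a sketch under stated assumptions, not how Oracle implements it: a table is a list of blocks holding at most `BLOCK_ROWS` rows (assumed to be 10), a deleted row leaves a `None` gap, and the hypothetical `move_table` copies the surviving rows into freshly packed blocks while keeping PCTFREE percent of each new block empty. Rebinding the name in the `catalog` dict stands in for the rename step.

```python
BLOCK_ROWS = 10  # assumed capacity of one block, in rows (illustrative only)

def move_table(blocks, pctfree):
    """Copy the live rows of a table into new, densely packed blocks.

    blocks  -- list of blocks; each block is a list of rows, None marks a gap left by a delete
    pctfree -- percentage of each new block kept free for future updates
    """
    usable = max(1, BLOCK_ROWS * (100 - pctfree) // 100)
    live_rows = [row for block in blocks for row in block if row is not None]
    return [live_rows[i:i + usable] for i in range(0, len(live_rows), usable)]

# Old table: 6 blocks, but two thirds of the rows have been deleted (fragmentation).
old_blocks = [[r if r % 3 == 0 else None for r in range(b * 10, b * 10 + 10)]
              for b in range(6)]
catalog = {"T": old_blocks}  # hypothetical table name -> segment

# "Move": build the new segment, then let it take over the old table's name.
new_blocks = move_table(catalog["T"], pctfree=10)
catalog["T"] = new_blocks

print(len(old_blocks), len(catalog["T"]))  # 6 3
```

Because every row lands at a new physical address, a moved table's rows get new rowids in Oracle, which is why indexes on a moved table typically have to be rebuilt afterwards.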

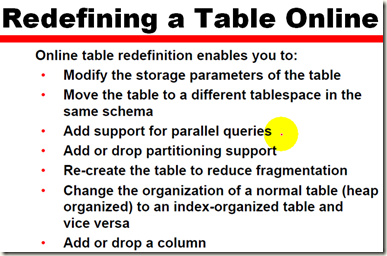

In practice this is done with a PL/SQL package (described in the Oracle Database 11g Administrator's Guide, an official Oracle document).

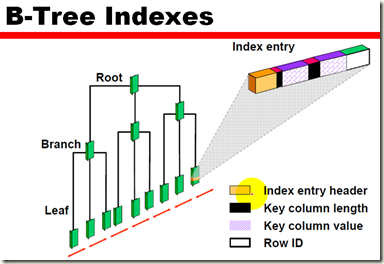

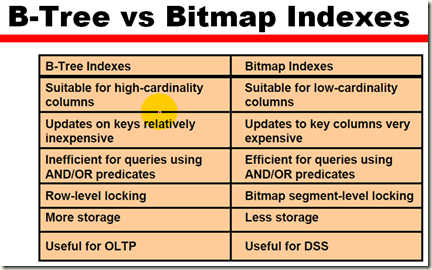

A B-Tree is a balanced tree: all leaf nodes are at the same level.
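The balanced property can be seen in a small B+-tree-style sketch. Assumptions: index blocks are modelled as Python nodes holding at most `FANOUT` = 4 entries (far fewer than a real block), and the hypothetical `build` loads the tree bottom-up from sorted keys, similar in spirit to building an index from sorted data. Every leaf is created on the same level, so every lookup takes the same number of steps from root to leaf.

```python
from bisect import bisect_right

FANOUT = 4  # assumed max entries per node; real Oracle index blocks hold far more

class Node:
    def __init__(self, keys, children=None):
        self.keys = keys          # leaf: indexed keys; branch: smallest key of each child
        self.children = children  # None marks a leaf node

def build(sorted_keys):
    """Bulk-load bottom-up from sorted keys: all leaves are created on one level."""
    level = [Node(sorted_keys[i:i + FANOUT])
             for i in range(0, len(sorted_keys), FANOUT)]
    while len(level) > 1:  # add branch levels until a single root remains
        level = [Node([child.keys[0] for child in level[i:i + FANOUT]], level[i:i + FANOUT])
                 for i in range(0, len(level), FANOUT)]
    return level[0]

def search(node, key):
    """Descend from root to leaf; return (found, number_of_levels_descended)."""
    depth = 0
    while node.children is not None:
        # follow the last child whose smallest key is <= key
        i = max(bisect_right(node.keys, key) - 1, 0)
        node = node.children[i]
        depth += 1
    return key in node.keys, depth

def leaf_depths(node, depth=0):
    """Collect the depth of every leaf; a balanced tree yields a single value."""
    if node.children is None:
        return {depth}
    return set().union(*(leaf_depths(child, depth + 1) for child in node.children))

root = build(list(range(1, 101)))
print(leaf_depths(root))  # {3}
print(search(root, 57))   # (True, 3)
```

Adding more keys only adds levels at the top (a new root), never deepens a single branch, which keeps the cost of a lookup predictable.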

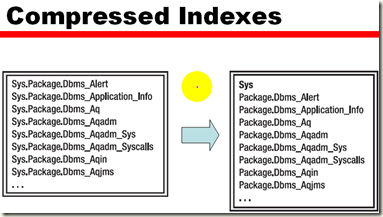

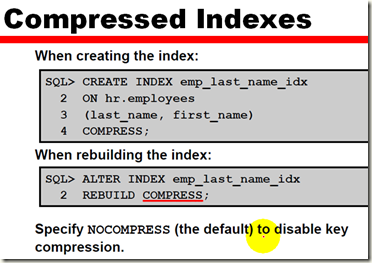

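Take incrementing numbers such as 1234, 1235, 1236: the leading part is identical, so could we store "123" once and store only the remaining part of each value separately?

In other words, this technique targets index values whose leading part is identical.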
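A Python sketch of the prefix idea. Note that Oracle's index key compression (`COMPRESS n`) works on whole leading columns of a composite key rather than on digits inside a single value, so the sketch uses (department number, employee number) keys, which are made-up sample data. The hypothetical `compress_block` takes the sorted entries of one leaf block, stores each distinct prefix (the first `prefix_len` columns) once, and keeps only a pointer to that prefix plus the remaining columns for each entry.

```python
from itertools import groupby

def compress_block(entries, prefix_len):
    """Prefix-compress one leaf block of sorted composite keys.

    Returns (prefixes, suffixes): each distinct prefix is stored once, and each
    entry keeps only (index of its prefix, remaining columns).
    """
    prefixes, suffixes = [], []
    for prefix, group in groupby(entries, key=lambda entry: entry[:prefix_len]):
        prefixes.append(prefix)
        for entry in group:
            suffixes.append((len(prefixes) - 1, entry[prefix_len:]))
    return prefixes, suffixes

def decompress_block(prefixes, suffixes):
    """Rebuild the full keys by re-attaching each suffix to its prefix."""
    return [prefixes[i] + suffix for i, suffix in suffixes]

def stored_values(prefixes, suffixes):
    """Count column values physically kept (pointers to prefixes not counted)."""
    return sum(len(p) for p in prefixes) + sum(len(s) for _, s in suffixes)

# Hypothetical sorted (dept_no, emp_no) keys as they might appear in one leaf block.
block = [(10, 7782), (10, 7839), (10, 7934), (20, 7369), (20, 7566), (30, 7499)]

prefixes, suffixes = compress_block(block, prefix_len=1)
print(prefixes)                          # [(10,), (20,), (30,)]
print(stored_values(prefixes, suffixes)) # 9 (vs. 12 values uncompressed)
```

The saving grows with the number of entries that share a prefix, which is why compression pays off mainly on leading columns with many repeated values.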

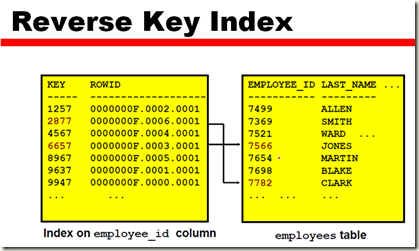

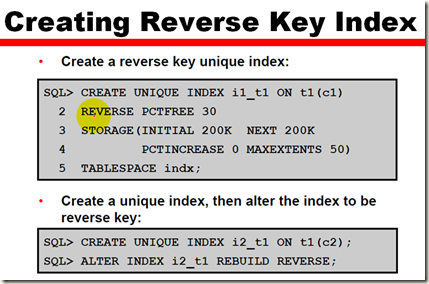

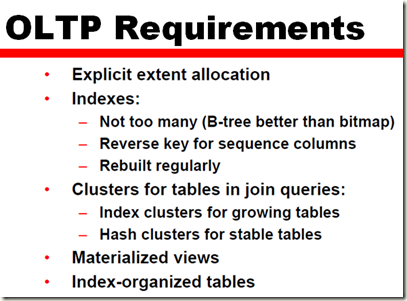

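Reverse key index: each key is stored reversed, e.g. 7566 becomes 6657 and 7782 becomes 2877. (Oracle actually reverses the bytes of each key column; reversing the decimal digits illustrates the idea.)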

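Why reverse the keys? Keys are usually generated in ascending order, so in the figure above 7499 through 7782 may all sit in a single index block. If three sessions want to change the entries 7499, 7566, and 7782 at the same time, they all need that one block, and only one session can modify it at a time, so two of them have to wait. After reversal, these neighboring keys are scattered across several blocks, which can then be worked on concurrently.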
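A small Python model shows the effect. Assumptions: leaf blocks are modelled by sorting the stored keys and cutting them into blocks of 10 entries, the keys are the monotonically increasing IDs 1000 to 1099, and reversing decimal digits stands in for Oracle's byte reversal; `leaf_blocks`, `block_of`, and `reverse_key` are hypothetical helpers. The three newest keys, where concurrent inserts concentrate, all land in the last block of a normal index but in three different blocks of the reverse key index.

```python
def leaf_blocks(stored_keys, per_block):
    """Model leaf blocks: keys in index order, cut into blocks of per_block entries."""
    ordered = sorted(stored_keys)
    return [ordered[i:i + per_block] for i in range(0, len(ordered), per_block)]

def block_of(blocks, stored_key):
    """Return the number of the leaf block that holds stored_key."""
    for number, block in enumerate(blocks):
        if stored_key in block:
            return number
    raise KeyError(stored_key)

def reverse_key(key):
    """Digit reversal, standing in for Oracle's byte reversal (assumption)."""
    return str(key)[::-1]

keys = range(1000, 1100)   # assumed monotonically increasing IDs, e.g. from a sequence
hot = [1097, 1098, 1099]   # the newest keys, where concurrent inserts concentrate

normal_blocks = leaf_blocks([str(k) for k in keys], per_block=10)
reverse_blocks = leaf_blocks([reverse_key(k) for k in keys], per_block=10)

print([block_of(normal_blocks, str(k)) for k in hot])           # [9, 9, 9]
print([block_of(reverse_blocks, reverse_key(k)) for k in hot])  # [7, 8, 9]
```

The same model also shows the cost: consecutive keys no longer sit next to each other, so a range predicate such as `BETWEEN 1097 AND 1099` cannot be answered by scanning one contiguous stretch of a reverse key index.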

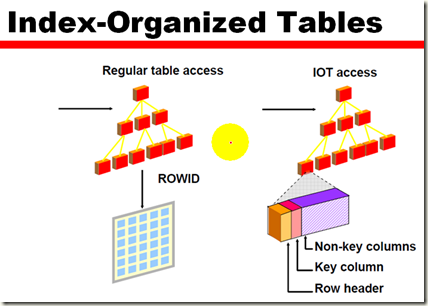

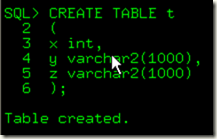

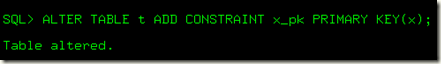

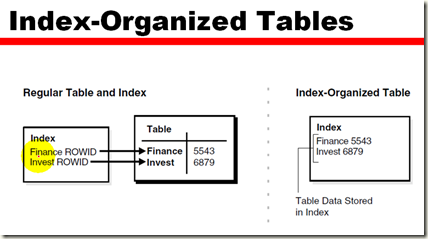

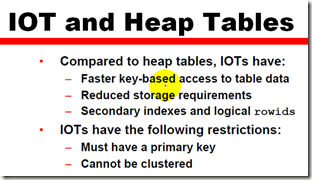

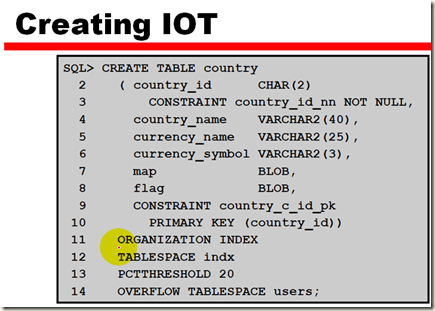

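Why create an index-organized table? In the example above, creating an index on x is fine. But if we also create indexes on y and z, that is no longer worthwhile, because the extra indexes add a lot of disk I/O. In this situation, consider an index-organized table (IOT) instead.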

An IOT must have a primary key: an IOT is organized by its primary key.
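A Python sketch contrasting the two layouts. The classes and sample rows are hypothetical, and "structures visited" is only a crude stand-in for I/O: a heap table plus a separate primary-key index needs an index probe followed by a table access through the rowid, while an IOT keeps the whole row inside the primary-key-ordered structure, so one probe suffices and no rowid is involved. The IOT class rejects rows without a primary key, mirroring the rule above.

```python
from bisect import bisect_left, insort

class HeapTableWithPkIndex:
    """Heap table plus a separate primary-key index that points at rows by 'rowid'."""

    def __init__(self):
        self.rows = []   # heap: rows appended in arrival order; list position acts as the rowid
        self.index = []  # sorted (pk, rowid) pairs

    def insert(self, pk, row):
        self.rows.append(row)
        insort(self.index, (pk, len(self.rows) - 1))

    def get(self, pk):
        """Return (row, structures_visited): index probe, then table access by rowid."""
        i = bisect_left(self.index, (pk,))
        if i < len(self.index) and self.index[i][0] == pk:
            return self.rows[self.index[i][1]], 2
        return None, 1

class IndexOrganizedTable:
    """Rows live inside the primary-key-ordered structure itself; no rowid exists."""

    def __init__(self):
        self.entries = []  # sorted (pk, row) pairs: the index is the table

    def insert(self, pk, row):
        if pk is None:
            raise ValueError("an IOT requires a primary key")
        i = bisect_left(self.entries, (pk,))
        if i < len(self.entries) and self.entries[i][0] == pk:
            raise ValueError(f"duplicate primary key {pk}")
        self.entries.insert(i, (pk, row))

    def get(self, pk):
        """Return (row, structures_visited): a single probe finds the whole row."""
        i = bisect_left(self.entries, (pk,))
        if i < len(self.entries) and self.entries[i][0] == pk:
            return self.entries[i][1], 1
        return None, 1

heap, iot = HeapTableWithPkIndex(), IndexOrganizedTable()
for pk, row in [(7566, ("JONES", "MANAGER")), (7369, ("SMITH", "CLERK")),
                (7499, ("ALLEN", "SALESMAN"))]:
    heap.insert(pk, row)
    iot.insert(pk, row)

print(heap.get(7566))  # (('JONES', 'MANAGER'), 2)
print(iot.get(7566))   # (('JONES', 'MANAGER'), 1)
```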

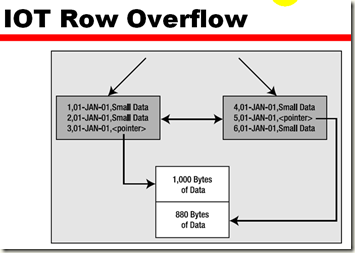

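OVERFLOW means moving some of the table's infrequently used columns elsewhere: they are stored in a separate overflow segment instead of physically alongside the rest of the row.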

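PCTTHRESHOLD 20 sets the largest share of a leaf block (here 20%) that a single row may occupy in the index. If a row is larger than that, the columns that do not fit are stored in the overflow segment.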
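A simplified Python sketch of how PCTTHRESHOLD decides what stays in the leaf block. Assumptions: an 8 KB block, column sizes given directly in bytes, and no block or row overhead; `split_row` and the sample columns are hypothetical. Primary key columns always stay in the index; the remaining columns are kept in table order until the next one would push the row past the threshold, and that column and everything after it go to the overflow segment.

```python
BLOCK_SIZE = 8192  # assumed block size in bytes

def split_row(columns, pk_count, pctthreshold):
    """Split one row into (leaf part, overflow part).

    columns  -- list of (name, size_in_bytes) in table order (simplified: no overhead)
    pk_count -- number of leading columns forming the primary key
    """
    limit = BLOCK_SIZE * pctthreshold // 100
    head = list(columns[:pk_count])           # key columns always stay in the index block
    used = sum(size for _, size in head)
    rest = columns[pk_count:]
    for i, (name, size) in enumerate(rest):
        if used + size > limit:
            return head, list(rest[i:])       # this column and all later ones overflow
        head.append((name, size))
        used += size
    return head, []

row = [("id", 8), ("name", 40), ("status", 10), ("notes", 3000)]  # hypothetical columns
in_leaf, overflow = split_row(row, pk_count=1, pctthreshold=20)
print([n for n, _ in in_leaf])   # ['id', 'name', 'status']
print([n for n, _ in overflow])  # ['notes']
```

With a 20% threshold of an 8192-byte block, the limit is 1638 bytes, so the 3000-byte `notes` column is pushed out while the short, frequently used columns stay in the leaf block.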

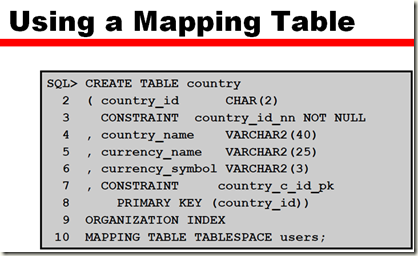

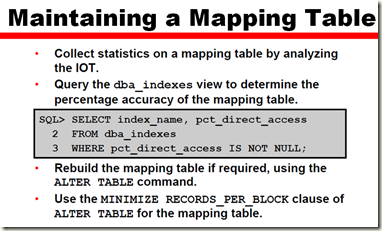

Rows in an IOT have no physical rowid; they are located by their primary key.

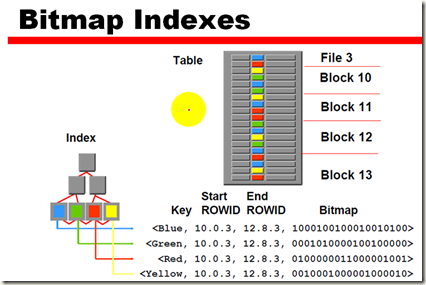

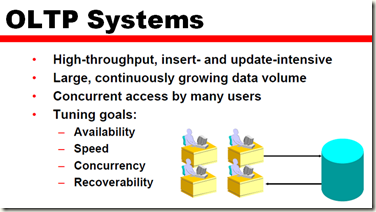

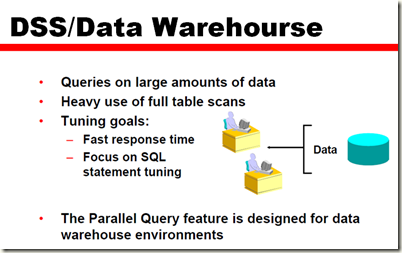

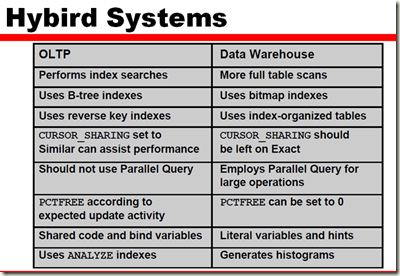

OLAP

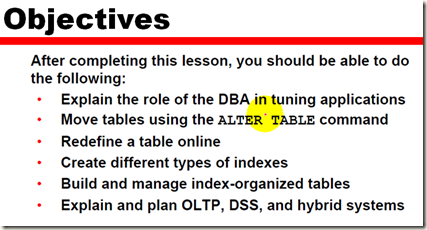

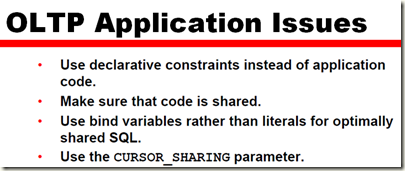

This part focuses mainly on tuning SQL statements.

In practice these two systems are usually kept separate, unless the budget does not allow it.

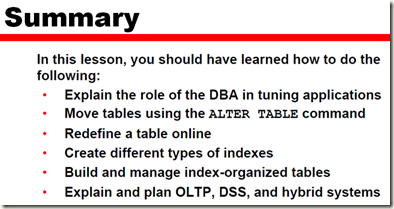

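This article covers Oracle database optimization techniques, including table move operations, B-Tree index optimization, reverse key indexes, and the characteristics of index-organized tables (IOT). Practical examples explain how these techniques improve query efficiency.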
