1006. Square frames

Memory Limit: 16 MB

Input

Output

X1 Y1 A1

…

Xk Yk Ak

Sample

| input | output |

|---|---|

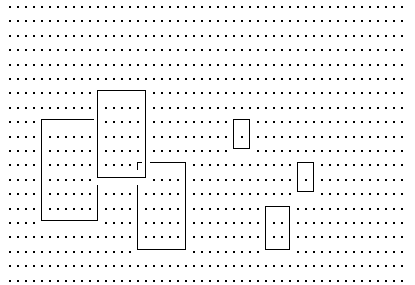

| (see the figure above) | 6 16 11 7 32 14 4 4 8 8 11 6 7 36 11 3 28 8 3 |

The solution is as follows:

2 using System.IO;

3 using System.Text;

4 using System.Collections.Generic;

5

6 namespace Skyiv.Ben.Timus

7 {

8 // http://acm.timus.ru/problem.aspx?space=1 &num=1006

9 sealed class T1006

10 {

11 struct Frame

12 {

13 static readonly char Marked = ' * ' ;

14 static readonly char Background = ' . ' ;

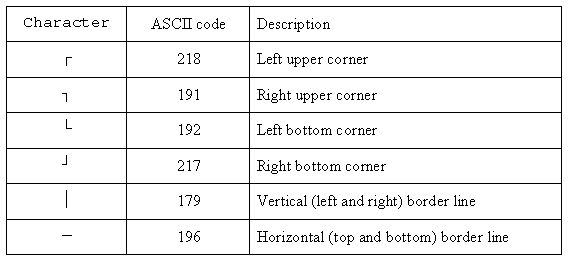

15 static readonly char Vertical = ( char ) 179 ;

16 static readonly char Horizontal = ( char ) 196 ;

17 static readonly char LeftUpper = ( char ) 218 ;

18 static readonly char RightUpper = ( char ) 191 ;

19 static readonly char LeftBottom = ( char ) 192 ;

20 static readonly char RightBottom = ( char ) 217 ;

21

22 public static readonly char [] Uppers = new char [] { LeftUpper, RightUpper };

23 public static readonly char [] Lefts = new char [] { LeftUpper, LeftBottom };

24

25 int row, col, len;

26

27 Frame( int row, int col, int len)

28 {

29 this .row = row;

30 this .col = col;

31 this .len = len;

32 }

33

34 public static Frame GetValue( char c, int row, int col, int len)

35 {

36 if (c == LeftUpper) return new Frame(row, col, len);

37 if (c == RightUpper) return new Frame(row, col - len, len);

38 if (c == LeftBottom) return new Frame(row - len, col, len);

39 if (c == RightBottom) return new Frame(row - len, col - len, len);

40 throw new ArgumentOutOfRangeException( " c " );

41 }

42

43 public override string ToString()

44 {

45 return col + " " + row + " " + (len + 1 );

46 }

47

48 public static bool IsCorner( char c)

49 {

50 return c == LeftUpper || c == RightUpper || c == LeftBottom || c == RightBottom;

51 }

52

53 public static bool IsHorizontal( char c)

54 {

55 return c == Horizontal;

56 }

57

58 public static bool IsVertical( char c)

59 {

60 return c == Vertical;

61 }

62

63 public static bool IsBackground( char c)

64 {

65 return c == Background;

66 }

67

68 public bool InScreen( char [,] screen)

69 {

70 return row >= 0 && row + len < screen.GetLength( 0 )

71 && col >= 0 && col + len < screen.GetLength( 1 );

72 }

73

74 public bool Search(Stack < Frame > stack, char [,] screen)

75 {

76 if ( ! IsFrame(screen)) return false ;

77 Mark(screen);

78 stack.Push( this );

79 return true ;

80 }

81

82 bool IsFrame( char [,] screen)

83 {

84 for ( int i = 1 ; i < len; i ++ )

85 {

86 if ( ! IsBorderLine(Vertical, screen, i, 0 )) return false ;

87 if ( ! IsBorderLine(Vertical, screen, i, len)) return false ;

88 if ( ! IsBorderLine(Horizontal, screen, 0 , i)) return false ;

89 if ( ! IsBorderLine(Horizontal, screen, len, i)) return false ;

90 }

91 if ( ! IsBorderLine(LeftUpper, screen, 0 , 0 )) return false ;

92 if ( ! IsBorderLine(RightUpper, screen, 0 , len)) return false ;

93 if ( ! IsBorderLine(LeftBottom, screen, len, 0 )) return false ;

94 if ( ! IsBorderLine(RightBottom, screen, len, len)) return false ;

95 return true ;

96 }

97

98 bool IsBorderLine( char c, char [,] screen, int dy, int dx)

99 {

100 char ch = screen[row + dy, col + dx];

101 return ch == Marked || ch == c;

102 }

103

104 void Mark( char [,] screen)

105 {

106 for ( int i = 0 ; i <= len; i ++ )

107 screen[row + i, col] = screen[row + i, col + len] =

108 screen[row, col + i] = screen[row + len, col + i] = Marked;

109 }

110 }

111

112 static void Main()

113 {

114 using (TextReader reader = new StreamReader(

115 Console.OpenStandardInput(), Encoding.GetEncoding( " iso-8859-1 " )))

116 {

117 new T1006().Run(reader, Console.Out);

118 }

119 }

120

121 void Run(TextReader reader, TextWriter writer)

122 {

123 char [,] screen = Read(reader);

124 Stack < Frame > stack = new Stack < Frame > ();

125 SearchCorner(stack, screen);

126 SearchSide(stack, screen);

127 writer.WriteLine(stack.Count);

128 foreach (Frame frame in stack) writer.WriteLine(frame);

129 }

130

131 char [,] Read(TextReader reader)

132 {

133 List < string > list = new List < string > ();

134 for ( string s; (s = reader.ReadLine()) != null ; ) list.Add(s);

135 char [,] v = new char [list.Count, list[ 0 ].Length];

136 for ( int i = 0 ; i < list.Count; i ++ )

137 for ( int j = 0 ; j < list[i].Length; j ++ )

138 v[i, j] = list[i][j];

139 return v;

140 }

141

142 void SearchCorner(Stack < Frame > stack, char [,] screen)

143 {

144 begin:

145 for ( int i = 0 ; i < screen.GetLength( 0 ); i ++ )

146 for ( int j = 0 ; j < screen.GetLength( 1 ); j ++ )

147 {

148 if ( ! Frame.IsCorner(screen[i, j])) continue ;

149 for ( int len = 1 ; ; len ++ )

150 {

151 Frame frame = Frame.GetValue(screen[i, j], i, j, len);

152 if ( ! frame.InScreen(screen)) break ;

153 if (frame.Search(stack, screen)) goto begin;

154 }

155 }

156 }

157

158 void SearchSide(Stack < Frame > stack, char [,] screen)

159 {

160 begin:

161 for ( int i = 0 ; i < screen.GetLength( 0 ); i ++ )

162 for ( int j = 0 ; j < screen.GetLength( 1 ); j ++ )

163 if (SearchVertical(stack, screen, i, j)) goto begin;

164 else if (SearchHorizontal(stack, screen, i, j)) goto begin;

165 }

166

167 bool SearchVertical(Stack < Frame > stack, char [,] screen, int row, int col)

168 {

169 if ( ! Frame.IsVertical(screen[row, col])) return false ;

170 for ( int k = row - 1 ; k >= 0 ; k -- )

171 {

172 if (Frame.IsBackground(screen[k, col]) || Frame.IsHorizontal(screen[k, col])) break ;

173 foreach ( char c in Frame.Uppers)

174 for ( int len = row - k + 1 ; ; len ++ )

175 {

176 Frame frame = Frame.GetValue(c, k, col, len);

177 if ( ! frame.InScreen(screen)) break ;

178 if (frame.Search(stack, screen)) return true ;

179 }

180 }

181 return false ;

182 }

183

184 bool SearchHorizontal(Stack < Frame > stack, char [,] screen, int row, int col)

185 {

186 if ( ! Frame.IsHorizontal(screen[row, col])) return false ;

187 for ( int k = col - 1 ; k >= 0 ; k -- )

188 {

189 if (Frame.IsBackground(screen[row, k]) || Frame.IsVertical(screen[row, k])) break ;

190 foreach ( char c in Frame.Lefts)

191 for ( int len = col - k + 1 ; ; len ++ )

192 {

193 Frame frame = Frame.GetValue(c, row, k, len);

194 if ( ! frame.InScreen(screen)) break ;

195 if (frame.Search(stack, screen)) return true ;

196 }

197 }

198 return false ;

199 }

200 }

201 }

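This problem is rather interesting. The screen shows a picture drawn with a number of square frames. The frames may cover one another, but none of them extends beyond the edge of the screen. You are asked to give a sequence of frames that, drawn in that order, produces the picture.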
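To make the model concrete, the following sketch (not part of the original solution) draws frames onto a `'.'` background in the order given, so a later frame overwrites an earlier one wherever their borders cross. The class `FrameDrawing`, the method `Draw`, and the `(row, col, len)` tuples are hypothetical; `len` is the distance between opposite corners, as in the solution's `Frame` struct, so each side is `len + 1` characters long.

```csharp
using System;

static class FrameDrawing
{
    // Same character codes as the solution's Frame struct (code page 437 box drawing).
    const char Vertical = (char)179, Horizontal = (char)196;
    const char LeftUpper = (char)218, RightUpper = (char)191;
    const char LeftBottom = (char)192, RightBottom = (char)217;

    // Draws the frames in order on a '.' background; later frames overwrite earlier ones.
    // Each frame is (row, col, len) with (row, col) as its upper-left corner.
    public static char[,] Draw(int rows, int cols, params (int Row, int Col, int Len)[] frames)
    {
        var screen = new char[rows, cols];
        for (int i = 0; i < rows; i++)
            for (int j = 0; j < cols; j++)
                screen[i, j] = '.';
        foreach (var (r, c, n) in frames)
        {
            for (int i = 1; i < n; i++)
            {
                screen[r + i, c] = screen[r + i, c + n] = Vertical;
                screen[r, c + i] = screen[r + n, c + i] = Horizontal;
            }
            screen[r, c] = LeftUpper;
            screen[r, c + n] = RightUpper;
            screen[r + n, c] = LeftBottom;
            screen[r + n, c + n] = RightBottom;
        }
        return screen;
    }
}
```

For example, `Draw(6, 10, (0, 0, 3), (2, 2, 3))` draws a 4 by 4 frame and then a second one overlapping it; the cell at row 2, column 3, which was on the first frame's right side, ends up showing the second frame's top edge.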

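The program's entry point, the Main method, is on lines 112 to 119. Note that lines 114 to 115 set the encoding of the input stream to iso-8859-1. This is because the problem draws the frame lines with byte values whose high bit is set (the code page 437 box-drawing characters 179, 191, 192, 196, 217 and 218). ISO-8859-1 maps every byte to the char with the same code, so these bytes compare equal to the constants on lines 15 to 20. With the default UTF-8 encoding, such bytes are not valid characters and the input cannot be read correctly.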
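The difference can be seen with a single byte, 179, the vertical line in code page 437. The helper `EncodingDemo.DecodeByte` below is hypothetical; it only decodes one byte with the encoding it is given. A lone byte above 127 is not valid UTF-8, and .NET's default UTF-8 decoder replaces it with U+FFFD, while ISO-8859-1 returns the char with the same code.

```csharp
using System;
using System.Text;

static class EncodingDemo
{
    // Decodes one byte with the given encoding and returns the resulting char.
    public static char DecodeByte(Encoding encoding, byte b)
    {
        string s = encoding.GetString(new[] { b });
        return s[0];
    }
}
```

So `DecodeByte(Encoding.GetEncoding("iso-8859-1"), 179)` yields `(char)179`, which matches `Vertical` on line 15, whereas `DecodeByte(Encoding.UTF8, 179)` yields `'\uFFFD'`, which matches nothing.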

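The Run method on lines 121 to 129 does the actual work. Line 123 first calls the Read method on lines 131 to 140 to read the input (note that Read accepts a rectangular screen of any size). Line 124 then allocates a stack, stack, to hold the frames found, and lines 125 to 126 call SearchCorner and then SearchSide to search for frames. Finally, lines 127 to 128 print the results held in stack.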
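Printing the stack directly gives a valid drawing order because the searches always find an uncovered frame first, so the frame drawn last is pushed first. `Stack<T>` enumerates its elements in pop order, most recently pushed first, so the `foreach` on line 128 prints first the frame found last, which lies at the bottom of the picture and must be drawn first. The hypothetical helper below shows that ordering with strings standing in for frames.

```csharp
using System;
using System.Collections.Generic;

static class StackOrderDemo
{
    // Pushes items in the order they were found and returns them in the order
    // a foreach over the Stack<T> visits them (most recently pushed first).
    public static List<T> EnumerationOrder<T>(IEnumerable<T> foundOrder)
    {
        var stack = new Stack<T>();
        foreach (T item in foundOrder) stack.Push(item);
        var result = new List<T>();
        foreach (T item in stack) result.Add(item);
        return result;
    }
}
```

Pushing `"top"`, `"middle"`, `"bottom"` in that order and enumerating the stack yields `"bottom"`, `"middle"`, `"top"`.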

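The SearchCorner method on lines 142 to 156 searches the whole screen starting from the four corners of a frame. Line 148 skips the current cell if it is not one of the four corner characters. The loop on lines 149 to 154 builds candidate frames (line 151) with len, the distance between opposite corners, increasing from 1 until the frame no longer fits on the screen (line 152). Line 153 calls Search; if it finds and marks a frame, control jumps back to line 144 and the search starts over. This does not cause an infinite loop, because a frame that has been found is already marked and will not be found again. A goto statement is used here because it is the clearest and most natural way to express the restart. Eliminating it would require extra Boolean variables and more convoluted control flow, which would be harder to follow than the goto.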
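The candidates that lines 149 to 152 try from one corner can be listed with a small sketch. `CornerCandidates.From` is a hypothetical helper: it combines what `Frame.GetValue` does (shift the given corner to the upper-left corner, which is how a frame is stored) with `Frame.InScreen`, and stops at the first `len` that does not fit, as line 152 does.

```csharp
using System;
using System.Collections.Generic;

static class CornerCandidates
{
    // Corner codes as in the solution (code page 437 box drawing).
    const char LeftUpper = (char)218, RightUpper = (char)191;
    const char LeftBottom = (char)192, RightBottom = (char)217;

    // Candidate frames (row, col, len) whose given corner sits at (row, col),
    // for len = 1, 2, ... until the frame no longer fits on a rows x cols screen.
    public static List<(int Row, int Col, int Len)> From(char corner, int row, int col, int rows, int cols)
    {
        if (corner != LeftUpper && corner != RightUpper && corner != LeftBottom && corner != RightBottom)
            throw new ArgumentOutOfRangeException(nameof(corner));
        bool onRight = corner == RightUpper || corner == RightBottom;
        bool onBottom = corner == LeftBottom || corner == RightBottom;
        var result = new List<(int Row, int Col, int Len)>();
        for (int len = 1; ; len++)
        {
            // Shift to the upper-left corner, as Frame.GetValue does.
            int top = onBottom ? row - len : row;
            int left = onRight ? col - len : col;
            // Same bounds test as Frame.InScreen.
            if (top < 0 || top + len >= rows || left < 0 || left + len >= cols) break;
            result.Add((top, left, len));
        }
        return result;
    }
}
```

For a right-upper corner at row 0, column 5 of a screen with 4 rows and 10 columns, this yields (0, 4, 1), (0, 3, 2) and (0, 2, 3); len 4 would reach row 4, outside the screen.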

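The Search method on lines 74 to 80 first calls IsFrame on line 76 to check whether a square frame can be formed. If so, it calls Mark on line 77 to mark the frame and then pushes the frame onto the stack (line 78).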

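The IsFrame method on lines 82 to 96 first checks on lines 84 to 90 whether the frame's sides are as required, and then on lines 91 to 94 whether its four corners are. Both checks call the IsBorderLine method on lines 98 to 102. A position that has already been marked also passes, because the mark shows that this position was covered by another frame.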
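A stand-alone version of the check on lines 84 to 101 makes the role of the mark explicit. `FrameCheck` and `Fits` are hypothetical names; `Fits` plays the part of `IsBorderLine`, treating `'*'` as a wildcard.

```csharp
using System;

static class FrameCheck
{
    const char Marked = '*';
    const char Vertical = (char)179, Horizontal = (char)196;
    const char LeftUpper = (char)218, RightUpper = (char)191;
    const char LeftBottom = (char)192, RightBottom = (char)217;

    // A cell fits if it shows the expected character or has already been marked,
    // i.e. it was covered by a frame that has been peeled off.
    static bool Fits(char[,] screen, int r, int c, char expected) =>
        screen[r, c] == expected || screen[r, c] == Marked;

    // Same order as IsFrame in the solution: the sides first, then the four corners.
    public static bool IsFrame(char[,] screen, int row, int col, int len)
    {
        for (int i = 1; i < len; i++)
            if (!Fits(screen, row + i, col, Vertical) || !Fits(screen, row + i, col + len, Vertical)
                || !Fits(screen, row, col + i, Horizontal) || !Fits(screen, row + len, col + i, Horizontal))
                return false;
        return Fits(screen, row, col, LeftUpper) && Fits(screen, row, col + len, RightUpper)
            && Fits(screen, row + len, col, LeftBottom) && Fits(screen, row + len, col + len, RightBottom);
    }
}
```

A 3 by 3 frame (len 2) still passes after one side cell is replaced by `'*'`, but fails if that cell shows a horizontal line instead.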

The Mark method on lines 104 to 109 marks a frame that has been found, so that later searches do not find the same frame again and loop forever.
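The mark is also what guarantees that the goto restarts terminate. Every search starts from a cell that is still unmarked (a corner character in SearchCorner, a vertical or horizontal line in SearchVertical and SearchHorizontal) and that lies on the candidate frame, so each successful Search turns at least one unmarked cell into `'*'`; since the screen is finite, the restarts must end. The sketch below (hypothetical `FrameMark.Mark`) reproduces lines 104 to 109. The writes for i = 0 and i = len cover the corners, so a frame with corner distance len marks exactly 4 × len cells.

```csharp
using System;

static class FrameMark
{
    // Overwrites all four sides of the frame, corners included, with '*'.
    public static void Mark(char[,] screen, int row, int col, int len)
    {
        for (int i = 0; i <= len; i++)
            screen[row + i, col] = screen[row + i, col + len] =
                screen[row, col + i] = screen[row + len, col + i] = '*';
    }
}
```

Marking the frame (0, 0, 3) on a 4 by 4 screen marks its 12 border cells and leaves the four interior cells unchanged.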

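The SearchSide method on lines 158 to 165 searches the whole screen starting from the sides of frames. Lines 163 to 164 call SearchVertical and SearchHorizontal respectively; if either finds and marks a frame, control jumps back to line 160 and the search starts over. This pass catches frames that SearchCorner could not reach, such as a frame whose corners have all been overwritten by other frames' lines.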

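The SearchVertical method on lines 167 to 182 starts from a vertical line of a frame (line 169). From line 170 it walks upward from the current position toward the top of the screen. If it meets a horizontal line or the background, it stops (line 172), because such a frame must be covered by another frame; it cannot be pushed onto the stack in this search and has to be handled later. Then, from line 173, it tries to build frames from the two upper corners (line 176), with len starting at row - k + 1 and increasing (line 174) until the frame no longer fits on the screen (line 177). Line 178 calls Search to check each candidate.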
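The candidates SearchVertical tries for one vertical-line cell can be listed by a sketch that follows lines 170 to 179 but records each candidate instead of calling Search. `VerticalCandidates.Enumerate` is a hypothetical name. Starting len at row - k + 1 makes the cell at (row, col) lie strictly inside the left or right side of every candidate.

```csharp
using System;
using System.Collections.Generic;

static class VerticalCandidates
{
    const char Background = '.';
    const char Horizontal = (char)196;

    // Candidate frames (row, col, len), in the order SearchVertical tries them,
    // for the vertical-line cell at (row, col).
    public static List<(int Row, int Col, int Len)> Enumerate(char[,] screen, int row, int col)
    {
        var result = new List<(int Row, int Col, int Len)>();
        int rows = screen.GetLength(0), cols = screen.GetLength(1);
        for (int k = row - 1; k >= 0; k--)
        {
            // The side is interrupted here: stop walking upward.
            if (screen[k, col] == Background || screen[k, col] == Horizontal) break;
            // true: (k, col) as a left-upper corner; false: as a right-upper corner.
            foreach (bool leftSide in new[] { true, false })
                for (int len = row - k + 1; ; len++)
                {
                    int left = leftSide ? col : col - len;
                    if (k + len >= rows || left < 0 || left + len >= cols) break;
                    result.Add((k, left, len));
                }
        }
        return result;
    }
}
```

On a screen with 4 rows and 5 columns whose column 2 holds a left-upper corner in row 0 and vertical lines in rows 1 and 2 (everything else background), the cell at row 2 yields (1, 2, 2) and (1, 0, 2); from row 0 no frame of len 3 fits. If row 1 of that column is background instead, the walk stops at once and nothing is tried.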

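The SearchHorizontal method on lines 184 to 199 starts from a horizontal line of a frame. It works the same way as SearchVertical, walking left instead of up (line 187) and trying the two left corners (Frame.Lefts, line 190) instead of the two upper ones.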
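Putting the pieces together, the following sketch peels frames off a picture using the same `'*'` convention. It is a brute-force variant, not the author's method: instead of starting from corners and then sides, it tries every (row, col, len) on the screen and accepts a candidate whose border cells all show the expected character or `'*'`, at least one of them still unmarked (so a frame already peeled is not found again). It restarts the scan with a `found` flag where the solution uses goto, and it does more work per pass than the solution. `PeelSketch`, `Peel`, `Border` and `Expected` are hypothetical names.

```csharp
using System;
using System.Collections.Generic;

static class PeelSketch
{
    const char Marked = '*';
    const char Vertical = (char)179, Horizontal = (char)196;
    const char LeftUpper = (char)218, RightUpper = (char)191;
    const char LeftBottom = (char)192, RightBottom = (char)217;

    // Character that a frame with corner distance len draws at border offset (dy, dx).
    static char Expected(int dy, int dx, int len)
    {
        if (dy == 0) return dx == 0 ? LeftUpper : dx == len ? RightUpper : Horizontal;
        if (dy == len) return dx == 0 ? LeftBottom : dx == len ? RightBottom : Horizontal;
        return Vertical; // remaining border cells have dx == 0 or dx == len
    }

    // Every border offset of a frame, each cell exactly once.
    static IEnumerable<(int Dy, int Dx)> Border(int len)
    {
        for (int i = 0; i <= len; i++) { yield return (0, i); yield return (len, i); }
        for (int i = 1; i < len; i++) { yield return (i, 0); yield return (i, len); }
    }

    // Peels frames off the picture, topmost first, and returns them in drawing order.
    // The screen is modified: peeled frames are overwritten with '*'.
    public static List<(int Row, int Col, int Len)> Peel(char[,] screen)
    {
        int rows = screen.GetLength(0), cols = screen.GetLength(1);
        var stack = new Stack<(int Row, int Col, int Len)>();
        bool found = true;
        while (found) // flag-driven restart in place of the solution's goto
        {
            found = false;
            for (int r = 0; r < rows && !found; r++)
                for (int c = 0; c < cols && !found; c++)
                    for (int len = 1; r + len < rows && c + len < cols && !found; len++)
                    {
                        bool fits = true, anyUnmarked = false;
                        foreach (var (dy, dx) in Border(len))
                        {
                            char ch = screen[r + dy, c + dx];
                            if (ch != Marked && ch != Expected(dy, dx, len)) { fits = false; break; }
                            if (ch != Marked) anyUnmarked = true;
                        }
                        // Skip mismatches and frames that have already been peeled.
                        if (!fits || !anyUnmarked) continue;
                        foreach (var (dy, dx) in Border(len)) screen[r + dy, c + dx] = Marked;
                        stack.Push((r, c, len));
                        found = true;
                    }
        }
        // Stack<T> enumerates most recently pushed first, i.e. the bottom frame first.
        return new List<(int Row, int Col, int Len)>(stack);
    }
}
```

On a picture drawn from two overlapping frames, first (0, 0, 3) and then (2, 2, 3), the second frame is peeled first because it is intact; that marks the cells where it covered the first frame, which then matches, and `Peel` returns (0, 0, 3), (2, 2, 3) in drawing order.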


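An analysis of Timus 1006, Square frames: the program finds a sequence of frames that produces the given picture, including pictures in which frames overlap.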
