- 1. 基础环境设置

- 2. 安装 CA 证书和秘钥

- 3. 部署 etcd

- 4. 部署 flannel

- 5. 部署 kubectl 工具,创建 kubeconfig 文件

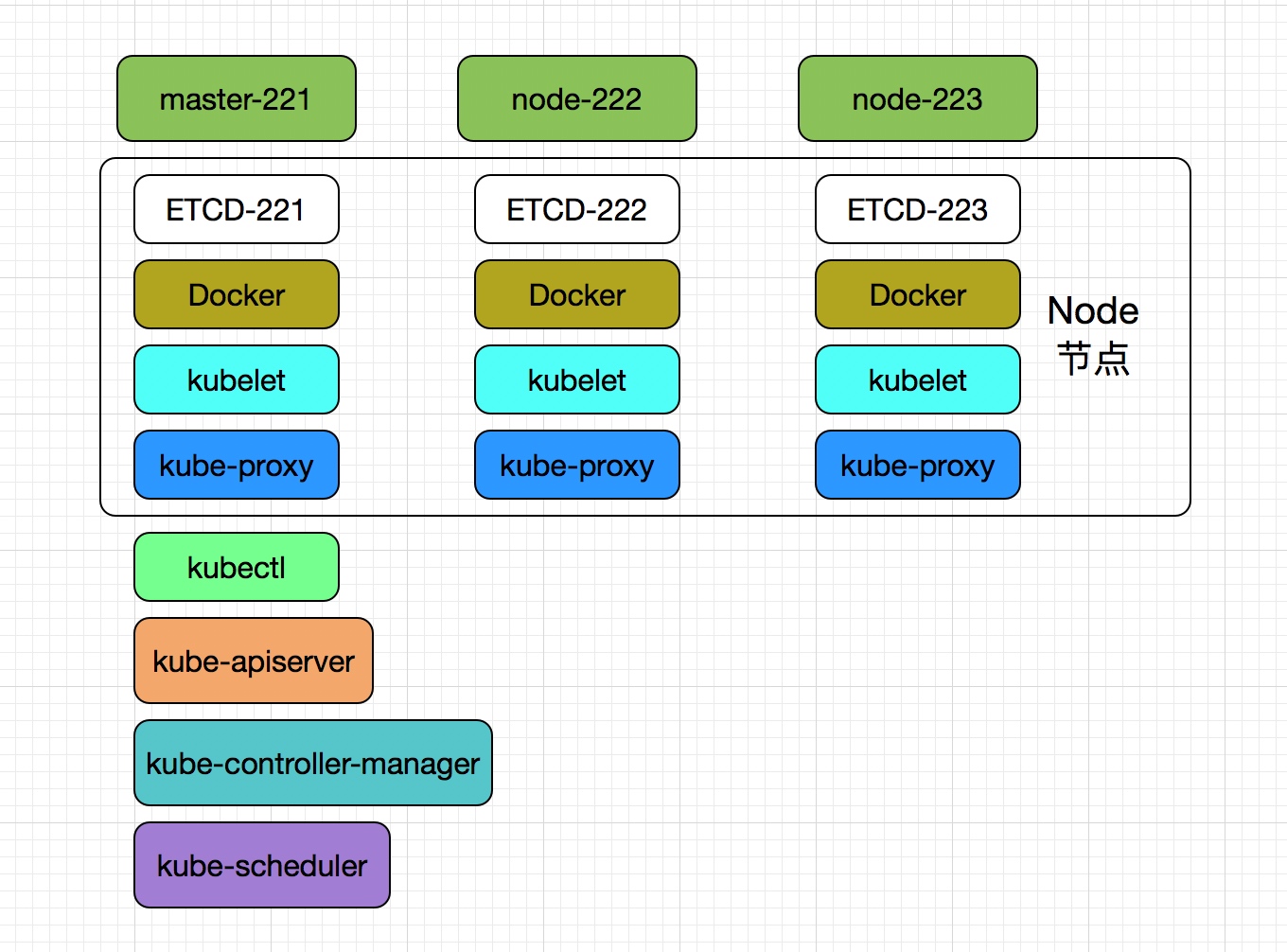

- 6. 部署 master 节点

- 7. 部署 Node 节点

- 8. 安装 DNS 插件

- 9. 部署 dashboard 插件

- 10. 部署 heapster 插件

Excerpted from:

https://blog.youkuaiyun.com/newcrane/article/details/78952987

https://k8s-install.opsnull.com/

https://jimmysong.io/kubernetes-handbook/practice/install-kubernetes-on-centos.html

Kubernetes 1.9 binary cluster + ipvs + coredns

1. Basic environment setup

Images and binaries are available at: https://pan.baidu.com/s/1ypgC8MeYc0SUfZr-IdnHeg (password: bb82)

Software environment:

- CentOS Linux release 7.4.1708 (Core)

- kubernetes 1.8.6 (the download commands in the later sections fetch v1.10.3)

- etcd 3.2.12

- flanneld 0.9.1

- docker 17.12.0-ce

To simplify installation, set up passwordless SSH access from the master to all three machines

ssh-keygen
ssh-copy-id -i node01
ssh-copy-id -i node02
ssh-copy-id -i node03

Set up hosts

for i in {100..102};do ssh 192.168.99.$i "
cat <<END>> /etc/hosts
192.168.99.100 node01 etcd01
192.168.99.101 node02 etcd02
192.168.99.102 node03 etcd03
END";done

Disable the firewall

for i in {100..102};do ssh 192.168.99.$i "systemctl stop firewalld;systemctl disable firewalld";done

Configure kernel parameters

Create the /etc/sysctl.d/k8s.conf file

for i in {100..102};do ssh 192.168.99.$i "
cat << EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
vm.swappiness=0
EOF";done

Load the br_netfilter module

for i in {100..102};do ssh 192.168.99.$i "
modprobe br_netfilter
echo 'modprobe br_netfilter' >> /etc/rc.local";done

Make rc.local executable

for i in {100..102};do ssh 192.168.99.$i "chmod a+x /etc/rc.d/rc.local";done

Run sysctl -p to apply the settings

for i in {100..102};do ssh 192.168.99.$i "
sysctl -p /etc/sysctl.d/k8s.conf";done

Disable SELinux

for i in {100..102};do ssh 192.168.99.$i "
setenforce 0;
sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config";done

Turn off swap

for i in {100..102};do ssh 192.168.99.$i "
sed -i 's/.*swap.*/#&/' /etc/fstab
swapoff -a";done

Set the iptables FORWARD policy to ACCEPT

for i in {100..102};do ssh 192.168.99.$i "
/sbin/iptables -P FORWARD ACCEPT;
echo 'sleep 60 && /sbin/iptables -P FORWARD ACCEPT' >> /etc/rc.local";done

Install the dependency packages

for i in {100..102};do ssh 192.168.99.$i "
yum install -y epel-release;
yum install -y yum-utils device-mapper-persistent-data lvm2 net-tools conntrack-tools wget";done
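Every step above repeats the same `for i in {100..102}` wrapper. As a rough sketch only, that wrapper could be folded into a helper. The names `run_on_nodes`, `NODE_IPS` and `DRY_RUN` are made up for this illustration and are not part of the original guide; with `DRY_RUN=1` nothing is executed remotely, the ssh commands are only printed.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the original guide): run one command on every node.
# NODE_IPS mirrors the 192.168.99.100-102 addresses used throughout this guide.
NODE_IPS="192.168.99.100 192.168.99.101 192.168.99.102"

# With DRY_RUN=1 (the default here) the ssh command is only printed, not executed.
run_on_nodes() {
  local cmd="$1" ip
  for ip in $NODE_IPS; do
    if [ "${DRY_RUN:-1}" = "1" ]; then
      echo "ssh $ip \"$cmd\""
    else
      ssh "$ip" "$cmd"
    fi
  done
}

# Preview the firewall step without touching any machine.
run_on_nodes "systemctl stop firewalld; systemctl disable firewalld"
```

Setting `DRY_RUN=0` would run the command for real, assuming the passwordless SSH access configured at the start of this section.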

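All software and related configuration files: https://pan.baidu.com/share/init?surl=AZCx_k1w4g3BBWatlomkyQ

Password: ff7y

2. Install the CA certificate and keys

Kubernetes components use TLS certificates to encrypt their communication. This guide uses cfssl, CloudFlare's PKI toolkit, to generate the Certificate Authority (CA) certificate and key files. The CA certificate is self-signed and is used to sign all other TLS certificates created later.

Run the following steps on one master only. The certificates only need to be created once; when adding new nodes to the cluster later, just copy the certificates under /etc/kubernetes/ to the new node.

2.1 Install CFSSL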

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

chmod +x cfssl_linux-amd64

mv cfssl_linux-amd64 /usr/local/bin/cfssl

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

chmod +x cfssljson_linux-amd64

mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

chmod +x cfssl-certinfo_linux-amd64

mv cfssl-certinfo_linux-amd64 /usr/local/bin/cfssl-certinfo

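2.2 Create the CA certificate and key

Create the CA configuration file

mkdir /root/ssl
cd /root/ssl
cat > ca-config.json << EOF
{
  "signing": {
    "default": {
      "expiry": "8760h"
    },
    "profiles": {
      "kubernetes": {
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ],
        "expiry": "8760h"
      }
    }
  }
}
EOF

- ca-config.json: multiple profiles can be defined, each with its own expiry, usages, and other parameters; a specific profile is chosen later when signing a certificate;

- signing: the certificate can be used to sign other certificates; the generated ca.pem has CA=TRUE;

- server auth: a client can use this CA to verify the certificate presented by a server;

- client auth: a server can use this CA to verify the certificate presented by a client;

Create the CA certificate signing request

cat > ca-csr.json << EOF
{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF

- "CN": Common Name. kube-apiserver extracts this field from the certificate and uses it as the user name of the request; browsers use this field to check whether a website is legitimate;

- "O": Organization. kube-apiserver extracts this field from the certificate and uses it as the group the requesting user belongs to;

Generate the CA certificate and private key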

cfssl gencert -initca ca-csr.json | cfssljson -bare ca

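2.3 Create the kubernetes certificate and key

Create the kubernetes certificate signing request file:

cat > kubernetes-csr.json << EOF
{
  "CN": "kubernetes",
  "hosts": [
    "127.0.0.1",
    "192.168.99.100",
    "192.168.99.101",
    "192.168.99.102",
    "10.254.0.1",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF

- The hosts field may be left empty. Even with the configuration above, adding new worker nodes to the cluster later does not require regenerating the certificate, because only etcd and the master components present it.

- If the hosts field is not empty, it must list every IP or domain name authorized to use the certificate. Because this certificate is later used by both the etcd cluster and the Kubernetes master cluster, the list above contains the host IPs of the etcd cluster and of the master cluster, plus the Service IP of the kubernetes service (10.254.0.1, the first address of the --service-cluster-ip-range configured later).

Generate the kubernetes certificate and private key

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kubernetes-csr.json | cfssljson -bare kubernetes

Verify the result

ls kubernetes*
kubernetes.csr  kubernetes-csr.json  kubernetes-key.pem  kubernetes.pem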
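Since the certificate is only valid for the addresses listed in hosts, it can be worth checking the list before signing. The following is a minimal sketch, not part of the original guide: `missing_hosts` is a hypothetical helper that simply greps the CSR file for each quoted IP, and the demo runs on a throwaway file rather than the real kubernetes-csr.json.

```shell
#!/usr/bin/env bash
# Hypothetical check (not from the original guide): report IPs missing from a CSR file.
# Usage: missing_hosts <csr.json> <ip>...
missing_hosts() {
  local file="$1" ip
  shift
  for ip in "$@"; do
    # -F: fixed string, so dots are not regex wildcards; the quotes avoid partial matches.
    grep -qF "\"$ip\"" "$file" || echo "$ip"
  done
}

# Demo on a throwaway copy of the IP entries used above.
demo_csr=$(mktemp)
cat > "$demo_csr" << EOF
{ "hosts": [ "127.0.0.1", "192.168.99.100", "192.168.99.101", "192.168.99.102", "10.254.0.1" ] }
EOF

# 192.168.99.103 is deliberately not in the list, so it is the only line printed.
missing_hosts "$demo_csr" 192.168.99.100 192.168.99.101 192.168.99.102 192.168.99.103
```

The check is deliberately simple: it looks for the quoted address anywhere in the file, which is enough for CSR files shaped like the one above.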

2.4 Create the admin certificate and key

Create the admin certificate signing request

cat > admin-csr.json << EOF
{
  "CN": "admin",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "system:masters",
      "OU": "System"
    }
  ]
}
EOF

- kube-apiserver uses RBAC to authorize requests from clients (such as kubelet, kube-proxy, and Pods);

- kube-apiserver predefines a number of bindings for RBAC. For example, the ClusterRoleBinding cluster-admin binds the Group system:masters to the ClusterRole cluster-admin, which grants permission to call every kube-apiserver API;

- The O field sets the Group of this certificate to system:masters. When kubectl uses this certificate to access kube-apiserver, authentication succeeds because the certificate is signed by the CA, and because the group is the pre-authorized system:masters, the client is granted access to all APIs.

Generate the admin certificate and private key

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

ls admin*
admin.csr  admin-csr.json  admin-key.pem  admin.pem

2.5 Create the kube-proxy certificate and key

Create the kube-proxy certificate signing request

cat > kube-proxy-csr.json << EOF
{
  "CN": "system:kube-proxy",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF

- CN sets the User of this certificate to system:kube-proxy;

- The predefined ClusterRoleBinding system:node-proxier binds the User system:kube-proxy to the ClusterRole system:node-proxier, which grants permission to call the proxy-related kube-apiserver APIs;

Generate the kube-proxy client certificate and private key

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

ls kube-proxy*
kube-proxy.csr  kube-proxy-csr.json  kube-proxy-key.pem  kube-proxy.pem

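2.6 Distribute the certificates

Copy the generated certificate and key files (the .pem files) to the /etc/kubernetes/ssl directory on every machine

for i in {100..102};do ssh 192.168.99.$i "mkdir -p /etc/kubernetes/ssl";done

for i in {100..102};do scp /root/ssl/*.pem 192.168.99.$i:/etc/kubernetes/ssl;done

3. Deploy etcd

etcd is installed on all three nodes; the steps below must be carried out for each of them.

3.1 Download the etcd package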

wget https://github.com/coreos/etcd/releases/download/v3.2.12/etcd-v3.2.12-linux-amd64.tar.gz

tar -xvf etcd-v3.2.12-linux-amd64.tar.gz

for i in {100..102};do scp etcd-v3.2.12-linux-amd64/etcd* 192.168.99.$i:/usr/local/bin;done

3.2 Configure the etcd service

Create the working directory

for i in {100..102};do ssh 192.168.99.$i "mkdir -p /var/lib/etcd";done

Create the systemd unit file on node01

cat > /usr/lib/systemd/system/etcd.service << EOF
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target
Documentation=https://github.com/coreos

[Service]
Type=notify
WorkingDirectory=/var/lib/etcd/
ExecStart=/usr/local/bin/etcd \\
  --name node01 \\
  --cert-file=/etc/kubernetes/ssl/kubernetes.pem \\
  --key-file=/etc/kubernetes/ssl/kubernetes-key.pem \\
  --peer-cert-file=/etc/kubernetes/ssl/kubernetes.pem \\
  --peer-key-file=/etc/kubernetes/ssl/kubernetes-key.pem \\
  --trusted-ca-file=/etc/kubernetes/ssl/ca.pem \\
  --peer-trusted-ca-file=/etc/kubernetes/ssl/ca.pem \\
  --initial-advertise-peer-urls https://192.168.99.100:2380 \\
  --listen-peer-urls https://192.168.99.100:2380 \\
  --listen-client-urls https://192.168.99.100:2379,http://127.0.0.1:2379 \\
  --advertise-client-urls https://192.168.99.100:2379 \\
  --initial-cluster-token etcd-cluster-0 \\
  --initial-cluster node01=https://192.168.99.100:2380,node02=https://192.168.99.101:2380,node03=https://192.168.99.102:2380 \\
  --initial-cluster-state new \\
  --data-dir=/var/lib/etcd
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

Create the systemd unit file on node02

cat > /usr/lib/systemd/system/etcd.service << EOF
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target
Documentation=https://github.com/coreos

[Service]
Type=notify
WorkingDirectory=/var/lib/etcd/
ExecStart=/usr/local/bin/etcd \\
  --name node02 \\
  --cert-file=/etc/kubernetes/ssl/kubernetes.pem \\
  --key-file=/etc/kubernetes/ssl/kubernetes-key.pem \\
  --peer-cert-file=/etc/kubernetes/ssl/kubernetes.pem \\
  --peer-key-file=/etc/kubernetes/ssl/kubernetes-key.pem \\
  --trusted-ca-file=/etc/kubernetes/ssl/ca.pem \\
  --peer-trusted-ca-file=/etc/kubernetes/ssl/ca.pem \\
  --initial-advertise-peer-urls https://192.168.99.101:2380 \\
  --listen-peer-urls https://192.168.99.101:2380 \\
  --listen-client-urls https://192.168.99.101:2379,http://127.0.0.1:2379 \\
  --advertise-client-urls https://192.168.99.101:2379 \\
  --initial-cluster-token etcd-cluster-0 \\
  --initial-cluster node01=https://192.168.99.100:2380,node02=https://192.168.99.101:2380,node03=https://192.168.99.102:2380 \\
  --initial-cluster-state new \\
  --data-dir=/var/lib/etcd
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

Create the systemd unit file on node03

cat > /usr/lib/systemd/system/etcd.service << EOF
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target
Documentation=https://github.com/coreos

[Service]
Type=notify
WorkingDirectory=/var/lib/etcd/
ExecStart=/usr/local/bin/etcd \\
  --name node03 \\
  --cert-file=/etc/kubernetes/ssl/kubernetes.pem \\
  --key-file=/etc/kubernetes/ssl/kubernetes-key.pem \\
  --peer-cert-file=/etc/kubernetes/ssl/kubernetes.pem \\
  --peer-key-file=/etc/kubernetes/ssl/kubernetes-key.pem \\
  --trusted-ca-file=/etc/kubernetes/ssl/ca.pem \\
  --peer-trusted-ca-file=/etc/kubernetes/ssl/ca.pem \\
  --initial-advertise-peer-urls https://192.168.99.102:2380 \\
  --listen-peer-urls https://192.168.99.102:2380 \\
  --listen-client-urls https://192.168.99.102:2379,http://127.0.0.1:2379 \\
  --advertise-client-urls https://192.168.99.102:2379 \\
  --initial-cluster-token etcd-cluster-0 \\
  --initial-cluster node01=https://192.168.99.100:2380,node02=https://192.168.99.101:2380,node03=https://192.168.99.102:2380 \\
  --initial-cluster-state new \\
  --data-dir=/var/lib/etcd
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

- The etcd working directory and data directory are both /var/lib/etcd. Create this directory before starting the service, otherwise startup fails with "Failed at step CHDIR spawning /usr/bin/etcd: No such file or directory";

- To secure communication, etcd needs its own certificate and private key (cert-file and key-file), the certificate, private key, and CA certificate for peer communication (peer-cert-file, peer-key-file, peer-trusted-ca-file), and the CA certificate used to verify clients (trusted-ca-file);

- The hosts field of the kubernetes-csr.json file used to create kubernetes.pem must contain the IPs of all etcd nodes, otherwise certificate verification fails;

- When --initial-cluster-state is new, the value of --name must appear in the --initial-cluster list;
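The three unit files differ only in the node name and IP. As a sketch under that assumption, one function could render the unit for any node; `render_etcd_unit` is a hypothetical name introduced here, and the flags are copied from the unit files in this section.

```shell
#!/usr/bin/env bash
# Hypothetical generator (not from the original guide): print the etcd unit for one node.
# Usage: render_etcd_unit <name> <ip>
render_etcd_unit() {
  local name="$1" ip="$2"
  # Unquoted heredoc: ${name} and ${ip} are expanded, "\\" becomes a single "\".
  cat << EOF
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target
Documentation=https://github.com/coreos

[Service]
Type=notify
WorkingDirectory=/var/lib/etcd/
ExecStart=/usr/local/bin/etcd \\
  --name ${name} \\
  --cert-file=/etc/kubernetes/ssl/kubernetes.pem \\
  --key-file=/etc/kubernetes/ssl/kubernetes-key.pem \\
  --peer-cert-file=/etc/kubernetes/ssl/kubernetes.pem \\
  --peer-key-file=/etc/kubernetes/ssl/kubernetes-key.pem \\
  --trusted-ca-file=/etc/kubernetes/ssl/ca.pem \\
  --peer-trusted-ca-file=/etc/kubernetes/ssl/ca.pem \\
  --initial-advertise-peer-urls https://${ip}:2380 \\
  --listen-peer-urls https://${ip}:2380 \\
  --listen-client-urls https://${ip}:2379,http://127.0.0.1:2379 \\
  --advertise-client-urls https://${ip}:2379 \\
  --initial-cluster-token etcd-cluster-0 \\
  --initial-cluster node01=https://192.168.99.100:2380,node02=https://192.168.99.101:2380,node03=https://192.168.99.102:2380 \\
  --initial-cluster-state new \\
  --data-dir=/var/lib/etcd
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
}

# Print the unit for node02; nothing is written to disk here.
render_etcd_unit node02 192.168.99.101
```

On a real node the output would be redirected to /usr/lib/systemd/system/etcd.service, exactly where the heredocs above write it.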

3.3 Start and verify the etcd service

Start the service

for i in {100..102};do ssh 192.168.99.$i "
systemctl daemon-reload;
systemctl enable etcd;
systemctl start etcd;
systemctl status etcd";done

The first etcd process to start appears to hang for a while as it waits for the etcd processes on the other nodes to join the cluster. This is normal.

Verify the etcd service by running the following on any etcd node

export ETCD_ENDPOINTS="https://192.168.99.100:2379,https://192.168.99.102:2379"

etcdctl \
  --endpoints=${ETCD_ENDPOINTS} \
  --ca-file=/etc/kubernetes/ssl/ca.pem \
  --cert-file=/etc/kubernetes/ssl/kubernetes.pem \
  --key-file=/etc/kubernetes/ssl/kubernetes-key.pem \
  cluster-health

## Output
member be2bba99f48e54c is healthy: got healthy result from https://192.168.99.100:2379
member bec754b230c8075e is healthy: got healthy result from https://192.168.99.102:2379
member dfc0880cac2a50c8 is healthy: got healthy result from https://192.168.99.101:2379
cluster is healthy
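When the health check is scripted, reading the output by eye is not an option. A small sketch, assuming the output format shown above: `check_etcd_health` is a hypothetical filter (not part of etcdctl) that succeeds only if the expected number of members report healthy and the final summary line is present.

```shell
#!/usr/bin/env bash
# Hypothetical filter (not from the original guide).
# Usage: etcdctl ... cluster-health | check_etcd_health <expected-members>
check_etcd_health() {
  local expected="$1" out healthy
  out=$(cat)
  # Count the per-member lines that report a healthy result.
  healthy=$(printf '%s\n' "$out" | grep -c '^member .* is healthy')
  printf '%s\n' "$out" | grep -q '^cluster is healthy$' && [ "$healthy" -eq "$expected" ]
}

# Demo on the sample output shown above.
if check_etcd_health 3 << 'EOF'
member be2bba99f48e54c is healthy: got healthy result from https://192.168.99.100:2379
member bec754b230c8075e is healthy: got healthy result from https://192.168.99.102:2379
member dfc0880cac2a50c8 is healthy: got healthy result from https://192.168.99.101:2379
cluster is healthy
EOF
then
  echo "etcd cluster ok"
else
  echo "etcd cluster degraded" >&2
fi
```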

4. Deploy flannel

Flannel is installed on all three nodes; the steps below must be carried out for each of them.

4.1 Download and install flannel

wget https://github.com/coreos/flannel/releases/download/v0.9.1/flannel-v0.9.1-linux-amd64.tar.gz

mkdir flannel

tar -xzvf flannel-v0.9.1-linux-amd64.tar.gz -C flannel

for i in {100..102};do scp flannel/{flanneld,mk-docker-opts.sh} root@192.168.99.$i:/usr/local/bin;done

4.2 Configure the network

Write the network range into etcd

These two commands only need to be run once, on any one node.

etcdctl --endpoints="https://192.168.99.100:2379,https://192.168.99.101:2379,https://192.168.99.102:2379" \
  --ca-file=/etc/kubernetes/ssl/ca.pem \
  --cert-file=/etc/kubernetes/ssl/kubernetes.pem \
  --key-file=/etc/kubernetes/ssl/kubernetes-key.pem \
  mkdir /kubernetes/network

etcdctl --endpoints="https://192.168.99.100:2379,https://192.168.99.101:2379,https://192.168.99.102:2379" \
  --ca-file=/etc/kubernetes/ssl/ca.pem \
  --cert-file=/etc/kubernetes/ssl/kubernetes.pem \
  --key-file=/etc/kubernetes/ssl/kubernetes-key.pem \
  mk /kubernetes/network/config '{"Network":"172.30.0.0/16","SubnetLen":24,"Backend":{"Type":"vxlan"}}'

4.3 Create the systemd unit file

cat > /usr/lib/systemd/system/flanneld.service << EOF
[Unit]
Description=Flanneld overlay address etcd agent
After=network.target
After=network-online.target
Wants=network-online.target
After=etcd.service
Before=docker.service

[Service]
Type=notify
ExecStart=/usr/local/bin/flanneld \\
  -etcd-cafile=/etc/kubernetes/ssl/ca.pem \\
  -etcd-certfile=/etc/kubernetes/ssl/kubernetes.pem \\
  -etcd-keyfile=/etc/kubernetes/ssl/kubernetes-key.pem \\
  -etcd-endpoints=https://192.168.99.100:2379,https://192.168.99.101:2379,https://192.168.99.102:2379 \\
  -etcd-prefix=/kubernetes/network \\
  -iface=enp0s8
ExecStartPost=/usr/local/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/docker
Restart=on-failure

[Install]
WantedBy=multi-user.target
RequiredBy=docker.service
EOF

- The mk-docker-opts.sh script writes the Pod subnet assigned to flanneld into /run/flannel/docker; docker later reads the parameters in this file at startup to configure the docker0 bridge;

- By default flanneld communicates with other nodes over the interface that holds the system default route. On machines with several network interfaces (for example an internal and a public network), use the -iface option (-iface=enp0s8 here) to choose the interface;

4.4 Start and verify flannel

Start flannel

for i in {100..102};do scp /usr/lib/systemd/system/flanneld.service root@192.168.99.$i:/usr/lib/systemd/system/;done

for i in {100..102};do ssh 192.168.99.$i "
systemctl daemon-reload;
systemctl enable flanneld;
systemctl start flanneld;
systemctl status flanneld";done

Check the flannel service status

/usr/local/bin/etcdctl \
  --endpoints=https://192.168.99.100:2379,https://192.168.99.101:2379,https://192.168.99.102:2379 \
  --ca-file=/etc/kubernetes/ssl/ca.pem \
  --cert-file=/etc/kubernetes/ssl/kubernetes.pem \
  --key-file=/etc/kubernetes/ssl/kubernetes-key.pem \
  ls /kubernetes/network/subnets

/kubernetes/network/subnets/172.30.31.0-24
/kubernetes/network/subnets/172.30.51.0-24
/kubernetes/network/subnets/172.30.95.0-24
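Each key listed under subnets encodes one node's Pod subnet, with "-" in place of "/". The sketch below converts the keys back to CIDR notation and checks that each is a /24 inside 172.30.0.0/16, matching the Network and SubnetLen values written to etcd earlier. `subnet_key_to_cidr` is a hypothetical helper, and the prefix test is a plain string match rather than real CIDR arithmetic.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not from the original guide): turn an etcd subnet key into CIDR notation.
# "/kubernetes/network/subnets/172.30.31.0-24" -> "172.30.31.0/24"
subnet_key_to_cidr() {
  local key="${1##*/}"          # drop everything up to the last "/"
  echo "${key%-*}/${key##*-}"   # address before the "-", prefix length after it
}

for key in \
  /kubernetes/network/subnets/172.30.31.0-24 \
  /kubernetes/network/subnets/172.30.51.0-24 \
  /kubernetes/network/subnets/172.30.95.0-24; do
  cidr=$(subnet_key_to_cidr "$key")
  case "$cidr" in
    172.30.*/24) echo "$cidr ok" ;;
    *)           echo "$cidr is outside 172.30.0.0/16 or not a /24" ;;
  esac
done
```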

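5. Deploy the kubectl tool and create the kubeconfig files

kubectl is the Kubernetes cluster management tool; any node with kubectl can manage the whole cluster.

This guide deploys it on the master node. A successful deployment produces the /root/.kube/config file, from which kubectl reads the kube-apiserver address, certificates, user name, and other information, so keep this file safe.

5.1 Download the package

wget https://dl.k8s.io/v1.10.3/kubernetes-client-linux-amd64.tar.gz

tar -xzvf kubernetes-client-linux-amd64.tar.gz

chmod a+x kubernetes/client/bin/kube*

scp kubernetes/client/bin/kube* node01:/usr/local/bin/

scp kubernetes/client/bin/kube* node02:/usr/local/bin/

scp kubernetes/client/bin/kube* node03:/usr/local/bin/

5.2 Create /root/.kube/config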

# Set the cluster parameters; --server is the IP of the master node
kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=https://192.168.99.100:6443

# Set the client credentials
kubectl config set-credentials admin \
  --client-certificate=/etc/kubernetes/ssl/admin.pem \
  --embed-certs=true \
  --client-key=/etc/kubernetes/ssl/admin-key.pem

# Set the context parameters
kubectl config set-context kubernetes \
  --cluster=kubernetes \
  --user=admin

# Set the default context
kubectl config use-context kubernetes

- The O field of the admin.pem certificate is system:masters. The predefined ClusterRoleBinding cluster-admin binds the Group system:masters to the ClusterRole cluster-admin, which grants permission to call all kube-apiserver APIs.

5.3 Create bootstrap.kubeconfig

kubelet authenticates to kube-apiserver with bootstrap.kubeconfig.

# Generate the token variable
export BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')

cat > token.csv <<EOF
${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"
EOF

mv token.csv /etc/kubernetes/
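The token pipeline reads 16 random bytes and renders them as hex, so the result should be a 32-character lowercase hex string. A minimal sanity check, assuming that format; `is_valid_token` is a hypothetical helper, not something kubectl or kube-apiserver provides.

```shell
#!/usr/bin/env bash
# Same pipeline as above: 16 random bytes rendered as hex, spaces removed.
BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')

# Hypothetical sanity check (not in the original guide): exactly 32 lowercase hex characters.
is_valid_token() {
  printf '%s' "$1" | grep -Eq '^[0-9a-f]{32}$'
}

if is_valid_token "$BOOTSTRAP_TOKEN"; then
  echo "token format ok"
else
  echo "unexpected token format" >&2
fi
```

An empty or malformed token would end up in both token.csv and bootstrap.kubeconfig, so checking it once before writing either file is cheap insurance.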

# Set the cluster parameters; --server is the IP of the master node
kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=https://192.168.99.100:6443 \
  --kubeconfig=bootstrap.kubeconfig

# Set the client credentials
kubectl config set-credentials kubelet-bootstrap \
  --token=${BOOTSTRAP_TOKEN} \
  --kubeconfig=bootstrap.kubeconfig

# Set the context parameters
kubectl config set-context default \
  --cluster=kubernetes \
  --user=kubelet-bootstrap \
  --kubeconfig=bootstrap.kubeconfig

# Set the default context
kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

mv bootstrap.kubeconfig /etc/kubernetes/

5.4 Create kube-proxy.kubeconfig

# Set the cluster parameters; --server is the IP of the master node
kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=https://192.168.99.100:6443 \
  --kubeconfig=kube-proxy.kubeconfig

# Set the client credentials
kubectl config set-credentials kube-proxy \
  --client-certificate=/etc/kubernetes/ssl/kube-proxy.pem \
  --client-key=/etc/kubernetes/ssl/kube-proxy-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-proxy.kubeconfig

# Set the context parameters
kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-proxy \
  --kubeconfig=kube-proxy.kubeconfig

# Set the default context
kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

mv kube-proxy.kubeconfig /etc/kubernetes/

- --embed-certs is true for both the cluster parameters and the client credentials, so the contents of the files referenced by certificate-authority, client-certificate, and client-key are written into the generated kube-proxy.kubeconfig file;

- The CN of the kube-proxy.pem certificate is system:kube-proxy. The predefined ClusterRoleBinding system:node-proxier binds the User system:kube-proxy to the ClusterRole system:node-proxier, which grants permission to call the proxy-related kube-apiserver APIs;

for i in {100..102};do scp /etc/kubernetes/{kube-proxy.kubeconfig,bootstrap.kubeconfig} root@192.168.99.$i:/etc/kubernetes/;done

6. Deploy the master node

Everything above was preparation; the actual Kubernetes deployment starts here.

Deploy on the master node.

6.1 Download the installation files

wget https://dl.k8s.io/v1.10.3/kubernetes-server-linux-amd64.tar.gz

tar -zxvf kubernetes-server-linux-amd64.tar.gz

scp -r kubernetes/server/bin/{kube-apiserver,kube-controller-manager,kube-scheduler,kubectl,kube-proxy,kubelet} node01:/usr/local/bin/

scp -r kubernetes/server/bin/{kube-apiserver,kube-controller-manager,kube-scheduler,kubectl,kube-proxy,kubelet} node02:/usr/local/bin/

scp -r kubernetes/server/bin/{kube-apiserver,kube-controller-manager,kube-scheduler,kubectl,kube-proxy,kubelet} node03:/usr/local/bin/

6.2 Configure and start kube-apiserver

cat > /usr/lib/systemd/system/kube-apiserver.service << EOF
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target
After=etcd.service

[Service]
ExecStart=/usr/local/bin/kube-apiserver \\
  --logtostderr=true \\
  --admission-control=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota,NodeRestriction \\
  --advertise-address=192.168.99.100 \\
  --bind-address=192.168.99.100 \\
  --insecure-bind-address=192.168.99.100 \\
  --authorization-mode=RBAC \\
  --runtime-config=rbac.authorization.k8s.io/v1alpha1 \\
  --kubelet-https=true \\
  --enable-bootstrap-token-auth \\
  --token-auth-file=/etc/kubernetes/token.csv \\
  --service-cluster-ip-range=10.254.0.0/16 \\
  --service-node-port-range=8400-10000 \\
  --tls-cert-file=/etc/kubernetes/ssl/kubernetes.pem \\
  --tls-private-key-file=/etc/kubernetes/ssl/kubernetes-key.pem \\
  --client-ca-file=/etc/kubernetes/ssl/ca.pem \\
  --service-account-key-file=/etc/kubernetes/ssl/ca-key.pem \\
  --etcd-cafile=/etc/kubernetes/ssl/ca.pem \\
  --etcd-certfile=/etc/kubernetes/ssl/kubernetes.pem \\
  --etcd-keyfile=/etc/kubernetes/ssl/kubernetes-key.pem \\
  --etcd-servers=https://192.168.99.100:2379,https://192.168.99.101:2379,https://192.168.99.102:2379 \\
  --enable-swagger-ui=true \\
  --allow-privileged=true \\
  --apiserver-count=3 \\
  --audit-log-maxage=30 \\
  --audit-log-maxbackup=3 \\
  --audit-log-maxsize=100 \\
  --audit-log-path=/var/lib/audit.log \\
  --event-ttl=1h \\
  --v=2
Restart=on-failure
RestartSec=5
Type=notify
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

- --authorization-mode=RBAC enables RBAC authorization on the secure port and rejects unauthorized requests;

- kube-scheduler and kube-controller-manager are usually deployed on the same machine as kube-apiserver and talk to it over the insecure port;

- kubelet, kube-proxy, and kubectl are deployed on the other Node machines. When they access kube-apiserver over the secure port, they must first pass TLS certificate authentication and then RBAC authorization;

- kube-proxy and kubectl pass RBAC authorization through the User and Group set in the certificates they use;

- If the kubelet TLS Bootstrap mechanism is used, do not also set the --kubelet-certificate-authority, --kubelet-client-certificate, and --kubelet-client-key options; otherwise kube-apiserver later fails to verify the kubelet certificate with the error "x509: certificate signed by unknown authority";

- --admission-control must include ServiceAccount, otherwise deploying cluster add-ons fails;

- --bind-address must not be 127.0.0.1;

- --runtime-config is set to rbac.authorization.k8s.io/v1alpha1, which enables that API version at runtime;

- --service-cluster-ip-range specifies the Service cluster IP range; this range must not be routable;

- --service-node-port-range specifies the NodePort port range;

- By default Kubernetes objects are stored under the /registry path in etcd; this can be changed with the --etcd-prefix option;

Start kube-apiserver

systemctl daemon-reload
systemctl enable kube-apiserver
systemctl restart kube-apiserver
systemctl status kube-apiserver

6.3 Configure and start kube-controller-manager

cat > /usr/lib/systemd/system/kube-controller-manager.service << EOF
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]
ExecStart=/usr/local/bin/kube-controller-manager \\
  --logtostderr=true \\
  --address=127.0.0.1 \\
  --master=http://192.168.99.100:8080 \\
  --allocate-node-cidrs=true \\
  --service-cluster-ip-range=10.254.0.0/16 \\
  --cluster-cidr=172.30.0.0/16 \\
  --cluster-name=kubernetes \\
  --cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem \\
  --cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \\
  --service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem \\
  --root-ca-file=/etc/kubernetes/ssl/ca.pem \\
  --leader-elect=true \\
  --v=2
Restart=on-failure
LimitNOFILE=65536
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF

- --address must be 127.0.0.1, because kube-apiserver currently expects the scheduler and controller-manager to run on the same machine;

- --master=http://{MASTER_IP}:8080: communicate with kube-apiserver over the insecure port 8080;

- --cluster-cidr specifies the CIDR range of Pods in the cluster; this network must be routable between all Nodes (flanneld guarantees this);

- --service-cluster-ip-range specifies the CIDR range of Services in the cluster; this network must not be routable between Nodes, and the value must match the one given to kube-apiserver;

- The certificate and private key given by --cluster-signing-* are used to sign the certificates created for TLS Bootstrap;

- --root-ca-file is used to verify the kube-apiserver certificate; only when it is set is the CA certificate placed in the ServiceAccount of Pod containers;

- --leader-elect=true: when several machines form a master cluster, elect a single active kube-controller-manager process;

Start the service

systemctl daemon-reload
systemctl enable kube-controller-manager
systemctl restart kube-controller-manager
systemctl status kube-controller-manager

6.4 Configure and start kube-scheduler

cat > /usr/lib/systemd/system/kube-scheduler.service << EOF
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]
ExecStart=/usr/local/bin/kube-scheduler \\
  --logtostderr=true \\
  --address=127.0.0.1 \\
  --master=http://192.168.99.100:8080 \\
  --leader-elect=true \\
  --v=2
Restart=on-failure
LimitNOFILE=65536
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF

- --address must be 127.0.0.1, because kube-apiserver currently expects the scheduler and controller-manager to run on the same machine;

- --master=http://{MASTER_IP}:8080: communicate with kube-apiserver over the insecure port 8080;

- --leader-elect=true: when several machines form a master cluster, elect a single active kube-scheduler process;

Start kube-scheduler

systemctl daemon-reload
systemctl enable kube-scheduler
systemctl restart kube-scheduler
systemctl status kube-scheduler

6.5 Verify the master node

kubectl get componentstatuses

## Output
NAME                 STATUS    MESSAGE              ERROR
etcd-1               Healthy   {"health": "true"}
etcd-2               Healthy   {"health": "true"}
etcd-0               Healthy   {"health": "true"}
controller-manager   Healthy   ok
scheduler            Healthy   ok

7. Deploy the Node machines

The master also acts as a Node, so the installation must be carried out on all three machines.

7.1 Download the files

wget https://download.docker.com/linux/static/stable/x86_64/docker-17.12.0-ce.tgz

tar -xvf docker-17.12.0-ce.tgz

for i in {100..102};do scp docker/docker* root@192.168.99.$i:/usr/local/bin;done

7.2 Configure and start docker

cat > /usr/lib/systemd/system/docker.service << EOF
[Unit]
Description=Docker Application Container Engine
Documentation=http://docs.docker.io

[Service]
Environment="PATH=/usr/local/bin:/bin:/sbin:/usr/bin:/usr/sbin"
EnvironmentFile=-/run/flannel/subnet.env
EnvironmentFile=-/run/flannel/docker
ExecStart=/usr/local/bin/dockerd \\
  --exec-opt native.cgroupdriver=cgroupfs \\
  --log-level=error \\
  --log-driver=json-file \\
  --storage-driver=overlay \\
  \$DOCKER_NETWORK_OPTIONS
ExecReload=/bin/kill -s HUP \$MAINPID
Restart=on-failure
RestartSec=5
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
Delegate=yes
KillMode=process

[Install]
WantedBy=multi-user.target
EOF

- $DOCKER_NETWORK_OPTIONS and $MAINPID must not be substituted; they are escaped in the heredoc so that they reach the unit file literally;

- At startup flanneld writes the network configuration into the DOCKER_NETWORK_OPTIONS variable in /run/flannel/docker; passing this variable on the dockerd command line sets the docker0 bridge parameters;

- If several EnvironmentFile options are given, /run/flannel/docker must come last (so that docker0 uses the bip parameter generated by flanneld);

- Do not disable the --iptables and --ip-masq options, which are enabled by default;

- If the kernel is fairly recent, the overlay storage driver is recommended;

- --exec-opt native.cgroupdriver can be set to either "cgroupfs" or "systemd"; this guide uses cgroupfs

Sync the configuration to the other nodes

for i in {100..102};do scp /usr/lib/systemd/system/docker.service root@192.168.99.$i:/usr/lib/systemd/system/;done

Start the service

for i in {100..102};do ssh 192.168.99.$i "
systemctl daemon-reload;
systemctl enable docker;
systemctl start docker;
systemctl status docker";done

7.3 Install and configure kubelet

When kubelet starts, it sends a TLS bootstrapping request to kube-apiserver. The kubelet-bootstrap user from the bootstrap token file must first be granted the system:node-bootstrapper role; only then does kubelet have permission to create certificate signing requests.

The following command only needs to be run once, on the master node

cd /etc/kubernetes

kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

The master already has the two files below, so they only need to be copied to the other two nodes with scp

7.3.1 Download and install kubelet and kube-proxy

wget https://dl.k8s.io/v1.10.3/kubernetes-server-linux-amd64.tar.gz

tar -xzvf kubernetes-server-linux-amd64.tar.gz

cp -r kubernetes/server/bin/{kube-proxy,kubelet} /usr/local/bin/

for i in {100..102};do scp /usr/local/bin/{kube-proxy,kubelet} root@192.168.99.$i:/usr/local/bin/;done

Create the kubelet working directory

for i in {100..102};do ssh 192.168.99.$i "mkdir -p /var/lib/kubelet";done

7.3.2 Configure and start kubelet

cat > /usr/lib/systemd/system/kubelet.service << EOF
[Unit]
Description=Kubernetes Kubelet
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=docker.service
Requires=docker.service

[Service]
WorkingDirectory=/var/lib/kubelet
ExecStart=/usr/local/bin/kubelet \\
  --address=192.168.99.100 \\
  --hostname-override=192.168.99.100 \\
  --pod-infra-container-image=gcr.io/google_containers/pause-amd64:3.0 \\
  --experimental-bootstrap-kubeconfig=/etc/kubernetes/bootstrap.kubeconfig \\
  --kubeconfig=/etc/kubernetes/kubelet.kubeconfig \\
  --cert-dir=/etc/kubernetes/ssl \\
  --container-runtime=docker \\
  --cluster-dns=10.254.0.2 \\
  --cluster-domain=cluster.local \\
  --hairpin-mode promiscuous-bridge \\
  --allow-privileged=true \\
  --serialize-image-pulls=false \\
  --register-node=true \\
  --logtostderr=true \\
  --cgroup-driver=cgroupfs \\
  --v=2
Restart=on-failure
KillMode=process
LimitNOFILE=65536
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF

- --address is the IP of the local machine. It must not be 127.0.0.1, otherwise Pods later fail to reach the kubelet API, because 127.0.0.1 inside a Pod points at the Pod itself rather than at kubelet;

- --hostname-override is also the IP of the local machine;

- --cgroup-driver is set to cgroupfs (the cgroup driver only has to be the same for docker and kubelet);

- --experimental-bootstrap-kubeconfig points to the bootstrap kubeconfig file; kubelet uses the user name and token in that file to send the TLS Bootstrapping request to kube-apiserver;

- Once the administrator approves the CSR, kubelet automatically creates the certificate and private key files (kubelet-client.crt and kubelet-client.key) in the --cert-dir directory and then writes the --kubeconfig file (the file named by --kubeconfig is created automatically);

- Specifying the kube-apiserver address in the --kubeconfig file is recommended. If --api-servers is not set, --require-kubeconfig must be set for kubelet to read the kube-apiserver address from the configuration file; otherwise kubelet cannot find kube-apiserver after starting (the log reports that no API Server was found) and kubectl get nodes does not return the corresponding Node;

- --cluster-dns specifies the Service IP of kubedns (it can be allocated in advance and then assigned when the kubedns service is created later), and --cluster-domain specifies the domain suffix; both options must be set for either to take effect;

- --cluster-domain sets the search domain in /etc/resolv.conf when a Pod starts. It was initially set to cluster.local. (with a trailing dot), which resolved Service DNS names correctly but failed to resolve the FQDN pod names of headless services. Changing it to cluster.local, without the trailing dot, fixes the problem;

- The kubelet.kubeconfig file named by --kubeconfig=/etc/kubernetes/kubelet.kubeconfig does not exist before kubelet starts for the first time. As described below, it is generated automatically once the CSR is approved. If ~/.kube/config already exists on your node, you can copy it to this path and rename it kubelet.kubeconfig. All Node machines can share the same kubelet.kubeconfig file, so that newly added nodes join the cluster without creating a CSR. Likewise, on any host that can reach the cluster, running kubectl --kubeconfig with the ~/.kube/config file passes authentication, because the file already carries credentials that identify you as the admin user with full permissions on the cluster.

Sync the configuration

for i in {100..102};do scp /usr/lib/systemd/system/kubelet.service root@192.168.99.$i:/usr/lib/systemd/system/;done

ssh 192.168.99.101 "sed -i 's/192.168.99.100/192.168.99.101/g' /usr/lib/systemd/system/kubelet.service"

ssh 192.168.99.102 "sed -i 's/192.168.99.100/192.168.99.102/g' /usr/lib/systemd/system/kubelet.service"

ssh 192.168.99.102 "cat /usr/lib/systemd/system/kubelet.service"
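The sed commands above rewrite every occurrence of the master IP in the copied unit file. To preview what such a rewrite does before running it over ssh, the same substitution can be tried on a scratch file. This is only an illustration: `localize_unit` is a hypothetical helper, and the two sample lines stand in for the real kubelet.service.

```shell
#!/usr/bin/env bash
# Hypothetical dry run (not from the original guide): preview the per-node rewrite locally.
# Usage: localize_unit <template-file> <node-ip>
localize_unit() {
  # Dots are escaped so they match literally rather than "any character".
  sed "s/192\.168\.99\.100/$2/g" "$1"
}

# Scratch file holding the two per-node flags from kubelet.service.
tmpl=$(mktemp)
cat > "$tmpl" << 'EOF'
--address=192.168.99.100 \
--hostname-override=192.168.99.100 \
EOF

# Prints both flags with 192.168.99.102 substituted; the scratch file itself is unchanged.
localize_unit "$tmpl" 192.168.99.102
```

A blanket replacement like this is safe for kubelet.service because the master IP only appears in the two per-node flags; a unit that also contained the apiserver address would need a narrower pattern.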

Start kubelet

for i in {100..102};do ssh 192.168.99.$i "
systemctl daemon-reload;
systemctl enable kubelet;
systemctl start kubelet;
systemctl status kubelet";done

Approve the TLS certificate requests

On first start, kubelet sends a certificate signing request to kube-apiserver. The Node joins the cluster only after the request is approved.

After kubelet has been deployed on all three nodes, approve the requests on the master node

# List the certificate signing requests
kubectl get csr

NAME                                                   AGE   REQUESTOR           CONDITION
node-csr--eUQ3Gbj-kKAFGnvsNcDXaaKSkgOP6qg4yFUzuJcoIo   29s   kubelet-bootstrap   Pending
node-csr-3Sb1MgoFpVDeI28qKujAkCHTvZELPMKh1QoQtLB1Vv0   29s   kubelet-bootstrap   Pending
node-csr-4D7r9R2I7XWu1oK0d2HkFb1XggOIJ9XeXaiN_lwb0nQ   28s   kubelet-bootstrap   Pending

# Approve
kubectl certificate approve node-csr--eUQ3Gbj-kKAFGnvsNcDXaaKSkgOP6qg4yFUzuJcoIo
kubectl certificate approve node-csr-3Sb1MgoFpVDeI28qKujAkCHTvZELPMKh1QoQtLB1Vv0
kubectl certificate approve node-csr-4D7r9R2I7XWu1oK0d2HkFb1XggOIJ9XeXaiN_lwb0nQ

kubectl get csr

# Output
NAME                                                   AGE   REQUESTOR           CONDITION
node-csr--eUQ3Gbj-kKAFGnvsNcDXaaKSkgOP6qg4yFUzuJcoIo   2m    kubelet-bootstrap   Approved,Issued
node-csr-3Sb1MgoFpVDeI28qKujAkCHTvZELPMKh1QoQtLB1Vv0   2m    kubelet-bootstrap   Approved,Issued
node-csr-4D7r9R2I7XWu1oK0d2HkFb1XggOIJ9XeXaiN_lwb0nQ   1m    kubelet-bootstrap   Approved,Issued

kubectl get nodes

# Returns
No resources found
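Copying each request name by hand gets tedious as the node count grows. The sketch below extracts the names of Pending requests from the tabular output shown above; `pending_csrs` is a hypothetical filter, and it assumes CONDITION is the last column, as in that output.

```shell
#!/usr/bin/env bash
# Hypothetical filter (not from the original guide): print the names of Pending CSRs.
# Reads `kubectl get csr` output on stdin; skips the header row.
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Demo with two rows in the format shown above; only the Pending one is printed.
pending_csrs << 'EOF'
NAME                                                   AGE   REQUESTOR           CONDITION
node-csr-3Sb1MgoFpVDeI28qKujAkCHTvZELPMKh1QoQtLB1Vv0   29s   kubelet-bootstrap   Pending
node-csr-4D7r9R2I7XWu1oK0d2HkFb1XggOIJ9XeXaiN_lwb0nQ   2m    kubelet-bootstrap   Approved,Issued
EOF
```

On the master, each name printed by `kubectl get csr | pending_csrs` could then be passed to `kubectl certificate approve`, after confirming that the requests really come from your own nodes.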

Bind the role on the master

kubectl get nodes

kubectl describe clusterrolebindings system:node

kubectl create clusterrolebinding kubelet-node-clusterbinding \
  --clusterrole=system:node --user=system:node:192.168.99.100

kubectl describe clusterrolebindings kubelet-node-clusterbinding

# Alternatively, grant the system:node ClusterRole cluster-wide to the group "system:nodes".
# Run this instead of the per-user binding above: both use the same binding name, so only one of them can be created.
kubectl create clusterrolebinding kubelet-node-clusterbinding --clusterrole=system:node --group=system:nodes

kubectl get nodes

List the nodes that have joined the cluster

kubectl get nodes

NAME             STATUS   ROLES    AGE   VERSION
192.168.99.100   Ready    <none>   13s   v1.10.3
192.168.99.101   Ready    <none>   50s   v1.10.3
192.168.99.102   Ready    <none>   26s   v1.10.3

7.4 Install and configure kube-proxy

7.4.1 Create the kube-proxy working directory

for i in {100..102};do ssh 192.168.99.$i "mkdir -p /var/lib/kube-proxy";done

7.4.2 Configure and start kube-proxy

cat > /usr/lib/systemd/system/kube-proxy.service << EOF
[Unit]
Description=Kubernetes Kube-Proxy Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target

[Service]
WorkingDirectory=/var/lib/kube-proxy
ExecStart=/usr/local/bin/kube-proxy \\
  --bind-address=192.168.99.100 \\
  --hostname-override=192.168.99.100 \\
  --cluster-cidr=172.30.0.0/16 \\
  --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig \\
  --logtostderr=true \\
  --v=2
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

- --bind-address is the IP of the local machine;

- --hostname-override is the IP of the local machine and must match the value given to kubelet; otherwise kube-proxy cannot find the Node after starting and creates no iptables rules;

- --cluster-cidr is the Pod network and must match the --cluster-cidr of kube-controller-manager (172.30.0.0/16 here). kube-proxy uses it to tell cluster-internal traffic from external traffic, and only performs SNAT on requests to Service IPs when --cluster-cidr or --masquerade-all is set;

- The file named by --kubeconfig embeds the kube-apiserver address, user name, certificate, key, and other request and authentication information;

- The predefined ClusterRoleBinding system:node-proxier binds the User system:kube-proxy to the ClusterRole system:node-proxier, which grants permission to call the proxy-related kube-apiserver APIs;

Sync the configuration

for i in {100..102};do scp /usr/lib/systemd/system/kube-proxy.service root@192.168.99.$i:/usr/lib/systemd/system/;done

ssh 192.168.99.101 "sed -i 's/192.168.99.100/192.168.99.101/g' /usr/lib/systemd/system/kube-proxy.service"

ssh 192.168.99.102 "sed -i 's/192.168.99.100/192.168.99.102/g' /usr/lib/systemd/system/kube-proxy.service"

ssh 192.168.99.102 "cat /usr/lib/systemd/system/kube-proxy.service"

Start kube-proxy

for i in {100..102};do ssh 192.168.99.$i "
systemctl daemon-reload;
systemctl enable kube-proxy;
systemctl start kube-proxy;
systemctl status kube-proxy";done

If you deploy the other two nodes by hand instead, repeat the steps above on each of them and replace the IPs accordingly.

8. Install the DNS add-on

Install on the master node

8.1 Download the installation files

wget https://github.com/kubernetes/kubernetes/releases/download/v1.10.3/kubernetes.tar.gz

tar xzvf kubernetes.tar.gz

cd /root/kubernetes/cluster/addons/dns

mv kubedns-svc.yaml.sed kubedns-svc.yaml

Replace $DNS_SERVER_IP in the file with 10.254.0.2

sed -i 's/$DNS_SERVER_IP/10.254.0.2/g' ./kubedns-svc.yaml

mv ./kubedns-controller.yaml.sed ./kubedns-controller.yaml

Replace $DNS_DOMAIN with cluster.local

sed -i 's/$DNS_DOMAIN/cluster.local/g' ./kubedns-controller.yaml

ls *.yaml
kubedns-cm.yaml  kubedns-controller.yaml  kubedns-sa.yaml  kubedns-svc.yaml
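The two sed commands rely on single quotes so that the shell leaves $DNS_SERVER_IP and $DNS_DOMAIN alone. A quick way to convince yourself, sketched on a scratch file: the two sample lines below are illustrative stand-ins, not copies of the real manifests.

```shell
#!/usr/bin/env bash
# Hypothetical demo on a scratch file (the real manifests are not touched).
demo=$(mktemp)
cat > "$demo" << 'EOF'
  clusterIP: $DNS_SERVER_IP
  - --domain=$DNS_DOMAIN.
EOF

# Single quotes keep the shell from expanding the placeholders; inside the sed
# pattern a "$" that is not at the very end is matched as a literal character.
rendered=$(sed -e 's/$DNS_SERVER_IP/10.254.0.2/g' -e 's/$DNS_DOMAIN/cluster.local/g' "$demo")
echo "$rendered"

# Warn if any placeholder survived the substitution.
if echo "$rendered" | grep -q '\$DNS_'; then
  echo "unreplaced placeholder found" >&2
fi
```

The same grep for leftover `$DNS_` placeholders can be run against the real kubedns-*.yaml files before creating the resources.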

8.2 Create the resources

kubectl create -f .

# Or
kubectl create -f kubedns-cm.yaml -f kubedns-controller.yaml -f kubedns-sa.yaml -f kubedns-svc.yaml

## Output
configmap "kube-dns" created
deployment "kube-dns" created
serviceaccount "kube-dns" created
service "kube-dns" created

9. Deploy the dashboard add-on

Deploy on the master node

9.1 Download the deployment file

wget https://raw.githubusercontent.com/kubernetes/dashboard/v1.8.1/src/deploy/recommended/kubernetes-dashboard.yaml

9.2 Edit kubernetes-dashboard.yaml

Find the kind: Service section and add type: NodePort together with a nodePort

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 8510
  selector:
    k8s-app: kubernetes-dashboard
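The nodePort chosen here has to fall inside the --service-node-port-range given to kube-apiserver in section 6.2 (8400-10000 in this guide). A minimal sketch of that check; `port_in_range` is a hypothetical helper introduced for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical check (not from the original guide): is a nodePort inside the
# range passed to kube-apiserver via --service-node-port-range?
# Usage: port_in_range <port> <min-max>
port_in_range() {
  local port="$1" min="${2%-*}" max="${2#*-}"
  [ "$port" -ge "$min" ] && [ "$port" -le "$max" ]
}

if port_in_range 8510 8400-10000; then
  echo "nodePort 8510 is allowed"
else
  echo "nodePort 8510 is outside the configured range"
fi
```

With the values used in this guide the check passes; a port such as 30000 would be rejected unless the apiserver range were widened.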

9.3 Create kubernetes-dashboard

kubectl create -f kubernetes-dashboard.yaml

If the dashboard reports the error

configmaps is forbidden: User "system:serviceaccount:kube-system:kubernetes-dashboard" cannot list configmaps in the namespace "default"

the ServiceAccount needs to be authorized.

9.4 Create the RBAC authorization

cat > kubernetes-dashboard-admin.rbac.yaml << EOF
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1alpha1
metadata:
  name: dashboard-admin
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system
EOF

kubectl create -f kubernetes-dashboard-admin.rbac.yaml

10. Deploy the heapster add-on

10.1 Download the installation files

wget https://github.com/kubernetes/heapster/archive/v1.5.0.tar.gz

tar xzvf ./v1.5.0.tar.gz

cd ./heapster-1.5.0/

10.2 Create the pods

kubectl create -f deploy/kube-config/influxdb/

kubectl create -f deploy/kube-config/rbac/heapster-rbac.yaml

10.3 Confirm that all pods started normally

kubectl get pods --all-namespaces

# Output
kube-system   heapster-5bc779895-4snvl                1/1   Running   0   3m
kube-system   kube-dns-778977457c-dtf7x               3/3   Running   0   2h
kube-system   kubernetes-dashboard-7c5d596d8c-7mlj6   1/1   Running   0   2h
kube-system   monitoring-grafana-5bccc9f786-9gkvf     1/1   Running   0   3m
kube-system   monitoring-influxdb-85cb4985d4-s8rwc    1/1   Running   0   3m
