Today's homework:

    import requests
    import re
    import time

    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.77 Safari/537.36',
    }


    def get_index(url):
        # Pause between page requests
        time.sleep(1)
        response1 = requests.get(url, headers=headers)
        return response1


    def parse_index(text):
        # Extract (ip, port) pairs from the table rows and join them as "ip:port"
        ip_list1 = re.findall('<tr>.*?<td data-title="IP">(.*?)</td>.*?<td data-title="PORT">(.*?)</td>', text, re.S)
        for ip_port in ip_list1:
            ip1 = ':'.join(ip_port)
            yield ip1


    def test_ip(ip2):
        print('Testing ip: %s' % ip2)
        try:
            proxies = {'https': ip2}
            # IP test site
            ip_url1 = 'https://www.ipip.net/'
            # Request the test site through the proxy; a 200 response means the proxy works
            response2 = requests.get(ip_url1, headers=headers, proxies=proxies, timeout=1)
            if response2.status_code == 200:
                return ip2
        # An invalid proxy raises an exception
        except Exception as e:
            print(e)


    # Crawl the NBA site through a working proxy
    def spider_nba(good_ip1):
        url = 'https://china.nba.com/'
        proxies = {'https': good_ip1}
        response3 = requests.get(url, headers=headers, proxies=proxies)
        print(response3.status_code)
        print(response3.text)


    if __name__ == '__main__':
        base_url = 'https://www.kuaidaili.com/free/inha/{}/'
        for line in range(1, 2905):
            ip_url = base_url.format(line)
            response = get_index(ip_url)
            ip_list = parse_index(response.text)
            for ip in ip_list:
                good_ip = test_ip(ip)
                if good_ip:
                    spider_nba(good_ip)

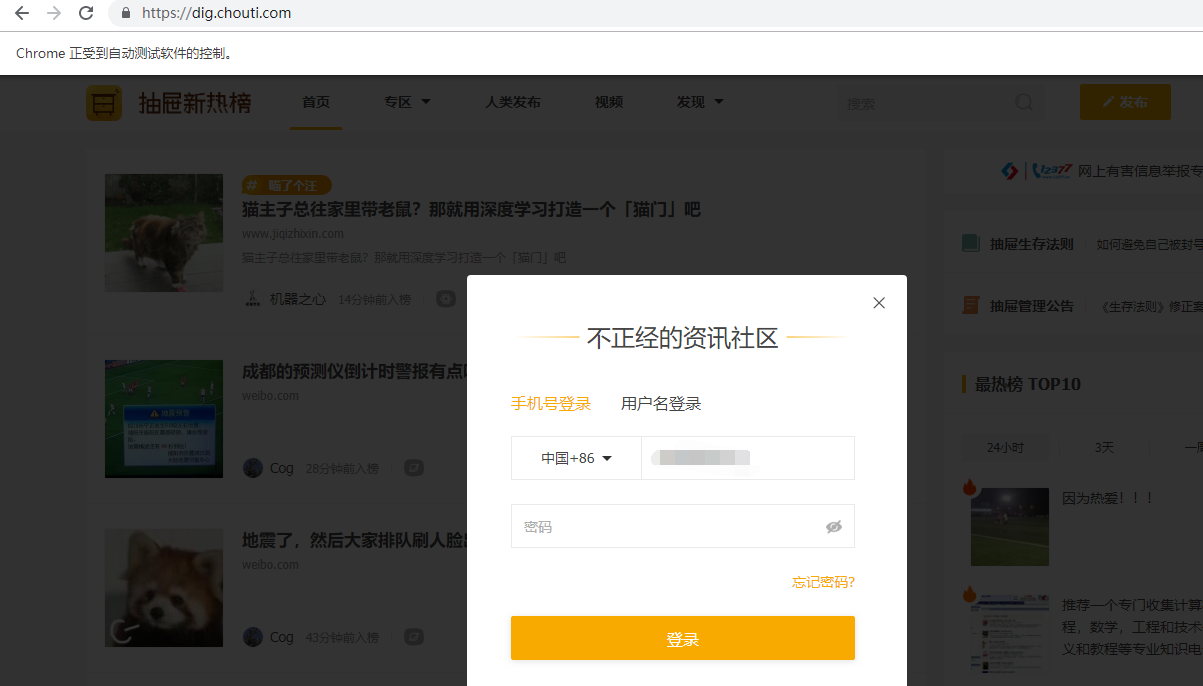

Second assignment and screenshot

    from selenium import webdriver
    import time

    # Get the driver object
    driver = webdriver.Chrome()

    try:
        # Log in to Chouti (dig.chouti.com) automatically
        # Send a GET request
        driver.get('https://dig.chouti.com/')
        # Implicit wait: element lookups retry for up to 10 seconds
        driver.implicitly_wait(10)

        # Find the login button that opens the login dialog
        send_tag = driver.find_element_by_id('login_btn')
        send_tag.click()

        # Find the phone number input
        username = driver.find_element_by_class_name('login-phone')
        username.send_keys('****')
        time.sleep(1)

        # Find the password input
        password = driver.find_element_by_class_name('pwd-password-input')
        password.send_keys('***')
        time.sleep(1)

        # Find the login button inside the dialog (the second link with the text '登录')
        login = driver.find_elements_by_link_text('登录')
        login[1].click()
        time.sleep(10)

    finally:
        driver.close()

    import requests
    import re

    # Step 1: request the login page to obtain the token
    '''
    Request url:
        https://github.com/login
    Request method:
        GET
    Response headers:
        Set-Cookie
    Request headers:
        Cookie
        User-Agent
    '''
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.80 Safari/537.36'
    }
    response = requests.get(url='https://github.com/login', headers=headers)
    # print(response.text)
    # Print the page first, search it for "token" with ctrl+f, then use the
    # surrounding markup as the pattern that captures the value

    # Convert the cookies returned by the login page into a dict
    login_cookies = response.cookies.get_dict()

    # These two lines extract and print the token value
    authenticity_token = re.findall('<input type="hidden" name="authenticity_token" value="(.*?)" />', response.text, re.S)[0]
    print(authenticity_token)

    # Step 2: send a POST request to the session url
    '''
    Request url:
        https://github.com/session
    Request method:
        POST
    Request headers:
        # Referer: where the previous request came from
        Referer: https://github.com/login
        cookie: ...
        User-Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.80 Safari/537.36
    Request body:
        Only POST requests carry a request body.
        commit: Sign in
        utf8: ✓
        authenticity_token: wmr6PCRaTeoztmV4N/ZVQrHHCCb41enoWn1eM135LkuLqW0G47wpSD6gFwxmH7AE87QB1lQ+rmpPYgUa6oqfKg==
        login: *****
        password: ****
        webauthn-support: supported
    '''
    # Build the request headers
    headers2 = {
        'Referer': 'https://github.com/login',
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.80 Safari/537.36'
    }

    # Build the request body
    form_data = {
        "commit": "Sign in",
        "utf8": "✓",
        "authenticity_token": authenticity_token,
        "login": "****",
        "password": "*******",
        "webauthn-support": "supported",
    }

    # Send the POST request to the session url,
    # carrying the headers, the body and the cookies from the login page
    response2 = requests.post(url='https://github.com/session', data=form_data, headers=headers2, cookies=login_cookies)
    print(response2.status_code)
    # print(response2.text)
    with open('github.html', 'w', encoding='utf-8') as f:
        f.write(response2.text)

The above covers POST requests with requests.
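The token-extraction step of the login flow above can be tried offline. The following is only a minimal sketch: `SAMPLE_LOGIN_HTML` is made-up markup (the real GitHub login page may look different), and `extract_token` and `build_form_data` are hypothetical helper names, not part of requests. The pattern assumes the `name` attribute appears before `value`, as in the script above.

```python
import re

# Hypothetical sample of the login form markup; the real page may differ.
SAMPLE_LOGIN_HTML = '''
<form action="/session" method="post">
  <input type="hidden" name="authenticity_token" value="abc123==" />
  <input type="text" name="login" />
</form>
'''

# Slightly more tolerant than the exact-match pattern in the script:
# other attributes may appear between name and value.
TOKEN_PATTERN = re.compile(
    r'<input[^>]*name="authenticity_token"[^>]*value="(.*?)"', re.S
)


def extract_token(html):
    """Return the first authenticity_token value, or None if it is missing."""
    match = TOKEN_PATTERN.search(html)
    return match.group(1) if match else None


def build_form_data(token, login, password):
    """Assemble the POST body with the same fields as the script above."""
    return {
        "commit": "Sign in",
        "utf8": "✓",
        "authenticity_token": token,
        "login": login,
        "password": password,
        "webauthn-support": "supported",
    }


token = extract_token(SAMPLE_LOGIN_HTML)
form_data = build_form_data(token, "user", "secret")
print(token)
```

Returning `None` instead of indexing `[0]` directly makes a changed page layout show up as a clear "token not found" case rather than an `IndexError`.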

    import requests

    response = requests.get('https://baidu.com')
    # The response object
    print(response.status_code)  # response status code
    print(response.url)  # final url
    print(response.encoding)  # character encoding
    response.encoding = 'utf-8'
    print(response.text)  # body as text
    print(response.content)  # body as bytes
    print(response.headers)  # response headers
    print(response.history)  # redirect history (the responses that led here)
    # cookies can be read as a cookies object or as a dict
    print(response.cookies)  # cookies object
    print(response.cookies.get_dict())  # cookies converted to a dict
    print(response.cookies.items())  # cookies as a list of (name, value) pairs
    print(response.encoding)
    print(response.elapsed)  # time taken by the request

    import requests

    # Send a GET request to the video address
    url = 'https://vd3.bdstatic.com/mda-ic4pfhh3ex32svqi/hd/mda-ic4pfhh3ex32svqi.mp4?auth_key=1557973824-0-0-bfb2e69bb5198ff65e18065d91b2b8c8&bcevod_channel=searchbox_feed&pd=wisenatural&abtest=all.mp4'
    response = requests.get(url, stream=True)
    # stream=True defers downloading the body until it is iterated over.
    # Accessing response.content here would read the whole body into memory
    # and defeat the purpose of streaming, so the print is left commented out.
    # print(response.content)
    with open('love_for_GD.mp4', 'wb') as f:
        for content in response.iter_content():
            f.write(content)

The above covers the response object.
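By default `iter_content()` yields the body one byte at a time, which is slow for a video file. The sketch below writes a streamed body in larger chunks. `save_stream` is a hypothetical helper, and `FakeResponse` is a made-up stand-in for `requests.Response` so the example runs without network access; with a real response from `requests.get(url, stream=True)` the helper would be called the same way.

```python
import io


def save_stream(response, fileobj, chunk_size=8192):
    """Write a streamed response to fileobj chunk by chunk; return bytes written."""
    written = 0
    for chunk in response.iter_content(chunk_size=chunk_size):
        if chunk:  # skip empty chunks
            fileobj.write(chunk)
            written += len(chunk)
    return written


class FakeResponse:
    """Hypothetical stand-in for requests.Response, used only for this offline demo."""

    def __init__(self, payload):
        self._payload = payload

    def iter_content(self, chunk_size=1):
        # Yield the payload in slices of chunk_size bytes
        for start in range(0, len(self._payload), chunk_size):
            yield self._payload[start:start + chunk_size]


buffer = io.BytesIO()
total = save_stream(FakeResponse(b"x" * 20000), buffer)
print(total)
```

With a real download, `fileobj` would be a file opened with `open('love_for_GD.mp4', 'wb')`, as in the script above.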

    '''
    Certificate verification (most sites use https)
    '''
    import requests
    # # For an SSL request the certificate is checked first; if it is invalid an error is raised and the program terminates
    # response = requests.get('https://www.xiaohuar.com')
    # print(response.status_code)

    # Improvement 1: no error, but a warning is printed
    # import requests
    # response = requests.get('https://www.xiaohuar.com', verify=False)
    # # Certificate not verified, warning printed, returns 200
    # print(response.status_code)

    # Improvement 2: no error and no warning
    # import requests
    # import urllib3
    # urllib3.disable_warnings()  # turn off warnings
    # response = requests.get('https://www.xiaohuar.com', verify=False)
    # print(response.status_code)

    # Improvement 3: supply a certificate
    # Many sites use https but can be accessed without a client certificate; in most cases it is optional
    # Zhihu, Baidu and similar sites work with or without one
    # Where it is a hard requirement it must be supplied, e.g. for selected users who can only access a specific site after obtaining a certificate
    # import requests
    # import urllib3
    # # urllib3.disable_warnings()  # turn off warnings
    # # Pseudocode
    # response = requests.get(
    #     'https://www.xiaohuar.com',
    #     # verify=False,
    #     # /path/server.crt is where the certificate is stored, /path/key is the key
    #     cert=('/path/server.crt', '/path/key'))
    # print(response.status_code)

    '''
    Timeout settings
    '''
    # Timeout settings
    # Two forms: float or tuple
    # timeout=0.1  # applies to both the connect timeout and the read timeout
    # timeout=(0.1,0.2)  # 0.1 is the connect timeout, 0.2 is the read timeout

    # import requests
    # response = requests.get('https://www.baidu.com',
    #                         timeout=0.0001)
    # # print(response.elapsed)
    # print(response.status_code)

    '''
    Proxy settings: the request is sent to the proxy, which forwards it (IP bans are common)
    '''
    # import requests
    # proxies={
    #     # Proxy with username and password; they go before the @ sign
    #     # (a dict keeps only one value per key, so use only one of the two 'http' entries)
    #     'http':'http://tank:123@localhost:9527',
    #     'http':'http://localhost:9527',
    #     'https':'https://localhost:9527',
    # }
    # response=requests.get('https://www.12306.cn',
    #                       proxies=proxies)
    #
    # print(response.status_code)

    '''
    Crawling the Xici free proxy list:
        1. Request the Xici free proxy pages
        2. Parse the pages with the re module and extract all proxies
        3. Test each crawled proxy against an IP test site
        4. If test_ip raises an exception the proxy is dead, otherwise it works
        5. Use the working proxy for a real request

    <tr class="odd">
          <td class="country"><img src="//fs.xicidaili.com/images/flag/cn.png" alt="Cn"></td>
          <td>112.85.131.99</td>
          <td>9999</td>
          <td>
            <a href="/2019-05-09/jiangsu">江苏南通</a>
          </td>
          <td class="country">高匿</td>
          <td>HTTPS</td>
          <td class="country">
            <div title="0.144秒" class="bar">
              <div class="bar_inner fast" style="width:88%">
              </div>
            </div>
          </td>
          <td class="country">
            <div title="0.028秒" class="bar">
              <div class="bar_inner fast" style="width:97%">
              </div>
            </div>
          </td>
          <td>6天</td>
          <td>19-05-16 11:20</td>
    </tr>
    re:
        <tr class="odd">(.*?)</td>.*?<td>(.*?)</td>
    '''
    # import requests
    # import re
    # import time
    #
    # HEADERS = {
    #     'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.77 Safari/537.36',
    # }
    #
    #
    # def get_index(url):
    #     time.sleep(1)
    #     response = requests.get(url, headers=HEADERS)
    #     return response
    #
    #
    # def parse_index(text):
    #     ip_list = re.findall('<tr class="odd">.*?<td>(.*?)</td>.*?<td>(.*?)</td>', text, re.S)
    #     for ip_port in ip_list:
    #         ip = ':'.join(ip_port)
    #         yield ip
    #
    # def test_ip(ip):
    #     print('Testing ip: %s' % ip)
    #     try:
    #         proxies = {
    #             'https': ip
    #         }
    #
    #         # IP test site
    #         ip_url = 'https://www.ipip.net/'
    #
    #         # Request the test site through the proxy; a 200 response means the proxy works
    #         response = requests.get(ip_url, headers=HEADERS, proxies=proxies, timeout=1)
    #
    #         if response.status_code == 200:
    #             print(f'Working ip: {ip}')
    #             return ip
    #
    #     # An invalid proxy raises an exception
    #     except Exception as e:
    #         print(e)
    #
    # # Crawl the NBA site through a proxy
    # def spider_nba(good_ip):
    #     url = 'https://china.nba.com/'
    #
    #     proxies = {
    #         'https': good_ip
    #     }
    #
    #     response = requests.get(url, headers=HEADERS, proxies=proxies)
    #     print(response.status_code)
    #     print(response.text)
    #
    #
    # if __name__ == '__main__':
    #     base_url = 'https://www.xicidaili.com/nn/{}'
    #
    #     for line in range(1, 3677):
    #         ip_url = base_url.format(line)
    #
    #         response = get_index(ip_url)
    #
    #         # Parse the Xici page to get the proxies on it
    #         ip_list = parse_index(response.text)
    #
    #         # Loop over every ip
    #         for ip in ip_list:
    #             # print(ip)
    #
    #             # Test the crawled ip
    #             good_ip = test_ip(ip)
    #
    #             if good_ip:
    #                 # Working proxy, start the real request
    #                 spider_nba(good_ip)

    '''
    Authentication settings
    '''
    import requests
    # Tested against the github api
    url = 'https://api.github.com/user'
    HEADERS = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.77 Safari/537.36',
    }

    # Test 1: fails and returns 401
    # response = requests.get(url, headers=HEADERS)
    # print(response.status_code)  # 401
    # print(response.text)
    '''
    Printed result:
        {
          "message": "Requires authentication",
          "documentation_url": "https://developer.github.com/v3/users/#get-the-authenticated-user"
        }
    '''
    #
    # # Test 2: authenticate with HTTPBasicAuth from requests.auth; on success the user info is returned
    # from requests.auth import HTTPBasicAuth
    # response = requests.get(url, headers=HEADERS, auth=HTTPBasicAuth('tankjam', 'kermit46709394'))
    # print(response.text)
    #

    # Test 3: a tuple passed as the auth parameter of requests.get is treated as HTTPBasicAuth; on success the user info is returned
    # response = requests.get(url, headers=HEADERS, auth=('tankjam', 'kermit46709394'))
    # print(response.text)

    '''
    Uploading files
    '''
    import requests

    # Upload a text file
    # files1 = {'file': open('user.txt', 'rb')}
    # # files is the POST parameter used for file uploads
    # response = requests.post('http://httpbin.org/post', files=files1)
    # print(response.status_code)  # 200
    # print(response.text)  # response body

    # Upload an image file
    # files2 = {'jpg': open('一拳.jpg', 'rb')}
    # response = requests.post('http://httpbin.org/post', files=files2)
    # print(response.status_code)  # 200
    # print(response.text)  # response body
    #
    # Upload a video file
    # files3 = {'movie': open('love_for_GD.mp4', 'rb')}
    # response = requests.post('http://httpbin.org/post', files=files3)
    # print(response.status_code)  # 200
    # print(response.text)  # response body

The above covers advanced usage of requests.
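The proxy and timeout settings above can be combined into two small helpers. This is only a sketch under stated assumptions: `SAMPLE_HTML` is made-up markup modelled on the kuaidaili-style cells used in the homework, and `parse_proxies` and `build_proxies` are hypothetical names. The proxy URL is given an explicit scheme, which is the form the requests documentation asks for; the homework passes a bare `ip:port` string instead.

```python
import re

# Hypothetical markup modelled on the proxy table cells used in the homework.
SAMPLE_HTML = '''
<tr><td data-title="IP">112.85.131.99</td><td data-title="PORT">9999</td></tr>
<tr><td data-title="IP">10.0.0.1</td><td data-title="PORT">8080</td></tr>
'''

ROW_PATTERN = re.compile(
    r'<td data-title="IP">(.*?)</td>.*?<td data-title="PORT">(.*?)</td>', re.S
)


def parse_proxies(html):
    """Return every proxy on the page as an 'ip:port' string."""
    return [f"{ip.strip()}:{port.strip()}" for ip, port in ROW_PATTERN.findall(html)]


def build_proxies(address, scheme="http"):
    """Build a proxies dict for requests, with an explicit scheme on the proxy URL."""
    proxy_url = f"{scheme}://{address}"
    return {"http": proxy_url, "https": proxy_url}


# (connect timeout, read timeout) in seconds; the values are an arbitrary choice
TIMEOUT = (3.05, 10)

addresses = parse_proxies(SAMPLE_HTML)
proxies = build_proxies(addresses[0])
print(addresses)
print(proxies)
```

A request through the first proxy would then look like `requests.get(url, proxies=proxies, timeout=TIMEOUT)`.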

    '''
    The selenium module

    1. What is selenium?
        It started out as an automated testing tool; it drives a browser to carry out
        predefined operations automatically, for example executing js code in a page
        or getting past login verification. It can also be used for crawling.

    2. Why use selenium?
        1. Advantage:
            Logging in with the requests module means analysing a lot of complex
            request traffic; with selenium the login verification is handled by the browser.
        2. Disadvantage:
            The browser loads css, js, images, video and so on, so crawling is
            less efficient than with the requests module.

    3. How to use selenium?
        Install the selenium module:
            pip3 install -i https://pypi.tuna.tsinghua.edu.cn/simple selenium
        Download the browser driver:
            http://npm.taobao.org/mirrors/chromedriver/2.38/
    '''
    # First selenium example
    from selenium import webdriver  # drives the browser
    from selenium.webdriver import ActionChains  # builds an action chain, e.g. for dragging images in slider captchas
    from selenium.webdriver.common.by import By  # lookup strategy: By.ID, By.CSS_SELECTOR
    from selenium.webdriver.common.keys import Keys  # keyboard keys
    from selenium.webdriver.support import expected_conditions as EC  # used together with WebDriverWait below; EC is an alias for expected_conditions
    from selenium.webdriver.support.wait import WebDriverWait  # waits for elements to load
    import time

    # Open Chrome through the Chrome driver
    # webdriver.Chrome(r'path to chromedriver.exe')
    # chrome = webdriver.Chrome(r'C:\Program Files\JetBrains\chromedriver_win32\chromedriver.exe')  # absolute path to chromedriver.exe in the parentheses
    # Here chromedriver.exe is stored in the Scripts folder of the python interpreter
    chrome = webdriver.Chrome()

    # If an exception occurs inside try
    try:
        # Send a GET request to my own blog home page
        #chrome.get(*****')

        # Argument 1: driver object  argument 2: wait time in seconds
        wait = WebDriverWait(chrome, 10)

        # 1. Open Baidu
        chrome.get('http://www.baidu.com/')

        # 2. Find the input box
        input_tag = wait.until(
            # Call presence_of_element_located() from EC
            EC.presence_of_element_located(
                # Takes a tuple
                # Item 1: the lookup strategy
                # Item 2: the attribute value
                (By.ID, 'kw')
            )
        )

        # 3. Search for 一拳超人 (One-Punch Man)
        input_tag.send_keys('一拳超人')
        # Press the Enter key
        input_tag.send_keys(Keys.ENTER)
        time.sleep(3)

    # The browser is closed whatever happens
    finally:
        # Close the browser
        chrome.close()

    # Example 2:
    from selenium import webdriver  # drives the browser
    from selenium.webdriver import ActionChains  # builds an action chain, e.g. for dragging images in slider captchas
    from selenium.webdriver.common.by import By  # lookup strategy: By.ID, By.CSS_SELECTOR
    from selenium.webdriver.common.keys import Keys  # keyboard keys
    from selenium.webdriver.support import expected_conditions as EC  # used together with WebDriverWait below; EC is an alias for expected_conditions
    from selenium.webdriver.support.wait import WebDriverWait  # waits for elements to load
    import time

    # Open Chrome through the Chrome driver
    # webdriver.Chrome(r'path to chromedriver.exe')
    # chrome = webdriver.Chrome(r'C:\Program Files\JetBrains\chromedriver_win32\chromedriver.exe')  # absolute path to chromedriver.exe in the parentheses
    # Here chromedriver.exe is stored in the Scripts folder of the python interpreter
    chrome = webdriver.Chrome()

    # If an exception occurs inside try
    try:
        # Send a GET request to my own blog home page
        #chrome.get(******')

        # Argument 1: driver object  argument 2: wait time in seconds
        wait = WebDriverWait(chrome, 10)

        # 1. Open the JD home page
        chrome.get('http://www.jd.com/')

        # 2. Find the input box
        input_tag = wait.until(EC.presence_of_element_located((By.ID, 'key')))

        # 3. Search for 唐诗三百首 (Three Hundred Tang Poems)
        input_tag.send_keys('唐诗三百首')

        # 4. Find the search button by its class attribute
        search_button = wait.until(
            EC.presence_of_element_located((By.CLASS_NAME, 'button'))
        )

        # 5. Click the search button
        search_button.click()
        time.sleep(3)

    # The browser is closed whatever happens
    finally:
        # Close the browser
        chrome.close()

The above covers basic usage of selenium.

    from selenium import webdriver  # drives the browser
    import time

    # '''
    # Implicit wait
    # '''
    # # Get the driver object
    # driver = webdriver.Chrome()
    #
    # try:
    #     # Explicit wait: waits for one specific element to load
    #     # Argument 1: driver object  argument 2: wait time in seconds
    #     # wait = WebDriverWait(chrome, 10)
    #
    #     driver.get('https://china.nba.com/')
    #
    #     # Implicit wait: every element lookup retries for up to 10 seconds
    #     driver.implicitly_wait(10)
    #     news_tag = driver.find_element_by_class_name('nav-news')
    #     # The element object
    #     print(news_tag)
    #     # The tag name of the element
    #     print(news_tag.tag_name)
    #
    #     time.sleep(10)
    #
    # finally:
    #     driver.close()

    # from selenium import webdriver  # drives the browser
    # import time

    '''
    ===============All methods===================
        element finds one tag
        elements finds all matching tags

        1. find_element_by_link_text  find by link text
        2. find_element_by_id  find by id
        3. find_element_by_class_name
        4. find_element_by_partial_link_text
        5. find_element_by_name
        6. find_element_by_css_selector
        7. find_element_by_tag_name
    '''
    # Get the driver object
    driver = webdriver.Chrome()

    try:
        # Send a request to Baidu
        driver.get('https://www.baidu.com/')
        driver.implicitly_wait(10)

        # 1. find_element_by_link_text: find by link text
        # Find the link whose text is '登录' (log in)
        # send_tag = driver.find_element_by_link_text('登录')
        # send_tag.click()

        # 2. find_element_by_partial_link_text: find an a tag by part of its text
        login_button = driver.find_element_by_partial_link_text('登')
        login_button.click()
        time.sleep(1)

        # 3. find_element_by_class_name: find by class attribute
        login_tag = driver.find_element_by_class_name('tang-pass-footerBarULogin')
        login_tag.click()
        time.sleep(1)

        # 4. find_element_by_name: find by name attribute
        username = driver.find_element_by_name('userName')
        username.send_keys('*****')
        time.sleep(1)

        # 5. find_element_by_id: find by id attribute
        password = driver.find_element_by_id('TANGRAM__PSP_10__password')
        password.send_keys('******j')
        time.sleep(1)

        # 6. find_element_by_css_selector: find by css selector
        # Find the login button by its id
        login_submit = driver.find_element_by_css_selector('#TANGRAM__PSP_10__submit')
        # driver.find_element_by_css_selector('.pass-button-submit')
        login_submit.click()

        # 7. find_element_by_tag_name: find by tag name
        div = driver.find_element_by_tag_name('div')
        print(div.tag_name)

        time.sleep(10)

    finally:
        driver.close()

The above covers the basic selectors in selenium.
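The `find_element_by_*` helpers used above belong to the Selenium 3 API; as far as I know, newer Selenium 4 releases drop them in favour of `driver.find_element(By.ID, ...)` and friends. The sketch below maps each old helper name to its locator strategy without importing selenium, so it runs anywhere. `LOCATOR_STRATEGIES` and `locator_for` are hypothetical names; the strategy strings are the values of the corresponding `By` constants (`By.ID` is `"id"`, `By.CLASS_NAME` is `"class name"`, and so on).

```python
# Old Selenium 3 helper name -> locator strategy string (the value of the By constant)
LOCATOR_STRATEGIES = {
    "find_element_by_link_text": "link text",                   # By.LINK_TEXT
    "find_element_by_id": "id",                                 # By.ID
    "find_element_by_class_name": "class name",                 # By.CLASS_NAME
    "find_element_by_partial_link_text": "partial link text",   # By.PARTIAL_LINK_TEXT
    "find_element_by_name": "name",                             # By.NAME
    "find_element_by_css_selector": "css selector",             # By.CSS_SELECTOR
    "find_element_by_tag_name": "tag name",                     # By.TAG_NAME
}


def locator_for(old_method, value):
    """Return a (strategy, value) tuple for the given old helper name."""
    if old_method not in LOCATOR_STRATEGIES:
        raise ValueError(f"unknown helper: {old_method}")
    return (LOCATOR_STRATEGIES[old_method], value)


locator = locator_for("find_element_by_id", "TANGRAM__PSP_10__password")
print(locator)
```

With a live driver, the tuple would be unpacked into the generic call, for example `driver.find_element(*locator)`, or passed as is to `EC.presence_of_element_located(locator)`.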

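This internship session was considerably harder than the previous ones. I think I should spend more time studying and, after class, work through the deeper material on my own instead of only copying the teacher's code; otherwise I will never really learn Python.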

Reflections on learning Python crawling and testing


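This post records Python homework covering POST requests with requests, the response object, advanced requests usage, and selenium basics and selectors. The author notes that this internship session was harder than before and that copying the teacher's code is not enough; deeper study is needed to really master Python.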
