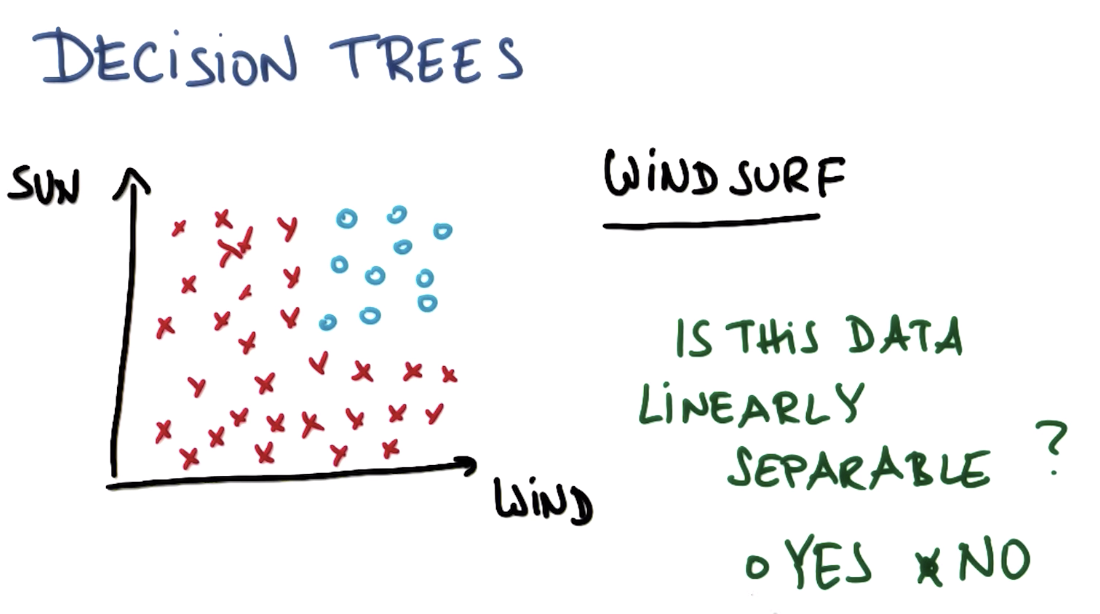

Linearly Separable Data

Multiple Linear Questions

Constructing a Decision Tree First Split

Coding A Decision Tree

Decision Tree Parameters

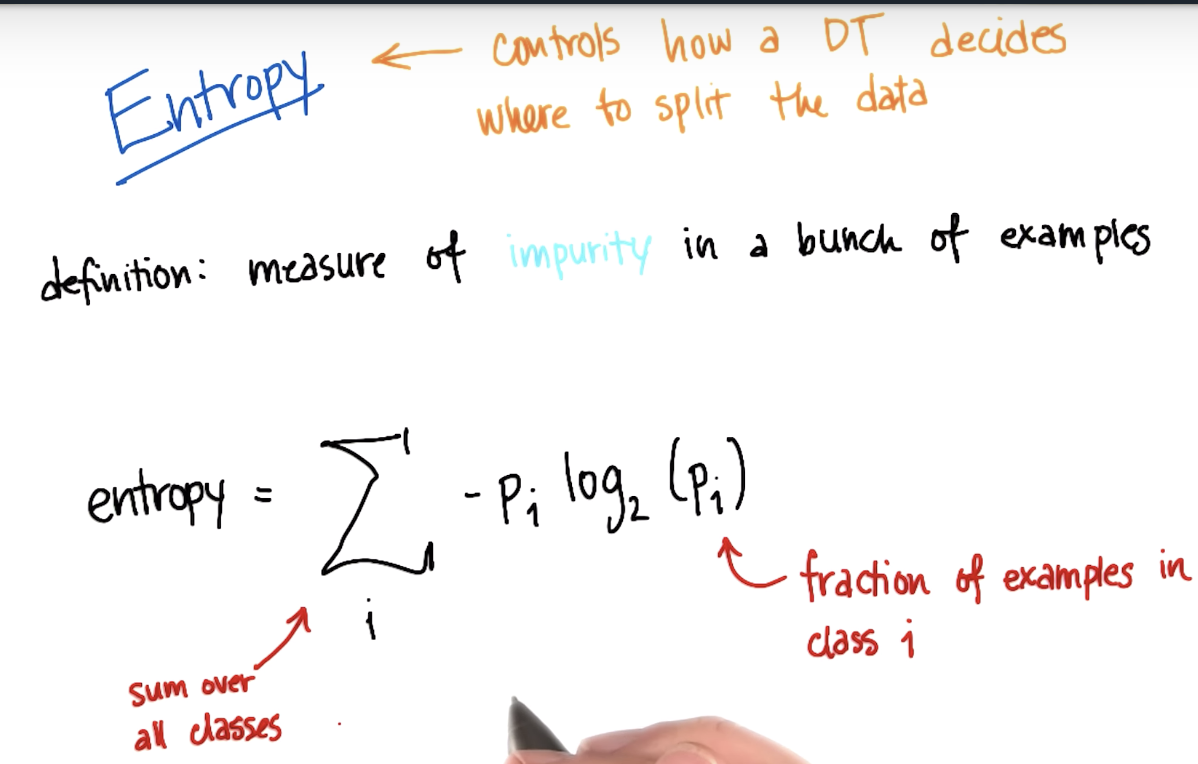

Data Impurity and Entropy

Formula of Entropy

The entropy formula written on this slide contains an error: there should be a negative sign (-) preceding the sum:

$$\text{Entropy} = -\sum_i p_i \log_2(p_i)$$
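To make the corrected formula concrete, here is a minimal Python sketch that computes entropy from a list of class labels. The function name `entropy` and the sample labels are illustrative assumptions, not part of the course starter code.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy, in bits, of a sequence of class labels."""
    total = len(labels)
    if total == 0:
        return 0.0
    # p_i is the fraction of samples that belong to class i.
    probabilities = [count / total for count in Counter(labels).values()]
    # The minus sign is the one missing from the slide. Classes with zero
    # samples never appear in the Counter, so log2(0) is never evaluated.
    return sum(-p * math.log2(p) for p in probabilities)


# Hypothetical labels: an evenly mixed node and a pure node.
mixed = ["slow", "slow", "fast", "fast"]
pure = ["fast", "fast", "fast"]
print(entropy(mixed))  # 1.0
print(entropy(pure))   # 0.0
```

An evenly split two-class node has the maximum entropy of 1 bit, while a node containing a single class has entropy 0.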

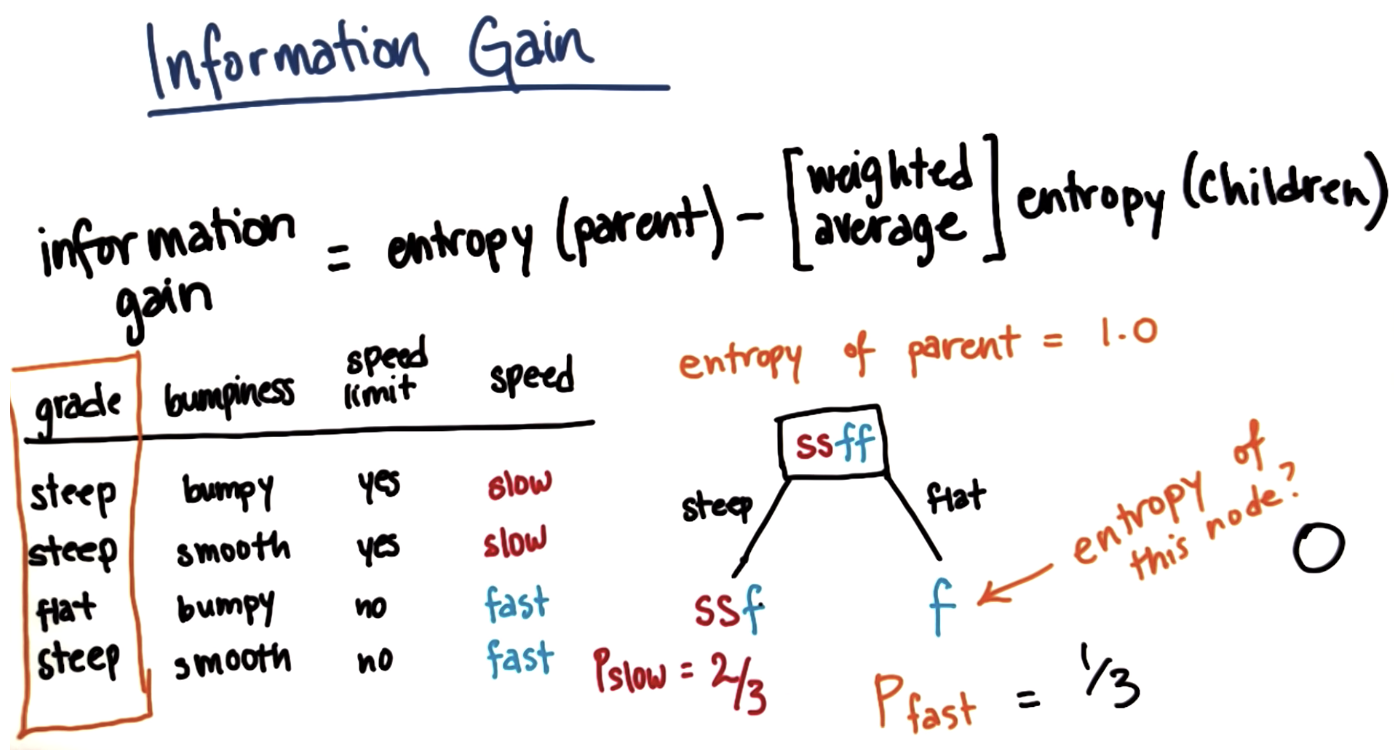

Information gain: IG = 1
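Information gain is the entropy of the parent node minus the weighted average entropy of its children. The sketch below shows how a split can reach the value 1, assuming a hypothetical four-sample parent that a split divides into two pure children. The helper names and the data are assumptions for illustration, not values taken from the slide.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy, in bits, of a sequence of class labels."""
    total = len(labels)
    if total == 0:
        return 0.0
    probabilities = [count / total for count in Counter(labels).values()]
    return sum(-p * math.log2(p) for p in probabilities)


def information_gain(parent, children):
    """Parent entropy minus the size-weighted average entropy of the children."""
    total = len(parent)
    weighted_child_entropy = sum(
        (len(child) / total) * entropy(child) for child in children
    )
    return entropy(parent) - weighted_child_entropy


# Hypothetical parent node with two samples of each class.
parent = ["slow", "slow", "fast", "fast"]

# A split that separates the classes perfectly removes all impurity.
perfect_split = [["slow", "slow"], ["fast", "fast"]]
print(information_gain(parent, perfect_split))  # 1.0

# A split that leaves both children as mixed as the parent gains nothing.
useless_split = [["slow", "fast"], ["slow", "fast"]]
print(information_gain(parent, useless_split))  # 0.0
```

A decision tree prefers the split with the highest information gain, so the perfect split would be chosen here.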

Tuning Criterion Parameter

Gini impurity is another measure of node impurity and can be used as the split criterion in place of entropy.
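For comparison with entropy, Gini impurity is defined as one minus the sum of the squared class proportions. The following is a minimal sketch with an assumed function name and made-up labels. In scikit-learn, the `criterion` parameter of `DecisionTreeClassifier` selects between `"gini"` and `"entropy"`.

```python
from collections import Counter


def gini_impurity(labels):
    """Gini impurity of a sequence of class labels: 1 - sum(p_i ** 2)."""
    total = len(labels)
    if total == 0:
        return 0.0
    # p_i is the fraction of samples that belong to class i.
    probabilities = [count / total for count in Counter(labels).values()]
    return 1.0 - sum(p * p for p in probabilities)


# Hypothetical labels: an evenly mixed node and a pure node.
print(gini_impurity(["slow", "slow", "fast", "fast"]))  # 0.5
print(gini_impurity(["fast", "fast", "fast"]))          # 0.0
```

Like entropy, Gini impurity is 0 for a pure node and largest for an evenly mixed one, but its two-class maximum is 0.5 rather than 1.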

Decision Tree Mini-Project

In this project, we will again try to identify the authors in a body of emails, this time using a decision tree. The starter code is in decision_tree/dt_author_id.py.

Get the data for this mini project from here.

Once again, you'll do the mini-project on your own computer and enter your answers in the web browser. You can find the instructions for the decision tree mini-project here.

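This article explores the core concepts of the decision tree algorithm, including linearly separable data, decision tree construction, and key metrics such as entropy and Gini impurity, and uses a concrete email author identification project to show the workflow of applying a decision tree in practice.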
