#导入os包加载数据目录

import os

path = r'E:\dzy'

#停词库

with open(r'e:\\stopsCN.txt', encoding='utf-8') as f:

stopwords = f.read().split('\n')

#对数据进行标准编码处理(encoding='utf-8')

import codecs

import jieba

#存放文件名

filePaths = []

#存放读取的数据

fileContents = []

#存放文件类型

fileClasses = []

#进行遍历实现转码读取处理并对每条新闻进行切分

for root, dirs, files in os.walk(path):

for name in files:

filePath = os.path.join(root, name)

filePaths.append(filePath)

fileClasses.append(filePath.split('\\')[2])

f = codecs.open(filePath, 'r', 'utf-8')

fileContent = f.read()

fileContent = fileContent.replace('\n','')

tokens = [token for token in jieba.cut(fileContent)]

tokens = " ".join([token for token in tokens if token not in stopwords])

f.close()

fileContents.append(tokens)

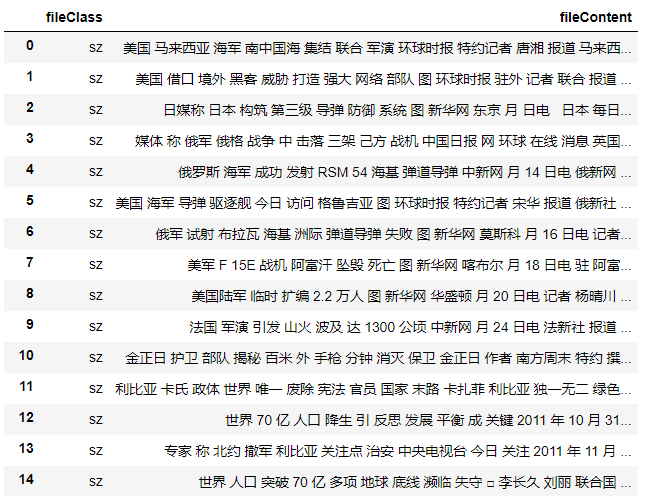

import pandas;

all_datas = pandas.DataFrame({

'fileClass': fileClasses,

'fileContent': fileContents

})

print(all_datas)

str=''

for i in range(len(fileContents)):

str+=fileContents[i]

#TF-IDF算法

#统计词频

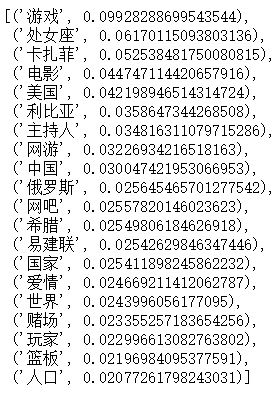

import jieba.analyse

keywords = jieba.analyse.extract_tags(str, topK=20, withWeight=True, allowPOS=('n','nr','ns'))

print(keywords )

from sklearn.model_selection import train_test_split

x_train,x_test,y_train,y_test = train_test_split(fileContents,fileClasses,test_size=0.3,random_state=0,stratify=fileClasses)

vectorizer = TfidfVectorizer()

X_train = vectorizer.fit_transform(x_train)

X_test = vectorizer.transform(x_test)

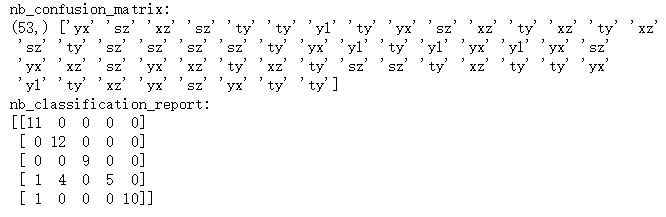

from sklearn.naive_bayes import MultinomialNB

clf= MultinomialNB().fit(X_train,y_train)

y_nb_pred=clf.predict(X_test)

#分类结果显示

from sklearn.metrics import confusion_matrix

from sklearn.metrics import classification_report

print('nb_confusion_matrix:')

print(y_nb_pred.shape,y_nb_pred)#x_test预测结果

cm=confusion_matrix(y_test,y_nb_pred)#混淆矩阵

print('nb_classification_report:')

print(cm)

本文介绍了一种使用TF-IDF算法和朴素贝叶斯分类器进行中文文本分类的方法。通过对E盘上的数据集进行预处理,包括利用jieba进行分词、去除停用词等步骤,然后应用TF-IDF算法提取关键词,并使用朴素贝叶斯分类器进行文本分类,最后评估了分类器的性能。

本文介绍了一种使用TF-IDF算法和朴素贝叶斯分类器进行中文文本分类的方法。通过对E盘上的数据集进行预处理,包括利用jieba进行分词、去除停用词等步骤,然后应用TF-IDF算法提取关键词,并使用朴素贝叶斯分类器进行文本分类,最后评估了分类器的性能。

2003

2003

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?