0. Overview

What is a language model?

A time series prediction problem.

It assigns a probability to a sequence of words, and the probabilities of all possible sequences sum to one.

Many Natural Language Processing tasks can be structured as (conditional) language modelling.

For example, translation:

P(certain Chinese text | given English text)

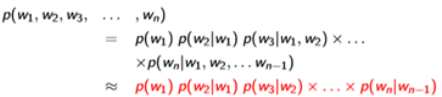

Note that the joint probability is decomposed with the chain rule of probability (the product rule that also underlies Bayes' formula).

How do we evaluate a language model?

It is measured with cross entropy on held-out text.
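A minimal sketch of the measurement, assuming the model exposes the probability it assigned to each word of a held-out text (the list `word_probs` is made up for illustration). Cross entropy is the average negative log2 probability per word; perplexity, a commonly reported companion metric that these notes do not mention, is 2 raised to that value.

```python
import math

def cross_entropy(word_probs):
    """Average negative log2-probability per word (bits per word)."""
    return -sum(math.log2(p) for p in word_probs) / len(word_probs)

def perplexity(word_probs):
    """Perplexity is 2 raised to the cross entropy."""
    return 2 ** cross_entropy(word_probs)

# Hypothetical probabilities a model assigned to four held-out words.
word_probs = [0.25, 0.5, 0.125, 0.25]
H = cross_entropy(word_probs)
PPL = perplexity(word_probs)
```

A lower cross entropy means the model was less surprised by the held-out text.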

Three data sets:

1 Penn Treebank: www.fit.vutbr.cz/~imikolov/rnnlm/simple-examples.tgz

2 Billion Word Corpus: code.google.com/p/1-billion-word-language-modeling-benchmark/

3 WikiText datasets: Pointer Sentinel Mixture Models. Merity et al., arXiv 2016

Overview: Three approaches to building language models:

Count-based n-gram models: approximate the history of observed words with just the previous n words.

Neural n-gram models: embed the same fixed n-gram history in a continuous space and thus better capture correlations between histories.

Recurrent Neural Networks: drop the fixed n-gram history and compress the entire history into a fixed-length vector, enabling long-range correlations to be captured.

1. N-Gram models:

Assumption:

Only previous history matters.

Only the last k-1 words are included in the history.

This gives a kth-order Markov model.

2-gram language model:

The conditioning context, w_{i−1}, is called the history.
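The equation for this step appears to be missing from the notes. Under the Markov assumption described above, the standard 2-gram factorisation would be written as follows (a reconstruction from the surrounding text, with N assumed to be the sequence length):

```latex
p(w_1, w_2, \ldots, w_N) \approx p(w_1) \prod_{i=2}^{N} p(w_i \mid w_{i-1})
```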

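Estimating probabilities:

(For example, a 3-gram model)

$p(w_3 \mid w_1, w_2) = \dfrac{\mathrm{count}(w_1, w_2, w_3)}{\mathrm{count}(w_1, w_2)}$

(count(w1, w2, w3) is the number of times the sequence w1, w2, w3 appears in the corpus, and count(w1, w2) is the number of times w1, w2 appears.)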
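The counting estimate can be sketched in a few lines of Python. This is an illustration on a toy corpus rather than a full implementation; the function names and the example sentence are made up here, and history counts are taken from the trigram prefixes so that the estimates for each history sum to one.

```python
from collections import Counter

def train_trigram_counts(tokens):
    """Count every trigram, and how often each two-word history starts one."""
    trigrams = Counter(zip(tokens, tokens[1:], tokens[2:]))
    histories = Counter()
    for (w1, w2, _), n in trigrams.items():
        histories[(w1, w2)] += n
    return trigrams, histories

def trigram_prob(w1, w2, w3, trigrams, histories):
    """Estimate p(w3 | w1, w2) = count(w1, w2, w3) / count(w1, w2)."""
    h = histories[(w1, w2)]
    if h == 0:
        return 0.0  # unseen history: no estimate is available
    return trigrams[(w1, w2, w3)] / h

tokens = "the cat sat on the mat the cat ran".split()
trigrams, histories = train_trigram_counts(tokens)
p_sat = trigram_prob("the", "cat", "sat", trigrams, histories)
p_mat = trigram_prob("the", "cat", "mat", trigrams, histories)
```

Here "the cat" is followed once by "sat" and once by "ran", so `p_sat` is 0.5, while the unseen continuation "the cat mat" gets probability zero.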

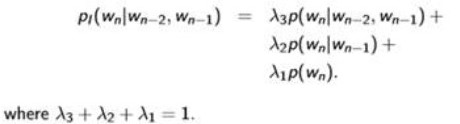

Interpolated Back-Off:

Some phrases never appear in the corpus, so the probability estimated for them is zero. To avoid this, we use interpolated back-off: the lower-order k-gram models (k = n-1, n-2, …, 1) are interpolated with the n-gram model.

A simple approach:
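The formula for the simple approach is not shown in the notes. One common choice is linear interpolation, where the trigram, bigram and unigram estimates are mixed with weights that sum to one. The sketch below assumes that choice; the weights `(0.6, 0.3, 0.1)`, the function name and the toy corpus are illustrative, not taken from the original.

```python
from collections import Counter

def interpolated_trigram_prob(w1, w2, w3, tokens, lambdas=(0.6, 0.3, 0.1)):
    """lambda3 * p(w3 | w1, w2) + lambda2 * p(w3 | w2) + lambda1 * p(w3)."""
    l3, l2, l1 = lambdas
    assert abs(l3 + l2 + l1 - 1.0) < 1e-9, "weights must sum to one"
    uni = Counter(tokens)
    bi = Counter(zip(tokens, tokens[1:]))
    tri = Counter(zip(tokens, tokens[1:], tokens[2:]))
    # Each estimate is a relative count; unseen histories contribute 0.
    p3 = tri[(w1, w2, w3)] / bi[(w1, w2)] if bi[(w1, w2)] else 0.0
    p2 = bi[(w2, w3)] / uni[w2] if uni[w2] else 0.0
    p1 = uni[w3] / len(tokens)
    return l3 * p3 + l2 * p2 + l1 * p1

tokens = "the cat sat on the mat the cat ran".split()
# "on the cat" never occurs as a trigram, but "the cat" and "cat" do.
p_unseen = interpolated_trigram_prob("on", "the", "cat", tokens)
```

The unseen trigram still receives a non-zero probability because the bigram and unigram terms contribute. In practice the weights would be tuned on held-out data.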

Summary for n-gram models:

Good: Easy to train and fast.

Bad: Large n-grams are sparse. It is hard to capture long dependencies. They cannot capture correlations between words with similar distributions. They cannot handle morphological regularities (e.g. running – jumping).

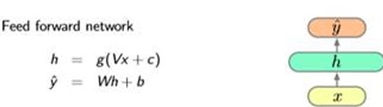

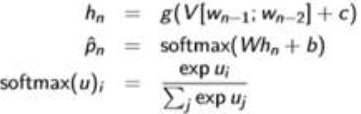

2. Neural N-Gram Language Models

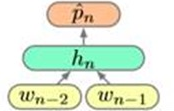

Use a feed-forward network such as:

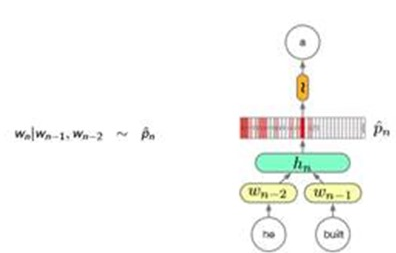

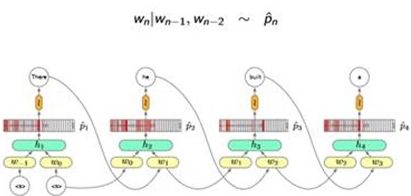

For example, a trigram (3-gram) neural network language model:

The w_i are one-hot vectors and the p_i are distributions. Both have size |V| (the number of words in the vocabulary).

(An example: the detailed computation graph)
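A minimal forward-pass sketch of such a trigram network in plain Python. The layer shapes, the tanh non-linearity and the parameter names `V`, `c`, `W`, `b` are assumptions made for illustration, since the model equations are only given as images; the toy sizes and random weights are likewise made up.

```python
import math
import random

def softmax(scores):
    m = max(scores)                      # subtract the max for stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def matvec(M, x):
    return [sum(m_ij * x_j for m_ij, x_j in zip(row, x)) for row in M]

def one_hot(index, size):
    return [1.0 if i == index else 0.0 for i in range(size)]

def rand_matrix(rows, cols):
    return [[random.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

def trigram_nnlm_forward(w_prev2, w_prev1, V, c, W, b):
    """h = tanh(V [w_{n-1}; w_{n-2}] + c),  p = softmax(W h + b)."""
    x = w_prev1 + w_prev2                # concatenate the two one-hot vectors
    h = [math.tanh(a + c_i) for a, c_i in zip(matvec(V, x), c)]
    return softmax([a + b_i for a, b_i in zip(matvec(W, h), b)])

random.seed(0)
vocab_size, hidden_size = 5, 3           # toy sizes
V = rand_matrix(hidden_size, 2 * vocab_size)
c = [0.0] * hidden_size
W = rand_matrix(vocab_size, hidden_size)
b = [0.0] * vocab_size
p = trigram_nnlm_forward(one_hot(1, vocab_size), one_hot(3, vocab_size), V, c, W, b)
```

The output `p` has one entry per vocabulary word and sums to one, which is what makes it usable as the next-word distribution.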

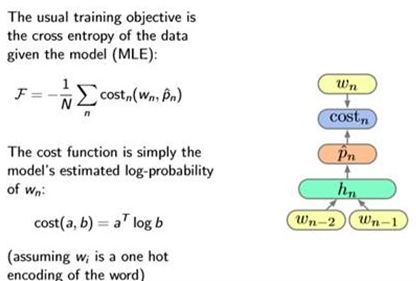

Define the loss as the cross entropy:

Training: use Gradient Descent
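To make the training step concrete, here is a deliberately reduced sketch: the model is only an output bias vector `b` passed through a softmax, so the gradient can be written by hand. It relies on the standard result that the gradient of the cross entropy with respect to the softmax inputs is `p - w`, where `w` is the one-hot target. The learning rate and sizes are arbitrary, and a full model would backpropagate this gradient through the hidden layer as well.

```python
import math

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(p, target):
    """Loss for a single prediction: -log of the target word's probability."""
    return -math.log(p[target])

def sgd_step(b, target, lr=0.5):
    """One gradient descent step on the bias-only model p = softmax(b)."""
    p = softmax(b)
    grad = [p_i - (1.0 if i == target else 0.0) for i, p_i in enumerate(p)]
    return [b_i - lr * g for b_i, g in zip(b, grad)]

b = [0.0, 0.0, 0.0, 0.0]     # uniform prediction over a 4-word vocabulary
target = 2                   # hypothetical index of the observed next word
loss_before = cross_entropy(softmax(b), target)
b = sgd_step(b, target)
loss_after = cross_entropy(softmax(b), target)
```

After one step the probability of the observed word rises, so `loss_after` is smaller than `loss_before`.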

And an example of training:

Comparison with count-based n-gram LMs:

Good: Better performance on unseen n-grams, but poorer on seen n-grams (solution: direct (linear) n-gram features). Uses less memory than count-based n-gram models.

Bad: The number of parameters in the model scales with the n-gram size. There is a limit on the longest dependencies that can be captured.
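A rough illustration of how the parameter count grows with n, assuming a single hidden layer fed by the concatenation of n-1 one-hot vectors (the same assumed architecture as in the trigram example; the sizes are made up):

```python
def nnlm_param_count(n, vocab_size, hidden_size):
    """Parameters of a one-hidden-layer n-gram network with one-hot inputs."""
    input_layer = hidden_size * (n - 1) * vocab_size + hidden_size
    output_layer = vocab_size * hidden_size + vocab_size
    return input_layer + output_layer

params_3gram = nnlm_param_count(3, vocab_size=10_000, hidden_size=100)
params_5gram = nnlm_param_count(5, vocab_size=10_000, hidden_size=100)
```

Only the input layer grows with n, but it grows linearly with each extra word of history.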

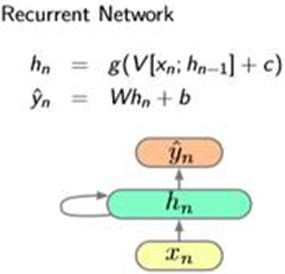

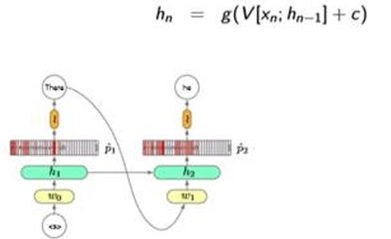

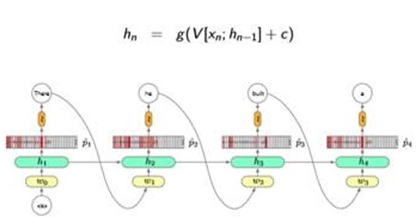

3. Recurrent Neural Network LM

That is, we use a recurrent neural network to build our LM.

Model and training:
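A minimal sketch of one recurrent step in plain Python, assuming a simple (Elman-style) RNN with a tanh hidden layer. The parameter names `V`, `c`, `W`, `b`, the toy sizes and the word ids are illustrative and not taken from the figures.

```python
import math
import random

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def matvec(M, x):
    return [sum(m_ij * x_j for m_ij, x_j in zip(row, x)) for row in M]

def rand_matrix(rows, cols):
    return [[random.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

def rnn_lm_step(x, h_prev, V, c, W, b):
    """h_n = tanh(V [x_n; h_{n-1}] + c),  p_n = softmax(W h_n + b)."""
    z = x + h_prev                       # concatenate input and previous state
    h = [math.tanh(a + c_i) for a, c_i in zip(matvec(V, z), c)]
    p = softmax([a + b_i for a, b_i in zip(matvec(W, h), b)])
    return h, p

random.seed(0)
vocab_size, hidden_size = 4, 3           # toy sizes
V = rand_matrix(hidden_size, vocab_size + hidden_size)
c = [0.0] * hidden_size
W = rand_matrix(vocab_size, hidden_size)
b = [0.0] * vocab_size

sentence = [0, 2, 1, 3]                  # hypothetical word ids
h = [0.0] * hidden_size                  # initial hidden state
total_loss = 0.0
for current, nxt in zip(sentence, sentence[1:]):
    x = [1.0 if i == current else 0.0 for i in range(vocab_size)]
    h, p = rnn_lm_step(x, h, V, c, W, b)
    total_loss += -math.log(p[nxt])      # cross entropy of the next word
```

The hidden state `h` keeps the same size however long the sentence is, which is how the whole history gets compressed into a fixed-length vector.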

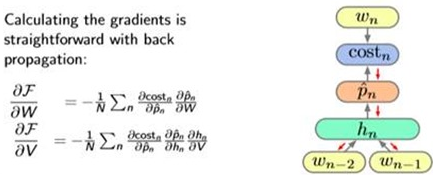

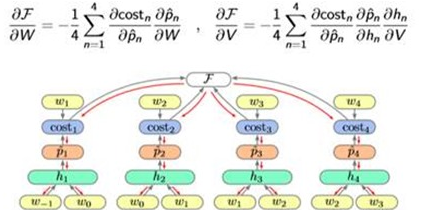

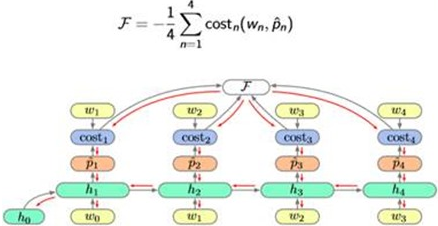

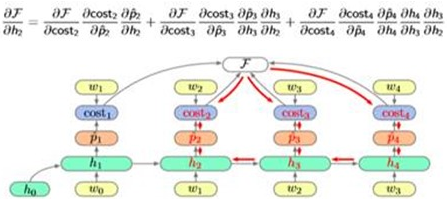

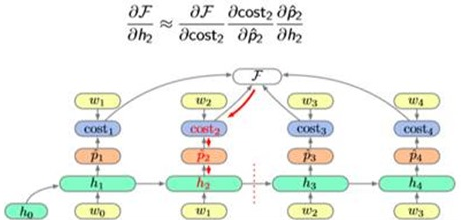

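Algorithm: Back Propagation Through Time (BPTT)

Note:

In BPTT the gradient at each time step depends heavily on the time steps after it, so the error must be propagated back through the whole sequence. The improved algorithm breaks these dependencies after a fixed number of time steps: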

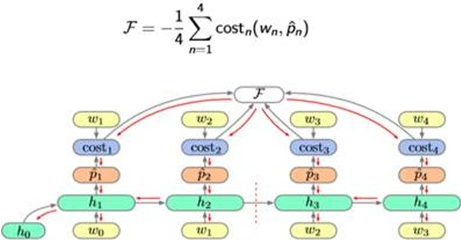

Algorithm: Truncated Back Propagation Through Time (TBPTT)

So the computation graph looks like this:

And the training process with gradient descent:
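The data side of truncation can be sketched as follows: the token sequence is cut into windows of a fixed number of time steps, and backpropagation would run only inside each window. The helper name `tbptt_windows` and the window length are hypothetical; the gradient computation itself is left out, since it would normally be handled by an automatic differentiation library.

```python
def tbptt_windows(token_ids, window):
    """Yield (inputs, targets) pairs covering at most `window` steps each."""
    last = len(token_ids) - 1            # the final token has no target
    for start in range(0, last, window):
        end = min(start + window, last)
        yield token_ids[start:end], token_ids[start + 1:end + 1]

token_ids = list(range(10))              # stand-in for a tokenised corpus
windows = list(tbptt_windows(token_ids, window=4))
```

In a training loop, the hidden state at the end of one window would typically be carried into the next window as a constant, so the forward pass still sees the full history while gradients stop at the window boundary.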

Summary of the Recurrent NN LMs:

Good:

RNNs can represent unbounded dependencies, unlike models with a fixed n-gram order.

RNNs compress histories of words into a fixed size hidden vector.

The number of parameters does not grow with the length of dependencies captured, but they do grow with the amount of information stored in the hidden layer.

Bad:

RNNs are hard to train and often will not discover long-range dependencies present in the data (which is why LSTM units are introduced).

Increasing the size of the hidden layer, and thus memory, increases the computation and memory quadratically.

Mostly trained with Maximum Likelihood based objectives which do not encode the expected frequencies of words a priori.

Some recommended blogs:

Andrej Karpathy: The Unreasonable Effectiveness of Recurrent Neural Networks. karpathy.github.io/2015/05/21/rnn-effectiveness/

Yoav Goldberg: The unreasonable effectiveness of Character-level Language Models. nbviewer.jupyter.org/gist/yoavg/d76121dfde2618422139

Stephen Merity: Explaining and illustrating orthogonal initialization for recurrent neural networks. smerity.com/articles/2016/orthogonal_init.html

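This article introduces three approaches to building language models: n-gram models, neural n-gram models, and recurrent neural network language models. It discusses in detail how each model works and its strengths and weaknesses, and compares their performance in different application scenarios.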
