Program code:

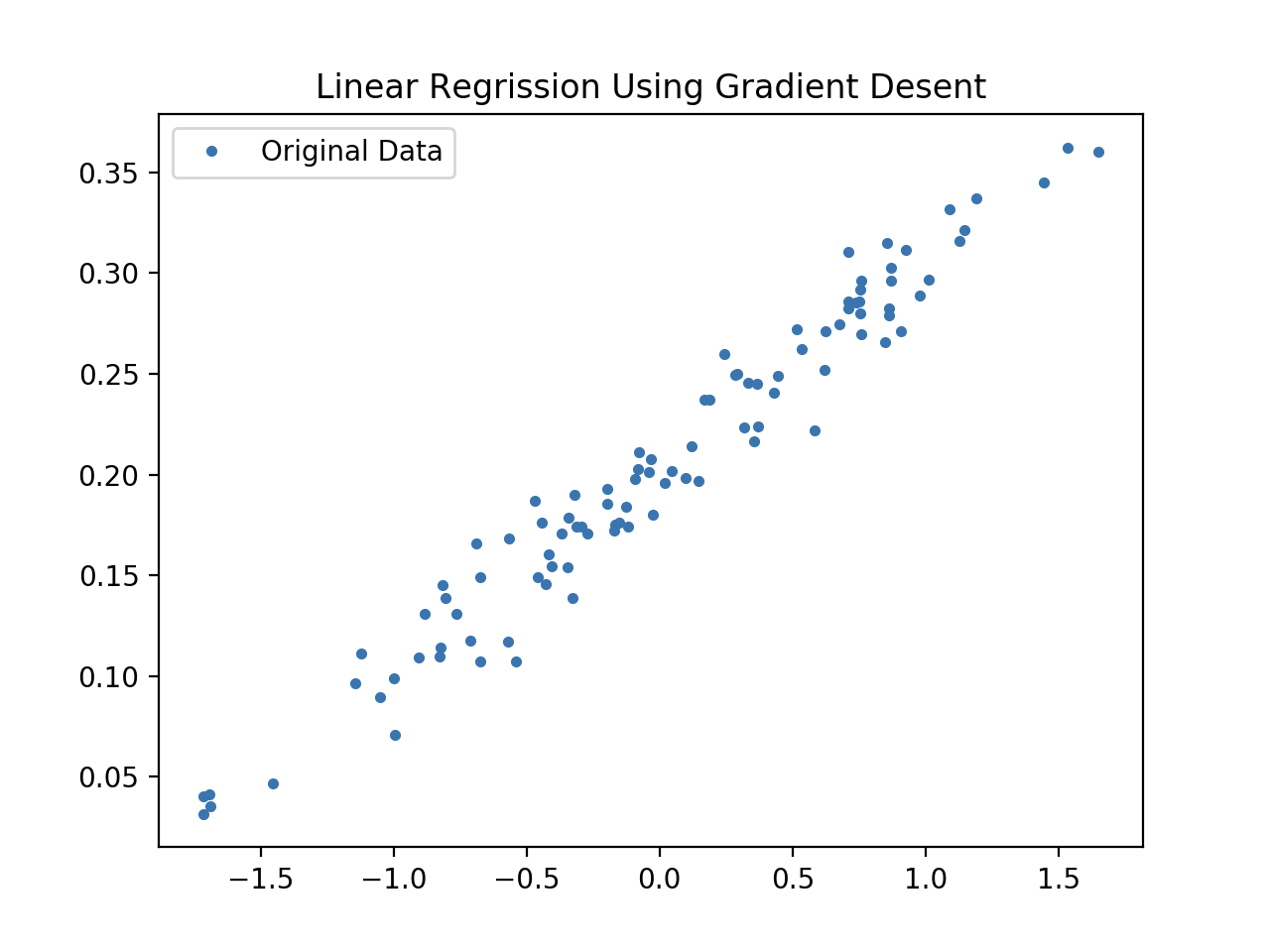

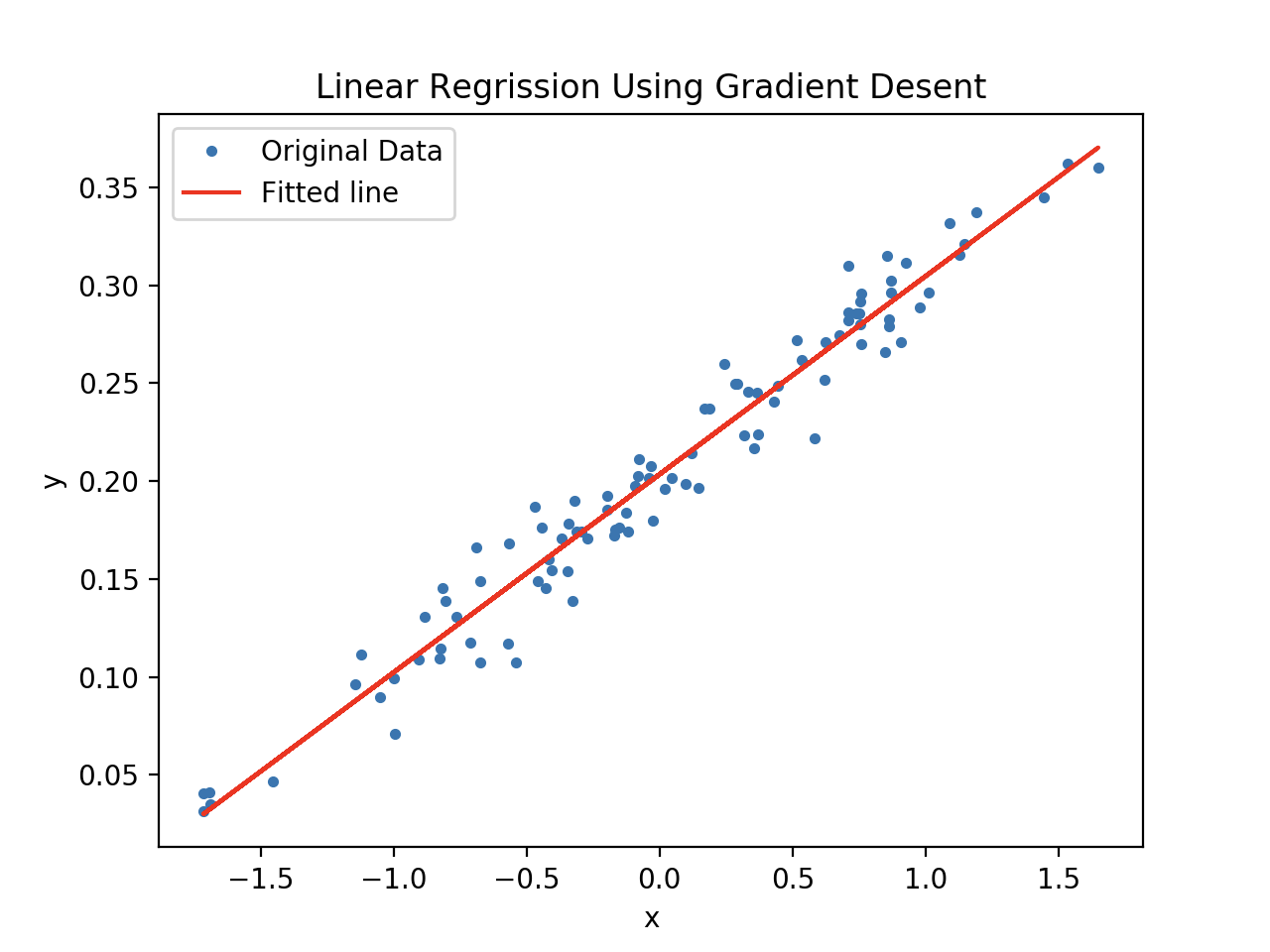

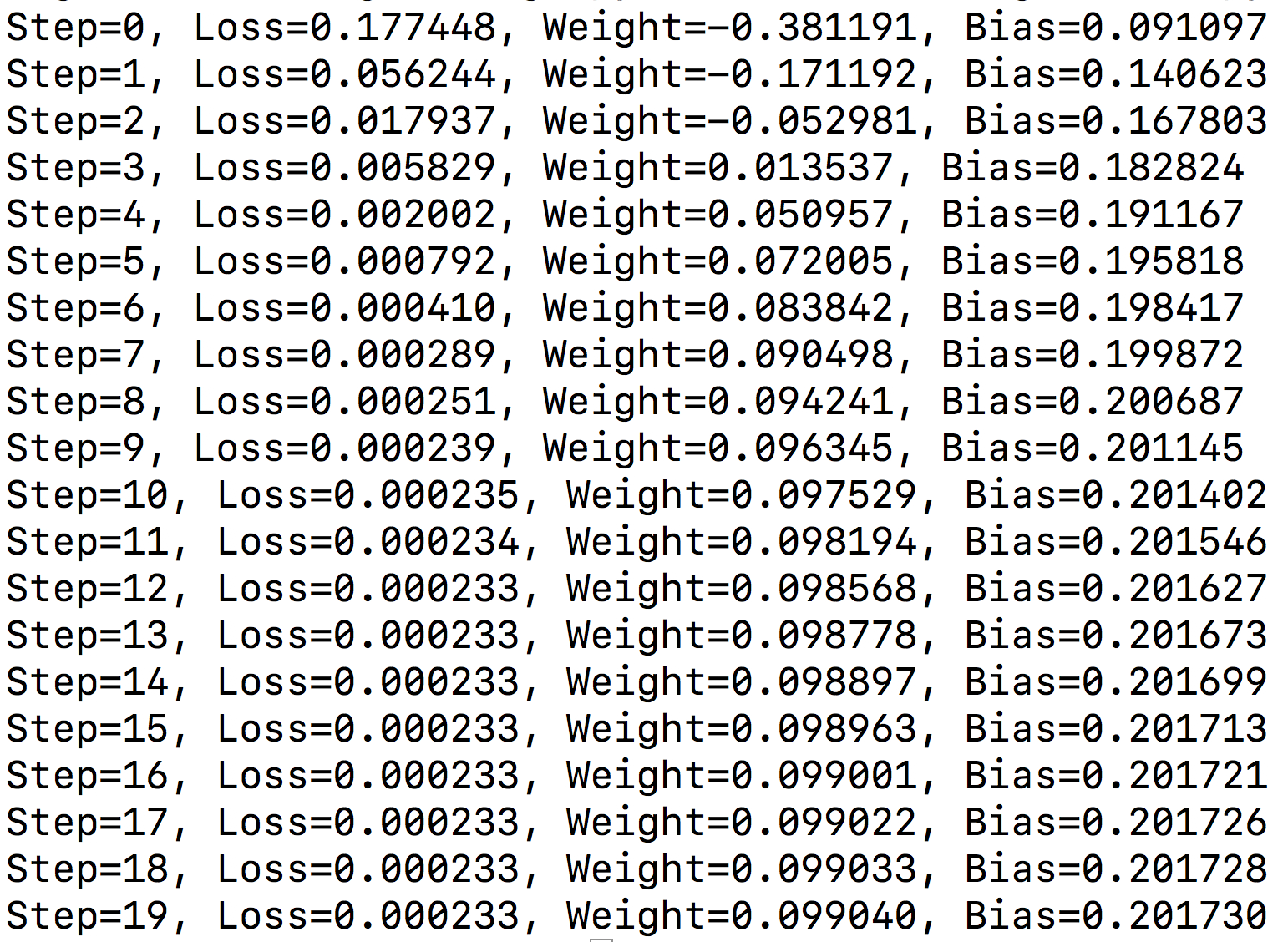

```python
# -*- coding: UTF-8 -*-

'''
Solve a linear regression problem with gradient descent.
Written against the TensorFlow 1.x graph API (tf.Session).
'''

import tensorflow as tf
import matplotlib.pyplot as plt
import numpy as np

# Number of data points
points_num = 100
vectors = []

# Generate each point: y = 0.1 * x + 0.2 plus a little Gaussian noise
for i in range(points_num):
    x1 = np.random.normal(0, 0.77)
    y1 = 0.1 * x1 + 0.2 + np.random.normal(0, 0.015)
    vectors.append([x1, y1])
# Split the points into x and y coordinates
x_data = [v[0] for v in vectors]
y_data = [v[1] for v in vectors]
# Figure 1 (the original data points)
plt.plot(x_data, y_data, ".", label="Original Data")
plt.title("Linear Regression Using Gradient Descent")
plt.legend()
plt.show()

# Build the linear regression model
W = tf.Variable(tf.random_uniform([1], -1.0, 1.0))
b = tf.Variable(tf.zeros([1]))
y = W * x_data + b

# Define the loss function (mean squared error)
loss = tf.reduce_mean(tf.square(y - y_data))
# Define the gradient descent optimizer with a learning rate of 0.3
optimizer = tf.train.GradientDescentOptimizer(0.3)
train = optimizer.minimize(loss)

sess = tf.Session()

# Initialize the global variables
init = tf.global_variables_initializer()
sess.run(init)
# Train for 20 steps
for step in range(20):
    sess.run(train)
    # W and b have shape [1], so index 0 picks out the scalar value
    print("Step=%d, Loss=%f, Weight=%f, Bias=%f"
          % (step, sess.run(loss), sess.run(W)[0], sess.run(b)[0]))

# Figure 2 (the original points and the fitted line)
plt.plot(x_data, y_data, ".", label="Original Data")
plt.title("Linear Regression Using Gradient Descent")
plt.plot(x_data, sess.run(W) * x_data + sess.run(b), color="red", label="Fitted line")
plt.legend()
plt.xlabel('x')
plt.ylabel('y')
plt.show()

sess.close()
```
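To see what one optimizer step does to `W` and `b`, the same mean-squared-error update can be written out by hand. The following is only a minimal sketch in plain Python, not TensorFlow's internal implementation. The helper names `fit_line_gd` and `mse`, the fixed random seed, and the zero starting values are assumptions made for illustration (the program above starts `W` from a random value).

```python
import random


def fit_line_gd(xs, ys, learning_rate=0.3, steps=20, w=0.0, b=0.0):
    """Fit y = w * x + b by full-batch gradient descent on the mean squared error."""
    n = len(xs)
    for _ in range(steps):
        # Residual of the current line at every point.
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Partial derivatives of mean((w * x + b - y) ** 2) with respect to w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Move against the gradient, scaled by the learning rate.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


def mse(xs, ys, w, b):
    """Mean squared error of the line y = w * x + b on the given points."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed: an assumption, only for reproducibility
    xs = [rng.gauss(0, 0.77) for _ in range(100)]
    ys = [0.1 * x + 0.2 + rng.gauss(0, 0.015) for x in xs]
    w, b = fit_line_gd(xs, ys)
    print("w=%f, b=%f, loss=%f" % (w, b, mse(xs, ys, w, b)))
```

With the same learning rate (0.3) and step count (20) as the program above, the printed weight and bias should land close to the 0.1 and 0.2 used to generate the data.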

Results:

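This article implements a simple linear regression model in Python and TensorFlow and optimizes its parameters with gradient descent. It generates random data points and fits a straight line to them to demonstrate the whole process.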
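One way to sanity-check a fitted line is to compare it with the ordinary least-squares solution, which has a closed form when there is a single input variable. The sketch below assumes data generated the same way as in the article. `least_squares_line` is a hypothetical helper written for this comparison, and the fixed seed is likewise an assumption.

```python
import random


def least_squares_line(xs, ys):
    """Return (slope, intercept) of the ordinary least-squares line through the points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # slope = covariance(x, y) / variance(x); the 1/n factors cancel out.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed: an assumption, only for reproducibility
    xs = [rng.gauss(0, 0.77) for _ in range(100)]
    ys = [0.1 * x + 0.2 + rng.gauss(0, 0.015) for x in xs]
    slope, intercept = least_squares_line(xs, ys)
    print("slope=%f, intercept=%f" % (slope, intercept))
```

If the weight and bias printed at the last training step are far from these values, the learning rate or the number of steps is the first thing to revisit.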
