Setting up a k8s environment

1. Environment initialization

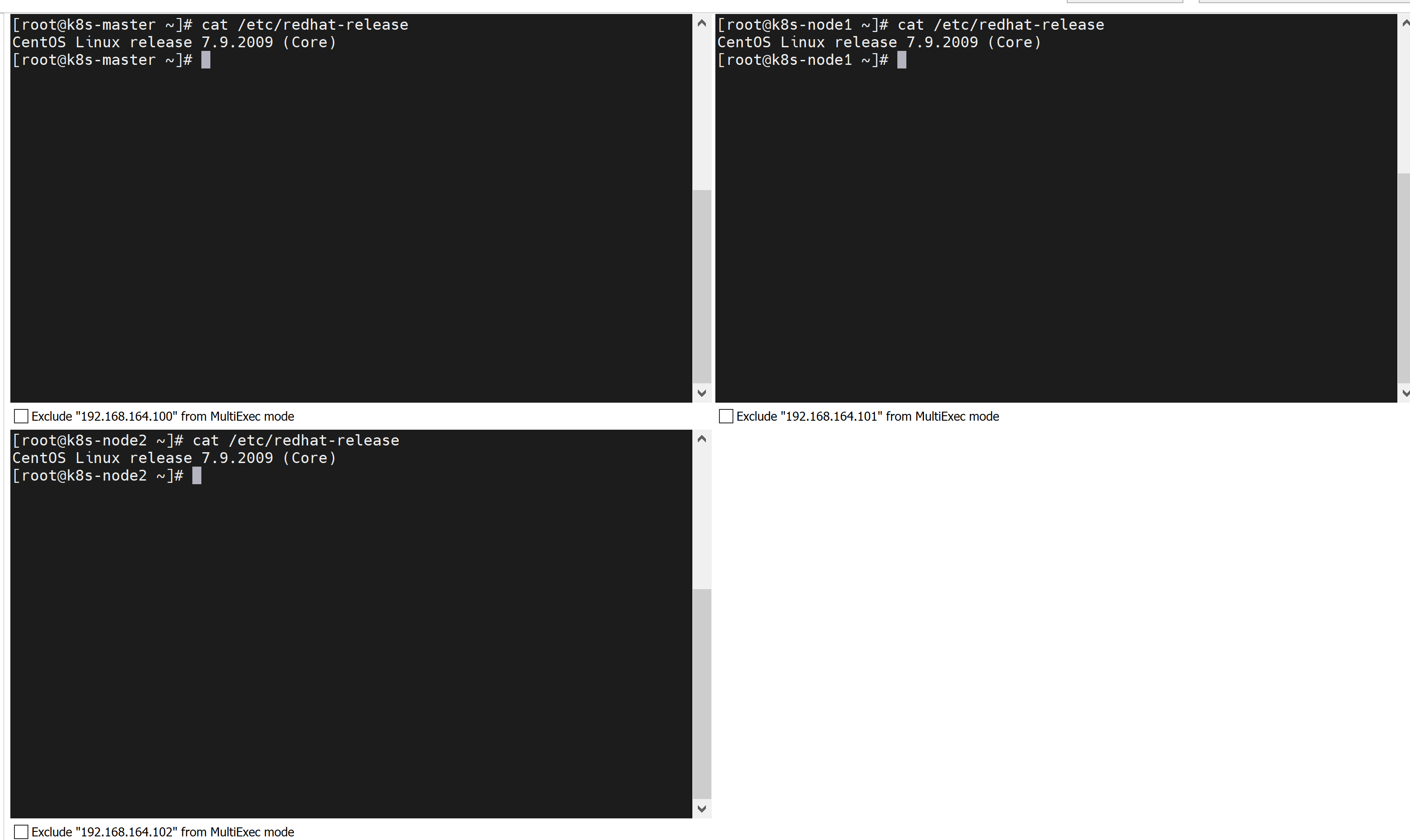

1.1. Check the operating system version

cat /etc/redhat-release

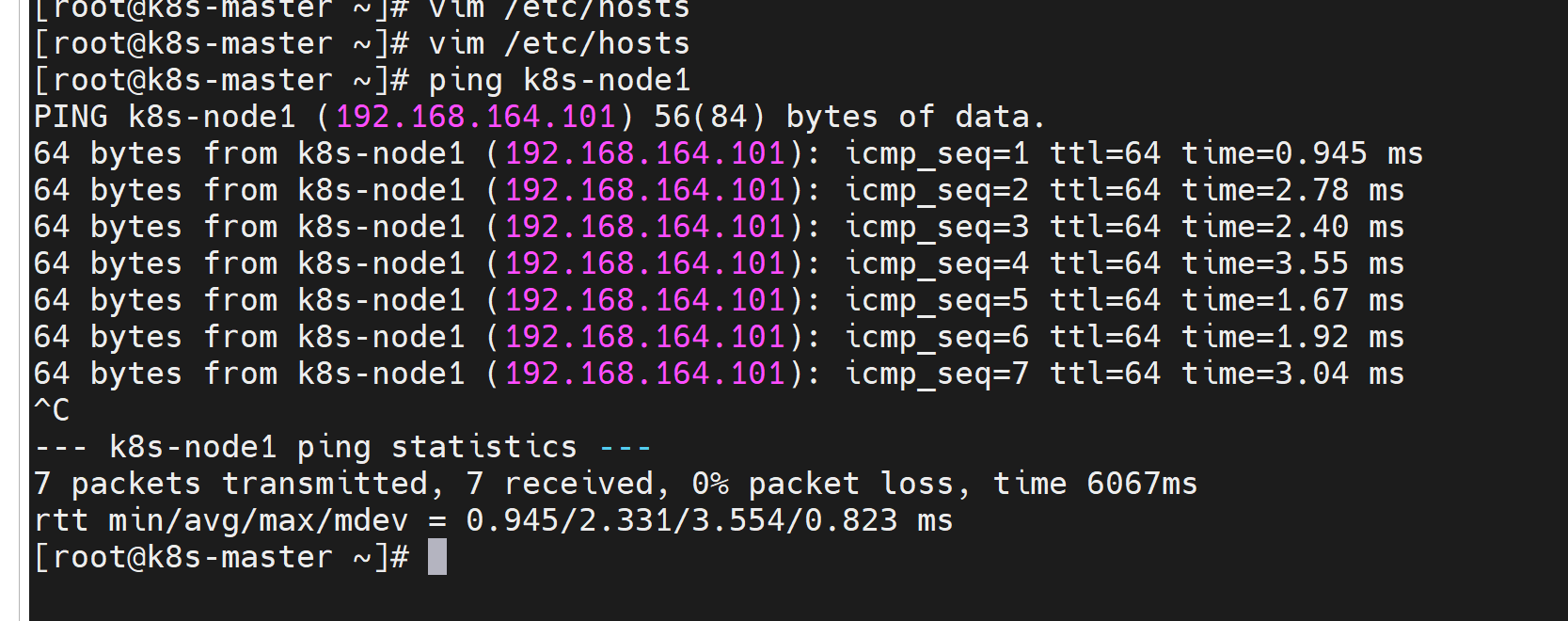

1.2. Hostname resolution (configure hosts)

Add the following entries to /etc/hosts on every node:

192.168.164.100 k8s-master

192.168.164.101 k8s-node1

192.168.164.102 k8s-node2

Once the entries are in place, test name resolution (for example, by pinging each hostname):

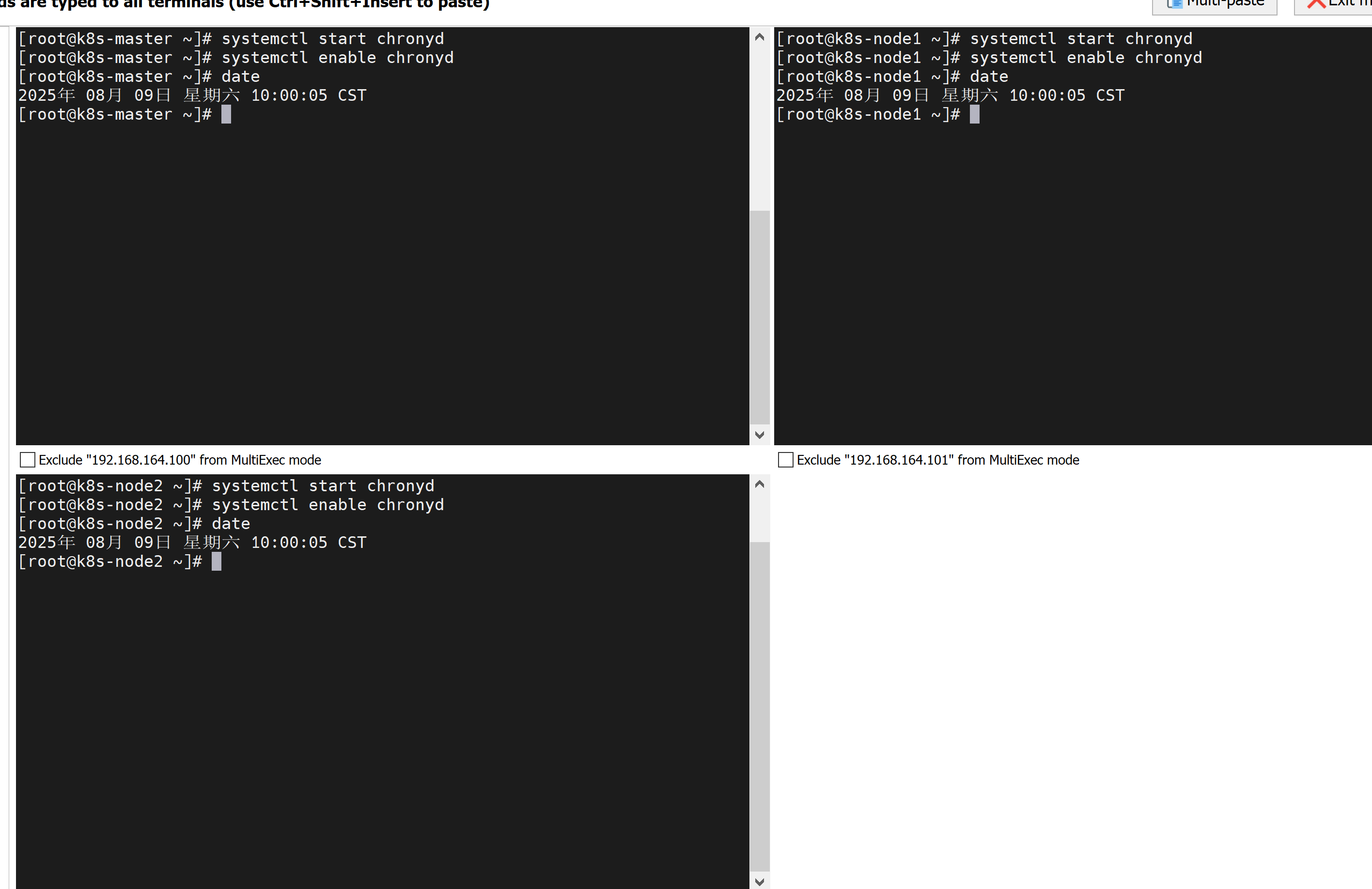
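The entries can also be appended from a script. The following is a minimal sketch, not part of the original steps: add_host_entry is a hypothetical helper that skips a hostname already present in the file, so running it twice does not create duplicates.

```shell
# add_host_entry FILE IP NAME  (hypothetical helper, not a standard command)
# Appends "IP NAME" to FILE unless NAME already appears in it as a whole word.
add_host_entry() {
  hosts_file="$1"; host_ip="$2"; host_name="$3"
  # -F: fixed string, -w: whole word, so k8s-node1 does not match k8s-node10
  if grep -qwF -- "$host_name" "$hosts_file" 2>/dev/null; then
    return 0
  fi
  printf '%s %s\n' "$host_ip" "$host_name" >> "$hosts_file"
}

# Usage (as root, on every node):
#   add_host_entry /etc/hosts 192.168.164.100 k8s-master
#   add_host_entry /etc/hosts 192.168.164.101 k8s-node1
#   add_host_entry /etc/hosts 192.168.164.102 k8s-node2
```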

1.3. Time synchronization

Run the following in order:

systemctl start chronyd

systemctl enable chronyd

date

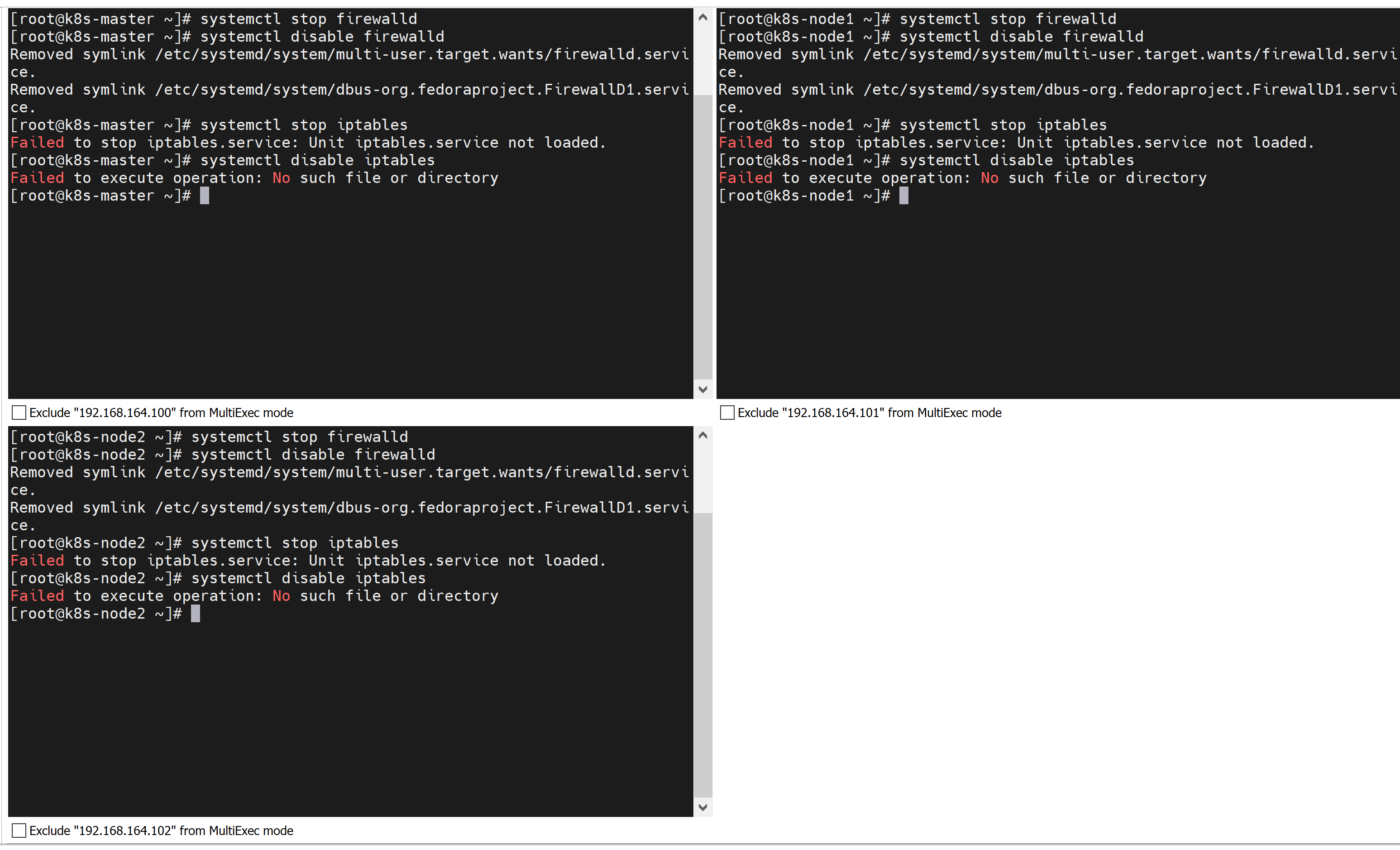

1.4. Disable iptables and the firewall

systemctl stop firewalld

systemctl disable firewalld

systemctl stop iptables

systemctl disable iptables

If the iptables commands fail, the iptables service is not installed or enabled on this system, and the failure can be ignored.

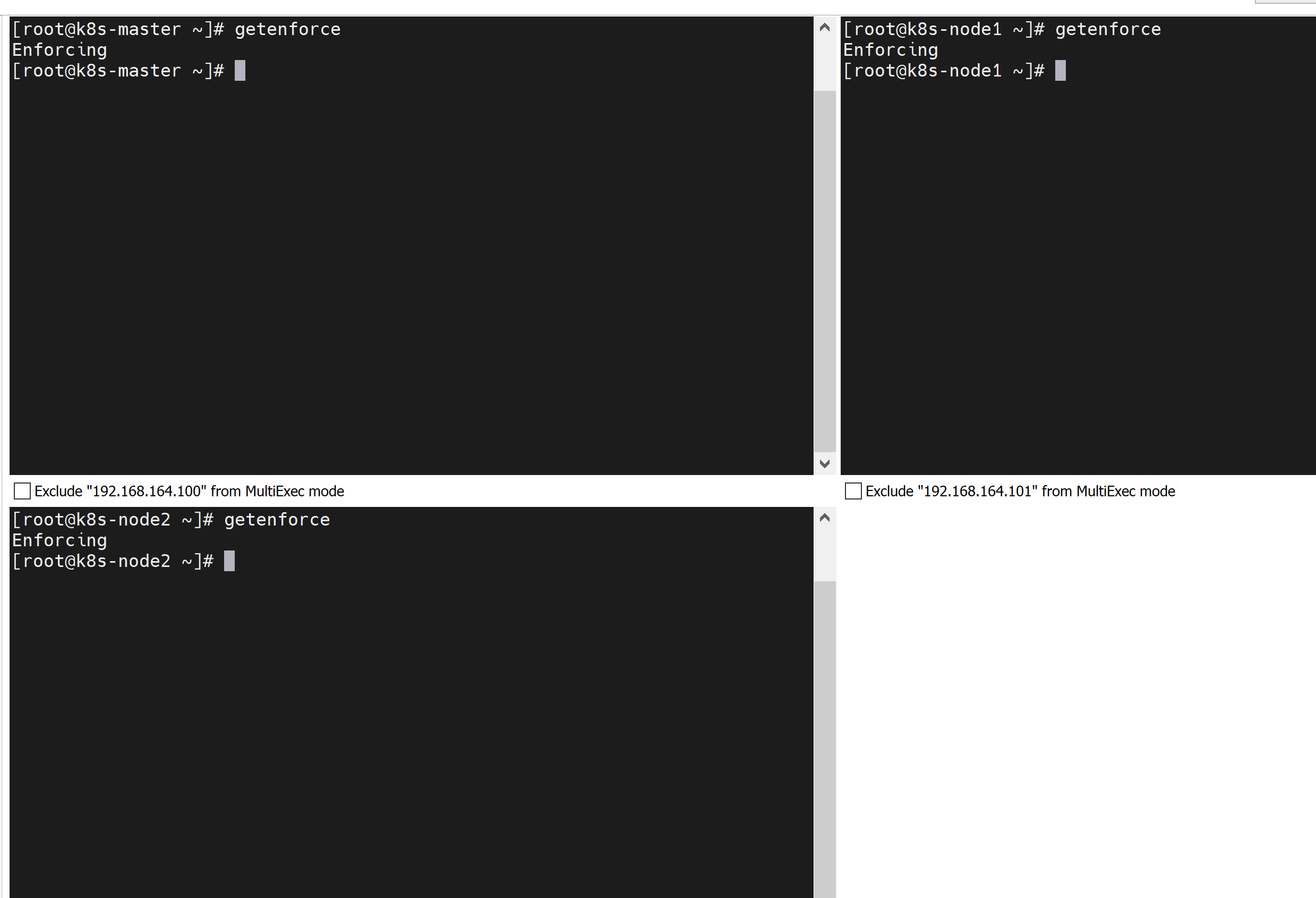

1.5. Disable SELinux

First check whether SELinux is enabled (for example, with getenforce). Here it is enabled:

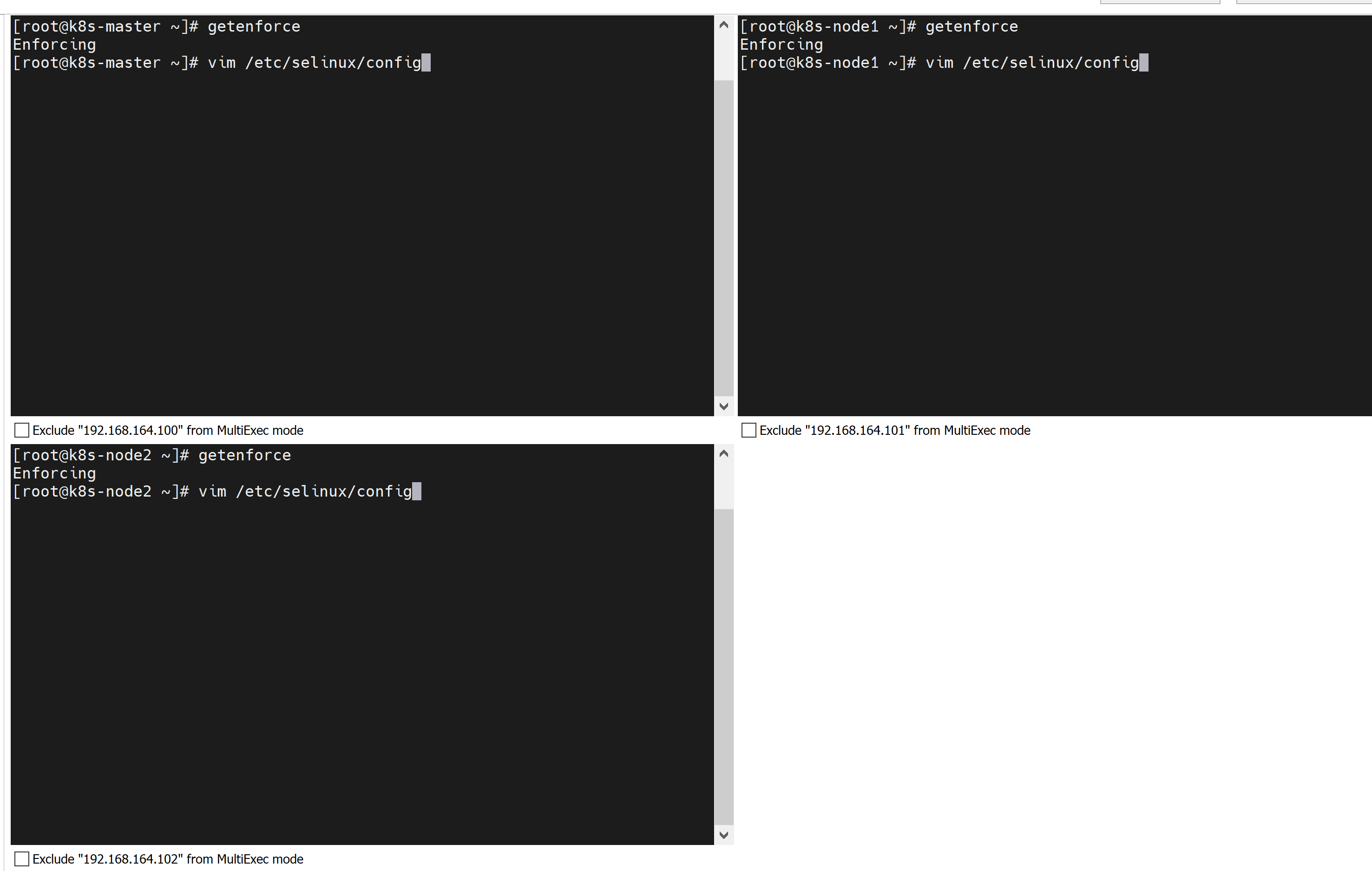

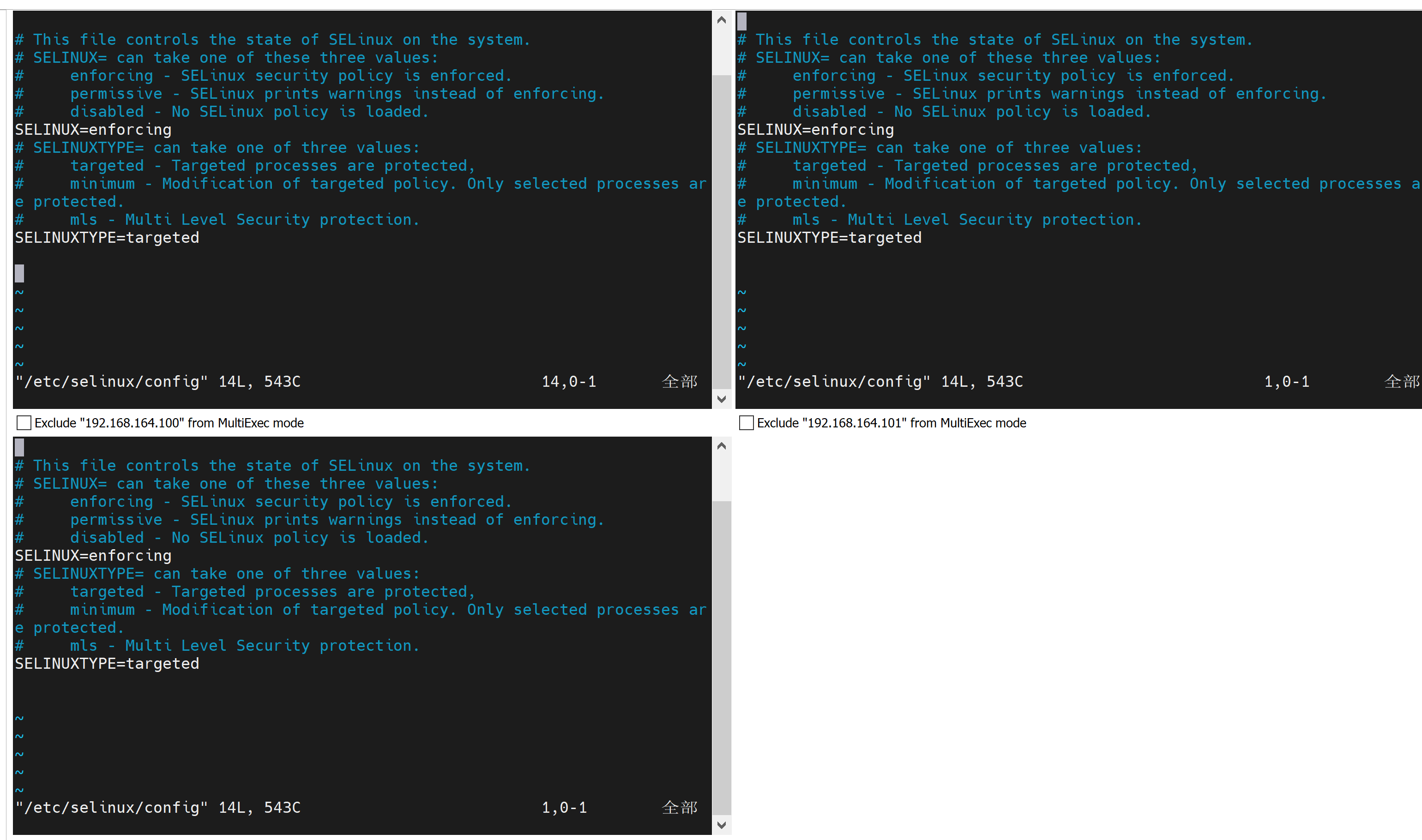

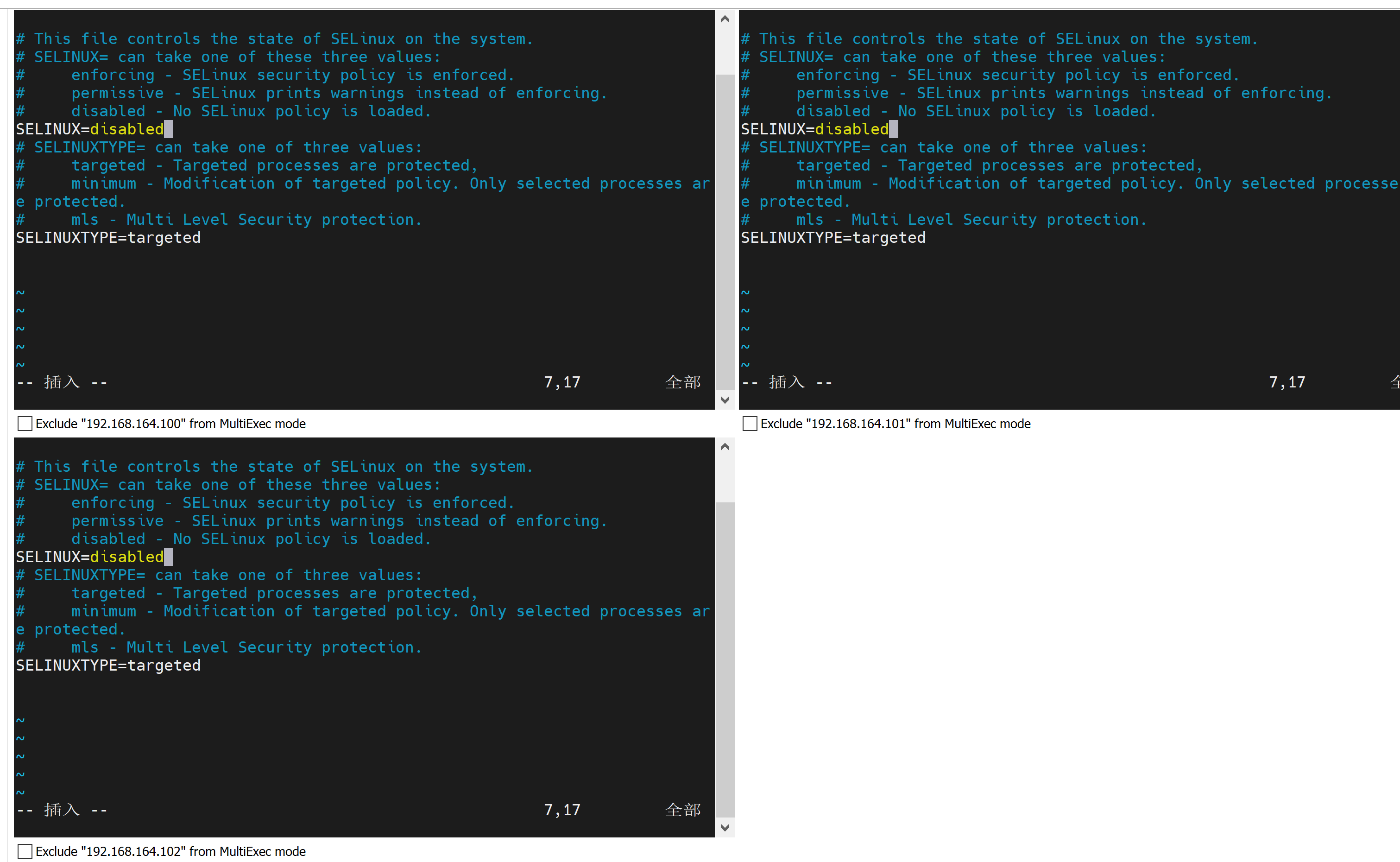

Edit /etc/selinux/config and set SELINUX=disabled:

vim /etc/selinux/config

The change only takes effect after a reboot. Finish the remaining steps first and reboot at the end (step 1.9).

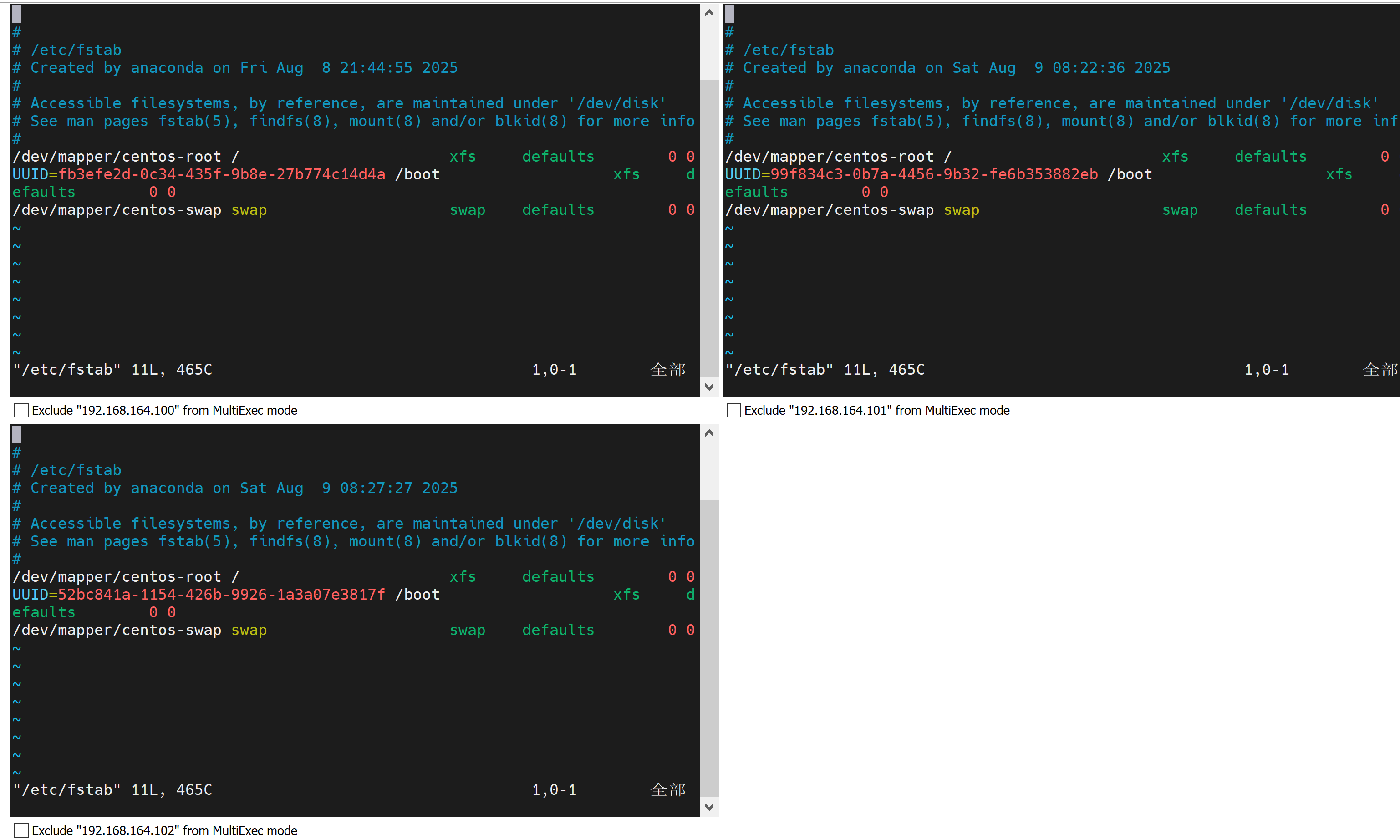
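The same edit can be made without opening an editor. This is a sketch under the assumption that the file contains a line starting with SELINUX=; disable_selinux_config is a hypothetical helper name, and sed -i is the GNU sed in-place option available on CentOS.

```shell
# disable_selinux_config FILE  (hypothetical helper)
# Rewrites the SELINUX= line in FILE to "SELINUX=disabled".
disable_selinux_config() {
  selinux_cfg="$1"
  # Only the SELINUX= line is touched; SELINUXTYPE= and comments are left alone.
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$selinux_cfg"
}

# Usage (as root):
#   disable_selinux_config /etc/selinux/config
#   setenforce 0   # optional: switches to permissive mode until the reboot
```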

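1.6. Disable the swap partition

The swap partition is a virtual-memory partition: once physical memory is exhausted, disk space is used as if it were memory. By default the kubelet refuses to start while swap is enabled, so it has to be turned off.

Edit /etc/fstab and comment out the swap line: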

vim /etc/fstab

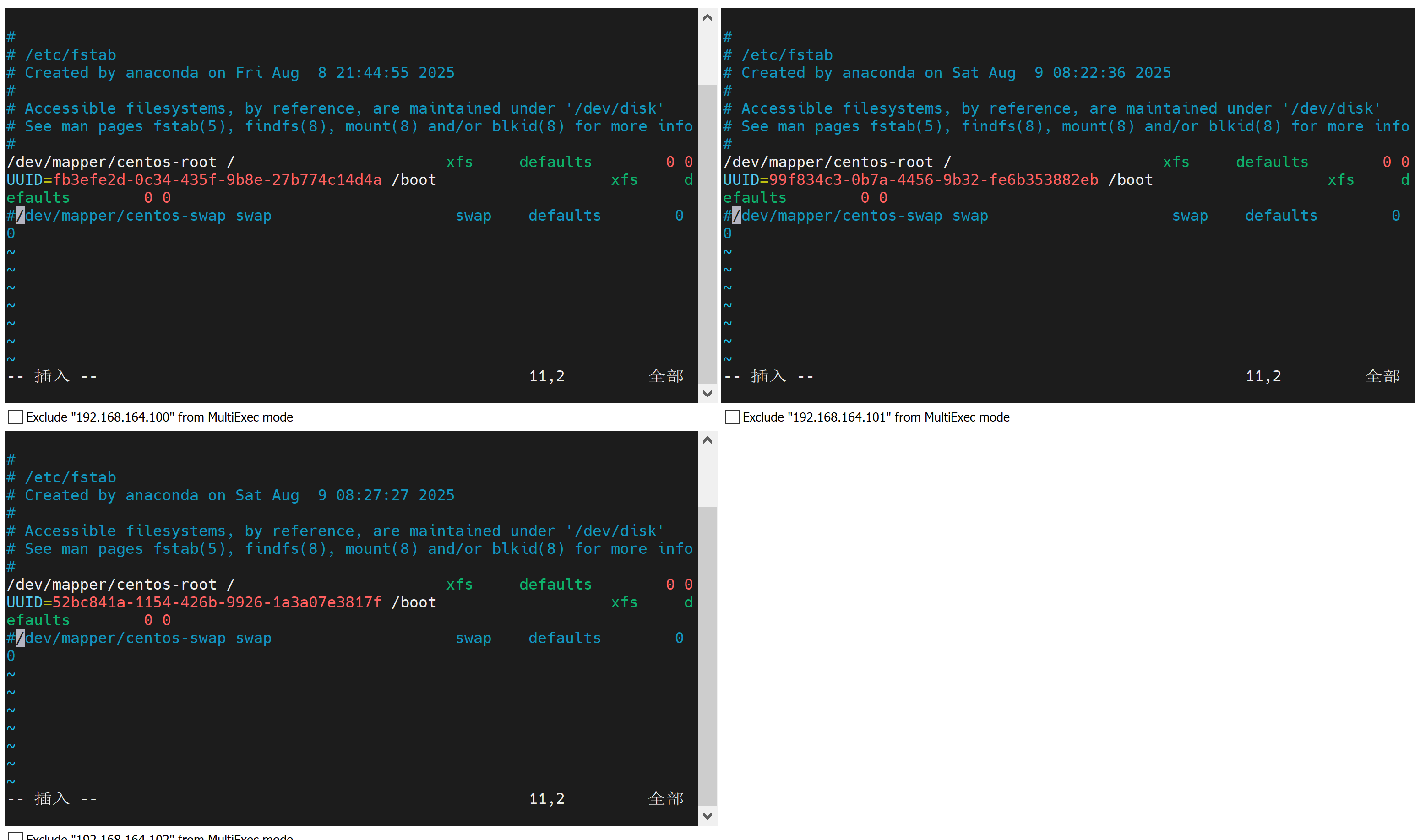
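As a scripted alternative, the sketch below comments out the swap entry with sed. comment_out_swap is a hypothetical helper; it assumes the swap entry is an uncommented line containing the word swap as a separate field, which is the usual fstab layout.

```shell
# comment_out_swap FILE  (hypothetical helper)
# Prefixes with "#" every uncommented line that has "swap" as a
# whitespace-delimited field (mount point or filesystem type).
comment_out_swap() {
  fstab_file="$1"
  sed -i '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab_file"
}

# Usage (as root):
#   cp /etc/fstab /etc/fstab.bak   # keep a backup first
#   comment_out_swap /etc/fstab
#   swapoff -a                     # optional: turns swap off right away
```

Already commented lines start with "#", so running the helper a second time leaves them unchanged.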

1.7. Modify Linux kernel parameters

Edit /etc/sysctl.d/kubernetes.conf and add the settings below.

The file does not exist yet, so this step creates it:

vim /etc/sysctl.d/kubernetes.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

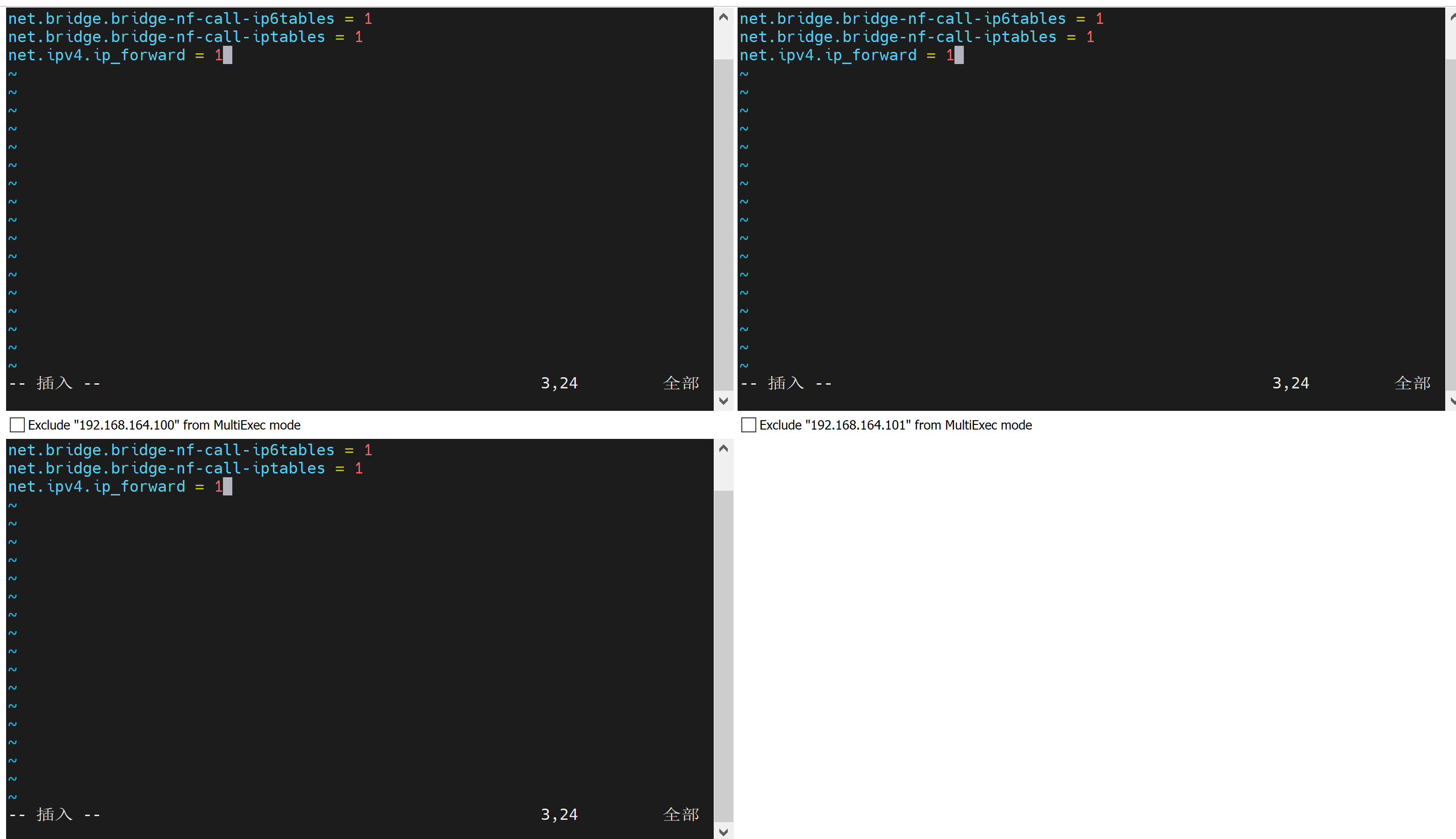

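Load the bridge netfilter module first, because the net.bridge.* keys only exist once it is loaded:

modprobe br_netfilter

Check that it loaded:

lsmod | grep br_netfilter

Then apply the settings. A bare sysctl -p only reads /etc/sysctl.conf, so pass the new file explicitly:

sysctl -p /etc/sysctl.d/kubernetes.conf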
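A module loaded with modprobe is gone after the next reboot. One way to have it loaded at boot is a file under /etc/modules-load.d, which systemd-modules-load reads on startup. The sketch below is an addition to the original steps; persist_modules and the file name k8s.conf are assumptions chosen for illustration.

```shell
# persist_modules DIR MODULE...  (hypothetical helper)
# Writes DIR/k8s.conf with one kernel module name per line.
persist_modules() {
  modules_dir="$1"; shift
  mkdir -p "$modules_dir"
  printf '%s\n' "$@" > "$modules_dir/k8s.conf"
}

# Usage (as root):
#   persist_modules /etc/modules-load.d br_netfilter
```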

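1.8. Configure ipvs

A Service can be proxied in one of two modes: iptables-based or ipvs-based.

ipvs performs better, but its kernel modules have to be loaded manually.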

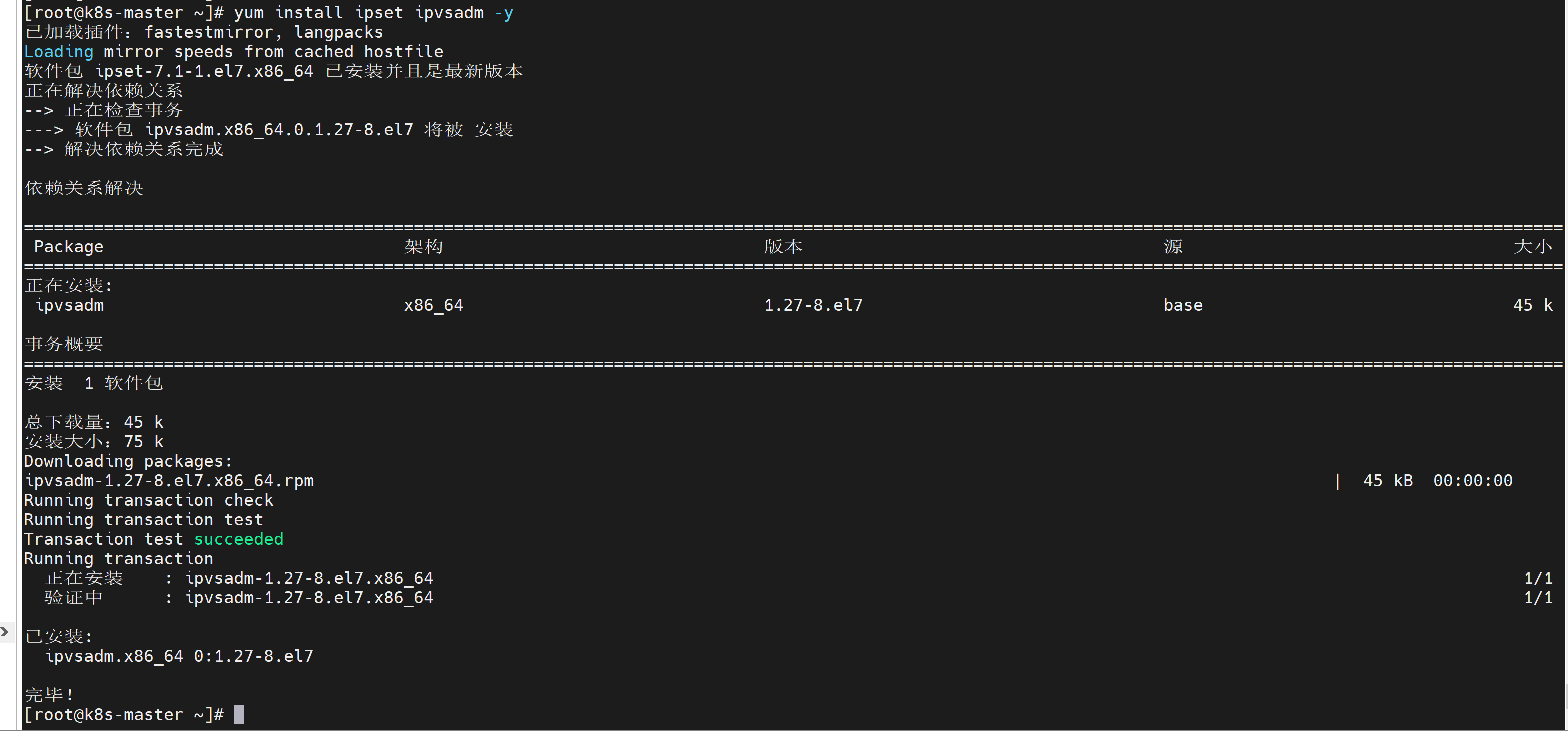

1.8.1. Install ipset and ipvsadm

yum install ipset ipvsadm -y

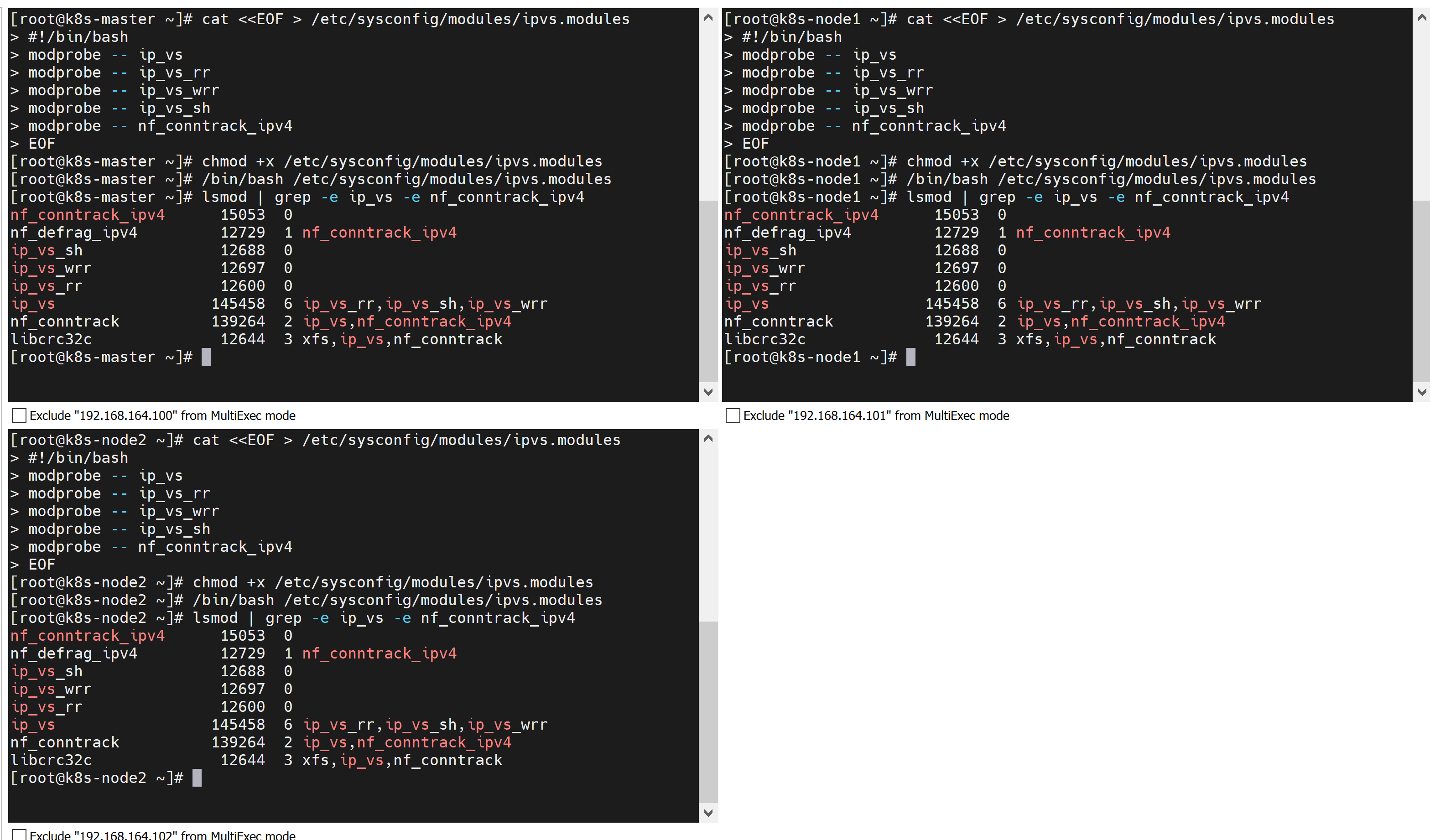

1.8.2. Add a script file

cat <<EOF > /etc/sysconfig/modules/ipvs.modules
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF

Make the file executable:

chmod +x /etc/sysconfig/modules/ipvs.modules

Run it:

/bin/bash /etc/sysconfig/modules/ipvs.modules

Check that the modules loaded:

lsmod | grep -e ip_vs -e nf_conntrack_ipv4

1.9. Reboot the server

1.10. Verify

- getenforce must print Disabled

- free -m must show 0 for every value in the Swap row

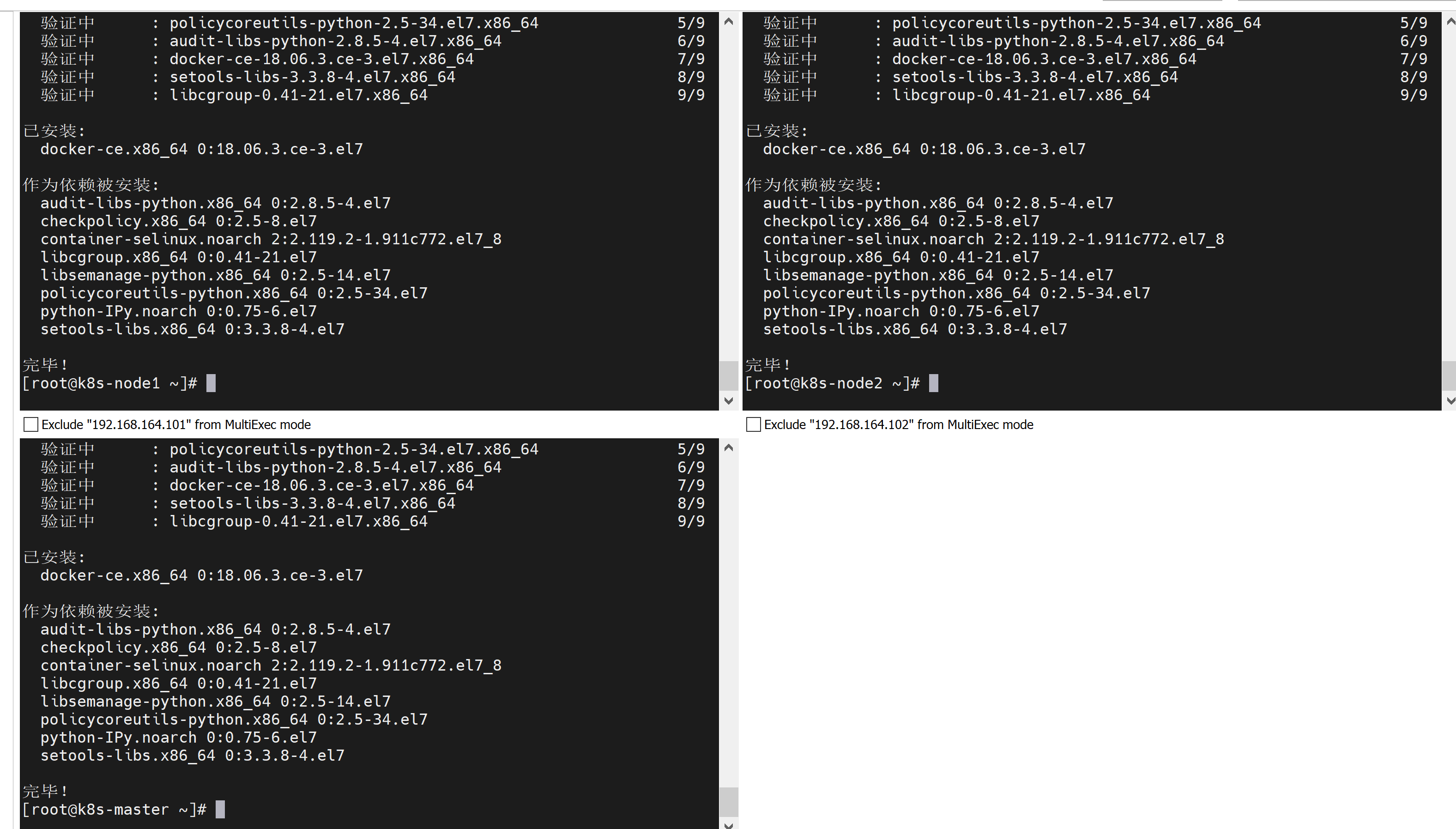
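The swap check can also be scripted. The sketch below reads the SwapTotal field that the kernel exposes in /proc/meminfo; swap_total_kb is a hypothetical helper, and the optional file argument exists only so the function can be tried against a sample file.

```shell
# swap_total_kb [FILE]  (hypothetical helper)
# Prints the SwapTotal value in kB from a /proc/meminfo-style file.
swap_total_kb() {
  awk '/^SwapTotal:/ {print $2}' "${1:-/proc/meminfo}"
}

# Usage:
#   [ "$(swap_total_kb)" -eq 0 ] && echo "swap is off"
```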

2. Install Docker

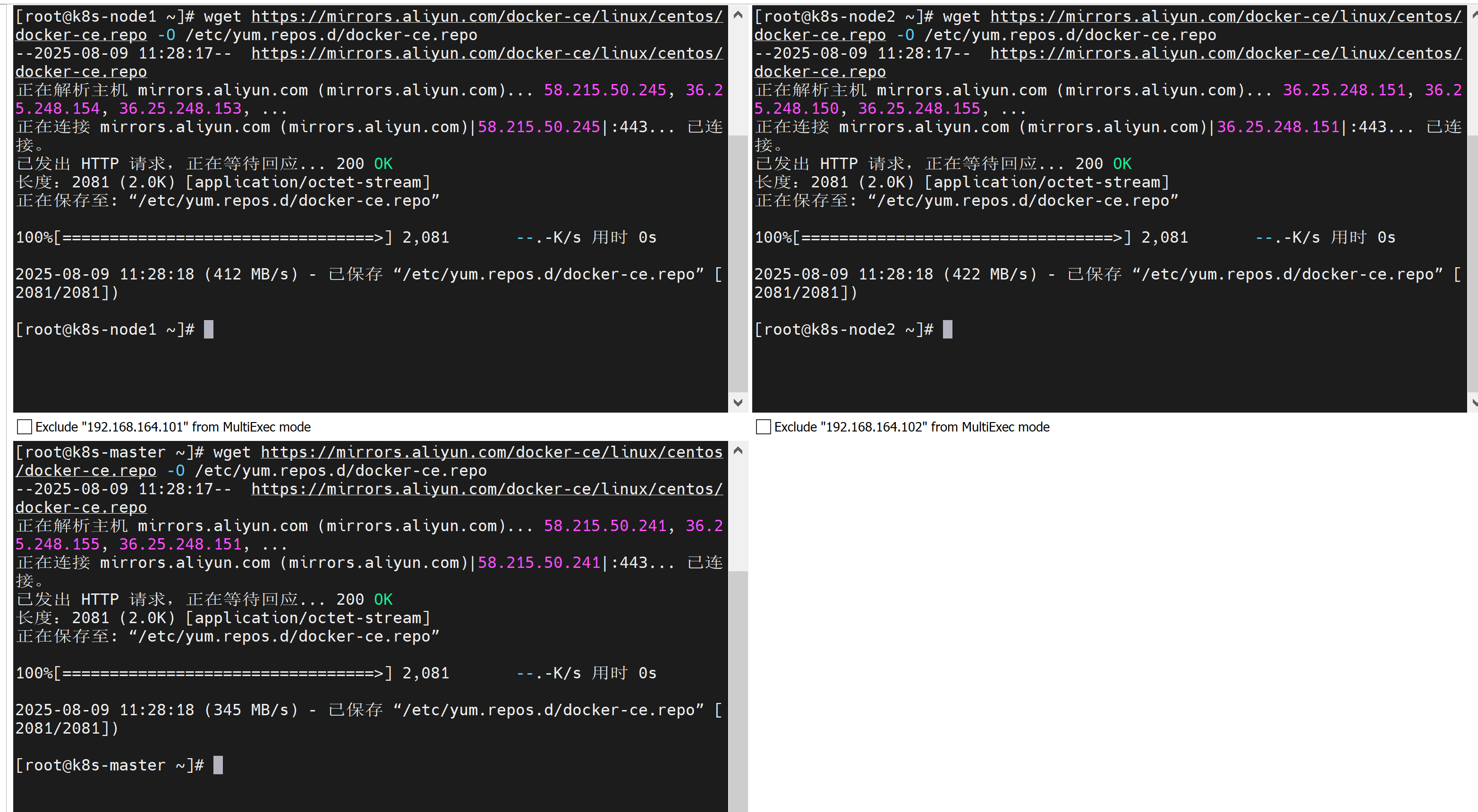

2.1. Switch to the Aliyun mirror

wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

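2.2. List the available docker-ce versions (optional)

yum list docker-ce --showduplicates

Which version to install is up to you; other versions may need extra configuration.

2.3. Install a specific version

--setopt=obsoletes=0 must be specified; otherwise yum installs a newer version in place of the requested one.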

yum install --setopt=obsoletes=0 docker-ce-18.06.3.ce-3.el7 -y

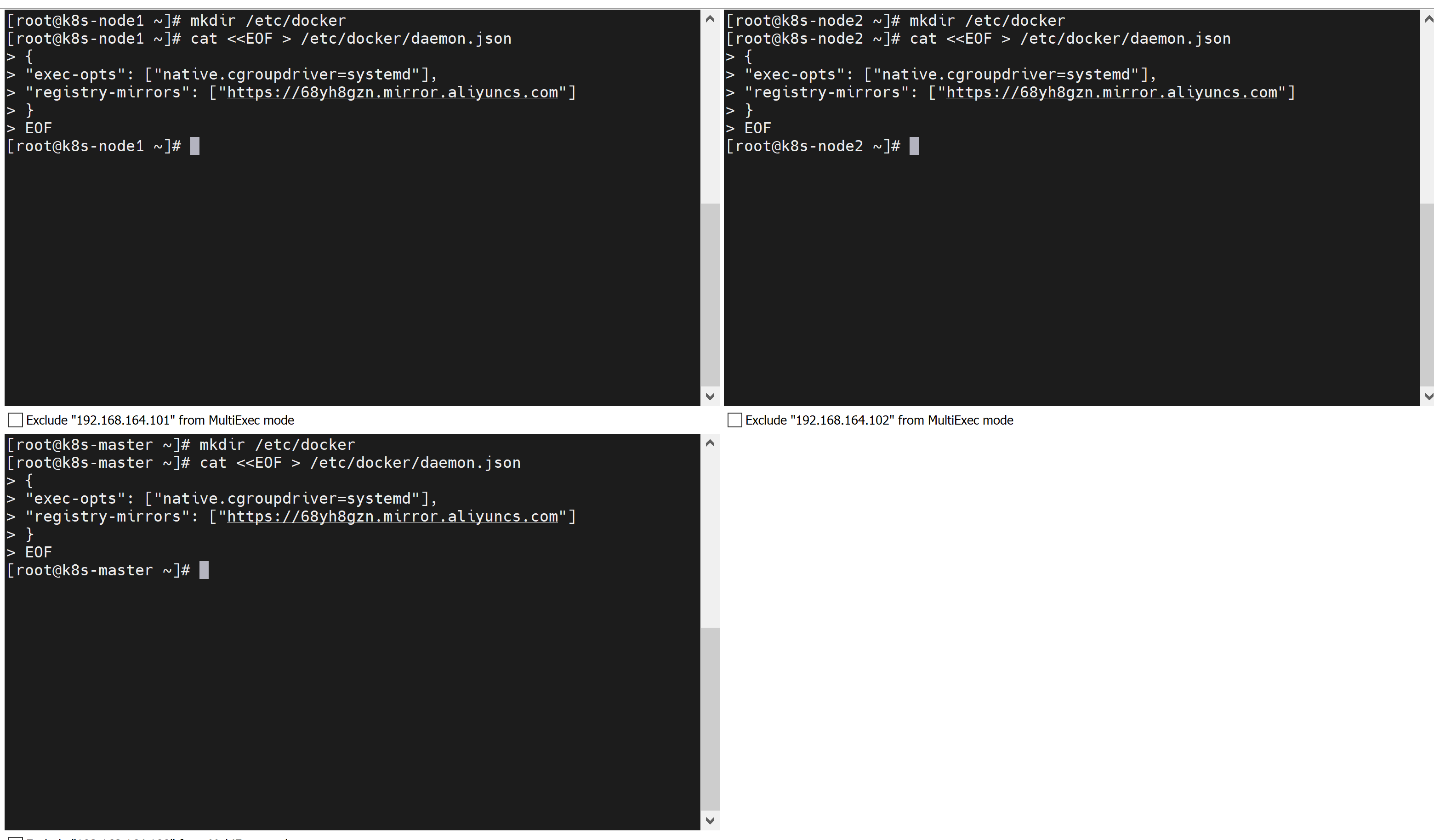

2.4. Add the configuration file

By default Docker uses cgroupfs as its cgroup driver, while k8s recommends systemd instead.

Create the directory:

mkdir -p /etc/docker

Add the configuration file:

cat <<EOF > /etc/docker/daemon.json

{

"exec-opts": ["native.cgroupdriver=systemd"],

"registry-mirrors": ["https://docker.1ms.run"]

}

EOF

The address in registry-mirrors may stop working at any time; replace it if image pulls start failing.

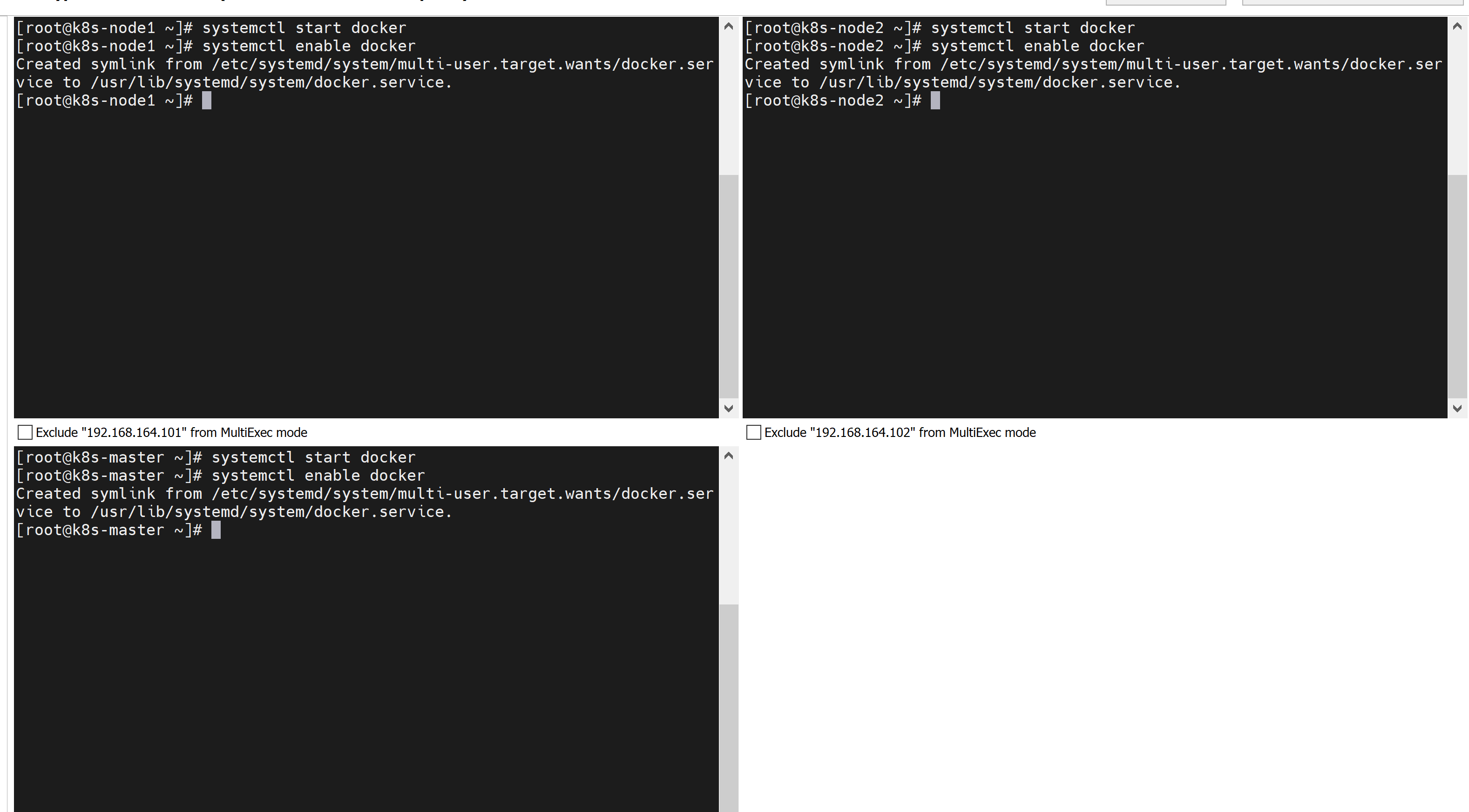

2.5. Start Docker

Start the service:

systemctl start docker

Enable it at boot:

systemctl enable docker

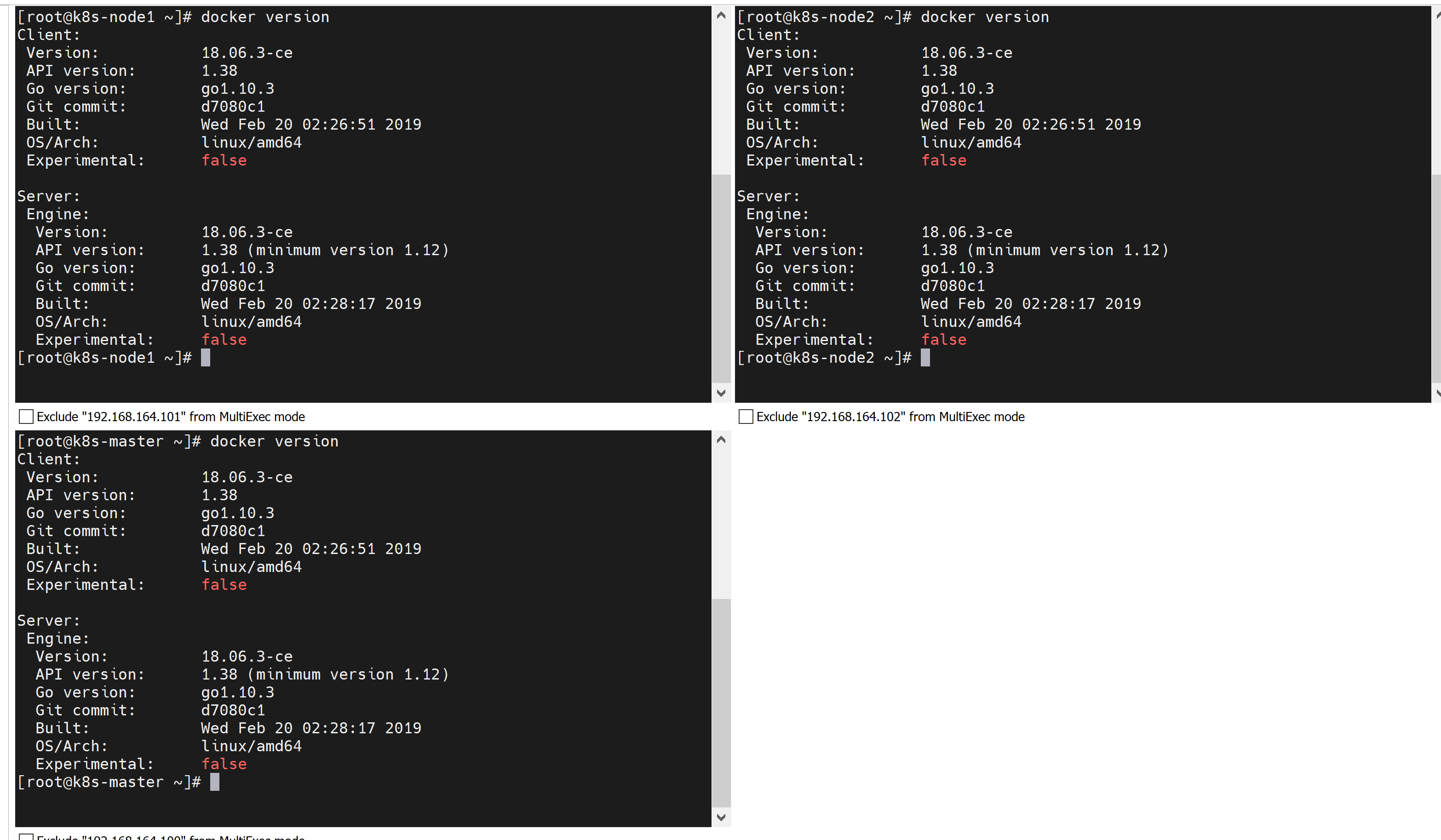

2.6. Check that the installation succeeded
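One possible check, sketched here as an addition: docker version prints both client and server details when the daemon is running, and docker info reports the cgroup driver in use. check_cgroup_driver is a hypothetical helper that compares the reported driver with the expected one (systemd by default).

```shell
# check_cgroup_driver ACTUAL [EXPECTED]  (hypothetical helper)
# Succeeds when ACTUAL equals EXPECTED (default: systemd).
check_cgroup_driver() {
  cg_actual="$1"; cg_expected="${2:-systemd}"
  if [ "$cg_actual" = "$cg_expected" ]; then
    echo "ok: cgroup driver is $cg_actual"
  else
    echo "mismatch: got '$cg_actual', expected '$cg_expected'" >&2
    return 1
  fi
}

# Usage on a node where Docker is running:
#   docker version
#   check_cgroup_driver "$(docker info --format '{{.CgroupDriver}}')"
```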

3. Install the Kubernetes components

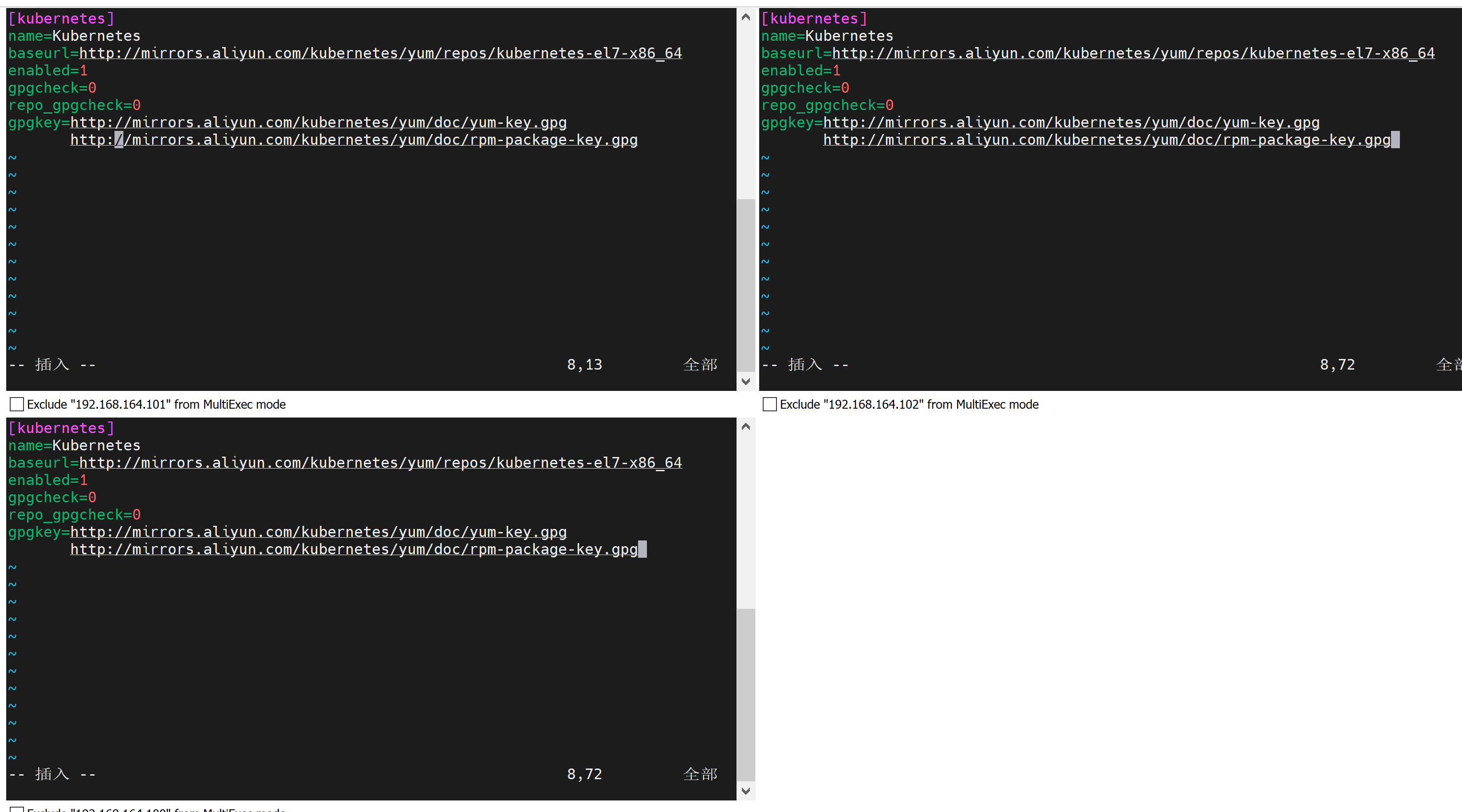

3.1. Switch the package mirror

Edit the file:

vim /etc/yum.repos.d/kubernetes.repo

Add the following content:

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

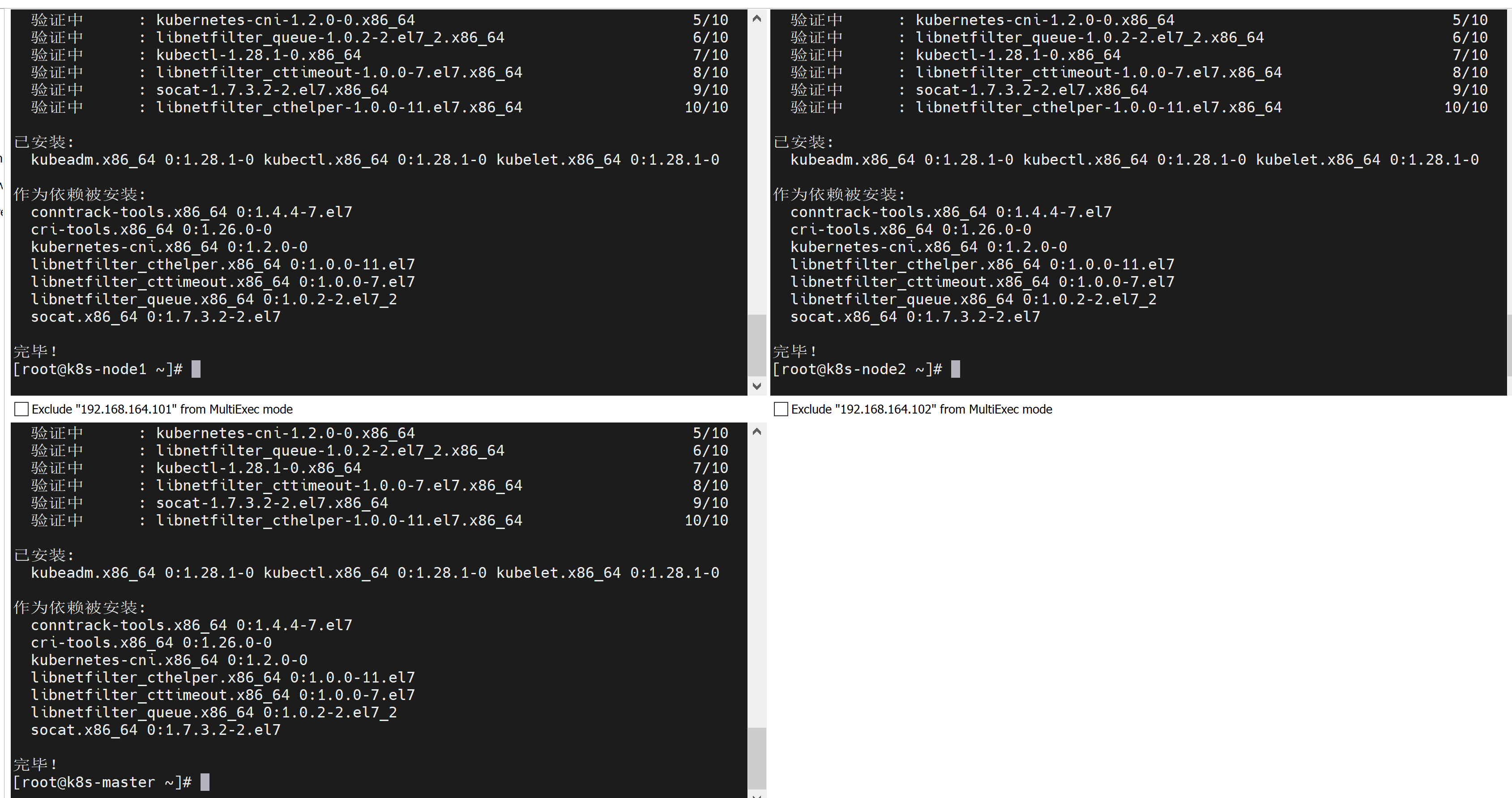

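3.2. Install the components

Install three components: kubeadm, kubelet, and kubectl.

Two versions are shown here, but the remaining steps are based on version 1.17.4-0.

3.2.1. Install version 1.28.1-0

yum install --setopt=obsoletes=0 kubeadm-1.28.1-0 kubelet-1.28.1-0 kubectl-1.28.1-0 -y

To uninstall the 1.28.1-0 components, including the components themselves and the dependencies installed with them: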

yum remove kubeadm.x86_64 kubectl.x86_64 kubelet.x86_64 conntrack-tools.x86_64 cri-tools.x86_64 kubernetes-cni.x86_64 libnetfilter_cthelper.x86_64 libnetfilter_cttimeout.x86_64 libnetfilter_queue.x86_64 socat.x86_64

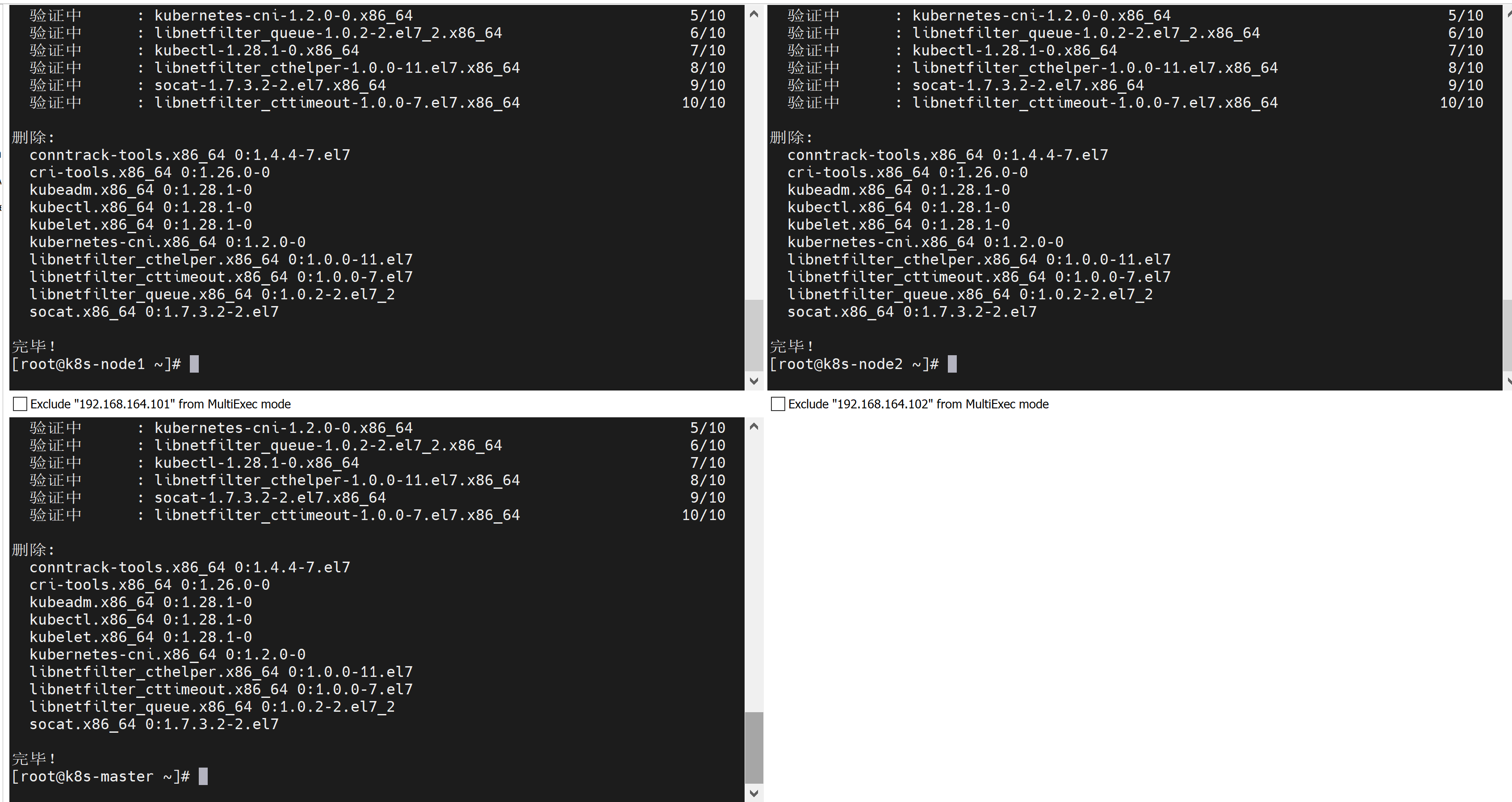

3.2.2. Install version 1.17.4-0 (dockershim, the built-in Docker support in k8s, was deprecated in 1.20 and removed in 1.24)

yum install --setopt=obsoletes=0 kubeadm-1.17.4-0 kubelet-1.17.4-0 kubectl-1.17.4-0 -y

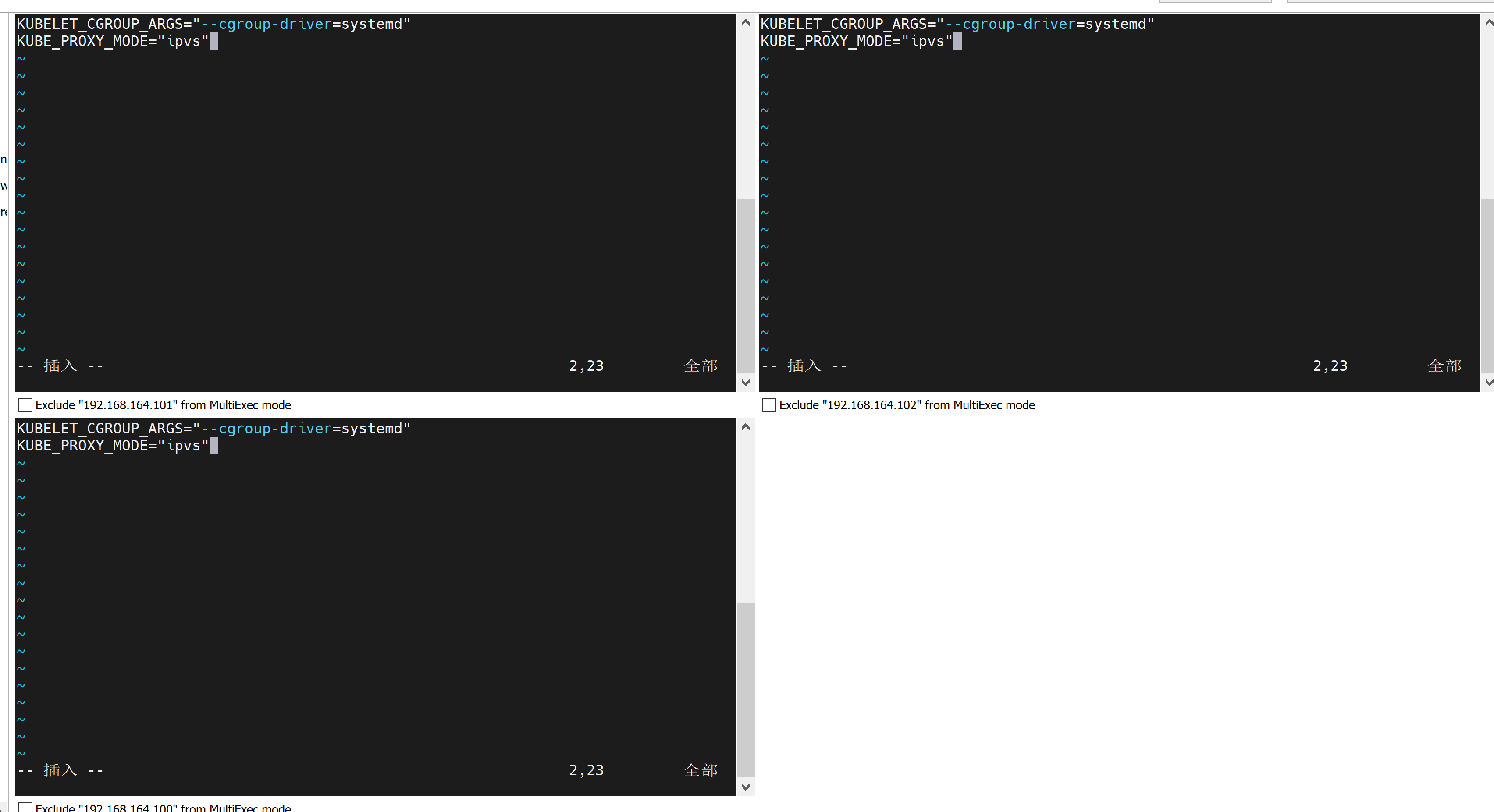

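3.3. Add the configuration file

Edit the file:

vim /etc/sysconfig/kubelet

The file contains a single line; delete it.

Then add the following settings, which select systemd and ipvs. kube-proxy itself takes its mode from the kube-proxy ConfigMap in the kube-system namespace, so check there after the cluster is up if ipvs does not appear to be in use.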

KUBELET_CGROUP_ARGS="--cgroup-driver=systemd"

KUBE_PROXY_MODE="ipvs"

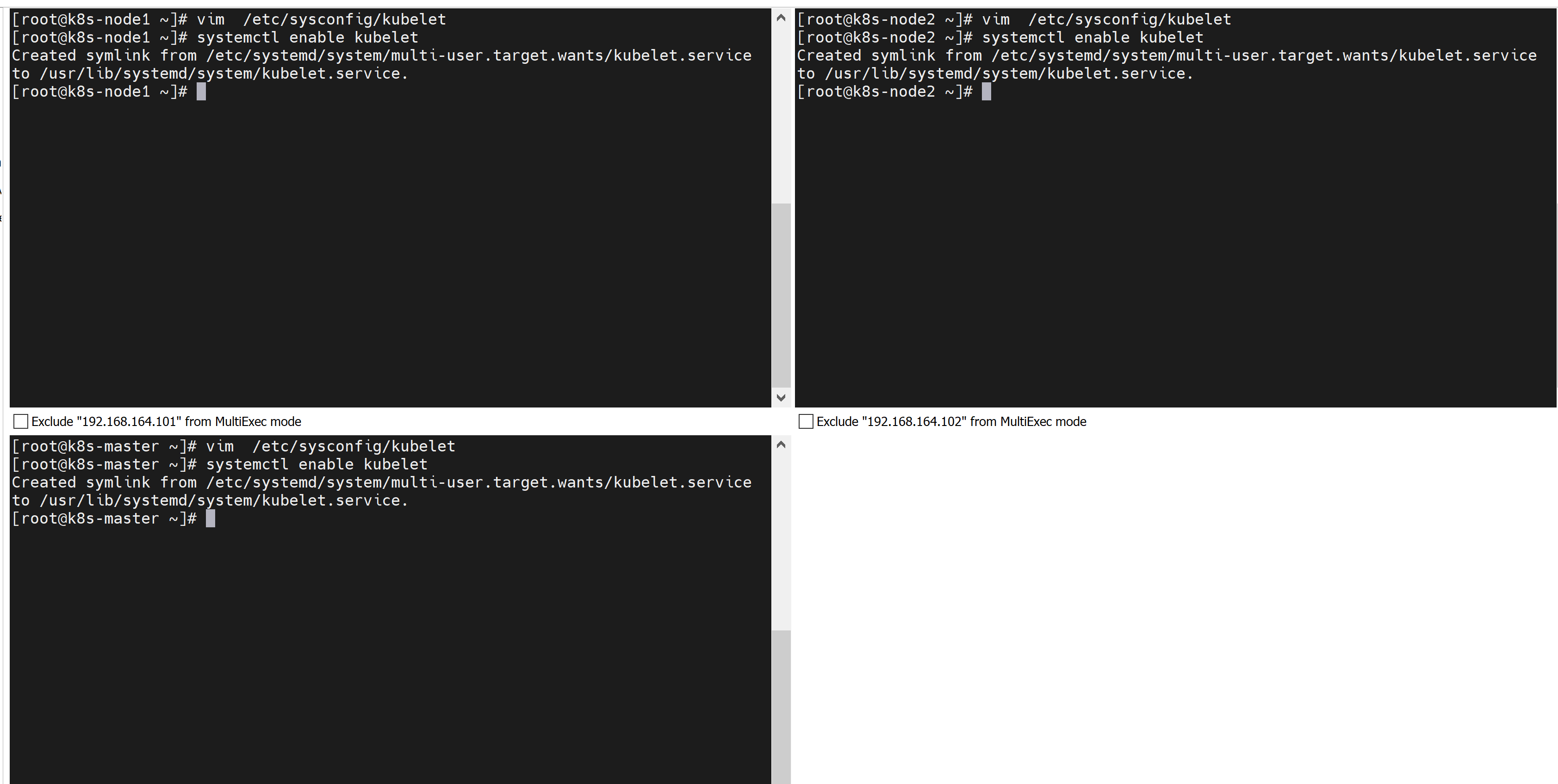

3.4. Enable kubelet at boot

systemctl enable kubelet

4. Initialize the cluster

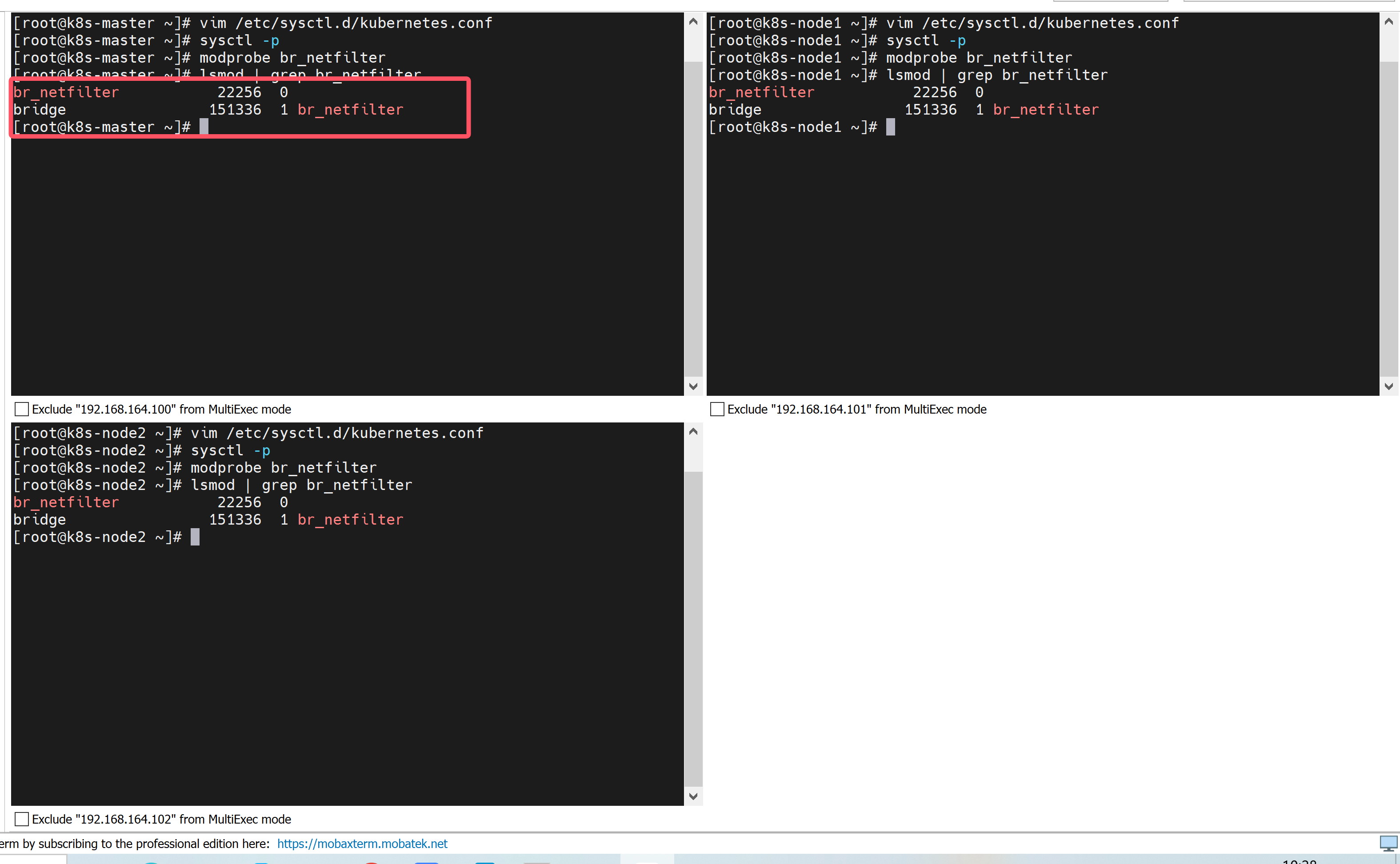

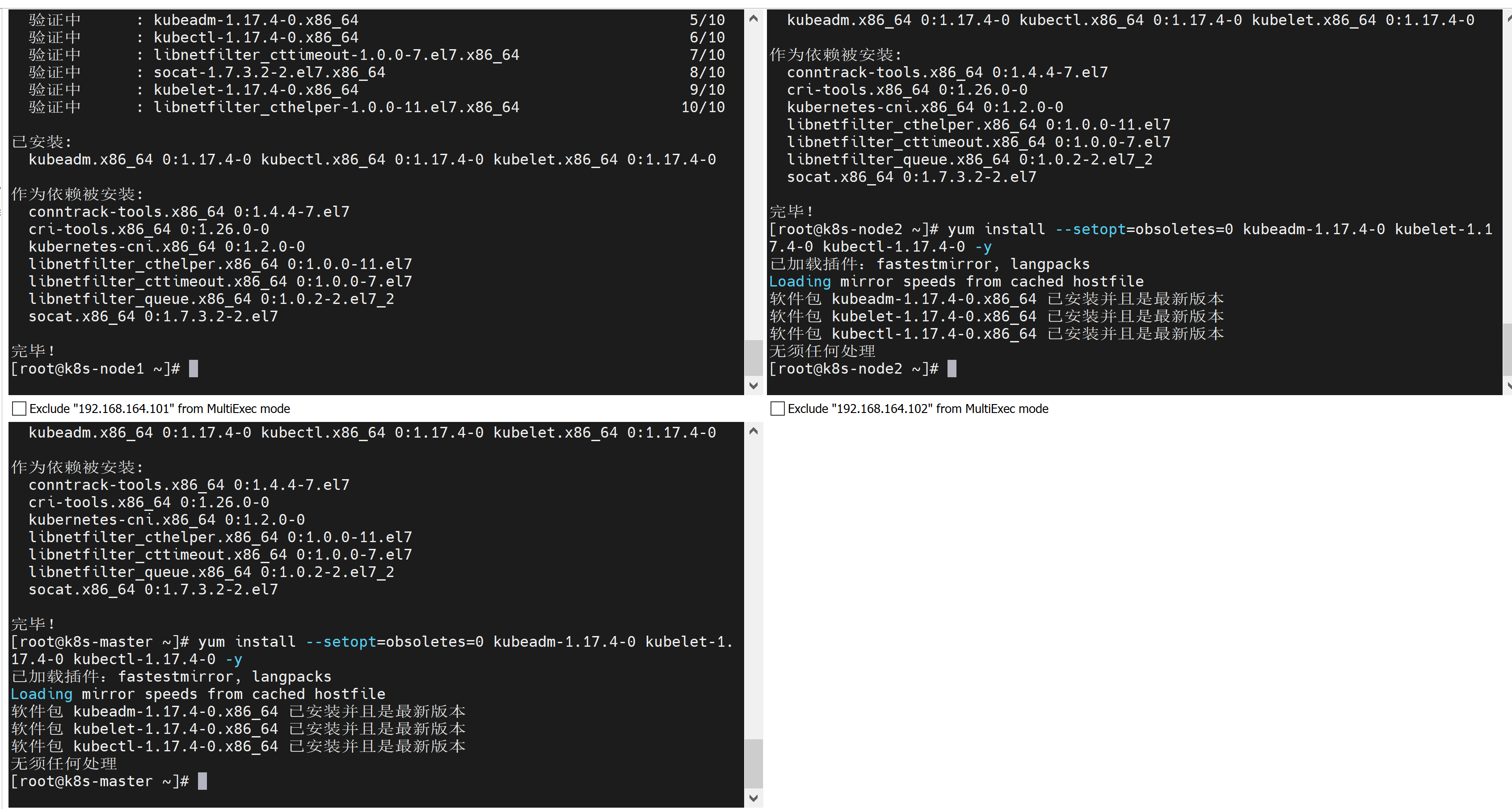

4.1. kubeadm init

Run the following command on the master node only:

kubeadm init \
--apiserver-advertise-address=192.168.164.100 \
--image-repository registry.aliyuncs.com/google_containers \
--kubernetes-version=v1.17.4 \
--pod-network-cidr=10.244.0.0/16 \
--service-cidr=10.96.0.0/12

[root@k8s-master ~]# kubeadm init \

> --apiserver-advertise-address=192.168.164.100 \

> --image-repository registry.aliyuncs.com/google_containers \

> --kubernetes-version=v1.17.4 \

> --pod-network-cidr=10.244.0.0/16 \

> --service-cidr=10.96.0.0/12

W0809 12:44:57.714115 8618 validation.go:28] Cannot validate kube-proxy config - no validator is available

W0809 12:44:57.714778 8618 validation.go:28] Cannot validate kubelet config - no validator is available

[init] Using Kubernetes version: v1.17.4

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.164.100]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-master localhost] and IPs [192.168.164.100 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-master localhost] and IPs [192.168.164.100 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

W0809 12:45:46.204702 8618 manifests.go:214] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[control-plane] Creating static Pod manifest for "kube-scheduler"

W0809 12:45:46.205353 8618 manifests.go:214] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 16.014650 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.17" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node k8s-master as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node k8s-master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: sc9l0r.z7tob2hw1ffn60kn

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

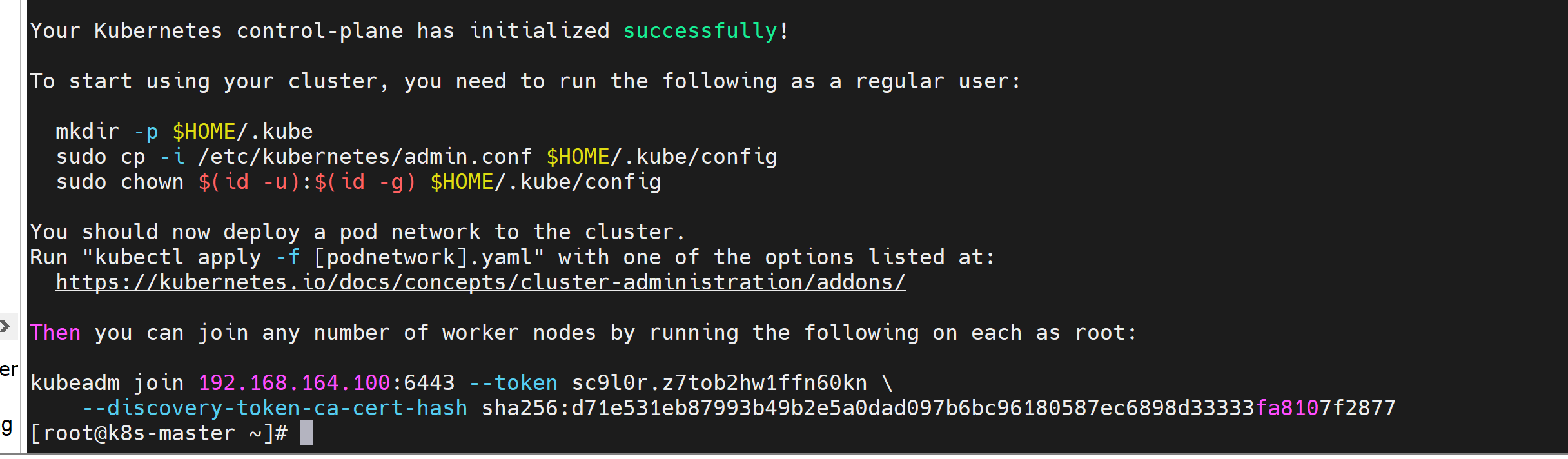

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.164.100:6443 --token sc9l0r.z7tob2hw1ffn60kn \

--discovery-token-ca-cert-hash sha256:d71e531eb87993b49b2e5a0dad097b6bc96180587ec6898d33333fa8107f2877

[root@k8s-master ~]#

4.2. Create the .kube directory

Following the instructions printed by kubeadm init, run these commands:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

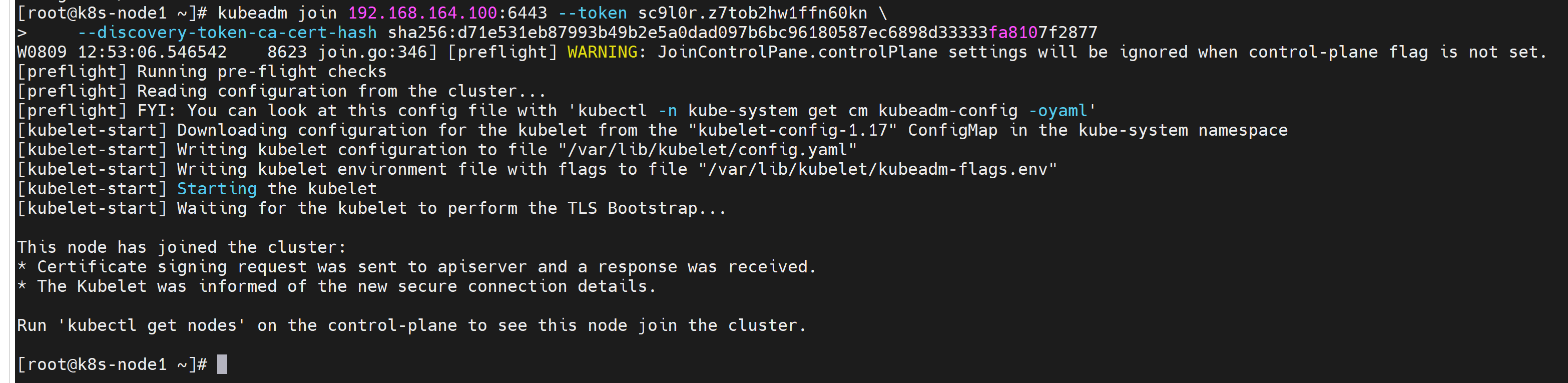

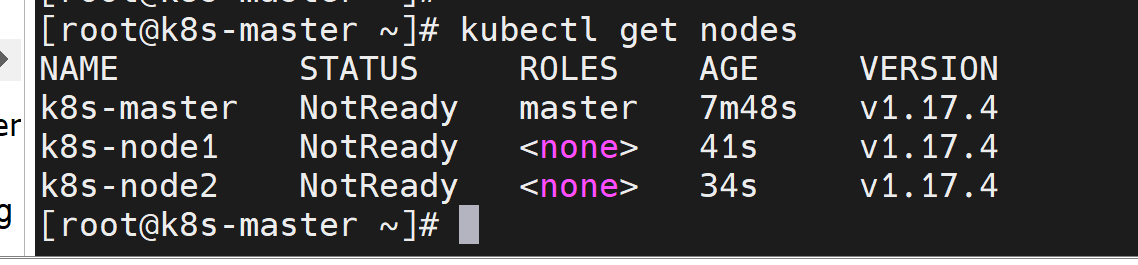

4.3. Join the nodes to the cluster

Following the instructions printed by kubeadm init, run this command on the worker nodes only:

kubeadm join 192.168.164.100:6443 --token sc9l0r.z7tob2hw1ffn60kn \
--discovery-token-ca-cert-hash sha256:d71e531eb87993b49b2e5a0dad097b6bc96180587ec6898d33333fa8107f2877

Check the result on the master node (for example, with kubectl get nodes):

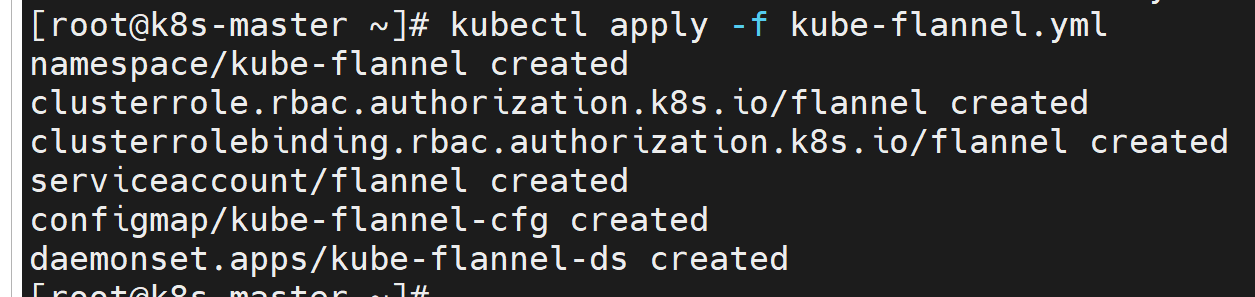
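Bootstrap tokens expire after 24 hours by default. If a node is added later, running kubeadm token create --print-join-command on the master prints a fresh join command. Before pasting a token and hash by hand, their shape can be sanity-checked; valid_join_args below is a hypothetical helper that only validates the format, not whether the cluster accepts the values.

```shell
# valid_join_args TOKEN HASH  (hypothetical helper)
# Succeeds when TOKEN has the form [a-z0-9]{6}.[a-z0-9]{16} and HASH is
# "sha256:" followed by 64 lowercase hex digits.
valid_join_args() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$' || return 1
  printf '%s\n' "$2" | grep -Eq '^sha256:[0-9a-f]{64}$' || return 1
}

# Usage:
#   valid_join_args "$token" "$hash" && echo "format looks fine"
```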

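5. Install the network plugin (on the master node only)

k8s supports several network plugins, such as flannel, calico, and canal. flannel is used here; its default pod network, 10.244.0.0/16, matches the --pod-network-cidr passed to kubeadm init.

flannel is deployed through a DaemonSet controller, so it runs on every node.

Download the flannel manifest:

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Apply it: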

kubectl apply -f kube-flannel.yml

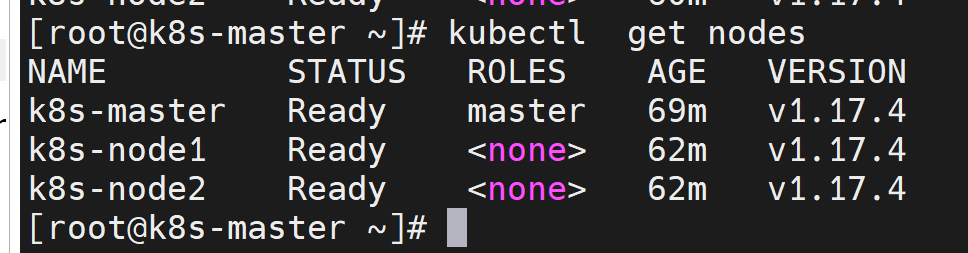

Wait a moment, then check again; the nodes should move to the Ready state:

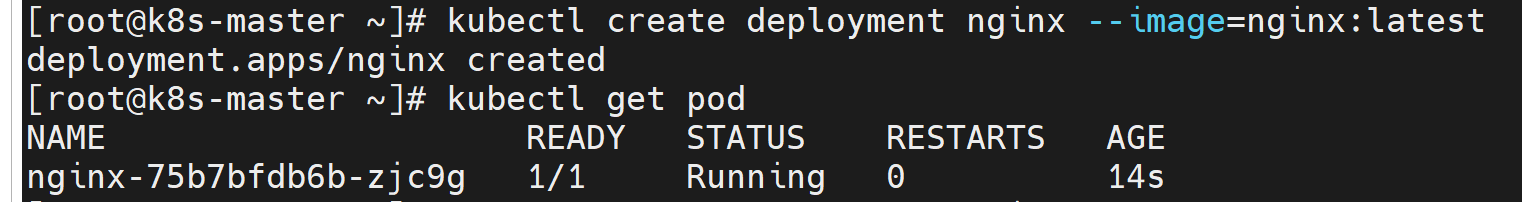
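Waiting can be turned into a quick check. The sketch below counts nodes whose STATUS column is anything other than Ready; count_not_ready is a hypothetical helper that reads the output of kubectl get nodes --no-headers on standard input.

```shell
# count_not_ready  (hypothetical helper)
# Reads `kubectl get nodes --no-headers` output on stdin and prints how many
# nodes have a STATUS (second column) other than exactly "Ready".
count_not_ready() {
  awk '$2 != "Ready" {n++} END {print n+0}'
}

# Usage (on the master node):
#   kubectl get nodes --no-headers | count_not_ready
```

A result of 0 means every listed node reports Ready.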

6. Test the environment

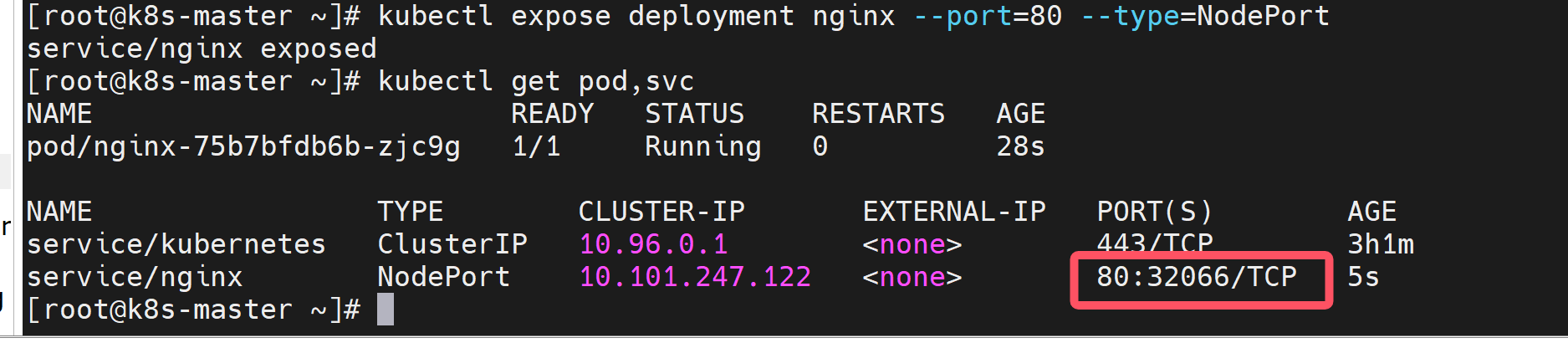

6.1. Deploy nginx with a Deployment

kubectl create deployment nginx --image=nginx:latest

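6.2. Expose nginx with a Service

kubectl expose deployment nginx --port=80 --type=NodePort

The pod and the service are both up, and the service was assigned node port 32066. The node port is allocated by the cluster, so it will likely differ on your setup.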

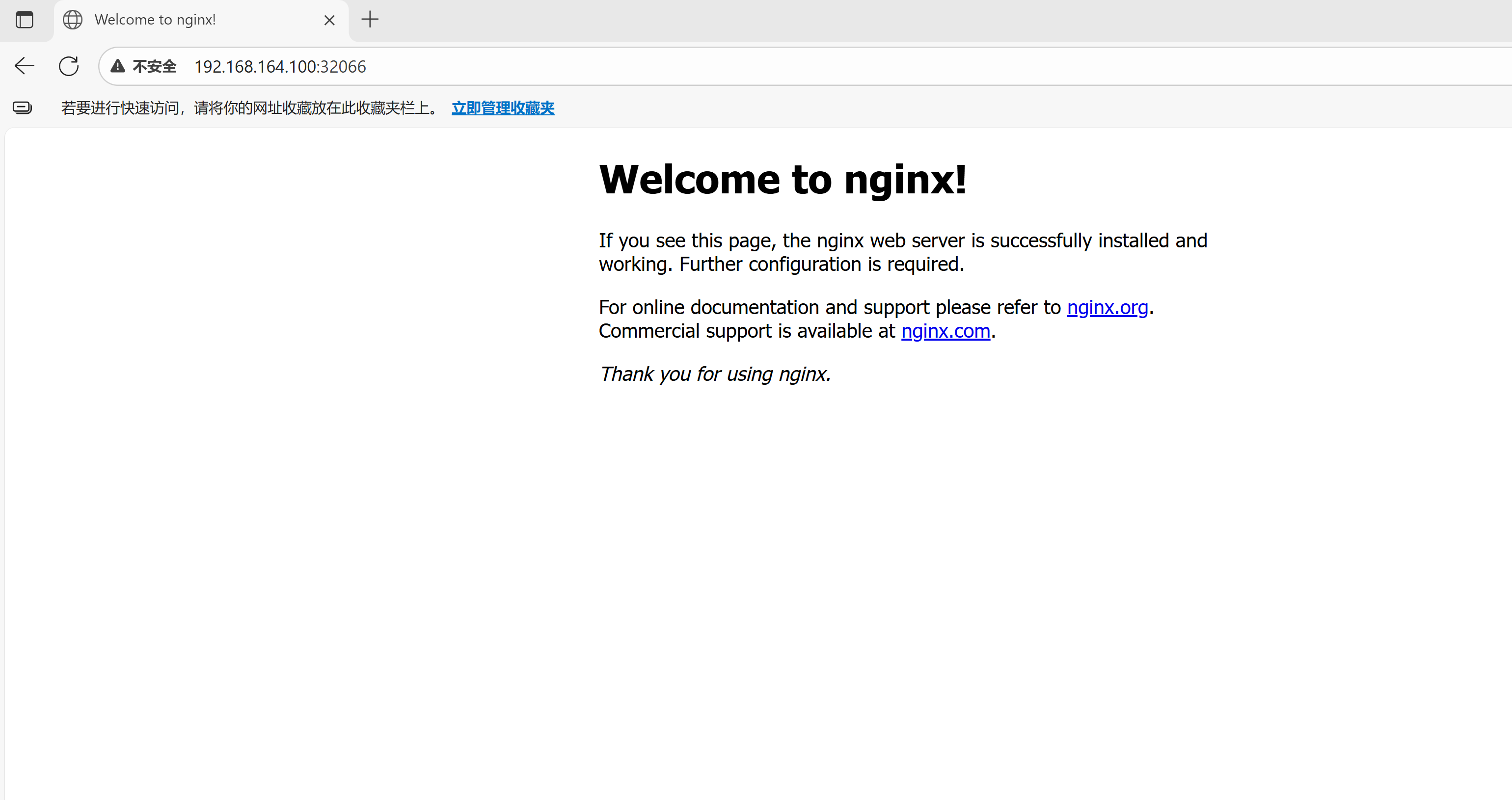

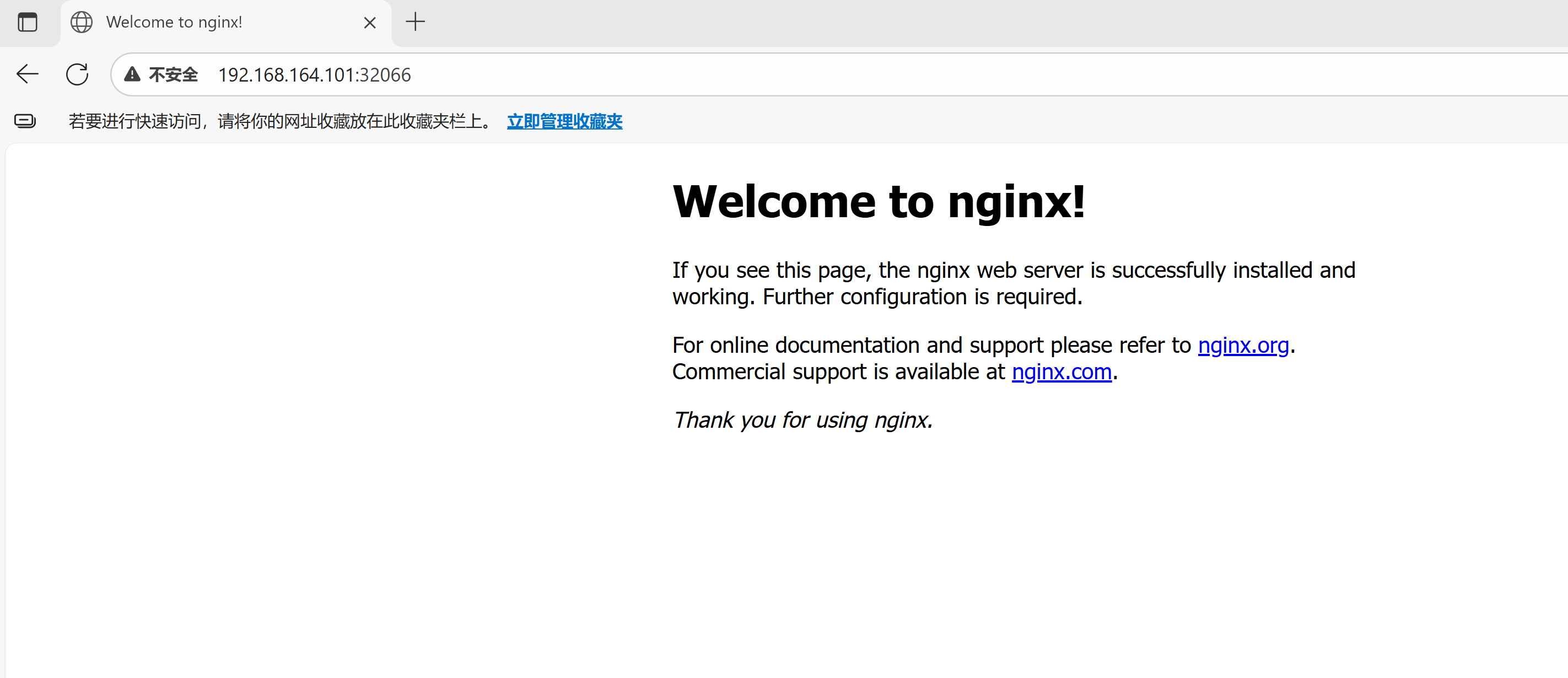

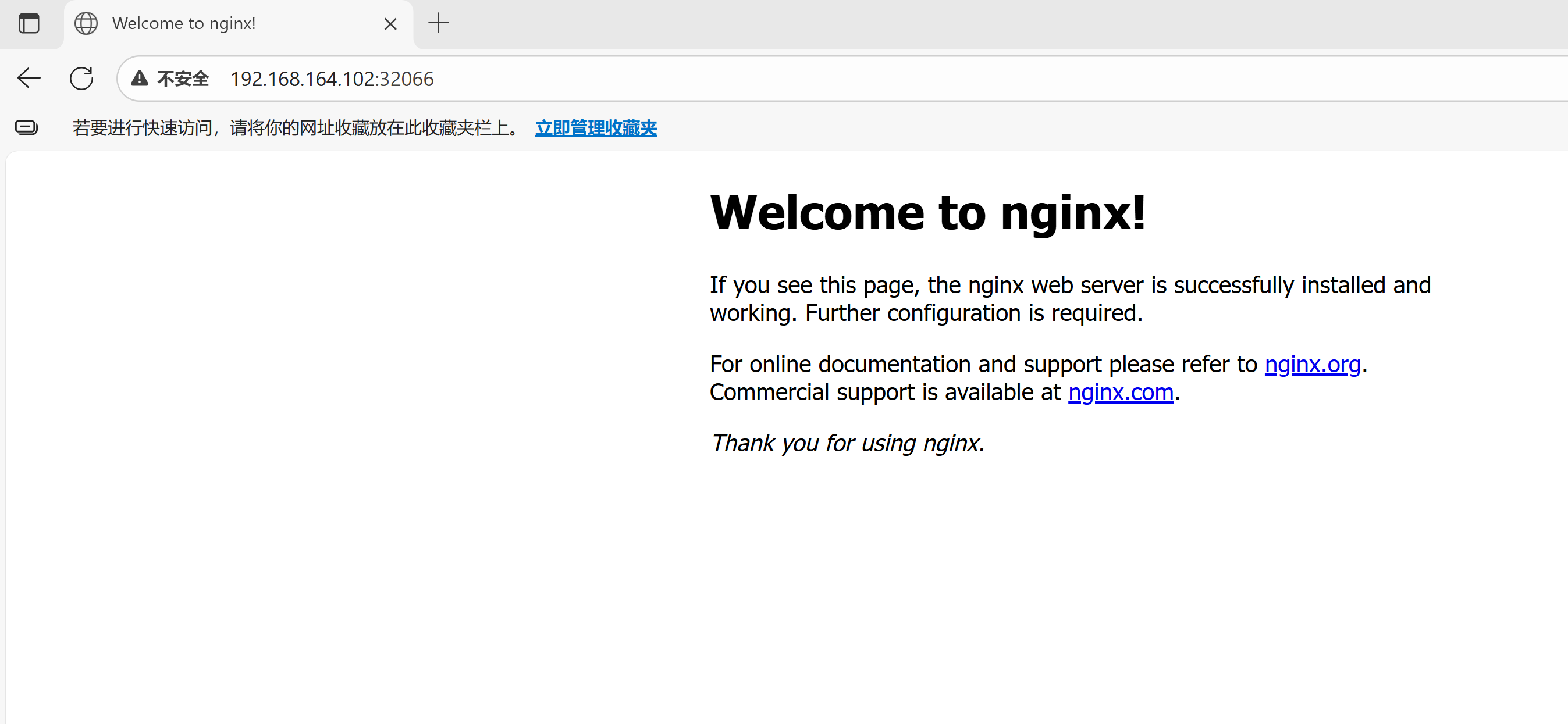

6.3. Access the nginx service

Open http://<IP of any node>:32066, substituting the node port assigned in your cluster.
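The node port can be read from the PORT(S) column of kubectl get svc, which has the form 80:32066/TCP. The sketch below extracts it from such a value; node_port_of is a hypothetical helper. On a live cluster, kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}' returns the same number directly.

```shell
# node_port_of PORTS  (hypothetical helper)
# Extracts the node port from a PORT(S) value such as "80:32066/TCP".
node_port_of() {
  printf '%s\n' "$1" | sed -n 's/^[0-9]*:\([0-9]*\)\/.*$/\1/p'
}

# Usage:
#   curl "http://192.168.164.101:$(node_port_of 80:32066/TCP)"
```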

With that, the k8s learning environment is ready.
