[CentOS] Containerized installation and configuration of EFAK (kafka-eagle), a visual management tool for ZooKeeper and Kafka

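Preface

As ZooKeeper and Kafka see ever wider adoption, the visual management tool EFAK has become very popular.

As of November 1, 2022, the latest release is EFAK (Eagle For Apache Kafka®) 3.0.1, released on Aug 30, 2022.

Build

The image is built on CentOS 7.9.2009 with Java 8.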

Dockerfile

FROM centos:7.9.2009

# Chinese language pack and basic network tools
RUN yum install -y kde-l10n-Chinese && \
    yum reinstall -y glibc-common && \
    yum install -y telnet net-tools wget && \
    yum clean all && \
    rm -rf /tmp/* /var/cache/yum/* && \
    localedef -c -f UTF-8 -i zh_CN zh_CN.UTF-8
RUN echo 'LC_ALL="zh_CN.UTF-8"' > /etc/locale.conf
RUN cat /etc/locale.conf
RUN cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime
ENV TZ=Asia/Shanghai
ENV LANG en_US.utf8
ENV LC_ALL en_US.utf8

# Install the latest Java 8 available for centos:7.9.2009 (currently 1.8.0_352)
RUN yum install -y java-1.8.0-openjdk-devel.x86_64 unzip

WORKDIR /usr/local/

# Install the latest kafka-eagle release
ARG KAFKA_EAGLE_VERSION=3.0.1
COPY kafka-eagle-bin-${KAFKA_EAGLE_VERSION}.tar.gz /usr/local
# Unpack the outer archive, then the efak-web archive inside it
RUN tar -zxvf kafka-eagle-bin-${KAFKA_EAGLE_VERSION}.tar.gz && \
    tar -zxvf kafka-eagle-bin-${KAFKA_EAGLE_VERSION}/efak-web-${KAFKA_EAGLE_VERSION}-bin.tar.gz && \
    ln -s /usr/local/efak-web-${KAFKA_EAGLE_VERSION} kafka-eagle && \
    chmod +x kafka-eagle/bin/ke.sh && \
    rm -rf kafka-eagle-bin-${KAFKA_EAGLE_VERSION}.tar.gz
ENV KE_HOME=/usr/local/kafka-eagle

# Configuration files and the startup script
COPY *.properties /usr/local/kafka-eagle/conf/
COPY works /usr/local/kafka-eagle/conf/
COPY restart.sh /
RUN chmod +x /restart.sh

CMD [ "/restart.sh" ]
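The Dockerfile copies several files from the build context, so a missing file only shows up as a failed COPY step. As a minimal sketch, a pre-build check could look like the following. The function name check_build_context is hypothetical, and the file list is an assumption read off the COPY lines above.

```shell
#!/bin/bash
# Hypothetical helper: verify that the build context contains every file
# the Dockerfile's COPY instructions expect. Prints each missing file and
# returns non-zero if any is absent.
check_build_context() {
    local dir=$1 version=${2:-3.0.1} missing=0 f
    for f in "kafka-eagle-bin-${version}.tar.gz" system-config.properties works restart.sh; do
        if [ ! -e "$dir/$f" ]; then
            echo "missing: $f"
            missing=1
        fi
    done
    return $missing
}
```

A possible use is `check_build_context . 3.0.1 && docker build -t 7014253/kafka-eagle:1.0 .`, where the tag matches the image name used in the compose file later in this post.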

system-config.properties

This is the default configuration file shipped in the EFAK archive.

######################################

# multi zookeeper & kafka cluster list

# Settings prefixed with 'kafka.eagle.' will be deprecated, use 'efak.' instead

######################################

efak.zk.cluster.alias=cluster1,cluster2

cluster1.zk.list=tdn1:2181,tdn2:2181,tdn3:2181

cluster2.zk.list=xdn10:2181,xdn11:2181,xdn12:2181

######################################

# zookeeper enable acl

######################################

cluster1.zk.acl.enable=false

cluster1.zk.acl.schema=digest

cluster1.zk.acl.username=test

cluster1.zk.acl.password=test123

######################################

# broker size online list

######################################

cluster1.efak.broker.size=20

######################################

# zk client thread limit

######################################

kafka.zk.limit.size=16

######################################

# EFAK webui port

######################################

efak.webui.port=8048

######################################

# EFAK enable distributed

######################################

efak.distributed.enable=false

efak.cluster.mode.status=master

efak.worknode.master.host=localhost

efak.worknode.port=8085

######################################

# kafka jmx acl and ssl authenticate

######################################

cluster1.efak.jmx.acl=false

cluster1.efak.jmx.user=keadmin

cluster1.efak.jmx.password=keadmin123

cluster1.efak.jmx.ssl=false

cluster1.efak.jmx.truststore.location=/data/ssl/certificates/kafka.truststore

cluster1.efak.jmx.truststore.password=ke123456

######################################

# kafka offset storage

######################################

cluster1.efak.offset.storage=kafka

cluster2.efak.offset.storage=zk

######################################

# kafka jmx uri

######################################

cluster1.efak.jmx.uri=service:jmx:rmi:///jndi/rmi://%s/jmxrmi

######################################

# kafka metrics, 15 days by default

######################################

efak.metrics.charts=true

efak.metrics.retain=15

######################################

# kafka sql topic records max

######################################

efak.sql.topic.records.max=5000

efak.sql.topic.preview.records.max=10

######################################

# delete kafka topic token

######################################

efak.topic.token=keadmin

######################################

# kafka sasl authenticate

######################################

cluster1.efak.sasl.enable=false

cluster1.efak.sasl.protocol=SASL_PLAINTEXT

cluster1.efak.sasl.mechanism=SCRAM-SHA-256

cluster1.efak.sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required username="kafka" password="kafka-eagle";

cluster1.efak.sasl.client.id=

cluster1.efak.blacklist.topics=

cluster1.efak.sasl.cgroup.enable=false

cluster1.efak.sasl.cgroup.topics=

cluster2.efak.sasl.enable=false

cluster2.efak.sasl.protocol=SASL_PLAINTEXT

cluster2.efak.sasl.mechanism=PLAIN

cluster2.efak.sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="kafka" password="kafka-eagle";

cluster2.efak.sasl.client.id=

cluster2.efak.blacklist.topics=

cluster2.efak.sasl.cgroup.enable=false

cluster2.efak.sasl.cgroup.topics=

######################################

# kafka ssl authenticate

######################################

cluster3.efak.ssl.enable=false

cluster3.efak.ssl.protocol=SSL

cluster3.efak.ssl.truststore.location=

cluster3.efak.ssl.truststore.password=

cluster3.efak.ssl.keystore.location=

cluster3.efak.ssl.keystore.password=

cluster3.efak.ssl.key.password=

cluster3.efak.ssl.endpoint.identification.algorithm=https

cluster3.efak.blacklist.topics=

cluster3.efak.ssl.cgroup.enable=false

cluster3.efak.ssl.cgroup.topics=

######################################

# kafka sqlite jdbc driver address

######################################

#efak.driver=org.sqlite.JDBC

#efak.url=jdbc:sqlite:/hadoop/kafka-eagle/db/ke.db

#efak.username=root

#efak.password=www.kafka-eagle.org

######################################

# kafka mysql jdbc driver address

######################################

efak.driver=com.mysql.cj.jdbc.Driver

efak.url=jdbc:mysql://127.0.0.1:3306/ke?useUnicode=true&characterEncoding=UTF-8&zeroDateTimeBehavior=convertToNull

efak.username=root

efak.password=123456
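Every alias listed in efak.zk.cluster.alias needs a matching `<alias>.zk.list` entry, and a mismatch between the two is easy to miss. The sketch below checks this for a properties file. The function name check_cluster_aliases is hypothetical, and it assumes that the last efak.zk.cluster.alias line in the file is the effective one.

```shell
#!/bin/bash
# Hypothetical helper: for every alias in efak.zk.cluster.alias, check that
# a matching "<alias>.zk.list=" line exists in the properties file.
check_cluster_aliases() {
    local file=$1 aliases alias rc=0
    # Take the last definition, assuming later lines override earlier ones
    aliases=$(sed -n 's/^efak\.zk\.cluster\.alias=//p' "$file" | tail -n 1)
    for alias in ${aliases//,/ }; do
        if ! grep -q "^${alias}\.zk\.list=" "$file"; then
            echo "no zk.list for alias: $alias"
            rc=1
        fi
    done
    return $rc
}
```

Running `check_cluster_aliases conf/system-config.properties` before starting EFAK prints one line per alias that has no ZooKeeper address list.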

works

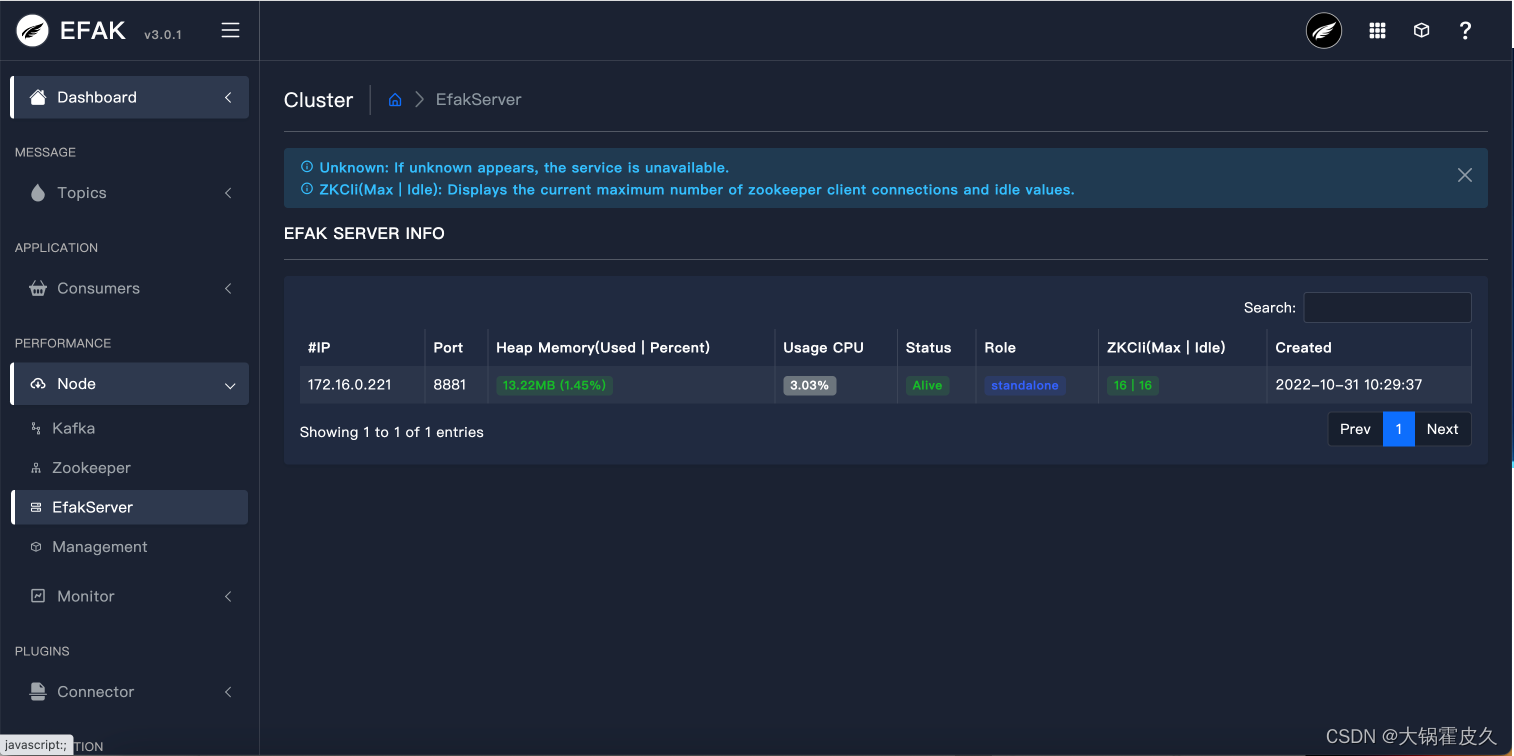

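Because this is a single-node deployment, creating an empty works file is enough. If the file is not empty, the EFAK SERVER INFO page in the EFAK web UI lists multiple connection addresses.

PS: Even if EFAK goes down, the data is not affected, so a standalone deployment is sufficient.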

restart.sh

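This script is the real secret technique of this setup. Explaining it at length would not help much, so please study it yourself.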

#!/bin/bash
# bash is required because the script uses [[ ... =~ ... ]]

base_dir=/usr/local/kafka-eagle
s_f=$base_dir/conf/system-config.properties

for VAR in $(env)
do
    # Split at the first "=" so that the key stays intact
    KEY=${VAR%%=*}
    VALUE=${VAR#*=}
    # Skip empty values
    if [ -z "$VALUE" ]; then
        continue
    fi
    # par.<key>: replace the existing line for <key> in the config file
    if [[ "$KEY" =~ ^par\. ]]
    then
        REAL_KEY=${KEY#par.}
        # Escape the special character /
        VALUE=$(echo $VALUE|sed 's/\//\\\//g')
        #sed -i "/^$REAL_KEY/d" $s_f
        #sed -i '$a'" $SERVER_ID_INFO" ${zk_home}/conf/zoo.cfg
        sed -i "/^$REAL_KEY/c$REAL_KEY=$VALUE" $s_f
    fi
    # add.<key>: append <key>=<value> to the end of the config file
    if [[ "$KEY" =~ ^add\. ]]
    then
        REAL_KEY=${KEY#add.}
        echo "$REAL_KEY=$VALUE" >> $s_f
    fi
done

# Replace the placeholders with a space and an equals sign
sed -i 's/{SYMBOL_SPACE}/ /g' $s_f
sed -i 's/{SYMBOL_EQUAL}/=/g' $s_f

# Set the Java path
export JAVA_HOME=${JAVA_HOME:=/usr}

#/usr/local/kafka-eagle/bin/ke.sh start
# Start in cluster mode; in standalone mode EFAK SERVER INFO cannot be read
/usr/local/kafka-eagle/bin/ke.sh cluster start

# Keep the container running in the foreground
while true
do
    sleep 10s
done
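To see what the par. and add. prefixes do without starting a container, the convention can be tried on a scratch file. This is a simplified sketch, not the script itself: apply_override is a hypothetical name, it splits each variable at the first "=", and it uses a sed `s` command with "|" as the delimiter instead of the `c` command, so values containing "|", "&" or backslashes are not handled. GNU sed is assumed, as on CentOS.

```shell
#!/bin/bash
# Sketch of the par./add. convention applied to a single "name=value" pair.
apply_override() {
    local file=$1 var=$2
    local key=${var%%=*}      # text before the first "="
    local value=${var#*=}     # text after the first "="
    case $key in
        par.*)
            key=${key#par.}
            # Replace the existing "<key>=..." line in place
            sed -i "s|^${key}=.*|${key}=${value}|" "$file"
            ;;
        add.*)
            # Append a new "<key>=<value>" line
            echo "${key#add.}=${value}" >> "$file"
            ;;
    esac
}

# Demo on a temporary file
cfg=$(mktemp)
printf 'efak.webui.port=8048\n' > "$cfg"
apply_override "$cfg" 'par.efak.webui.port=8888'
apply_override "$cfg" 'add.cluster_test.efak.offset.storage=kafka'
cat "$cfg"
```

After the demo the file holds the rewritten port line followed by the appended cluster_test line, which mirrors how the env file entries end up in system-config.properties.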

Compose

kafka-eagle.env

par.efak.zk.cluster.alias=cluster_test

par.efak.webui.port=8888

par.efak.topic.token=keadmin

# DB: use sqlite

par.efak.driver=org.sqlite.JDBC

par.efak.url=jdbc:sqlite:/usr/local/kafka-eagle/db/ke.db

par.efak.username=root

par.efak.password=123456

######################################

# EFAK enable distributed

######################################

par.efak.distributed.enable=false

par.efak.cluster.mode.status=master

par.efak.worknode.master.host=localhost

par.efak.worknode.port=8881

######################################

# cluster test

######################################

add.cluster_test.zk.list=10.10.0.11:12181,10.10.0.12:12182,10.10.0.13:12183

add.cluster_test.efak.sasl.enable=true

add.cluster_test.efak.sasl.protocol=SASL_PLAINTEXT

add.cluster_test.efak.sasl.mechanism=PLAIN

add.cluster_test.efak.sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule{SYMBOL_SPACE}required{SYMBOL_SPACE}username{SYMBOL_EQUAL}"admin"{SYMBOL_SPACE}password{SYMBOL_EQUAL}"test888";

add.cluster_test.efak.sasl.client.id=kafka-eagle-client-local

add.cluster_test.efak.blacklist.topics=

add.cluster_test.efak.sasl.cgroup.enable=false

add.cluster_test.efak.sasl.cgroup.topics=

add.cluster_test.efak.jmx.acl=false

add.cluster_test.efak.jmx.user=keadmin

add.cluster_test.efak.jmx.password=keadmin123

add.cluster_test.efak.jmx.ssl=false

add.cluster_test.efak.jmx.truststore.location=/data/ssl/certificates/kafka.truststore

add.cluster_test.efak.jmx.truststore.password=ke123456

add.cluster_test.zk.acl.enable=false

add.cluster_test.zk.acl.schema=digest

add.cluster_test.zk.acl.username=test

add.cluster_test.zk.acl.password=test123

add.cluster_test.efak.broker.size=20

add.cluster_test.efak.offset.storage=kafka

add.cluster_test.efak.jmx.uri=service:jmx:rmi:///jndi/rmi://%s/jmxrmi
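Values that contain spaces or "=" (such as the sasl.jaas.config line) are written with the {SYMBOL_SPACE} and {SYMBOL_EQUAL} placeholders, which restart.sh turns back into real characters. Encoding such a value by hand is error-prone, so a small helper can do it. The function name encode_env_value is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: encode spaces and "=" in a value so that it can be
# written into kafka-eagle.env and decoded again by restart.sh.
encode_env_value() {
    local v=$1
    local space='{SYMBOL_SPACE}' equal='{SYMBOL_EQUAL}'
    v=${v// /$space}   # every space becomes {SYMBOL_SPACE}
    v=${v//=/$equal}   # every "=" becomes {SYMBOL_EQUAL}
    printf '%s\n' "$v"
}
```

For example, `encode_env_value 'required username="admin"'` prints `required{SYMBOL_SPACE}username{SYMBOL_EQUAL}"admin"`, which can be pasted after the `add.<alias>.efak.sasl.jaas.config=` key.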

docker-compose.yml

version: "3.8"
services:
  kafka-eagle:
    image: 7014253/kafka-eagle:1.0
    hostname: kafka.eagle
    container_name: test.kafka-eagle
    network_mode: host
    healthcheck:
      test: "ps axu | grep -v 'grep' | grep 'kafka-eagle'"
      interval: 10s
      timeout: 5s
      retries: 5
      start_period: 10s
    volumes:
      - /data/kafka-eagle/logs:/usr/local/kafka-eagle/logs
    env_file:
      - kafka-eagle.env
    deploy:
      resources:
        limits:
          memory: 4G
          cpus: "4"
    restart: unless-stopped
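Because the service uses host networking, the web UI listens directly on the host at the port set by par.efak.webui.port. The sketch below reads that port from the env file and prints the address to open. The function name webui_url is hypothetical, and the fallback port 8048 is taken from the default system-config.properties shown earlier.

```shell
#!/bin/bash
# Hypothetical helper: read par.efak.webui.port from the env file and print
# the address where the EFAK web UI should be reachable.
webui_url() {
    local env_file=$1 host=${2:-localhost} port
    port=$(sed -n 's/^par\.efak\.webui\.port=//p' "$env_file" | tail -n 1)
    # Fall back to the default port when the env file does not override it
    echo "http://${host}:${port:-8048}"
}
```

A typical sequence would be `docker-compose up -d` followed by `webui_url kafka-eagle.env`, which prints http://localhost:8888 for the env file in this post.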

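ZooKeeper notes

The four-letter-word command whitelist must allow the commands kafka-eagle uses. If they are disabled in your ZooKeeper, enable them explicitly; otherwise kafka-eagle cannot read the ZooKeeper version.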

SERVER_JVMFLAGS=-Dzookeeper.4lw.commands.whitelist=*
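Whether the whitelist is effective can be checked from the EFAK host by sending the `srvr` command and looking for the version line. The sketch below uses bash's /dev/tcp redirection so that no extra tool is needed. The function names are hypothetical, and the reply format ("Zookeeper version: ..., built on ...") is an assumption based on typical ZooKeeper output.

```shell
#!/bin/bash
# Send the four-letter command "srvr" to a ZooKeeper server and print the
# reply. Gives up after 5 seconds.
zk_srvr() {
    timeout 5 bash -c 'exec 3<>"/dev/tcp/$1/$2" && printf srvr >&3 && cat <&3' \
        _ "$1" "${2:-2181}"
}

# Read a srvr reply on stdin and print the version string.
# Returns non-zero when no version line is present, for example when the
# command was rejected by the whitelist.
zk_version() {
    sed -n 's/^Zookeeper version: \([^,]*\),.*/\1/p' | grep .
}
```

With a reachable server, `zk_srvr 10.10.0.11 12181 | zk_version` prints the version, and a non-zero exit status suggests that the whitelist still needs to be opened.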

kafka注意事项

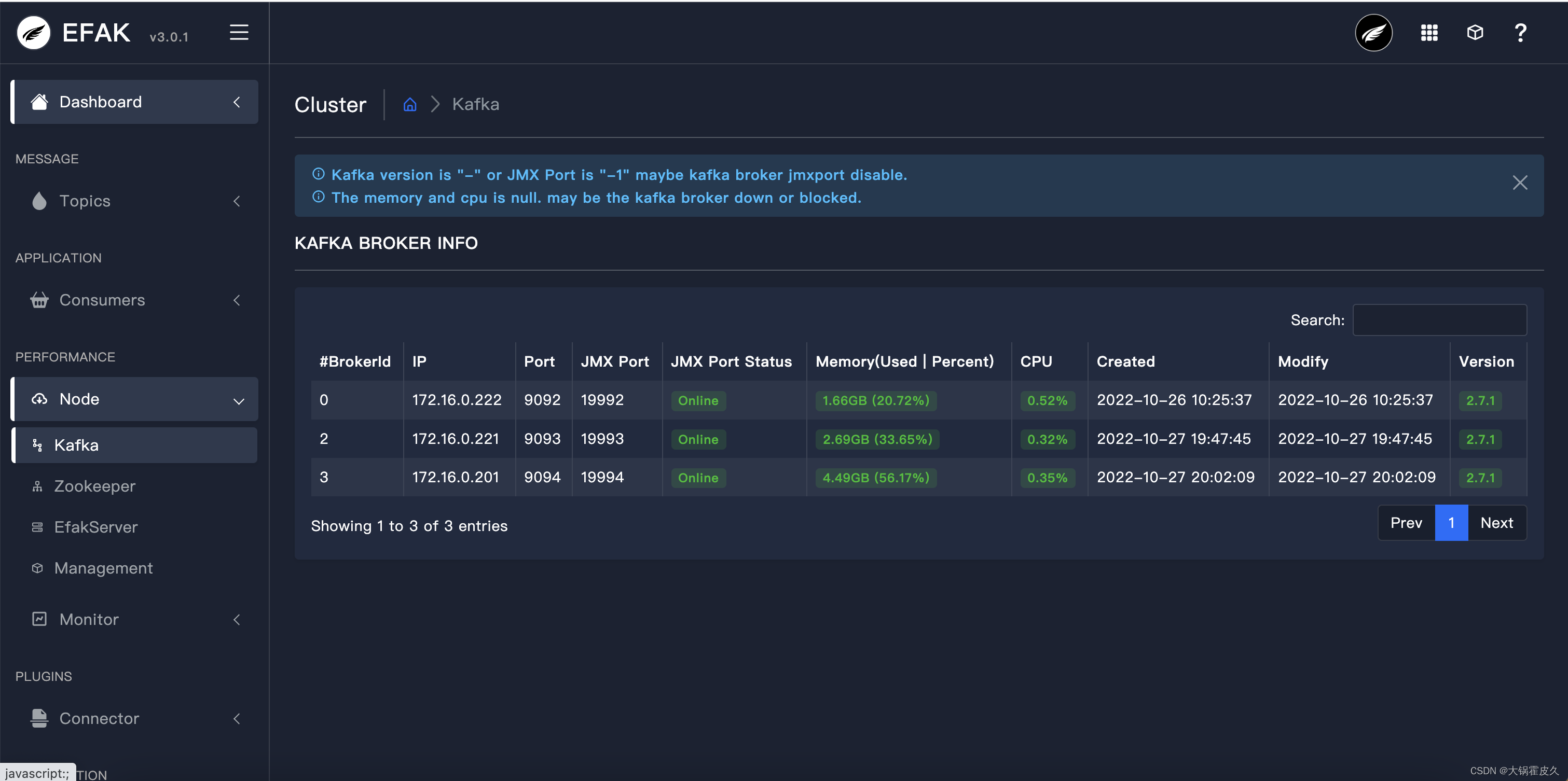

如果服务器网络所有端口都互通,那么-Dcom.sun.management.jmxremote.rmi.port=9998不用配,因为是如果不配,就会是随机端口,故需要自定义指定JMX端口。如果网络不通会导致无法读取到kafka的使用率,如CPU、内存、版本号等

KAFKA_HEAP_OPTS=-Dcom.sun.management.jmxremote.rmi.port=19902
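Once the RMI port is pinned, it is worth confirming from the EFAK host that the port is actually reachable. The following is a minimal sketch using bash's /dev/tcp redirection. The function name port_open is hypothetical, and the 3-second timeout is an arbitrary choice.

```shell
#!/bin/bash
# Return 0 if a TCP connection to host:port succeeds within 3 seconds,
# non-zero otherwise.
port_open() {
    timeout 3 bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$1" "$2" 2>/dev/null
}
```

For example, `port_open 10.10.0.11 19902 || echo "JMX RMI port not reachable"` gives a quick signal before looking for missing metrics in the EFAK UI.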

演示

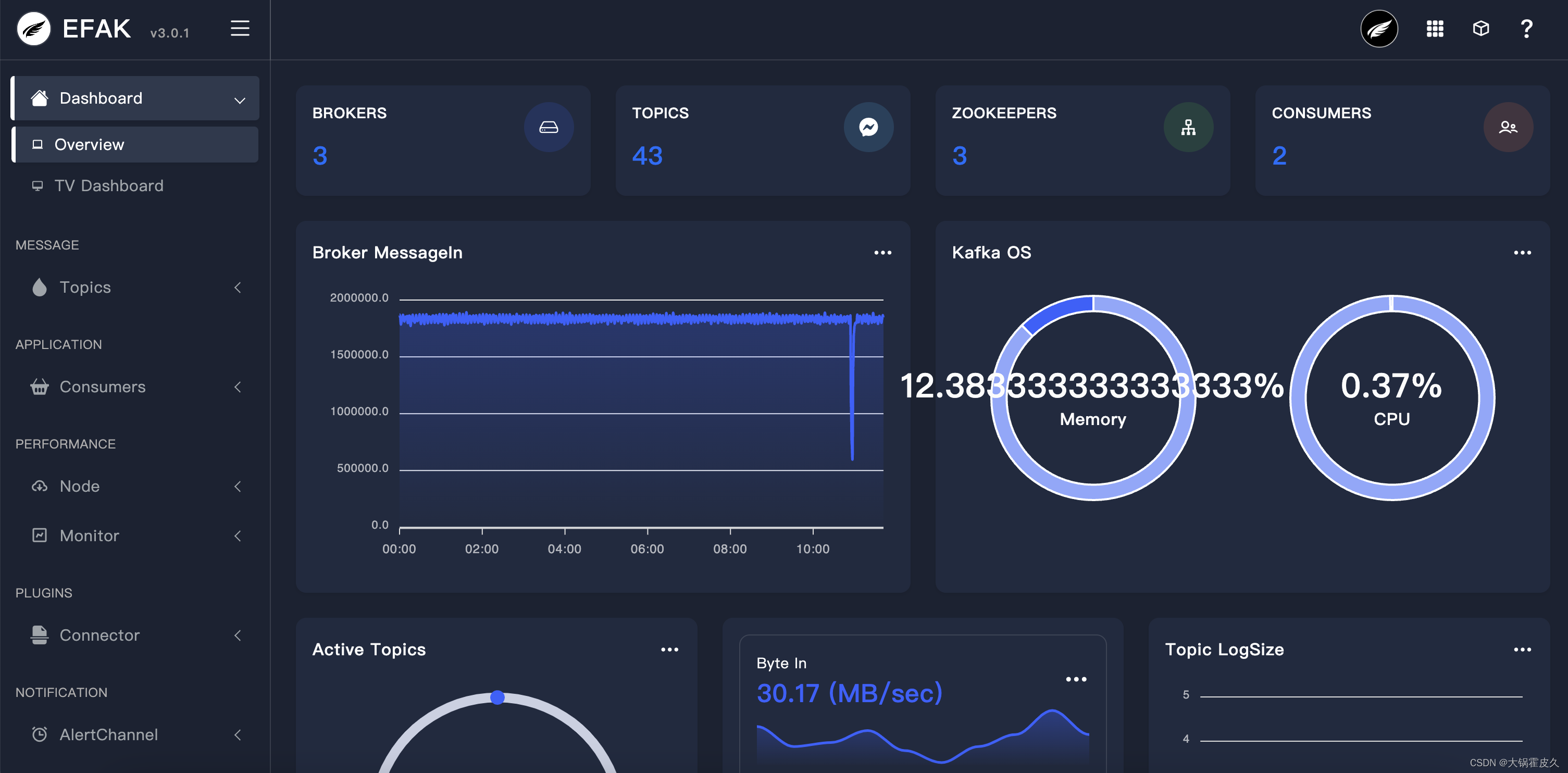

与2.*不同的新界面

首页

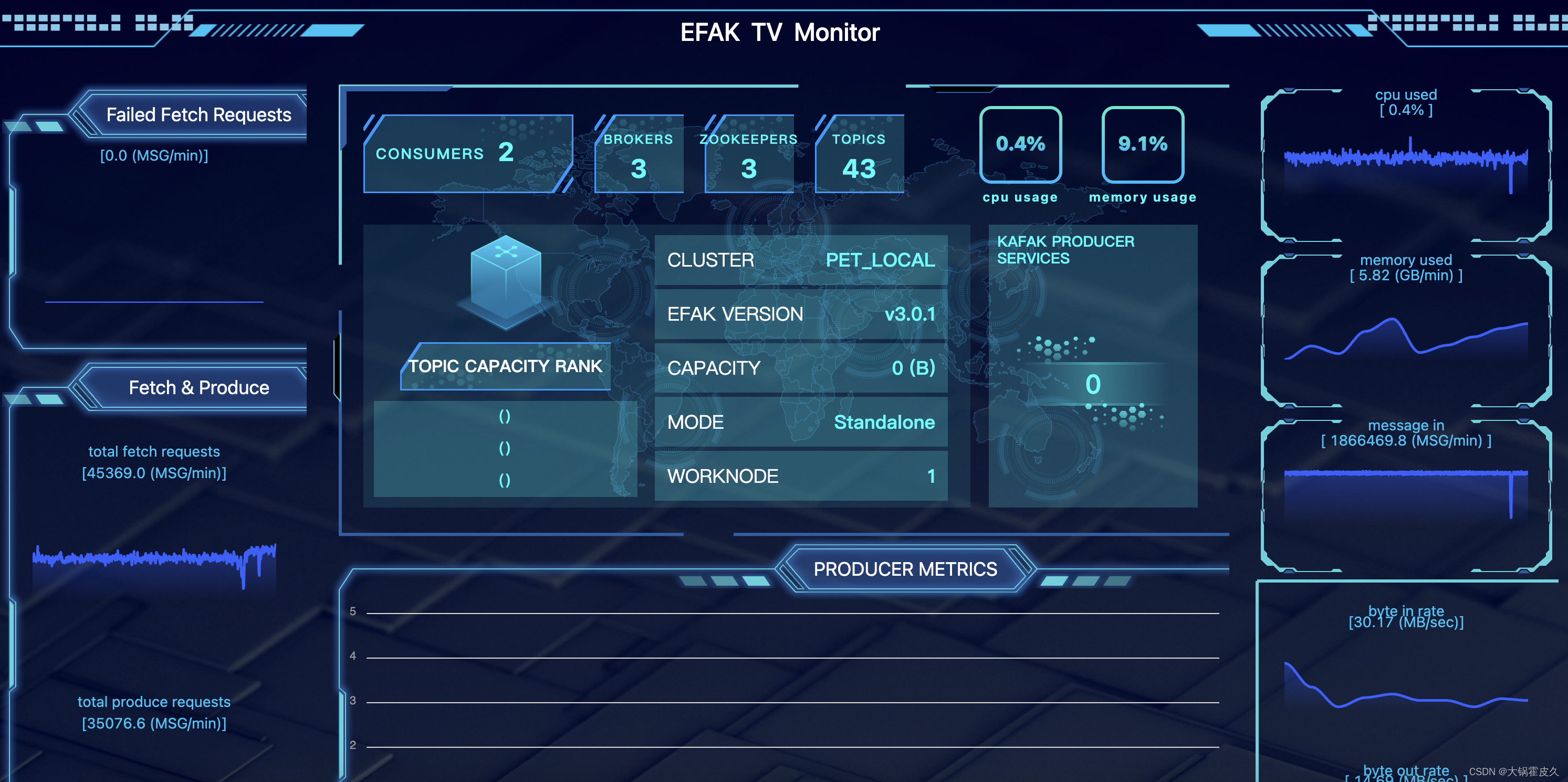

TV Dashboard

A full-screen, high-tech look

KAFKA BROKER INFO

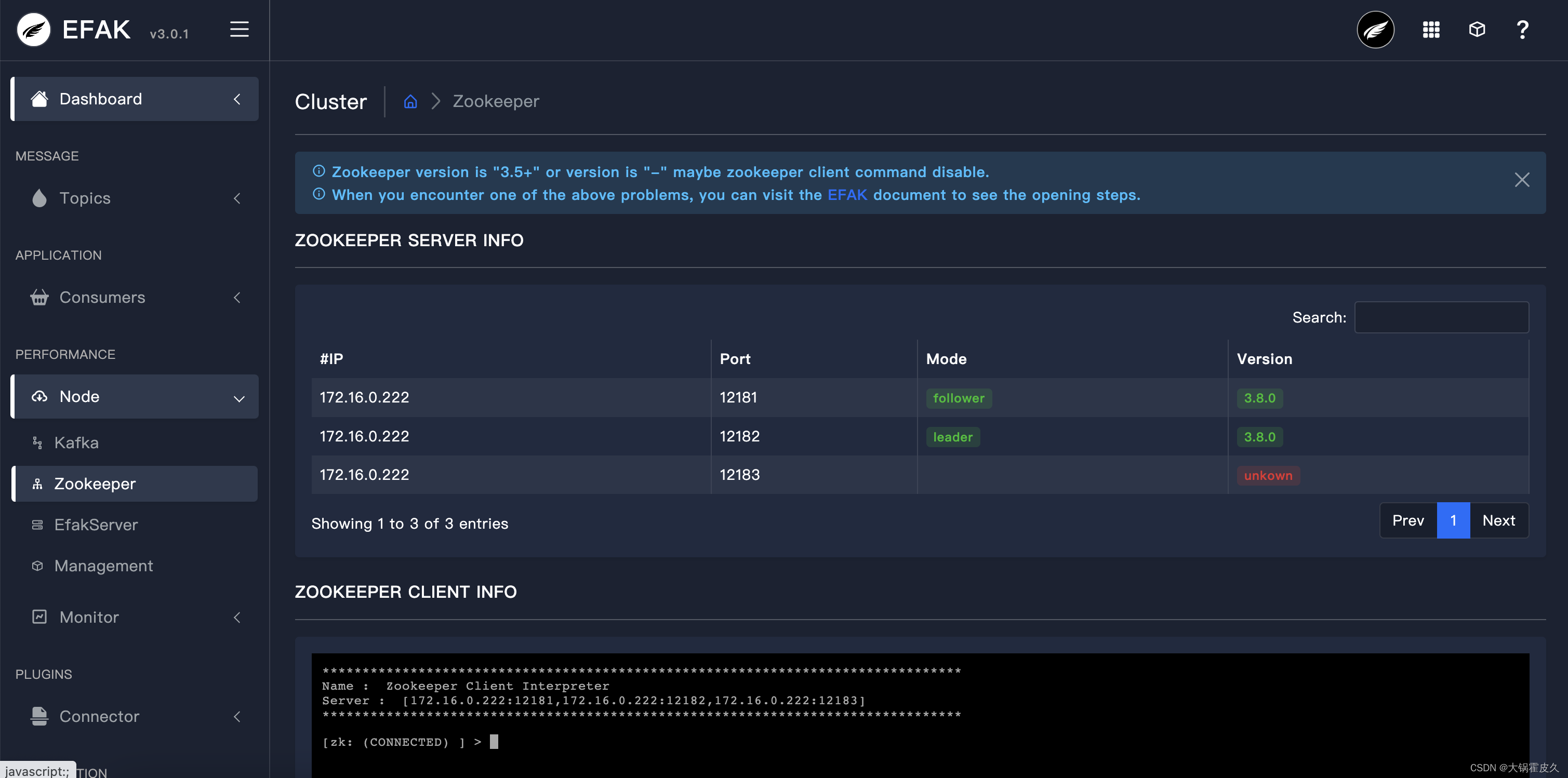

ZOOKEEPER SERVER INFO

EFAK SERVER INFO

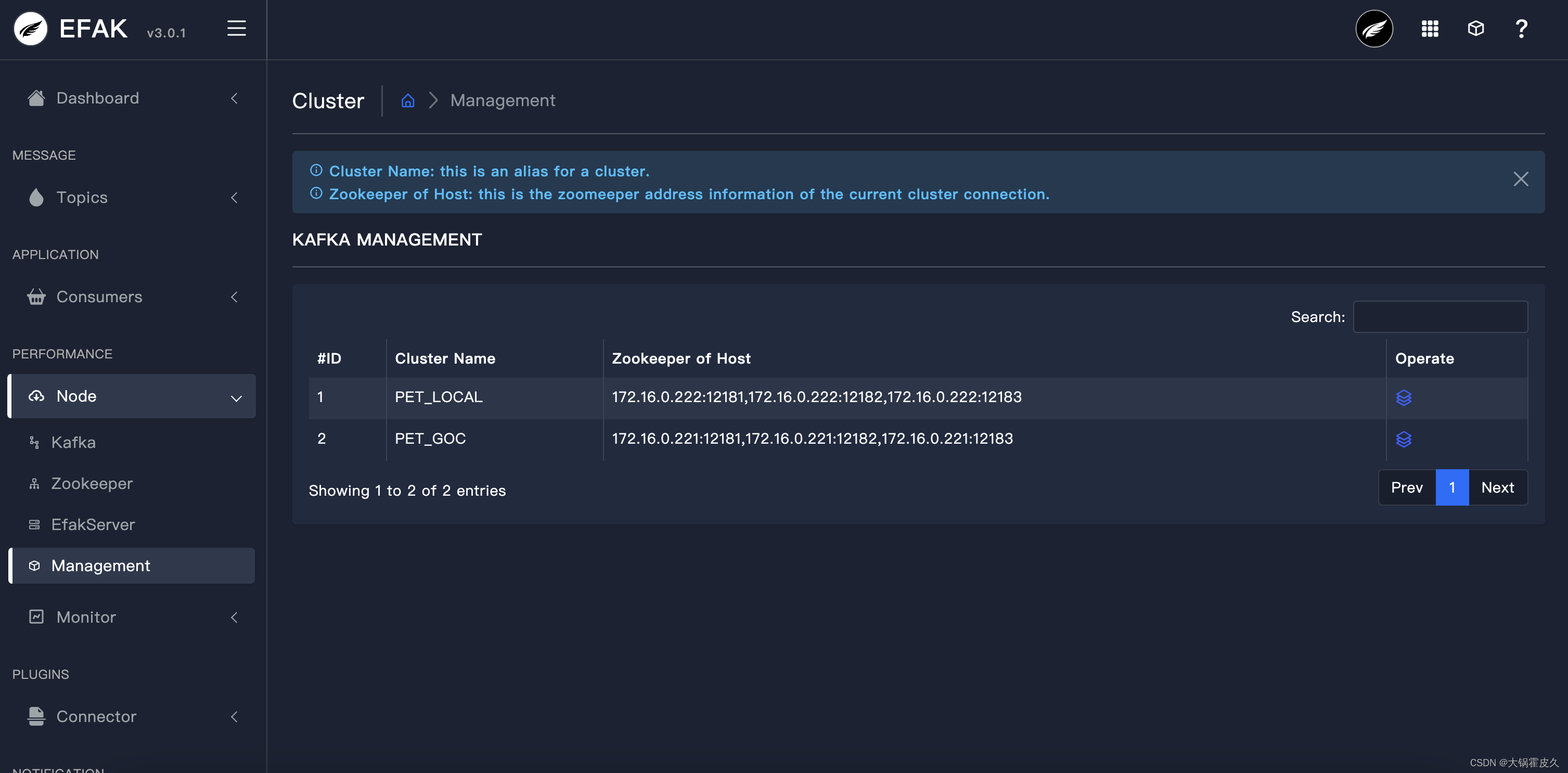

KAFKA MANAGEMENT

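This article describes how to install and configure EFAK (kafka-eagle) in a container on CentOS to manage ZooKeeper and Kafka clusters visually. It covers the Dockerfile, the environment variable settings, and the system configuration, and shows the main features of the latest release, EFAK 3.0.1.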
