This article uses FFmpeg to decode audio and plays the resulting PCM data in two ways: with AudioTrack and with OpenSL ES.

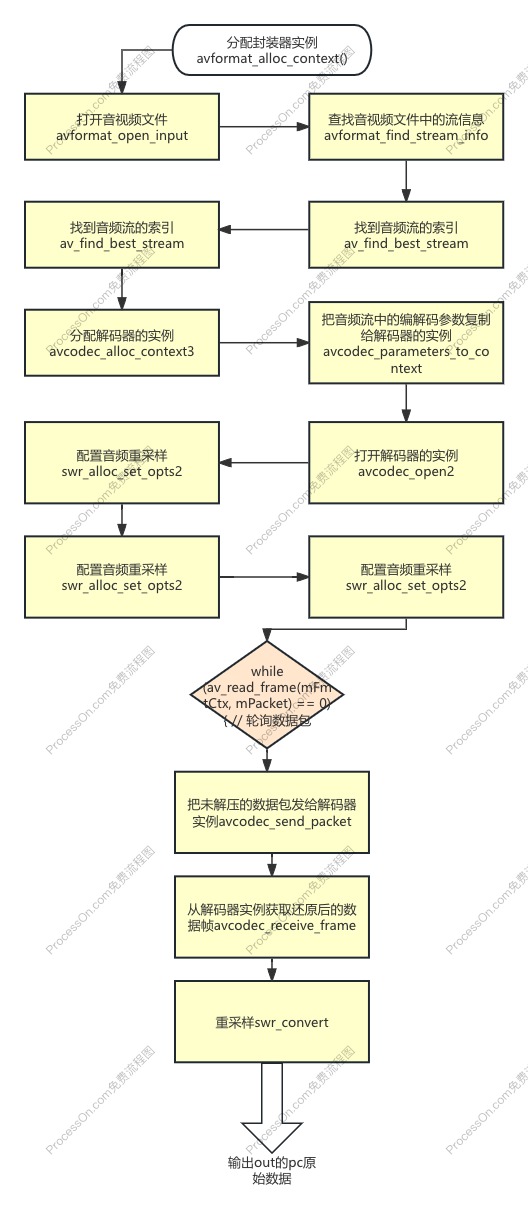

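1. Extracting audio data with FFmpeg:

The usual FFmpeg decoding flow is: open the input (avformat_open_input), read the stream information (avformat_find_stream_info), locate the audio stream (av_find_best_stream), find and open its decoder (avcodec_find_decoder, avcodec_alloc_context3, avcodec_parameters_to_context, avcodec_open2), set up the resampler (swr_alloc_set_opts2, swr_init), then loop over av_read_frame, avcodec_send_packet, avcodec_receive_frame and swr_convert.

The code is as follows: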

void PlayAudioTrack::playAudioProcedure() {

LOGE("PlayAudio: %s", sAudioPath.c_str());

mFmtCtx = avformat_alloc_context(); // allocate the format (demuxer) context

LOGI("Open audio file");

// Open the media file

if (avformat_open_input(&mFmtCtx, sAudioPath.c_str(), NULL, NULL) != 0) {

LOGE("Cannot open audio file: %s\n", sAudioPath.c_str());

playAudioInfo = "Cannot open audio file: " + sAudioPath + "\n";

PostStatusMessage(playAudioInfo.c_str());

return;

}

playAudioInfo = "Open audio file: " + sAudioPath + "\n";

PostStatusMessage(playAudioInfo.c_str());

// Read the stream information of the media file

if (avformat_find_stream_info(mFmtCtx, NULL) < 0) {

LOGE("Cannot find stream information.");

playAudioInfo = "Cannot find stream information \n";

PostStatusMessage(playAudioInfo.c_str());

return;

}

// Find the index of the audio stream

int audio_index = av_find_best_stream(mFmtCtx, AVMEDIA_TYPE_AUDIO, -1, -1, NULL, 0);

if (audio_index < 0) { // failure is reported as a negative AVERROR code, not necessarily -1

LOGE("No audio stream found.");

playAudioInfo = "No audio stream found. \n";

PostStatusMessage(playAudioInfo.c_str());

return;

}

AVCodecParameters *codec_para = mFmtCtx->streams[audio_index]->codecpar;

LOGI("Find the decoder for the audio stream");

// Find the audio decoder

const AVCodec *codec = avcodec_find_decoder(codec_para->codec_id);

if (codec == NULL) {

LOGE("Codec not found.");

playAudioInfo = "Codec not found.\n";

PostStatusMessage(playAudioInfo.c_str());

return;

}

// Allocate a decoder context

mDecodeCtx = avcodec_alloc_context3(codec);

if (mDecodeCtx == NULL) {

LOGE("CodecContext not found.");

playAudioInfo = "CodecContext not found. \n";

PostStatusMessage(playAudioInfo.c_str());

return;

}

// Copy the stream's codec parameters into the decoder context

if (avcodec_parameters_to_context(mDecodeCtx, codec_para) < 0) {

LOGE("Fill CodecContext failed.");

playAudioInfo = "Fill CodecContext failed. \n";

PostStatusMessage(playAudioInfo.c_str());

return;

}

LOGE("mDecodeCtx bit_rate=%lld", (long long) mDecodeCtx->bit_rate); // bit_rate is int64_t

LOGE("mDecodeCtx sample_fmt=%d", mDecodeCtx->sample_fmt);

LOGE("mDecodeCtx sample_rate=%d", mDecodeCtx->sample_rate);

LOGE("mDecodeCtx nb_channels=%d", mDecodeCtx->ch_layout.nb_channels);

LOGI("open Codec");

playAudioInfo = "open Codec \n";

PostStatusMessage(playAudioInfo.c_str());

// Open the decoder

if (avcodec_open2(mDecodeCtx, codec, NULL) < 0) {

LOGE("Open CodecContext failed.");

playAudioInfo = "Open CodecContext failed \n";

PostStatusMessage(playAudioInfo.c_str());

return;

}

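// Output layout: interleaved 16-bit stereo at the source sample rate

AVChannelLayout out_ch_layout = AV_CHANNEL_LAYOUT_STEREO;

int out_channels = out_ch_layout.nb_channels;

if (swr_alloc_set_opts2(&mSwrCtx, &out_ch_layout, AV_SAMPLE_FMT_S16,

mDecodeCtx->sample_rate,

&mDecodeCtx->ch_layout, mDecodeCtx->sample_fmt,

mDecodeCtx->sample_rate, 0, NULL) < 0) {

LOGE("swr_alloc_set_opts2 failed.");

PostStatusMessage("swr_alloc_set_opts2 failed \n");

return;

}

LOGI("swr_init");

playAudioInfo = " swr_init..... \n";

PostStatusMessage(playAudioInfo.c_str());

// Initialize the resampler

if (swr_init(mSwrCtx) < 0) {

LOGE("swr_init failed.");

PostStatusMessage("swr_init failed \n");

return;

}

// Output buffer capacity in bytes: sample rate * bit depth / 8 = 88200

int out_size = 44100 * 16 / 8;

// swr_convert() takes its capacity in samples per channel, not in bytes

int max_out_samples = out_size / (out_channels * 2);

uint8_t *out = (uint8_t *) (av_malloc(out_size));

// Report the output sample rate and channel count to the Java layer

JavaAudioFormatCallback(mDecodeCtx->sample_rate, out_channels);

mPacket = av_packet_alloc(); // allocate a packet

mFrame = av_frame_alloc(); // allocate a frame

while (!is_stop && av_read_frame(mFmtCtx, mPacket) == 0) { // read packets until EOF or stop

if (mPacket->stream_index == audio_index) { // only audio packets are decoded

// Send the compressed packet to the decoder

int ret = avcodec_send_packet(mDecodeCtx, mPacket);

// One packet may produce several frames, so drain the decoder

while (ret == 0 && !is_stop) {

ret = avcodec_receive_frame(mDecodeCtx, mFrame);

if (ret != 0) {

break;

}

// Resample: convert the decoded samples to the output format

int out_samples = swr_convert(mSwrCtx, &out, max_out_samples,

(const uint8_t **) (mFrame->data), mFrame->nb_samples);

if (out_samples <= 0) {

continue;

}

// Actual byte size of the converted data, based on the output channel count

int size = av_samples_get_buffer_size(nullptr, out_channels,

out_samples,

AV_SAMPLE_FMT_S16, 1);

LOGE("out_size=%d, size=%d", out_size, size);

playAudioInfo = "PCM data sent to Java: size= " + to_string(size) + "\n";

PostStatusMessage(playAudioInfo.c_str());

// Pass the PCM bytes (out_audio_data) to the Java layer

JavaAudioBytesCallback(out, size);

}

}

av_packet_unref(mPacket); // release the packet payload before reading the next one

}

av_free(out);

release();

}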

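In the procedure above, FFmpeg decodes each packet into a frame and the resampler converts it to raw 16-bit PCM. That PCM data (out_audio_data) is passed back to the Java layer through JavaAudioBytesCallback, where it can be handed to AudioTrack.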
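The size bookkeeping in this step is easy to get wrong, because swr_convert counts samples per channel while the Java callback needs a byte count. Below is a minimal sketch of that arithmetic for interleaved 16-bit PCM. The helper names pcm16_bytes and max_samples_per_channel are hypothetical and are not part of FFmpeg or of the project:

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical helper: bytes occupied by interleaved signed 16-bit PCM.
// Each sample takes 2 bytes, and every channel contributes one sample per frame.
std::size_t pcm16_bytes(int samples_per_channel, int channels) {
    assert(samples_per_channel >= 0 && channels > 0);
    return static_cast<std::size_t>(samples_per_channel) * channels * 2;
}

// Hypothetical helper: how many samples per channel fit into a byte buffer.
// This is the unit swr_convert expects for its output capacity argument.
int max_samples_per_channel(std::size_t buffer_bytes, int channels) {
    assert(channels > 0);
    return static_cast<int>(buffer_bytes / (static_cast<std::size_t>(channels) * 2));
}
```

For example, an 88200-byte buffer with stereo output holds at most 22050 samples per channel, and 1024 stereo samples occupy 4096 bytes.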

2. Playing with AudioTrack:

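AudioTrack is configured with the sampleRate and channelCount reported by the FFmpeg decoder; the out_audio_data PCM bytes delivered by the decode callback are then written to the AudioTrack.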
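To get a feel for what a buffer size in bytes means in playback time for a given sampleRate and channelCount, the following sketch converts a 16-bit PCM byte count into milliseconds. The function name pcm16_duration_ms is an assumption made for illustration only:

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical helper: playback time, in milliseconds, of a buffer that holds
// interleaved 16-bit PCM at the given sample rate and channel count.
double pcm16_duration_ms(std::size_t bytes, int sample_rate, int channels) {
    assert(sample_rate > 0 && channels > 0);
    // One frame is one sample for every channel, 2 bytes per sample.
    const std::size_t frames = bytes / (static_cast<std::size_t>(channels) * 2);
    return 1000.0 * static_cast<double>(frames) / sample_rate;
}
```

At 44100 Hz stereo, 176400 bytes correspond to one second of audio, so a buffer of a few thousand bytes covers only tens of milliseconds.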

The code is as follows:

private void cppAudioFormatCallback(int sampleRate, int channelCount) {

Log.d(TAG, "create sampleRate=" + sampleRate + ", channelCount=" + channelCount);

int channelType = (channelCount==1) ? AudioFormat.CHANNEL_OUT_MONO

: AudioFormat.CHANNEL_OUT_STEREO;

// Ask AudioTrack for the minimum buffer size for this configuration

int bufferSize = AudioTrack.getMinBufferSize(sampleRate,

channelType, AudioFormat.ENCODING_PCM_16BIT);

Log.d(TAG, "bufferSize="+bufferSize);

// Build the PCM playback instance from the audio configuration and buffer size

mAudioTrack = new AudioTrack(AudioManager.STREAM_MUSIC,

sampleRate, channelType, AudioFormat.ENCODING_PCM_16BIT,

bufferSize, AudioTrack.MODE_STREAM);

mAudioTrack.play(); // start playing raw PCM

Log.d(TAG, "end create");

}

private void cppAudioBytesCallback(byte[] bytes, int size) {

Log.d(TAG, "cppAudioBytesCallback size=" + size);

if (mAudioTrack != null &&

mAudioTrack.getPlayState() == AudioTrack.PLAYSTATE_PLAYING) {

mAudioTrack.write(bytes, 0, size); // write the PCM data to the AudioTrack

}

}

3. Playing with OpenSL ES:

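OpenSL ES (Open Sound Library for Embedded Systems) is a cross-platform, low-level audio API for embedded systems, maintained by the Khronos Group. On Android it is available to native code through the NDK; iOS does not provide it. It gives direct control over audio playback, recording and effects, which lets developers handle audio with lower latency and better performance.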

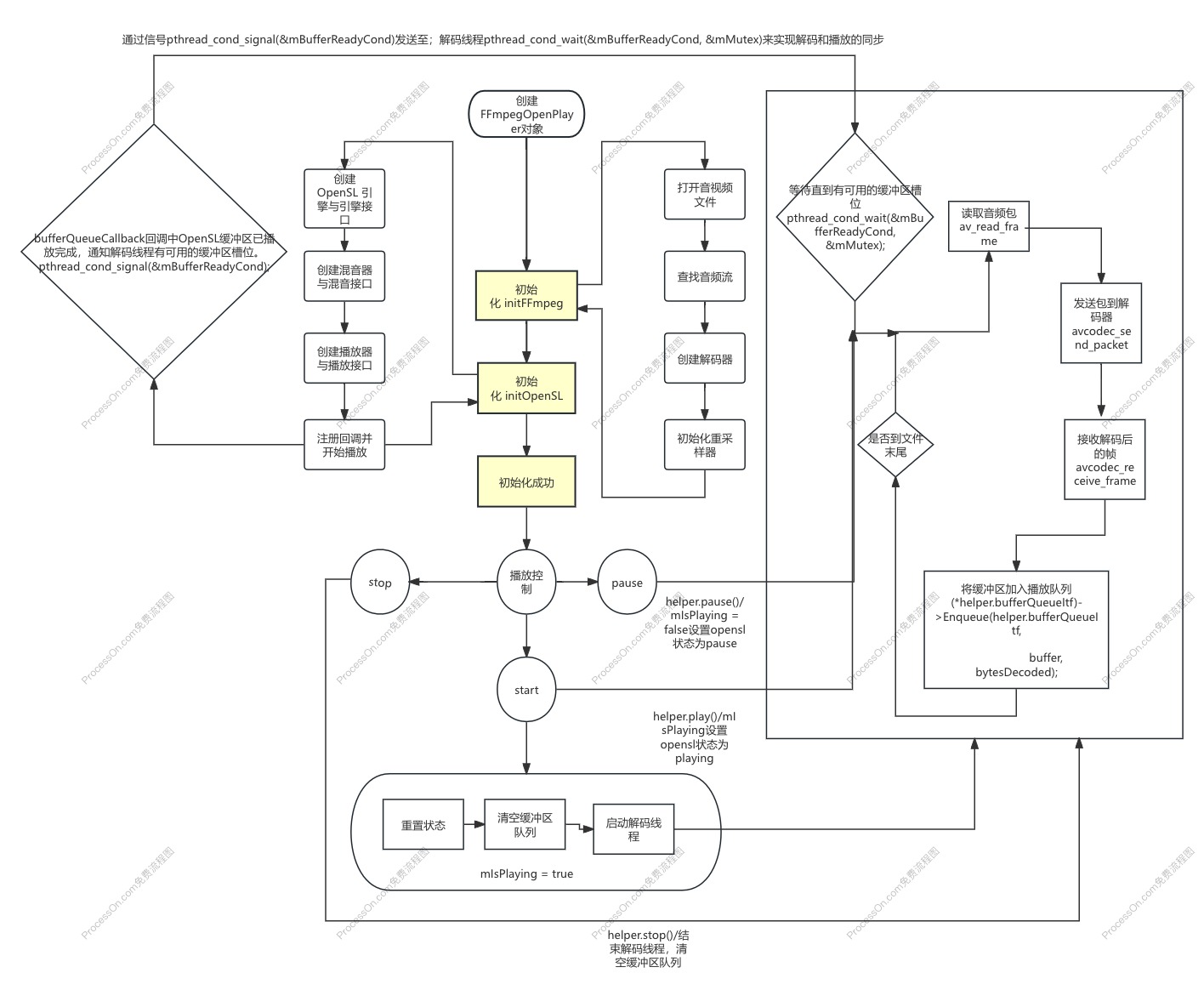

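We now design an FFmpeg audio decoder that feeds OpenSL ES playback. The decoding code runs in a dedicated decode thread, while OpenSL ES invokes a callback registered through RegisterCallback whose only job is to tell the decode thread that a buffer slot has become free.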
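The hand-off described above is a bounded producer/consumer scheme: the decoder may only enqueue while a slot is free, and the playback callback frees slots. Here is a minimal sketch using standard C++ primitives instead of pthreads. The class SlotGate and its method names are hypothetical and do not appear in the project:

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>

// Hypothetical helper: tracks how many buffers are queued for playback and
// blocks the decoder while every slot is in use.
class SlotGate {
public:
    explicit SlotGate(int capacity) : capacity_(capacity) {
        assert(capacity > 0);
    }

    // Decoder side, non-blocking: claim a slot if one is free.
    bool try_acquire() {
        std::lock_guard<std::mutex> lock(mutex_);
        if (stopped_ || queued_ >= capacity_) return false;
        ++queued_;
        return true;
    }

    // Decoder side, blocking: wait for a free slot. Returns false after stop().
    bool acquire() {
        std::unique_lock<std::mutex> lock(mutex_);
        cond_.wait(lock, [this] { return stopped_ || queued_ < capacity_; });
        if (stopped_) return false;
        ++queued_;
        return true;
    }

    // Playback callback side: one buffer finished playing, wake the decoder.
    void release() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            if (queued_ > 0) --queued_;
        }
        cond_.notify_one();
    }

    // Stop request: wake every waiter so that it can exit.
    void stop() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            stopped_ = true;
        }
        cond_.notify_all();
    }

    // Number of buffers currently queued.
    int queued() {
        std::lock_guard<std::mutex> lock(mutex_);
        return queued_;
    }

private:
    std::mutex mutex_;
    std::condition_variable cond_;
    int capacity_;
    int queued_ = 0;
    bool stopped_ = false;
};
```

In such a design the decode thread would call acquire() before filling and enqueueing a buffer, and the buffer queue callback would call release(). Changing the stop flag under the same mutex that guards the wait keeps a stop request from being missed.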

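The overall flow: the decode thread waits for a free slot, reads and decodes a packet, resamples the frame into a PCM buffer and enqueues it; when OpenSL ES finishes playing a buffer it invokes the callback, which frees the slot and wakes the decode thread.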

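OpenslHelper helper class:

It wraps the OpenSL ES calls in the following methods:

- createEngine(): create the OpenSL engine and the engine interface
- createMix(): create the output mix and its interface
- createPlayer(int numChannels, long samplesRate, int bitsPerSample, int channelMask): create the player and the play interface
- registerCallback(slAndroidSimpleBufferQueueCallback callback, void *pContext): register the buffer queue callback
- play(): start playback
- pause(): pause playback
- stop(): stop playback
- release(): release resources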
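Two of the createPlayer arguments deserve care: the samplesPerSec field of SLDataFormat_PCM is expressed in milliHertz (44.1 kHz is 44100000), and channelMask must match the channel count. The sketch below shows both conversions. The function names are hypothetical, and the speaker constants are copied from OpenSLES.h for illustration only; real code should use the header's macros:

```cpp
#include <cassert>
#include <cstdint>

// Values of SL_SPEAKER_FRONT_LEFT / _RIGHT / _CENTER as defined in OpenSLES.h,
// repeated here only so that the sketch compiles without the NDK headers.
constexpr std::uint32_t kSpeakerFrontLeft = 0x00000001;
constexpr std::uint32_t kSpeakerFrontRight = 0x00000002;
constexpr std::uint32_t kSpeakerFrontCenter = 0x00000004;

// Hypothetical helper: convert a sample rate in Hz to the milliHertz value
// that OpenSL ES expects in SLDataFormat_PCM.
std::uint32_t to_milli_hertz(int sample_rate_hz) {
    assert(sample_rate_hz > 0);
    return static_cast<std::uint32_t>(sample_rate_hz) * 1000u;
}

// Hypothetical helper: pick a channel mask for mono or stereo output.
std::uint32_t channel_mask_for(int channels) {
    assert(channels == 1 || channels == 2);
    return channels == 2 ? (kSpeakerFrontLeft | kSpeakerFrontRight)
                         : kSpeakerFrontCenter;
}
```

Passing a plain Hz value such as 44100 where milliHertz is expected is a common reason for CreateAudioPlayer to reject the format.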

The code of the OpenslHelper class follows:

// Author : wangyongyao https://github.com/wangyongyao1989

// Created by MMM on 2025/11/11.

//

#include "includes/OpenslHelper.h"

// Whether the call succeeded

bool OpenslHelper::isSuccess(SLresult &result) {

return result == SL_RESULT_SUCCESS;

}

// Create the OpenSL engine and the engine interface

SLresult OpenslHelper::createEngine() {

// Create the engine object

result = slCreateEngine(&engine, 0, NULL, 0, NULL, NULL);

if (!isSuccess(result)) {

return result;

}

// Realize the engine; the second argument selects asynchronous realization

result = (*engine)->Realize(engine, SL_BOOLEAN_FALSE);

if (!isSuccess(result)) {

return result;

}

// Get the engine interface

result = (*engine)->GetInterface(engine, SL_IID_ENGINE, &engineItf);

if (!isSuccess(result)) {

return result;

}

return result;

}

// Create the output mix and its interface

SLresult OpenslHelper::createMix() {

// Create the output mix object

result = (*engineItf)->CreateOutputMix(engineItf, &mix, 0, 0, 0);

if (!isSuccess(result)) {

return result;

}

// Realize the output mix

result = (*mix)->Realize(mix, SL_BOOLEAN_FALSE);

if (!isSuccess(result)) {

return result;

}

// Get the environmental reverb interface of the output mix (optional; failure is ignored)

SLresult envResult = (*mix)->GetInterface(mix, SL_IID_ENVIRONMENTALREVERB, &envItf);

if (isSuccess(envResult)) {

// Apply the reverb settings to the output mix

(*envItf)->SetEnvironmentalReverbProperties(envItf, &settings);

}

return result;

}

// Create the player and the play interface

SLresult

OpenslHelper::createPlayer(int numChannels, long samplesRate, int bitsPerSample, int channelMask) {

// Buffer queue locator. Four buffers are queued so that new data can be filled while another buffer is playing

SLDataLocator_AndroidSimpleBufferQueue buffQueque = {

SL_DATALOCATOR_ANDROIDSIMPLEBUFFERQUEUE, 4

};

// PCM format of the buffers

SLDataFormat_PCM dataFormat_pcm = {

SL_DATAFORMAT_PCM,

(SLuint32) numChannels,

(SLuint32) samplesRate,

(SLuint32) bitsPerSample,

(SLuint32) bitsPerSample,

(SLuint32) channelMask,

SL_BYTEORDER_LITTLEENDIAN

};

// Data source

SLDataSource audioSrc = {&buffQueque, &dataFormat_pcm};

// Route the output to the output mix

SLDataLocator_OutputMix dataLocator_outputMix = {SL_DATALOCATOR_OUTPUTMIX, mix};

SLDataSink audioSink = {&dataLocator_outputMix, NULL};

// Interfaces requested from the player created through the engine interface

SLInterfaceID ids[3] = {SL_IID_BUFFERQUEUE, SL_IID_EFFECTSEND, SL_IID_VOLUME};

SLboolean required[3] = {SL_BOOLEAN_TRUE, SL_BOOLEAN_TRUE, SL_BOOLEAN_TRUE};

// Create the audio player

result = (*engineItf)->CreateAudioPlayer(

engineItf, &player, &audioSrc, &audioSink, sizeof(ids) / sizeof(ids[0]), ids, required);

if (!isSuccess(result)) {

return result;

}

// Realize the player

result = (*player)->Realize(player, SL_BOOLEAN_FALSE);

if (!isSuccess(result)) {

return result;

}

// Get the play interface

result = (*player)->GetInterface(player, SL_IID_PLAY, &playItf);

if (!isSuccess(result)) {

return result;

}

// Get the volume interface

result = (*player)->GetInterface(player, SL_IID_VOLUME, &volumeItf);

if (!isSuccess(result)) {

return result;

}

// Get the buffer queue interface

result = (*player)->GetInterface(player, SL_IID_BUFFERQUEUE, &bufferQueueItf);

if (!isSuccess(result)) {

return result;

}

return result;

}

// Register the buffer queue callback

SLresult

OpenslHelper::registerCallback(slAndroidSimpleBufferQueueCallback callback, void *pContext) {

result = (*bufferQueueItf)->RegisterCallback(bufferQueueItf, callback, pContext);

return result;

}

// Start playback

SLresult OpenslHelper::play() {

playState = SL_PLAYSTATE_PLAYING;

result = (*playItf)->SetPlayState(playItf, SL_PLAYSTATE_PLAYING);

return result;

}

// Pause playback

SLresult OpenslHelper::pause() {

playState = SL_PLAYSTATE_PAUSED;

result = (*playItf)->SetPlayState(playItf, SL_PLAYSTATE_PAUSED);

return result;

}

// Stop playback

SLresult OpenslHelper::stop() {

playState = SL_PLAYSTATE_STOPPED;

result = (*playItf)->SetPlayState(playItf, SL_PLAYSTATE_STOPPED);

return result;

}

// Release resources

void OpenslHelper::release() {

if (player != nullptr) {

(*player)->Destroy(player);

player = nullptr;

playItf = nullptr;

bufferQueueItf = nullptr;

volumeItf = nullptr;

}

if (mix != nullptr) {

(*mix)->Destroy(mix);

mix = nullptr;

envItf = nullptr;

}

if (engine != nullptr) {

(*engine)->Destroy(engine);

engine = nullptr;

engineItf = nullptr;

}

}

// Destructor

OpenslHelper::~OpenslHelper() {

release();

}

decodeThread() (the decode thread):

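mStopRequested and mIsPlaying control whether packet reading continues. The condition variable mBufferReadyCond (guarded by mMutex) in the code below keeps decoding in step with the filling of the OpenSL buffer queue: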

// Wait until a buffer slot is free

while (mQueuedBufferCount >= NUM_BUFFERS && !mStopRequested && mIsPlaying) {

LOGI("Waiting for buffer slot, queued: %d", mQueuedBufferCount.load());

playAudioInfo =

"Waiting for buffer slot, queued:" + to_string(mQueuedBufferCount) + " \n";

PostStatusMessage(playAudioInfo.c_str());

pthread_cond_wait(&mBufferReadyCond, &mMutex);

}

bufferQueueCallback (the buffer queue callback):

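OpenSL ES calls this function each time a queued buffer has finished playing. It decrements the queued-buffer count and calls pthread_cond_signal(&mBufferReadyCond) to tell the decode thread that a slot is free: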

void FFmpegOpenSLPlayer::bufferQueueCallback(SLAndroidSimpleBufferQueueItf bq, void *context) {

FFmpegOpenSLPlayer *player = static_cast<FFmpegOpenSLPlayer *>(context);

player->processBufferQueue();

}

void FFmpegOpenSLPlayer::processBufferQueue() {

pthread_mutex_lock(&mMutex);

// A buffer has finished playing, so decrement the count

if (mQueuedBufferCount > 0) {

mQueuedBufferCount--;

LOGI("Buffer processed, queued count: %d", mQueuedBufferCount.load());

}

// Tell the decode thread that a buffer slot is free

pthread_cond_signal(&mBufferReadyCond);

pthread_mutex_unlock(&mMutex);

}

FFmpegOpenSLPlayer.cpp:

The complete code follows:

// Author : wangyongyao https://github.com/wangyongyao1989

// Created by MMM on 2025/11/13.

//

#include "includes/FFmpegOpenSLPlayer.h"

#include <unistd.h>

FFmpegOpenSLPlayer::FFmpegOpenSLPlayer(JNIEnv *env, jobject thiz)

: mEnv(nullptr), mJavaObj(nullptr), mFormatContext(nullptr), mCodecContext(nullptr),

mSwrContext(nullptr),

mAudioStreamIndex(-1), mSampleRate(44100), mChannels(2), mDuration(0),

mSampleFormat(AV_SAMPLE_FMT_NONE), mIsPlaying(false), mInitialized(false),

mStopRequested(false), mCurrentBuffer(0), mCurrentPosition(0),

mQueuedBufferCount(0) { // no buffers are queued yet

mDecodeThread = 0; // no decode thread yet; stop() checks this before joining

mEnv = env;

env->GetJavaVM(&mJavaVm);

mJavaObj = env->NewGlobalRef(thiz);

pthread_mutex_init(&mMutex, nullptr);

pthread_cond_init(&mBufferReadyCond, nullptr);

// Allocate the PCM buffers

for (int i = 0; i < NUM_BUFFERS; i++) {

mBuffers[i] = new uint8_t[BUFFER_SIZE];

memset(mBuffers[i], 0, BUFFER_SIZE); // zero-filled 16-bit PCM is silence

}

}

FFmpegOpenSLPlayer::~FFmpegOpenSLPlayer() {

stop();

cleanup();

pthread_mutex_destroy(&mMutex);

pthread_cond_destroy(&mBufferReadyCond);

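// Free the PCM buffers

for (int i = 0; i < NUM_BUFFERS; i++) {

delete[] mBuffers[i];

}

// A JNIEnv is only valid on its own thread, so fetch one for the current thread

bool isAttach = false;

JNIEnv *env = GetJNIEnv(&isAttach);

if (env != nullptr) {

env->DeleteGlobalRef(mJavaObj);

if (isAttach) {

mJavaVm->DetachCurrentThread();

}

}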

}

bool FFmpegOpenSLPlayer::init(const std::string &filePath) {

if (mInitialized) {

LOGI("Already initialized");

return true;

}

// Initialize FFmpeg

if (!initFFmpeg(filePath)) {

LOGE("Failed to initialize FFmpeg");

PostStatusMessage("Failed to initialize FFmpeg");

cleanupFFmpeg(); // release whatever initFFmpeg() allocated before it failed

return false;

}

// Initialize OpenSL ES

if (!initOpenSL()) {

LOGE("Failed to initialize OpenSL ES");

PostStatusMessage("Failed to initialize OpenSL ES");

cleanupFFmpeg();

return false;

}

mInitialized = true;

LOGI("FFmpegOpenSLPlayer initialized successfully");

PostStatusMessage("FFmpegOpenSLPlayer initialized successfully");

return true;

}

bool FFmpegOpenSLPlayer::initFFmpeg(const std::string &filePath) {

// Open the input file

if (avformat_open_input(&mFormatContext, filePath.c_str(), nullptr, nullptr) != 0) {

LOGE("Could not open file: %s", filePath.c_str());

return false;

}

// Read the stream information

if (avformat_find_stream_info(mFormatContext, nullptr) < 0) {

LOGE("Could not find stream information");

return false;

}

// Find the first audio stream

mAudioStreamIndex = -1;

for (unsigned int i = 0; i < mFormatContext->nb_streams; i++) {

if (mFormatContext->streams[i]->codecpar->codec_type == AVMEDIA_TYPE_AUDIO) {

mAudioStreamIndex = i;

break;

}

}

if (mAudioStreamIndex == -1) {

LOGE("Could not find audio stream");

return false;

}

// Get the codec parameters

AVCodecParameters *codecParams = mFormatContext->streams[mAudioStreamIndex]->codecpar;

// Find the decoder

const AVCodec *codec = avcodec_find_decoder(codecParams->codec_id);

if (!codec) {

LOGE("Unsupported codec");

return false;

}

// Allocate the codec context

mCodecContext = avcodec_alloc_context3(codec);

if (!mCodecContext) {

LOGE("Could not allocate codec context");

return false;

}

// Copy the parameters into the context

if (avcodec_parameters_to_context(mCodecContext, codecParams) < 0) {

LOGE("Could not copy codec parameters");

return false;

}

// Open the decoder

if (avcodec_open2(mCodecContext, codec, nullptr) < 0) {

LOGE("Could not open codec");

return false;

}

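// Record the audio parameters; mDuration is in AV_TIME_BASE units (microseconds)

mSampleRate = mCodecContext->sample_rate;

mSampleFormat = mCodecContext->sample_fmt;

mDuration = mFormatContext->duration;

// Configure resampling to interleaved 16-bit stereo at the source sample rate

AVChannelLayout out_ch_layout = AV_CHANNEL_LAYOUT_STEREO;

// The player consumes the resampler output, so use its channel count rather than the source's

mChannels = out_ch_layout.nb_channels;

if (swr_alloc_set_opts2(&mSwrContext,

&out_ch_layout, AV_SAMPLE_FMT_S16, mCodecContext->sample_rate,

&mCodecContext->ch_layout, mCodecContext->sample_fmt, mCodecContext->sample_rate,

0, NULL) < 0) {

LOGE("Could not allocate resampler");

return false;

}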

if (swr_init(mSwrContext) < 0) {

LOGE("Could not initialize resampler");

PostStatusMessage("Could not initialize resampler \n");

return false;

}

LOGI("FFmpeg initialized: %d Hz, %d channels, duration: %lld",

mSampleRate, mChannels, (long long) mDuration);

playAudioInfo = "FFmpeg initialized ,Hz:" + to_string(mSampleRate) + ",channels:" +

to_string(mChannels) + " ,duration:" + to_string(mDuration) + "\n";

PostStatusMessage(playAudioInfo.c_str());

return true;

}

bool FFmpegOpenSLPlayer::initOpenSL() {

// Create the OpenSL engine and the engine interface

SLresult result = helper.createEngine();

if (!helper.isSuccess(result)) {

LOGE("create engine error: %d", result);

PostStatusMessage("Create engine error\n");

return false;

}

PostStatusMessage("OpenSL createEngine Success \n");

// Create the output mix and its interface

result = helper.createMix();

if (!helper.isSuccess(result)) {

LOGE("create mix error: %d", result);

PostStatusMessage("Create mix error \n");

return false;

}

PostStatusMessage("OpenSL createMix Success \n");

result = helper.createPlayer(mChannels, mSampleRate * 1000, SL_PCMSAMPLEFORMAT_FIXED_16,

mChannels == 2 ? (SL_SPEAKER_FRONT_LEFT | SL_SPEAKER_FRONT_RIGHT)

: SL_SPEAKER_FRONT_CENTER);

if (!helper.isSuccess(result)) {

LOGE("create player error: %d", result);

PostStatusMessage("Create player error\n");

return false;

}

PostStatusMessage("OpenSL createPlayer Success \n");

// Register the buffer queue callback (playback itself is started in start())

result = helper.registerCallback(bufferQueueCallback, this);

if (!helper.isSuccess(result)) {

LOGE("register callback error: %d", result);

PostStatusMessage("Register callback error \n");

return false;

}

PostStatusMessage("OpenSL registerCallback Success \n");

// Clear the buffer queue

result = (*helper.bufferQueueItf)->Clear(helper.bufferQueueItf);

if (result != SL_RESULT_SUCCESS) {

LOGE("Failed to clear buffer queue: %d, error: %s", result, getSLErrorString(result));

playAudioInfo = "Failed to clear buffer queue:" + string(getSLErrorString(result));

PostStatusMessage(playAudioInfo.c_str());

return false;

}

LOGI("OpenSL ES initialized successfully: %d Hz, %d channels", mSampleRate, mChannels);

playAudioInfo = "OpenSL ES initialized ,Hz:" + to_string(mSampleRate) + ",channels:" +

to_string(mChannels) + " ,duration:" + to_string(mDuration) + "\n";

PostStatusMessage(playAudioInfo.c_str());

return true;

}

bool FFmpegOpenSLPlayer::start() {

if (!mInitialized) {

LOGE("Player not initialized");

PostStatusMessage("Player not initialized \n");

return false;

}

pthread_mutex_lock(&mMutex);

if (mIsPlaying) {

pthread_mutex_unlock(&mMutex);

return true;

}

// Reset the state

mQueuedBufferCount = 0;

mCurrentBuffer = 0;

mStopRequested = false;

// Clear the buffer queue

if (helper.bufferQueueItf) {

(*helper.bufferQueueItf)->Clear(helper.bufferQueueItf);

}

// Switch the player to the playing state

SLresult result = helper.play();

if (result != SL_RESULT_SUCCESS) {

LOGE("Failed to set play state: %d, error: %s", result, getSLErrorString(result));

playAudioInfo = "Failed to set play state: " + string(getSLErrorString(result));

PostStatusMessage(playAudioInfo.c_str());

pthread_mutex_unlock(&mMutex);

return false;

}

mIsPlaying = true;

// Start the decode thread

if (pthread_create(&mDecodeThread, nullptr, decodeThreadWrapper, this) != 0) {

LOGE("Failed to create decode thread");

PostStatusMessage("Failed to create decode thread \n");

mIsPlaying = false;

pthread_mutex_unlock(&mMutex);

return false;

}

pthread_mutex_unlock(&mMutex);

LOGI("Playback started");

PostStatusMessage("Playback started \n");

return true;

}

bool FFmpegOpenSLPlayer::pause() {

if (!mInitialized || !mIsPlaying) {

return false;

}

pthread_mutex_lock(&mMutex);

SLresult result = helper.pause();

if (result == SL_RESULT_SUCCESS) {

mIsPlaying = false;

LOGI("Playback paused");

PostStatusMessage("Playback paused \n");

} else {

LOGE("Failed to pause playback: %d", result);

}

pthread_mutex_unlock(&mMutex);

return result == SL_RESULT_SUCCESS;

}

void FFmpegOpenSLPlayer::stop() {

if (!mInitialized) {

return;

}

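// Set the flags and wake the decode thread while holding the mutex, so the

// wakeup cannot be lost between its condition check and pthread_cond_wait

pthread_mutex_lock(&mMutex);

mStopRequested = true;

mIsPlaying = false;

pthread_cond_broadcast(&mBufferReadyCond);

pthread_mutex_unlock(&mMutex);

// Wait for the decode thread to finish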

if (mDecodeThread) {

pthread_join(mDecodeThread, nullptr);

mDecodeThread = 0;

}

if (helper.player) {

helper.stop();

}

// Clear the buffer queue

if (helper.bufferQueueItf) {

(*helper.bufferQueueItf)->Clear(helper.bufferQueueItf);

}

LOGI("Playback stopped");

PostStatusMessage("Playback stopped \n");

}

void *FFmpegOpenSLPlayer::decodeThreadWrapper(void *context) {

FFmpegOpenSLPlayer *player = static_cast<FFmpegOpenSLPlayer *>(context);

player->decodeThread();

return nullptr;

}

void FFmpegOpenSLPlayer::decodeThread() {

AVPacket *packet = av_packet_alloc();

AVFrame *frame = av_frame_alloc();

if (!packet || !frame) {

LOGE("Failed to allocate packet or frame");

av_packet_free(&packet);

av_frame_free(&frame);

return;

}

LOGI("Decode thread started");

PostStatusMessage("Decode thread started \n");

bool running = true;

while (running && !mStopRequested && mIsPlaying) {

// Read the next packet without holding the mutex, so the OpenSL callback is not blocked by I/O

int ret = av_read_frame(mFormatContext, packet);

if (ret == AVERROR_EOF) {

LOGI("End of file reached");

// Looping or stopping could be chosen here

break;

}

if (ret < 0) {

LOGE("Error reading frame: %d", ret);

usleep(10000); // sleep briefly, then try again

continue;

}

// Only audio packets are handled

if (packet->stream_index != mAudioStreamIndex) {

av_packet_unref(packet);

continue;

}

// Send the packet to the decoder

ret = avcodec_send_packet(mCodecContext, packet);

av_packet_unref(packet);

if (ret < 0) {

LOGE("Error sending packet to decoder: %d", ret);

continue;

}

// One packet may yield several frames, so receive until the decoder is drained

while (running && avcodec_receive_frame(mCodecContext, frame) == 0) {

pthread_mutex_lock(&mMutex);

// Wait for a free slot before writing to mBuffers[mCurrentBuffer]:

// that buffer may still be queued for playback

while (mQueuedBufferCount >= NUM_BUFFERS && !mStopRequested && mIsPlaying) {

LOGI("Waiting for buffer slot, queued: %d", mQueuedBufferCount.load());

playAudioInfo =

"Waiting for buffer slot, queued:" + to_string(mQueuedBufferCount.load()) + " \n";

PostStatusMessage(playAudioInfo.c_str());

pthread_cond_wait(&mBufferReadyCond, &mMutex);

}

if (mStopRequested || !mIsPlaying) {

pthread_mutex_unlock(&mMutex);

running = false;

break;

}

// Update the current position

if (frame->pts != AV_NOPTS_VALUE) {

mCurrentPosition = frame->pts;

}

// Resample into the current buffer

uint8_t *buffer = mBuffers[mCurrentBuffer];

uint8_t *outBuffer = buffer;

// Output capacity in samples per channel (2 bytes per 16-bit sample)

int maxSamples = BUFFER_SIZE / (mChannels * 2);

int outSamples = swr_convert(mSwrContext, &outBuffer, maxSamples,

(const uint8_t **) frame->data, frame->nb_samples);

if (outSamples > 0) {

int bytesDecoded = outSamples * mChannels * 2;

// Hand the buffer to the playback queue

SLresult result = (*helper.bufferQueueItf)->Enqueue(helper.bufferQueueItf,

buffer, bytesDecoded);

if (result == SL_RESULT_SUCCESS) {

// Enqueued: update the bookkeeping

mQueuedBufferCount++;

mCurrentBuffer = (mCurrentBuffer + 1) % NUM_BUFFERS;

LOGI("Buffer enqueued successfully: %d bytes, buffer index: %d, queued: %d",

bytesDecoded, mCurrentBuffer, mQueuedBufferCount.load());

} else {

LOGE("Failed to enqueue buffer: %d, error: %s",

result, getSLErrorString(result));

}

} else if (outSamples < 0) {

LOGE("swr_convert failed: %d", outSamples);

}

pthread_mutex_unlock(&mMutex);

}

}

av_packet_free(&packet);

av_frame_free(&frame);

LOGI("Decode thread finished");

}

void FFmpegOpenSLPlayer::bufferQueueCallback(SLAndroidSimpleBufferQueueItf bq, void *context) {

FFmpegOpenSLPlayer *player = static_cast<FFmpegOpenSLPlayer *>(context);

player->processBufferQueue();

}

void FFmpegOpenSLPlayer::processBufferQueue() {

pthread_mutex_lock(&mMutex);

// A buffer has finished playing, so decrement the count

if (mQueuedBufferCount > 0) {

mQueuedBufferCount--;

LOGI("Buffer processed, queued count: %d", mQueuedBufferCount.load());

}

// Tell the decode thread that a buffer slot is free

pthread_cond_signal(&mBufferReadyCond);

pthread_mutex_unlock(&mMutex);

}

void FFmpegOpenSLPlayer::cleanup() {

cleanupFFmpeg();

cleanupOpenSL();

}

void FFmpegOpenSLPlayer::cleanupFFmpeg() {

if (mSwrContext) {

swr_free(&mSwrContext);

mSwrContext = nullptr;

}

if (mCodecContext) {

// avcodec_free_context() also closes the codec, so no separate avcodec_close() is needed

avcodec_free_context(&mCodecContext);

mCodecContext = nullptr;

}

if (mFormatContext) {

avformat_close_input(&mFormatContext);

mFormatContext = nullptr;

}

mAudioStreamIndex = -1;

mInitialized = false;

}

void FFmpegOpenSLPlayer::cleanupOpenSL() {

mIsPlaying = false;

}

// Helper that maps an SLresult code to a readable name

const char *FFmpegOpenSLPlayer::getSLErrorString(SLresult result) {

switch (result) {

case SL_RESULT_SUCCESS:

return "SL_RESULT_SUCCESS";

case SL_RESULT_PRECONDITIONS_VIOLATED:

return "SL_RESULT_PRECONDITIONS_VIOLATED";

case SL_RESULT_PARAMETER_INVALID:

return "SL_RESULT_PARAMETER_INVALID";

case SL_RESULT_MEMORY_FAILURE:

return "SL_RESULT_MEMORY_FAILURE";

case SL_RESULT_RESOURCE_ERROR:

return "SL_RESULT_RESOURCE_ERROR";

case SL_RESULT_RESOURCE_LOST:

return "SL_RESULT_RESOURCE_LOST";

case SL_RESULT_IO_ERROR:

return "SL_RESULT_IO_ERROR";

case SL_RESULT_BUFFER_INSUFFICIENT:

return "SL_RESULT_BUFFER_INSUFFICIENT";

case SL_RESULT_CONTENT_CORRUPTED:

return "SL_RESULT_CONTENT_CORRUPTED";

case SL_RESULT_CONTENT_UNSUPPORTED:

return "SL_RESULT_CONTENT_UNSUPPORTED";

case SL_RESULT_CONTENT_NOT_FOUND:

return "SL_RESULT_CONTENT_NOT_FOUND";

case SL_RESULT_PERMISSION_DENIED:

return "SL_RESULT_PERMISSION_DENIED";

case SL_RESULT_FEATURE_UNSUPPORTED:

return "SL_RESULT_FEATURE_UNSUPPORTED";

case SL_RESULT_INTERNAL_ERROR:

return "SL_RESULT_INTERNAL_ERROR";

case SL_RESULT_UNKNOWN_ERROR:

return "SL_RESULT_UNKNOWN_ERROR";

case SL_RESULT_OPERATION_ABORTED:

return "SL_RESULT_OPERATION_ABORTED";

case SL_RESULT_CONTROL_LOST:

return "SL_RESULT_CONTROL_LOST";

default:

return "Unknown error";

}

}

JNIEnv *FFmpegOpenSLPlayer::GetJNIEnv(bool *isAttach) {

JNIEnv *env;

int status;

if (nullptr == mJavaVm) {

LOGE("GetJNIEnv mJavaVm == nullptr");

return nullptr;

}

*isAttach = false;

status = mJavaVm->GetEnv((void **) &env, JNI_VERSION_1_6);

if (status != JNI_OK) {

status = mJavaVm->AttachCurrentThread(&env, nullptr);

if (status != JNI_OK) {

LOGE("GetJNIEnv failed to attach current thread");

return nullptr;

}

*isAttach = true;

}

return env;

}

void FFmpegOpenSLPlayer::PostStatusMessage(const char *msg) {

bool isAttach = false;

JNIEnv *pEnv = GetJNIEnv(&isAttach);

if (pEnv == nullptr) {

return;

}

jmethodID mid = pEnv->GetMethodID(pEnv->GetObjectClass(mJavaObj), "CppStatusCallback",

"(Ljava/lang/String;)V");

if (mid) {

jstring jMsg = pEnv->NewStringUTF(msg);

pEnv->CallVoidMethod(mJavaObj, mid, jMsg);

pEnv->DeleteLocalRef(jMsg);

}

if (isAttach) {

mJavaVm->DetachCurrentThread();

}

}

The code above is available in the author's GitHub project: https://github.com/wangyongyao1989/FFmpegPractices
