问题描述:

mysql版本:8.0.12 表存储引擎为innodb

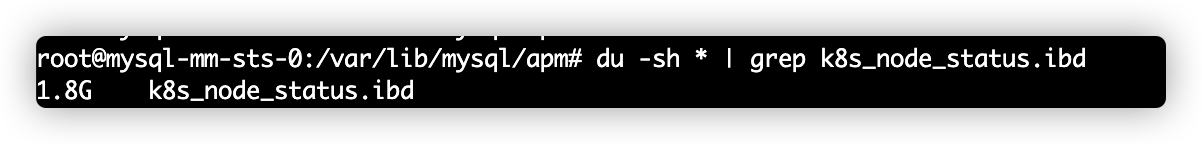

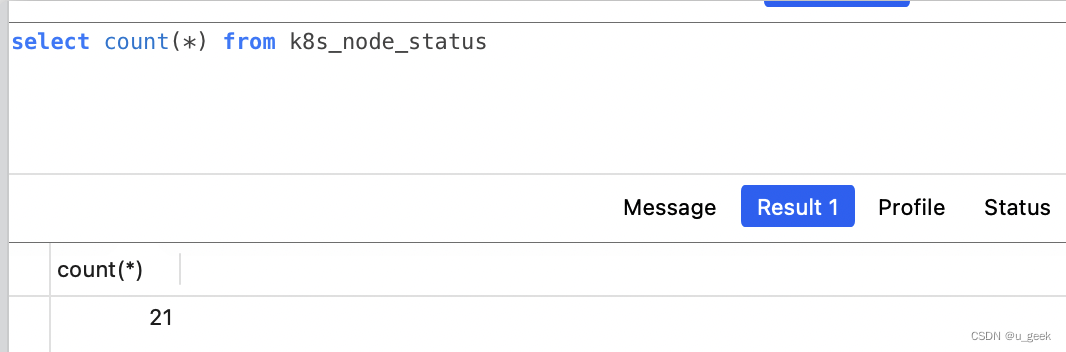

数据库某张表中数据只有100条左右数据,但表文件(ibd文件)占用磁盘空间却达到了1.8G。

该表的表结构如下:

CREATE TABLE `k8s_node_status` (

`id` int(11) NOT NULL AUTO_INCREMENT,

`master_ip` varchar(255) NOT NULL ,

`node_name` varchar(255) DEFAULT NULL,

`node_ip` varchar(255) DEFAULT NULL,

`node_status` tinyint(5) DEFAULT NULL ,

`update_time` timestamp NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP ,

`create_time` timestamp NULL DEFAULT CURRENT_TIMESTAMP,

`delete_flag` tinyint(1) DEFAULT '0' ,

`reason` varchar(100) DEFAULT NULL ,

`message` varchar(4000) DEFAULT NULL,

`labels` text ,

`annotations` text ,

`taints` tinyint(1) DEFAULT NULL ,

`cpu_total` decimal(16,3) DEFAULT NULL ,

`memory_total` decimal(16,3) DEFAULT NULL ,

`fs_total` decimal(16,3) DEFAULT NULL ,

`version` varchar(32) DEFAULT NULL ,

`yaml_content` text CHARACTER SET utf8 COLLATE utf8_general_ci,

`master` tinyint(1) DEFAULT NULL ,

`allow_pods` int(8) DEFAULT NULL ,

`uid` varchar(64) DEFAULT NULL,

`start_time` timestamp NULL DEFAULT NULL ,

`delete_time` timestamp NULL DEFAULT NULL ,

PRIMARY KEY (`id`) USING BTREE,

UNIQUE KEY `unique_uid_deleteflag_masterip` (`uid`,`delete_flag`,`master_ip`) USING BTREE,

KEY `idx_nodename` (`node_name`) USING BTREE,

KEY `idx_nodeip` (`node_ip`) USING BTREE,

KEY `idx_masterip` (`master_ip`) USING BTREE

) ENGINE=InnoDB AUTO_INCREMENT=100 DEFAULT CHARSET=utf8 ROW_FORMAT=DYNAMIC;原因猜想1:数据页空缺造成的页空洞?

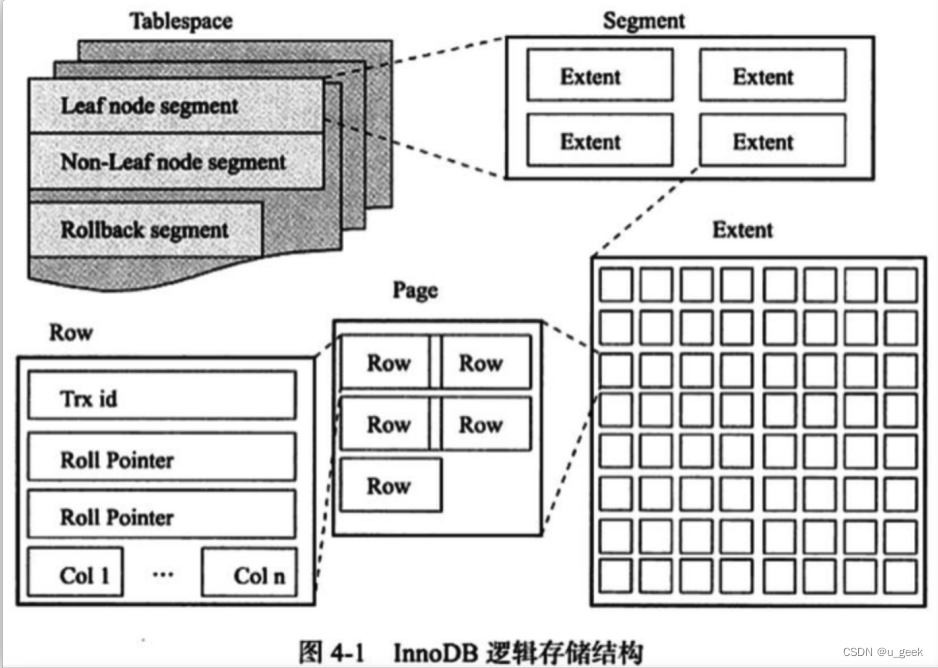

innodb存储引擎中,表数据是以B+树的数据结构存储的,树的每个叶子节点存储一行数据,而这些节点又是存储在数据页(page)上,innodb引擎默认数据页的大小是16Kb,多个连续的页又会组成区(extent)。其存储结构如下图:

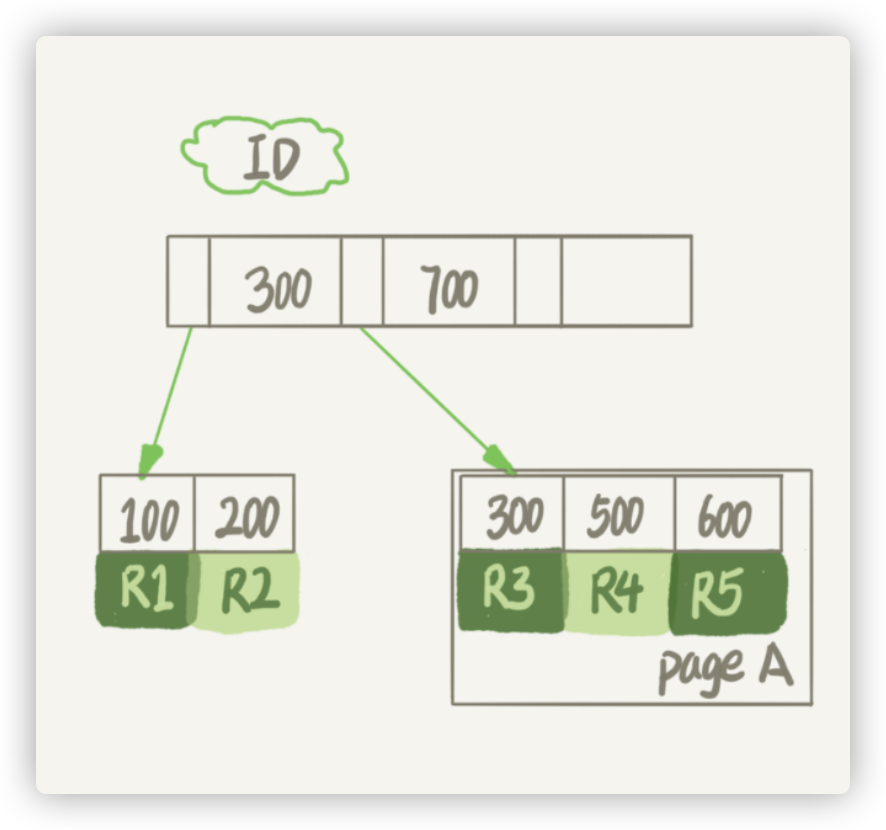

数据在B+树的实例如下:

我们要删掉 R4 这个记录,InnoDB 引擎只会把 R4 这个记录标记为删除。如果之后要再插入一个 ID 在 300 和 600 之间的记录时,可能会复用这个位置。但是,磁盘文件的大小并不会缩小。如果删除page A上的所有数据,那么这个页会被标记为可复用。

不止删除数据会造成页空洞,插入数据时,若主键值不是自增而是随机的,那么就有可能触发页分裂而造成页空洞。

更新数据时,若更新了索引值,那么会删除旧数据,插入一条新数据,也会造成页空洞。例如将R4记录的id从500更新为800,那么会将R4记录标记为删除,同时新增一条记录。

若没有更新索引值,只是更新某个字段,那么如果更新后的记录的长度(占用字节数)比原记录长,且原记录前后无空闲位置,那么会执行delete+update操作,可能会造成页空洞。(这个场景有待严格的验证)

结合以上可能造成数据页空洞的场景,我们的表并没有频繁删除/新增记录的操作,这一点通过自增主键可以看出来,该表的自增主键的值仅为100(AUTO_INCREMENT=100),因此不会是删除和新增数据造成页空洞。那么那就有可能是更新造成的页空洞。

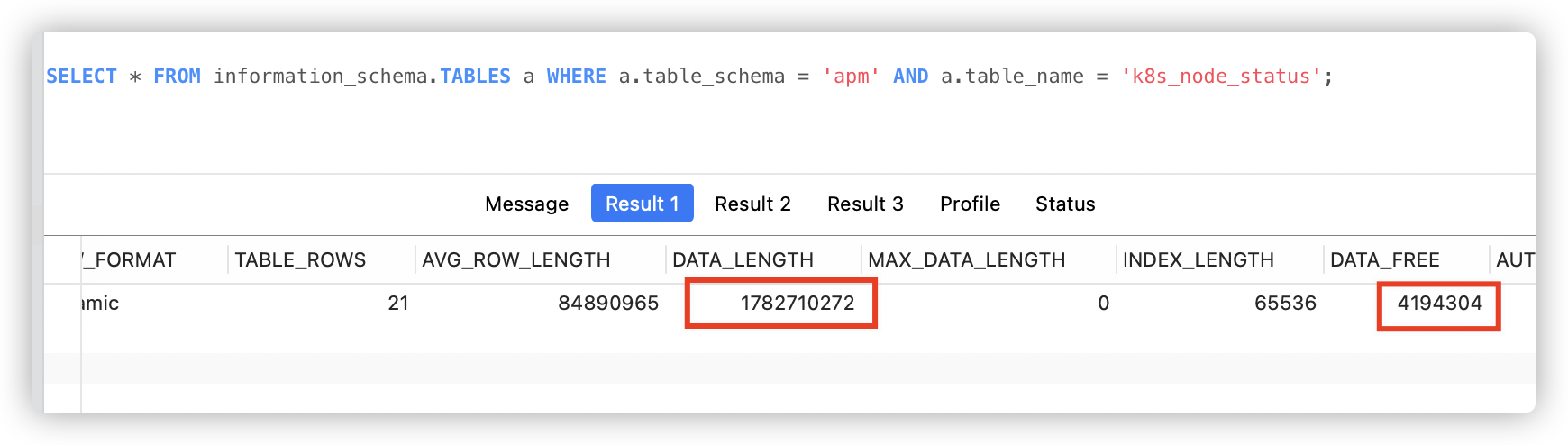

为进一步确定是否是频繁更新造成的页空洞,我们通过查看TABLES表数据,其中DATA_FREE字段表示被分配但未使用的字节数大小,这个值为4194304=4M,这部分空间是可以被分配的,包括上面所说的记录被删除后造成的空白页。显然页空洞并不是造成数据文件过大的原因。

原因猜想2:text频繁更新引起?

再次看我们的表结构,其中有3个text字段,分别是labels、annotations、yaml_content。其中labels、annotations字段实际存储的字符长度在100~500这个范围,不算太大。而yaml_content字段保存了yaml文件,字符长度竟达到的10000+的长度。而这个字段又是频繁变动更新的。因此猜测是yaml_content频繁更新引起的ibd文件过大。

在此之前需要先了解一下,text字段是如何存储的,innodb数据页大小为16KB=16384byte,也就是最多存16384个字节。每个页存储的记录是有限制的,最多存16KB/2-200行的记录,也就是7992行。最少存2行的记录。那么对于超出16384个字符长度的行记录该如何存储呢?对于Compact行格式,有以下结论:

- 结论一:当行长度发生溢出时,数据存放在页类型为Uncompress BLOB页(溢出页)中

- 结论二:对于行溢出数据,其保留数据的前768个字节的前缀数据,之后偏移量指向溢出页

- 结论三:为保证一个页中至少有2条行记录,单条行记录阈值长度为8098,若行长度超过这个阈值,则会发生行溢出,数据会保存在溢出页中,数据页只保留前缀768个字节。

- 结论四:当InnoDB更新存储在溢出页中的大字段时,将不会在原来的位置更新。而是会在写一个新值到一个新的空间,并且不会删除旧的值。

注:Dynamic行格式,列存储是否放到off-page页,主要取决于行大小,他会把行中最长的一列放到off-page,直到数据页能存放下两行。TEXT或BLOB列<=40bytes时总是存在于数据页。这种方式可以避免compact那样把太多的大列值放到B-tree Node(数据页中只存放20个字节的指针,实际的数据存放在Off Page中,之前的Compact 和 Redundant 两种格式会存放768个字前缀字节)。

实际上我们的k8s_node_status表中yaml_content字段长度在10000+字符,且频繁更新,触发了上面结论4,导致表文件(.ibd文件)持续膨胀。

我们在测试环境复制一张k8s_node_status表,此时表中无数据,然后插入一条数据如下:

INSERT INTO `test`.`k8s_node_status`(`id`, `master_ip`, `node_name`, `node_ip`, `node_status`, `update_time`, `create_time`, `delete_flag`, `reason`, `message`, `labels`, `annotations`, `taints`, `cpu_total`, `memory_total`, `fs_total`, `version`, `yaml_content`, `master`, `allow_pods`, `uid`, `start_time`, `delete_time`) VALUES (9, 'test_default_default', '10.10.103.219-slave', '10.10.103.219', 0, '2022-03-24 08:57:52', '2022-01-18 11:42:43', 0, '', '', 'os: linux', 'kubeadm.alpha.kubernetes.io/cri-socket: /var/run/dockershim.sock,management.cattle.io/pod-limits: {\"cpu\":\"6782m\",\"memory\":\"14756Mi\"},management.cattle.io/pod-requests: {\"cpu\":\"6718m\",\"memory\":\"9722Mi\",\"pods\":\"21\"},node.alpha.kubernetes.io/ttl: 0,projectcalico.org/IPv4Address: 10.10.103.219/24,projectcalico.org/IPv4IPIPTunnelAddr: 192.168.24.64,volumes.kubernetes.io/controller-managed-attach-detach: true', 0, 8.000, 16657936384.000, 157696397312.000, '26815075', 'apiVersion: v1', 0, 110, '8f5111b0-1edc-4e7c-bd22-11733693642b', '2022-01-07 03:08:13', NULL);这条数据中的text字段设置为几个字符,总字符数在1000左右,这时分析表文件(ibd文件),可以看到如下结果:

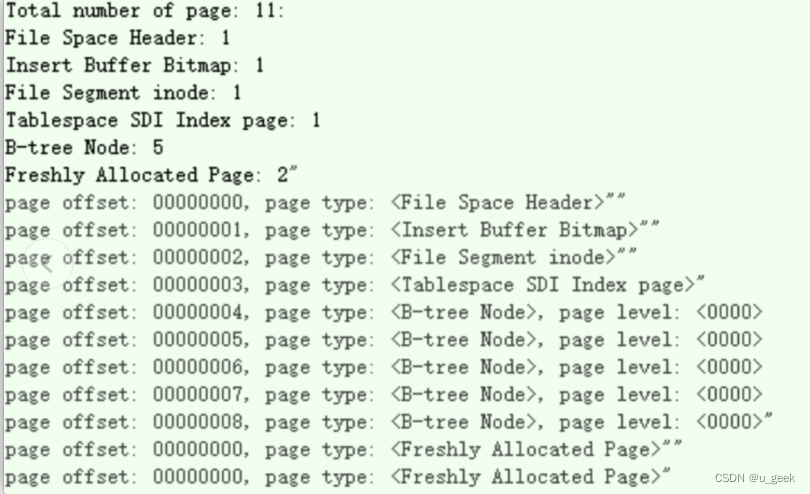

分析:插入一条数据时,表文件中只有4个数据页<B-tree Node>,没有溢出页。符合上述结论3

分析:插入一条数据时,表文件中只有4个数据页<B-tree Node>,没有溢出页。符合上述结论3

然后我们插入一条“正常”数据,这条数据的yaml_content字段长度达到10000个字符,再分析ibd文件如下:

INSERT INTO `test`.`k8s_node_status`(`id`, `master_ip`, `node_name`, `node_ip`, `node_status`, `update_time`, `create_time`, `delete_flag`, `reason`, `message`, `labels`, `annotations`, `taints`, `cpu_total`, `memory_total`, `fs_total`, `version`, `yaml_content`, `master`, `allow_pods`, `uid`, `start_time`, `delete_time`) VALUES (13, 'test_default_default', '10.10.103.221-slave', '10.10.103.221', 0, '2022-03-24 08:58:57', '2022-01-18 11:42:43', 0, '', '', 'beta.kubernetes.io/arch: amd64,beta.kubernetes.io/os: linux,kubernetes.io/arch: amd64,kubernetes.io/hostname: 10.10.103.221-slave,kubernetes.io/os: linux', 'kubeadm.alpha.kubernetes.io/cri-socket: /var/run/dockershim.sock,management.cattle.io/pod-limits: {\"cpu\":\"4882m\",\"memory\":\"6589Mi\"},management.cattle.io/pod-requests: {\"cpu\":\"4718m\",\"memory\":\"5895Mi\",\"pods\":\"12\"},node.alpha.kubernetes.io/ttl: 0,projectcalico.org/IPv4Address: 10.10.103.221/24,projectcalico.org/IPv4IPIPTunnelAddr: 192.168.68.192,volumes.kubernetes.io/controller-managed-attach-detach: true', 0, 8.000, 16657932288.000, 157696397312.000, '26815328', 'apiVersion: v1\nkind: Node\nmetadata:\n annotations:\n kubeadm.alpha.kubernetes.io/cri-socket: /var/run/dockershim.sock\n management.cattle.io/pod-limits: \'{\"cpu\":\"4882m\",\"memory\":\"6589Mi\"}\'\n management.cattle.io/pod-requests: \'{\"cpu\":\"4718m\",\"memory\":\"5895Mi\",\"pods\":\"12\"}\'\n node.alpha.kubernetes.io/ttl: \'0\'\n projectcalico.org/IPv4Address: 10.10.103.221/24\n projectcalico.org/IPv4IPIPTunnelAddr: 192.168.68.192\n volumes.kubernetes.io/controller-managed-attach-detach: \'true\'\n creationTimestamp: \'2022-01-07T03:08:02.000+08:00\'\n labels:\n beta.kubernetes.io/arch: amd64\n beta.kubernetes.io/os: linux\n kubernetes.io/arch: amd64\n kubernetes.io/hostname: 10.10.103.221-slave\n kubernetes.io/os: linux\n managedFields:\n - apiVersion: v1\n fieldsType: FieldsV1\n fieldsV1:\n f:metadata:\n f:annotations:\n f:kubeadm.alpha.kubernetes.io/cri-socket: {}\n manager: kubeadm\n operation: Update\n time: \'2022-01-07T03:08:03.000+08:00\'\n - apiVersion: v1\n fieldsType: FieldsV1\n fieldsV1:\n f:metadata:\n f:annotations:\n f:projectcalico.org/IPv4Address: {}\n f:projectcalico.org/IPv4IPIPTunnelAddr: {}\n f:status:\n f:conditions:\n k:{\"type\":\"NetworkUnavailable\"}:\n .: {}\n f:lastHeartbeatTime: {}\n f:lastTransitionTime: {}\n f:message: {}\n f:reason: {}\n f:status: {}\n f:type: {}\n manager: calico-node\n operation: Update\n time: \'2022-01-07T03:09:23.000+08:00\'\n - apiVersion: v1\n fieldsType: FieldsV1\n fieldsV1:\n f:metadata:\n f:annotations:\n f:management.cattle.io/pod-limits: {}\n f:management.cattle.io/pod-requests: {}\n manager: agent\n operation: Update\n time: \'2022-03-10T09:43:22.000+08:00\'\n - apiVersion: v1\n fieldsType: FieldsV1\n fieldsV1:\n f:metadata:\n f:annotations:\n f:node.alpha.kubernetes.io/ttl: {}\n f:spec:\n f:podCIDR: {}\n f:podCIDRs:\n .: {}\n v:\"192.168.3.0/24\": {}\n manager: kube-controller-manager\n operation: Update\n time: \'2022-03-22T09:47:38.000+08:00\'\n - apiVersion: v1\n fieldsType: FieldsV1\n fieldsV1:\n f:metadata:\n f:annotations:\n .: {}\n f:volumes.kubernetes.io/controller-managed-attach-detach: {}\n f:labels:\n .: {}\n f:beta.kubernetes.io/arch: {}\n f:beta.kubernetes.io/os: {}\n f:kubernetes.io/arch: {}\n f:kubernetes.io/hostname: {}\n f:kubernetes.io/os: {}\n f:status:\n f:addresses:\n .: {}\n k:{\"type\":\"Hostname\"}:\n .: {}\n f:address: {}\n f:type: {}\n k:{\"type\":\"InternalIP\"}:\n .: {}\n f:address: {}\n f:type: {}\n f:allocatable:\n .: {}\n f:cpu: {}\n f:ephemeral-storage: {}\n f:hugepages-1Gi: {}\n f:hugepages-2Mi: {}\n f:memory: {}\n f:pods: {}\n f:capacity:\n .: {}\n f:cpu: {}\n f:ephemeral-storage: {}\n f:hugepages-1Gi: {}\n f:hugepages-2Mi: {}\n f:memory: {}\n f:pods: {}\n f:conditions:\n .: {}\n k:{\"type\":\"DiskPressure\"}:\n .: {}\n f:lastHeartbeatTime: {}\n f:lastTransitionTime: {}\n f:message: {}\n f:reason: {}\n f:status: {}\n f:type: {}\n k:{\"type\":\"MemoryPressure\"}:\n .: {}\n f:lastHeartbeatTime: {}\n f:lastTransitionTime: {}\n f:message: {}\n f:reason: {}\n f:status: {}\n f:type: {}\n k:{\"type\":\"PIDPressure\"}:\n .: {}\n f:lastHeartbeatTime: {}\n f:lastTransitionTime: {}\n f:message: {}\n f:reason: {}\n f:status: {}\n f:type: {}\n k:{\"type\":\"Ready\"}:\n .: {}\n f:lastHeartbeatTime: {}\n f:lastTransitionTime: {}\n f:message: {}\n f:reason: {}\n f:status: {}\n f:type: {}\n f:daemonEndpoints:\n f:kubeletEndpoint:\n f:Port: {}\n f:images: {}\n f:nodeInfo:\n f:architecture: {}\n f:bootID: {}\n f:containerRuntimeVersion: {}\n f:kernelVersion: {}\n f:kubeProxyVersion: {}\n f:kubeletVersion: {}\n f:machineID: {}\n f:operatingSystem: {}\n f:osImage: {}\n f:systemUUID: {}\n manager: kubelet\n operation: Update\n time: \'2022-03-22T09:47:59.000+08:00\'\n name: 10.10.103.221-slave\n resourceVersion: \'26815328\'\n selfLink: /api/v1/nodes/10.10.103.221-slave\n uid: c8cac3c8-e229-40dc-93e5-219a372fc80e\nspec:\n podCIDR: 192.168.3.0/24\n podCIDRs:\n - 192.168.3.0/24\nstatus:\n addresses:\n - type: InternalIP\n address: 10.10.103.221\n - type: Hostname\n address: 10.10.103.221-slave\n allocatable:\n cpu: \'8\'\n ephemeral-storage: \'140969826890\'\n hugepages-1Gi: \'0\'\n hugepages-2Mi: \'0\'\n memory: 16165112Ki\n pods: \'110\'\n capacity:\n cpu: \'8\'\n ephemeral-storage: 152962052Ki\n hugepages-1Gi: \'0\'\n hugepages-2Mi: \'0\'\n memory: 16267512Ki\n pods: \'110\'\n conditions:\n - type: NetworkUnavailable\n lastHeartbeatTime: \'2022-03-22T09:46:31.000+08:00\'\n lastTransitionTime: \'2022-03-22T09:46:31.000+08:00\'\n message: Calico is running on this node\n reason: CalicoIsUp\n status: \'False\'\n - type: MemoryPressure\n lastHeartbeatTime: \'2022-03-24T08:56:50.000+08:00\'\n lastTransitionTime: \'2022-03-22T09:45:55.000+08:00\'\n message: kubelet has sufficient memory available\n reason: KubeletHasSufficientMemory\n status: \'False\'\n - type: DiskPressure\n lastHeartbeatTime: \'2022-03-24T08:56:50.000+08:00\'\n lastTransitionTime: \'2022-03-22T09:45:55.000+08:00\'\n message: kubelet has no disk pressure\n reason: KubeletHasNoDiskPressure\n status: \'False\'\n - type: PIDPressure\n lastHeartbeatTime: \'2022-03-24T08:56:50.000+08:00\'\n lastTransitionTime: \'2022-03-22T09:45:55.000+08:00\'\n message: kubelet has sufficient PID available\n reason: KubeletHasSufficientPID\n status: \'False\'\n - type: Ready\n lastHeartbeatTime: \'2022-03-24T08:56:50.000+08:00\'\n lastTransitionTime: \'2022-03-22T09:45:55.000+08:00\'\n message: kubelet is posting ready status\n reason: KubeletReady\n status: \'True\'\n daemonEndpoints:\n kubeletEndpoint:\n port: 10250\n images:\n - names:\n - 10.1.11.205/test-tool/myjmeter@sha256:08f8e98cdb364567d59f76b67c8c4879d0963eacc558b7e958d4b91b4fedd9a3\n - 10.10.102.120:8443/testapp/myjmeter@sha256:08f8e98cdb364567d59f76b67c8c4879d0963eacc558b7e958d4b91b4fedd9a3\n - 10.1.11.205/test-tool/myjmeter:v1.0\n - 10.10.102.120:8443/testapp/myjmeter:v1.0\n sizeBytes: 842714693\n - names:\n - 10.1.11.205/k8s-deploy/bookdemo@sha256:e80a8011e56092ea1ac19f622984ff985e3d5ef7d7025b779743222a2eb0c8ab\n - 10.1.11.205/k8s-deploy/bookdemo:v5\n sizeBytes: 840693868\n - names:\n - 10.10.102.213:8443/cloudnevro-test/nephele@sha256:e53ccbb3397ce2da422d5b5644f178c3b65aacded64cdc9aeb997eb63689fbcd\n - 10.10.102.213:8443/cloudnevro-test/nephele:v1.0.0\n sizeBytes: 773951646\n - names:\n - 10.10.102.213:8443/cloudnevro-jxjk/nephele@sha256:c8a728f32113a22a2becf7b89b916de6d79dcfab2c51f43a75c4bf284b1f86c5\n - 10.10.102.213:8443/cloudnevro-jxjk/nephele:v1.0.0\n sizeBytes: 773943383\n - names:\n - 10.10.102.213:8443/cloudnevro-jxjk/nephele@sha256:8a8fd140e074a2a5da3ba64250a93cbb49e03830ace5562fed6d29ecd26a26f1\n sizeBytes: 773937443\n - names:\n - 10.10.102.213:8443/cloudnevro-jxjk/nephele@sha256:d6155d7d8db24b7b2e0a65052cd27b00bbc89c78ad4fc426bb694d45f2b2c187\n sizeBytes: 773927434\n - names:\n - 10.1.11.205/k8s-deploy-test2/nephele@sha256:8d345345366ed39c55b125eb4a343c375266b79e19608ee6d2221c0f380ca4b4\n sizeBytes: 772415391\n - names:\n - 10.1.11.205/k8s-deploy-test2/nephele@sha256:a0e9754459b2733cf7514aa0c0d318933f3335ca58b4a7153bb0f312b1a2ea47\n - 10.1.11.205/k8s-deploy-test2/nephele:v1.0.0\n sizeBytes: 772415336\n - names:\n - 10.1.11.205/k8s-deploy-test2/nephele@sha256:0b7baac8e4a3125161c342948729e995dbad086dd69e3cea21bbc4c4bbea731d\n sizeBytes: 772408699\n - names:\n - 10.1.11.205/practice/bookdemo@sha256:9c735080822acb751c0c48c56711b81fb33a3074247979db5cb5f63852af620e\n - 10.1.11.205/practice/bookdemo:v0.1\n sizeBytes: 769109585\n - names:\n - 10.1.11.205/k8s-deploy-test2/nephele@sha256:a7793d97feaafb76683dfca04cff48d4372f58ee5fd09062a105243ce5c8afaa\n sizeBytes: 759450360\n - names:\n - 10.1.11.205/k8s-deploy-test2/nephele@sha256:090bc396c88f2d3ffb8fba4d71fae95c62830002dd3620a290cb549e59abcb30\n sizeBytes: 759434977\n - names:\n - 10.1.11.205/k8s-deploy-test2/nginx-ingress-controller@sha256:f778a612096d158bcad7196f30099eaf3cbdf34780dcdbb618107ccd25e3647a\n - 10.10.102.120:8443/cloudmonitor/nginx-ingress-controller@sha256:f778a612096d158bcad7196f30099eaf3cbdf34780dcdbb618107ccd25e3647a\n - 10.1.11.205/k8s-deploy-test2/nginx-ingress-controller:0.24.1-hc3\n - 10.10.102.120:8443/cloudmonitor/nginx-ingress-controller:0.24.1-hc3\n sizeBytes: 690449615\n - names:\n - 10.1.11.205/k8s-deploy-test2/apm-es-server@sha256:85ba7f7ba225106797131c9dc428b35e18308b4edf12830717af3666f61a0690\n - 10.1.11.205/k8s-deploy-test2/apm-es-server:v1.0.0\n sizeBytes: 685758589\n - names:\n - 10.1.11.205/k8s-deploy-test2/apm-es-server@sha256:0295530a3b82a54200336841b741a69867fa4674ce7a8ae10130e3cbbd5a2669\n sizeBytes: 685758449\n - names:\n - 10.1.11.205/k8s-deploy-test2/apm-es-server@sha256:0a05d018f439b25033d3dc811c3f77f028c6c1e45f80641a5803248fea5c6924\n sizeBytes: 685758444\n - names:\n - 10.1.11.205/k8s-deploy-test2/apm-es-server@sha256:5a9f0205b470f81b8d57b95ccd2c96ec1a654386dbe0c0122dbe90b95696552d\n sizeBytes: 685758441\n - names:\n - 10.1.11.205/k8s-deploy-test2/apm-es-server@sha256:28a8abb342aacfdab7d948dd84681cb1cb9432473b662fc3bc81bd2100444a0b\n sizeBytes: 685758400\n - names:\n - 10.1.11.205/k8s-deploy-test2/apm-es-server@sha256:4d88565334294b4061c0a7070205f1ed3cdd39538fc44b3252d701e5e293c5a6\n sizeBytes: 685757724\n - names:\n - 10.1.11.205/k8s-deploy-test2/apm-es-server@sha256:5e062ab920630698b66a8e491e38fa699fcad930f5fefb7573c05a94fbc9da2d\n sizeBytes: 685737656\n - names:\n - 10.1.11.205/k8s-deploy-test2/apm-es-server@sha256:326d5fe5ccfa0018aa7fd8b96aa3e24b19dce5c9ef16d247a79a1a93ae712a28\n sizeBytes: 685694315\n - names:\n - 10.1.11.205/k8s-deploy-test2/apm-es-server@sha256:4dadd93520765c3493adf02248727fe2ddee5207429aa46319de06dc51201114\n sizeBytes: 685694273\n - names:\n - 10.1.11.205/k8s-deploy-test2/apm-es-server@sha256:0c1d4a33e3b87aeb918f8bac862270ec59eb7d78ea9d21b55d36e2f53a14a830\n sizeBytes: 685694265\n - names:\n - 10.1.11.205/k8s-deploy-test2/apm-es-server@sha256:fc42f962222a286751096044a66f754139322e91102ac9423b854d8ecb761d41\n sizeBytes: 685694260\n - names:\n - 10.1.11.205/k8s-deploy-test2/apm-es-server@sha256:b1a3871927a913afd1d907b3b7e47190f8b70f7e0d36c9f9f7fe28fabe07891b\n sizeBytes: 685681834\n - names:\n - 10.1.11.205/k8s-deploy-test2/apm-es-server@sha256:c435c426df269c0ba15e00ca64bc68d5cdfaa6ebf38ff6ac6ecbd79d1def93c1\n sizeBytes: 685681780\n - names:\n - 10.1.11.205/k8s-deploy-test2/apm-es-server@sha256:0fb5bef7bf5647bace810d26954028849afaafcd17fbc2bd07ca383ee8d373e0\n sizeBytes: 685681780\n - names:\n - 10.1.11.205/k8s-deploy-test2/apm-es-server@sha256:a37d86030d7847dae6cc9ceb2e5e0f934853bad8f40ad4769fd91b18d85e6f9e\n sizeBytes: 685681452\n - names:\n - 10.1.11.205/k8s-deploy-test2/apm-es-server@sha256:797bfab4d0e202be082ed3727dc7d1d86c47a25e1286eb8e82fd9702c87f9671\n sizeBytes: 685681386\n - names:\n - 10.1.11.205/k8s-deploy-test2/apm-es-server@sha256:94099e785dc6caa22bb19bee541b551f8d40facd77eeecebb3eb60c6a2fa1e0d\n sizeBytes: 685681319\n - names:\n - 10.1.11.205/k8s-deploy-test2/apm-es-server@sha256:9c99aa4bc12a94b0085a4df59e824d2f14d0a43975bde91dfc5842bb7094c034\n sizeBytes: 685209709\n - names:\n - 10.10.102.213:8443/cloudnevro-jxjk/apm-es-server@sha256:ca00480579aa73d6fc276996c1be057f4ba5f12f6a620caab2c43ed8d7cc3b5f\n - 10.10.102.213:8443/cloudnevro-jxjk/apm-es-server:v1.0.0\n sizeBytes: 682980650\n - names:\n - 10.10.102.213:8443/cloudnevro-jxjk/apm-es-server@sha256:045d610323cd35ba63477567a80dde86995878abbfd3239817c1d0d81cb963e2\n sizeBytes: 682980650\n - names:\n - 10.10.102.213:8443/cloudnevro-jxjk/apm-es-server@sha256:4c751ba0f68a92dc1cf02c2b483dd25b7238772e1ee23ad1a95f18f1c37c3ac8\n sizeBytes: 682980589\n - names:\n - 10.10.102.213:8443/cloudnevro-jxjk/apm-es-server@sha256:e8a0b1446aa899ae5eebe34ed3af02b1c31bc330dcf2b96430616a4b042d48ed\n sizeBytes: 682980589\n - names:\n - 10.10.102.213:8443/cloudnevro-jxjk/apm-es-server@sha256:f05c3143f5b5021a27f2f4e34a4f14f601bbebb10cc634d14424db3db06d213c\n sizeBytes: 682978980\n - names:\n - 10.10.102.120:8443/cloudmonitor-jxjk/apm-es-server@sha256:ef220df4ab1ac0c1105dd84677ce14225163b881fbd55166ae7501a0561d60bf\n - 10.10.102.120:8443/cloudmonitor-jxjk/apm-es-server:v1.0.0\n sizeBytes: 682978922\n - names:\n - 10.10.102.120:8443/cloudmonitor-jxjk/apm-es-server@sha256:af0b690c1b52ee8ae0195a827cec6eefd1d91fa15161153fe3efe6824f9a94e7\n sizeBytes: 682978729\n - names:\n - 10.10.102.120:8443/cloudmonitor-test/apm-es-server@sha256:212f05220ff1952d486b8c326ccfbfd115583d4d194fed88a4bb9ddcabeacbee\n - 10.10.102.120:8443/cloudmonitor-test/apm-es-server:v1.0.0\n sizeBytes: 682974277\n - names:\n - 10.1.11.205/k8s-deploy/bookdemo@sha256:c3fe72f35c6b36d9502b0d3a4125dac4f711a096aac13a300b9360fc5eab41dd\n - 10.1.11.205/k8s-deploy/bookdemo:v6\n sizeBytes: 646684901\n - names:\n - 10.10.102.213:8443/cloudnevro-test/application-monitor@sha256:a96c4f23cf22d4a1f87a078f8043d519e5f28026637ff76d53a0628aa28af856\n sizeBytes: 631964747\n - names:\n - 10.10.102.213:8443/cloudnevro-test/application-monitor@sha256:f4854b35997fc11568f68f7d6a034ea0f167cbb96c9abd26aa5ce2ff34ccb180\n - 10.10.102.213:8443/cloudnevro-test/application-monitor:v1.0.0\n sizeBytes: 631964747\n - names:\n - 10.10.102.120:8443/cloudmonitor-test/application-monitor@sha256:f0ef6b6b2e42cdfa9aa3889a78c3e84c2af9871f601a10b4456710209b6ea626\n - 10.10.102.120:8443/cloudmonitor-test/application-monitor:v1.0.0\n sizeBytes: 631964747\n - names:\n - 10.1.11.205/k8s-deploy-test2/apm-config-server@sha256:c88c8d20ede63247447f73368f6ba3efd942937398fd7e92f12a5e69eefed40a\n - 10.1.11.205/k8s-deploy-test2/apm-config-server:v1.0.0\n sizeBytes: 628053443\n - names:\n - 10.1.11.205/k8s-deploy-test2/apm-config-server@sha256:21afc3ee1cb0aa5a2107657162a1bde2220ee26535378a4ab4f052b8d594fe81\n sizeBytes: 628053404\n - names:\n - 10.1.11.205/k8s-deploy-test2/apm-config-server@sha256:8d8b85c838c128f6e1923894a434d09e7614100d4ba2397d7cbdf01205174986\n sizeBytes: 628052411\n - names:\n - 10.1.11.205/k8s-deploy-test2/application-monitor@sha256:5305e429d4d80b190404247af9afac8240deb910dc056e8cef4def1bc6a8cd17\n - 10.1.11.205/k8s-deploy-test2/application-monitor:v1.0.0\n sizeBytes: 618764177\n - names:\n - 10.1.11.205/k8s-deploy-test2/application-monitor@sha256:793afed6053fd14c53d7cadbcdc0738c096632b2525be83a0af3ec9ecc8d681f\n sizeBytes: 615885903\n - names:\n - 10.10.102.213:8443/cloudnevro-test/apm-alarm@sha256:4a0d7854da18903da541c940b36796a8c831c9c1115b6d641f55053bd685876e\n - 10.10.102.213:8443/cloudnevro-test/apm-alarm:v1.0.0\n sizeBytes: 599375621\n - names:\n - 10.10.102.213:8443/cloudnevro-test/bookdemo@sha256:ce6da0f0a92256a446a7427ebd4f5da65e36ddb524bebc8af2ca9f0b8685b405\n - 10.10.102.213:8443/cloudnevro-test/bookdemo:v.2.0-okhttp-nomq-3\n sizeBytes: 596488490\n nodeInfo:\n architecture: amd64\n bootID: 358c7056-d168-4ff9-af0e-84d1af398df8\n containerRuntimeVersion: docker://20.10.12\n kernelVersion: 3.10.0-693.el7.x86_64\n kubeProxyVersion: v1.18.1\n kubeletVersion: v1.18.1\n machineID: 8a33de0213194776a70fc54cd50c024e\n operatingSystem: linux\n osImage: CentOS Linux 7 (Core)\n systemUUID: 1B0D1242-F65D-310E-E70D-7731ABAE59F0\n', 0, 110, 'c8cac3c8-e229-40dc-93e5-219a372fc80e', '2022-01-07 03:08:02', NULL);

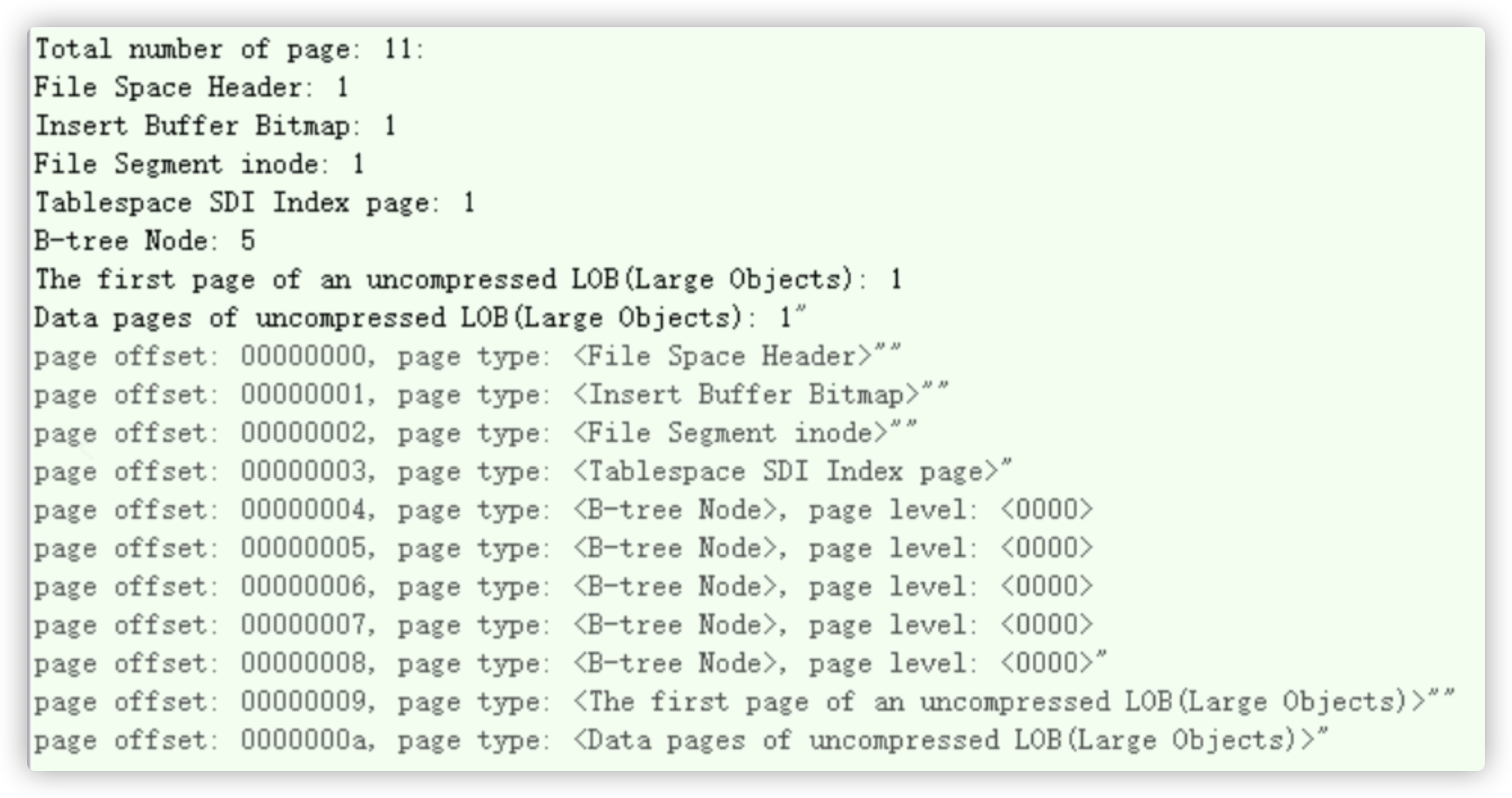

分析:此时因为数据长度超过溢出阈值,发生了页溢出,从上图可以看出新增了2个溢出页<pages of uncompressed LOB>.

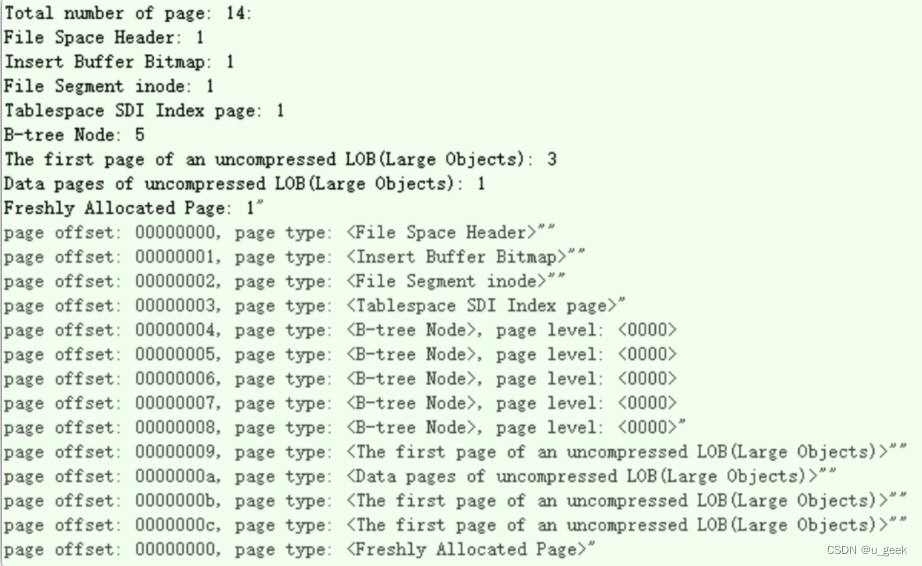

我们将这个条数据更新100次,每次更新仅更新yaml_content字段,且每次在原yaml_content字段后随机增加0-100个字符,然后分析ibd文件,结果如下:

分析:此时虽更新100次,但数据页<B-tree Node>并未增加,而溢出页<pages of uncompressed LOB>持续增加。

分析:此时虽更新100次,但数据页<B-tree Node>并未增加,而溢出页<pages of uncompressed LOB>持续增加。

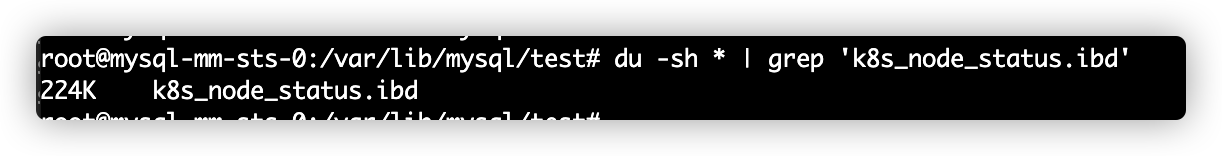

同时ibd文件大小已从177K增加到224K大小。

这个结果验证了之前的结论,也就是大的text字段更新时,将不会在原来的位置更新。而是会在写一个新值到一个新的空间,并且不会删除旧的值。

解决方案:

1、重建表

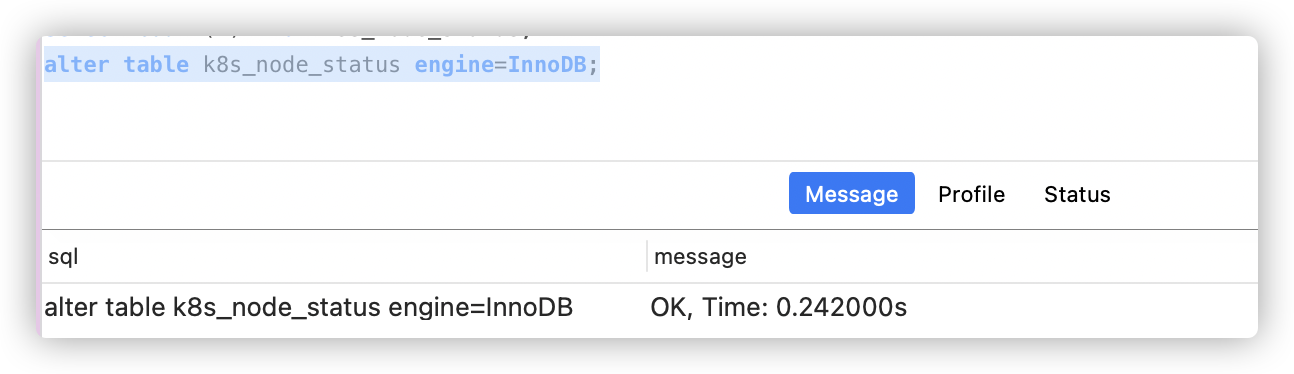

由于表记录持续频繁更新,表文件也持续增大,我们可以通过重建表来释放空间,可执行以下语句:

alter table k8s_node_status engine=InnoDB;

这个语句首先会建一张临时表,然后将旧表数据迁移到临时表,迁移完之后,使用临时表替换旧表,最后释放旧表空间。并且这个过程是Online的,即迁移过程中表依旧可供业务增删改查,不影响业务。

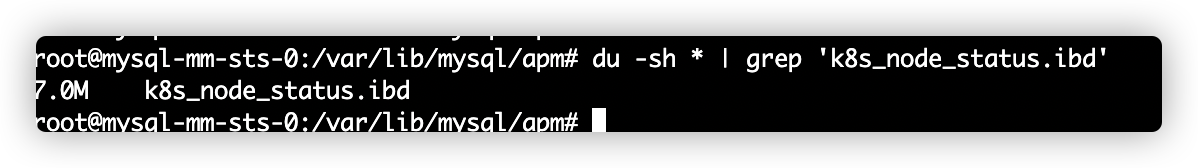

因为k8s_node_status表数据量比较小,这个重建过程耗时也是比较短,重建之后表文件从之前的1.8G减小到7M大小。

该方案可作为一种临时方案,通过一个定时任务,每天/每周执行一次重建表过程,在表数据不大的情况下,重建过程对业务影响较小。

2、优化字段

对于数据比较大的text/blob/varchar字段,若字段不会频繁更新或不会更新,那么存储在mysql中没什么问题。若确实需要频繁更新,那么需要考虑这个字段是否需要存储这么长的字段,能否将字段长度保持在一定长度内(8089),超出长度对字段进行截断。避免页溢出。

ref:

1、官方文档TABLES表说明MySQL :: MySQL 8.0 Reference Manual :: 26.3.38 The INFORMATION_SCHEMA TABLES Table

2、林晓斌:为什么表数据删掉一半,表文件大小不变?

3、MySQL技术内幕:InnoDB存储引擎,第四章

4、高性能MySQL

https://github.com/SimonOrK/py_innodb_page_info_GUI.git

https://github.com/SimonOrK/py_innodb_page_info_GUI.git

1822

1822

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?