In Eclipse, create a new Java project named mapreduce.

1) Add the following JARs to the build path

hadoop-2.8.5\share\hadoop\hdfs\hadoop-hdfs-2.8.5.jar

hadoop-2.8.5\share\hadoop\hdfs\lib\*

hadoop-2.8.5\share\hadoop\common\hadoop-common-2.8.5.jar

hadoop-2.8.5\share\hadoop\common\lib\*

hadoop-2.8.5\share\hadoop\mapreduce\hadoop-mapreduce-client-app-2.8.5.jar

hadoop-2.8.5\share\hadoop\mapreduce\hadoop-mapreduce-client-common-2.8.5.jar

hadoop-2.8.5\share\hadoop\mapreduce\hadoop-mapreduce-client-core-2.8.5.jar

hadoop-2.8.5\share\hadoop\mapreduce\hadoop-mapreduce-client-hs-2.8.5.jar

hadoop-2.8.5\share\hadoop\mapreduce\hadoop-mapreduce-client-hs-plugins-2.8.5.jar

hadoop-2.8.5\share\hadoop\mapreduce\hadoop-mapreduce-client-jobclient-2.8.5.jar

hadoop-2.8.5\share\hadoop\mapreduce\hadoop-mapreduce-client-jobclient-2.8.5-tests.jar

hadoop-2.8.5\share\hadoop\mapreduce\hadoop-mapreduce-client-shuffle-2.8.5.jar

hadoop-2.8.5\share\hadoop\mapreduce\lib\*

hadoop-2.8.5\share\hadoop\yarn\hadoop-yarn-api-2.8.5.jar

hadoop-2.8.5\share\hadoop\yarn\hadoop-yarn-applications-distributedshell-2.8.5.jar

hadoop-2.8.5\share\hadoop\yarn\hadoop-yarn-applications-unmanaged-am-launcher-2.8.5.jar

hadoop-2.8.5\share\hadoop\yarn\hadoop-yarn-client-2.8.5.jar

hadoop-2.8.5\share\hadoop\yarn\hadoop-yarn-common-2.8.5.jar

hadoop-2.8.5\share\hadoop\yarn\hadoop-yarn-registry-2.8.5.jar

hadoop-2.8.5\share\hadoop\yarn\lib\*

2) Create the WordCoutMapper class, which splits each input line into words

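import java.io.IOException;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

/*
 * Word count: emits <word, 1> for every word.
 *
 * KEYIN:    starting byte offset of each input line (e.g. 0~10, 11~20)
 * VALUEIN:  the line of text
 *
 * KEYOUT:   type of the key the mapper sends to the reduce phase
 * VALUEOUT: type of the value the mapper sends to the reduce phase
 */
public class WordCoutMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

    @Override
    protected void map(LongWritable key, Text value, Context context)
            throws IOException, InterruptedException {
        // 1. Read one line of input
        String line = value.toString();
        // 2. Split on whitespace, e.g. "hello hunter" -> ["hello", "hunter"]
        String[] words = line.split("\\s+");
        // 3. Loop over the words and pass each one to the next stage
        for (String w : words) {
            if (w.isEmpty()) {
                continue; // skip the empty token produced by leading whitespace
            }
            // 4. Emit <word, 1> to the reducer
            context.write(new Text(w), new IntWritable(1));
        }
    }
}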
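To make the key/value flow of the map phase easier to picture, here is a minimal plain-Java sketch that uses no Hadoop classes. The names `MapPhaseSketch`, `mapLine` and `lineOffsets` are hypothetical and exist only for illustration; the offset calculation assumes UTF-8 text with `\n` line endings, which may differ from your input file.

```java
import java.nio.charset.StandardCharsets;
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

/** Plain-Java illustration of the map phase; not part of the Hadoop job. */
class MapPhaseSketch {

    /** Emits one (word, 1) pair per whitespace-separated token in the line. */
    static List<Map.Entry<String, Integer>> mapLine(String line) {
        List<Map.Entry<String, Integer>> out = new ArrayList<>();
        for (String w : line.trim().split("\\s+")) {
            if (!w.isEmpty()) {
                out.add(new SimpleEntry<>(w, 1));
            }
        }
        return out;
    }

    /** Byte offset at which each line starts (assumes "\n" line endings). */
    static long[] lineOffsets(String[] lines) {
        long[] offsets = new long[lines.length];
        long pos = 0;
        for (int i = 0; i < lines.length; i++) {
            offsets[i] = pos;
            // +1 accounts for the newline that terminates the line
            pos += lines[i].getBytes(StandardCharsets.UTF_8).length + 1;
        }
        return offsets;
    }

    public static void main(String[] args) {
        String[] lines = {"hello tony", "hello hunter"};
        long[] offsets = lineOffsets(lines);
        for (int i = 0; i < lines.length; i++) {
            // offset plays the role of KEYIN, the line plays the role of VALUEIN
            System.out.println(offsets[i] + " -> " + mapLine(lines[i]));
        }
    }
}
```

Each printed row corresponds to one call of `map`: the number on the left stands in for the `LongWritable` key and the list on the right for the pairs written to the context.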

3) Create the WordCoutReduce class, which counts the occurrences of each word

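import java.io.IOException;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

public class WordCoutReduce extends Reducer<Text, IntWritable, Text, IntWritable> {

    @Override
    protected void reduce(Text key, Iterable<IntWritable> values, Context context)
            throws IOException, InterruptedException {
        // 1. Counter for the occurrences of this word
        int sum = 0;
        // 2. Add up all the values received for this key
        for (IntWritable count : values) {
            sum += count.get();
        }
        // 3. Write out <word, total>
        context.write(key, new IntWritable(sum));
    }
}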
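Between the mapper and the reducer, the framework groups all values that share a key and hands each group to `reduce` in sorted key order. The following plain-Java sketch imitates that grouping with a `TreeMap`; it is only an approximation of the idea (the real shuffle works on serialized bytes across tasks), and `ReducePhaseSketch`, `shuffle` and `reduce` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Plain-Java illustration of grouping and summing; not part of the Hadoop job. */
class ReducePhaseSketch {

    /** Groups the 1s emitted for each word under that word, sorted by key. */
    static Map<String, List<Integer>> shuffle(List<String> words) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (String w : words) {
            grouped.computeIfAbsent(w, k -> new ArrayList<>()).add(1);
        }
        return grouped;
    }

    /** Sums the values of one group, as the reduce method does for one key. */
    static int reduce(Iterable<Integer> values) {
        int sum = 0;
        for (int v : values) {
            sum += v;
        }
        return sum;
    }

    public static void main(String[] args) {
        List<String> words = Arrays.asList("hello", "tony", "hello", "hunter");
        shuffle(words).forEach((word, ones) ->
                System.out.println(word + "\t" + reduce(ones)));
    }
}
```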

4) Create the driver class WordCoutDriver

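import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class WordCoutDriver {

    public static void main(String[] args)
            throws IOException, ClassNotFoundException, InterruptedException {
        // 1. Create the job from the configuration
        Configuration conf = new Configuration();
        Job job = Job.getInstance(conf);

        // 2. Locate the JAR through the driver class
        job.setJarByClass(WordCoutDriver.class);

        // 3. Register the custom mapper and reducer classes
        job.setMapperClass(WordCoutMapper.class);
        job.setReducerClass(WordCoutReduce.class);

        // 4. Set the key/value types of the map output
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(IntWritable.class);

        // 5. Set the key/value types of the reduce output (the final output)
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);

        // 6. Set the input path and the path for the results
        FileInputFormat.setInputPaths(job, new Path("e://in"));
        FileOutputFormat.setOutputPath(job, new Path("e://out"));

        // 7. Submit the job and wait; prints 0 on success, 1 on failure
        boolean rs = job.waitForCompletion(true);
        System.out.println(rs ? 0 : 1);
    }
}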
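One thing to keep in mind when running the driver more than once: Hadoop's `FileOutputFormat` refuses to start a job whose output directory already exists. A minimal plain-Java sketch for clearing a local output directory beforehand is shown here; `OutputDirCleaner` and `deleteIfExists` are hypothetical helper names, and the sketch assumes the output lives on the local file system rather than on HDFS.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

/** Hypothetical helper: removes a local output directory before a re-run. */
class OutputDirCleaner {

    /** Recursively deletes dir if it exists; returns true if it was deleted. */
    static boolean deleteIfExists(Path dir) throws IOException {
        if (!Files.exists(dir)) {
            return false;
        }
        List<Path> paths;
        try (Stream<Path> walk = Files.walk(dir)) {
            // Reverse order puts children before their parent directories
            paths = walk.sorted(Comparator.reverseOrder()).collect(Collectors.toList());
        }
        for (Path p : paths) {
            Files.delete(p);
        }
        return true;
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 1) {
            System.out.println("usage: OutputDirCleaner <output-dir>");
            return;
        }
        System.out.println("deleted: " + deleteIfExists(Paths.get(args[0])));
    }
}
```

The path is taken from the command line on purpose, so that nothing is deleted unless a directory is named explicitly.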

5) Run the driver class

e://in is a directory; create word.txt inside it and enter the text to be counted:

hello tony

hello hunter

hello dilireba

tony henshuai

aa zz

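e://out is the directory for the results; after a successful run the results are written there automatically.

The run may fail with the following error: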

Exception in thread "main" java.lang.UnsatisfiedLinkError: org.apache.hadoop.io.nativeio.NativeIO$Windows.access0(Ljava/lang/String;I)Z
    at org.apache.hadoop.io.nativeio.NativeIO$Windows.access0(Native Method)
    at org.apache.hadoop.io.nativeio.NativeIO$Windows.access(NativeIO.java:606)
    at org.apache.hadoop.fs.FileUtil.canRead(FileUtil.java:969)
    at org.apache.hadoop.util.DiskChecker.checkAccessByFileMethods(DiskChecker.java:160)
    at org.apache.hadoop.util.DiskChecker.checkDirInternal(DiskChecker.java:100)
    at org.apache.hadoop.util.DiskChecker.checkDir(DiskChecker.java:77)
    at org.apache.hadoop.fs.LocalDirAllocator$AllocatorPerContext.confChanged(LocalDirAllocator.java:314)
    at org.apache.hadoop.fs.LocalDirAllocator$AllocatorPerContext.getLocalPathForWrite(LocalDirAllocator.java:377)
    at org.apache.hadoop.fs.LocalDirAllocator.getLocalPathForWrite(LocalDirAllocator.java:151)
    at org.apache.hadoop.fs.LocalDirAllocator.getLocalPathForWrite(LocalDirAllocator.java:132)
    at org.apache.hadoop.fs.LocalDirAllocator.getLocalPathForWrite(LocalDirAllocator.java:116)
    at org.apache.hadoop.mapred.LocalDistributedCacheManager.setup(LocalDistributedCacheManager.java:125)
    at org.apache.hadoop.mapred.LocalJobRunner$Job.<init>(LocalJobRunner.java:171)
    at org.apache.hadoop.mapred.LocalJobRunner.submitJob(LocalJobRunner.java:758)
    at org.apache.hadoop.mapreduce.JobSubmitter.submitJobInternal(JobSubmitter.java:242)
    at org.apache.hadoop.mapreduce.Job$11.run(Job.java:1341)
    at org.apache.hadoop.mapreduce.Job$11.run(Job.java:1338)
    at java.security.AccessController.doPrivileged(Native Method)
    at javax.security.auth.Subject.doAs(Unknown Source)
    at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1844)
    at org.apache.hadoop.mapreduce.Job.submit(Job.java:1338)
    at org.apache.hadoop.mapreduce.Job.waitForCompletion(Job.java:1359)
    at com.tony.wordcout.WordCoutDriver.main(WordCoutDriver.java:41)

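This error means that a native component, hadoop.dll, is missing.

Once you have obtained it, place it in the bin directory of your Hadoop installation.

After a successful run, the log output looks like this: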
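Before re-running the job, it can help to confirm that the file really is where Hadoop will look for it. The sketch below is a plain-Java check under two assumptions that are not stated in the original: that the `HADOOP_HOME` environment variable points at the unpacked hadoop-2.8.5 directory, and that the file sits directly in its `bin` folder. `NativeLibCheck` and `hasHadoopDll` are hypothetical names.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

/** Hypothetical helper: checks whether hadoop.dll is present under <home>/bin. */
class NativeLibCheck {

    /** True if a regular file named hadoop.dll exists in hadoopHome/bin. */
    static boolean hasHadoopDll(Path hadoopHome) {
        return Files.isRegularFile(hadoopHome.resolve("bin").resolve("hadoop.dll"));
    }

    public static void main(String[] args) {
        // Assumption: HADOOP_HOME points at the Hadoop installation directory
        String home = System.getenv("HADOOP_HOME");
        if (home == null) {
            System.out.println("HADOOP_HOME is not set");
        } else {
            System.out.println("hadoop.dll present: " + hasHadoopDll(Paths.get(home)));
        }
    }
}
```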

2019-01-06 17:55:15,706 INFO [LocalJobRunner Map Task Executor #0] mapred.Task (Task.java:done(1139)) - Final Counters for attempt_local1688756921_0001_m_000000_0: Counters: 17

File System Counters

FILE: Number of bytes read=202

FILE: Number of bytes written=384701

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

Map-Reduce Framework

Map input records=5

Map output records=10

Map output bytes=99

Map output materialized bytes=125

Input split bytes=86

Combine input records=0

Spilled Records=10

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=0

Total committed heap usage (bytes)=192937984

File Input Format Counters

Bytes Read=62

2019-01-06 17:55:15,707 INFO [LocalJobRunner Map Task Executor #0] mapred.LocalJobRunner (LocalJobRunner.java:run(276)) - Finishing task: attempt_local1688756921_0001_m_000000_0

2019-01-06 17:55:15,708 INFO [Thread-4] mapred.LocalJobRunner (LocalJobRunner.java:runTasks(483)) - map task executor complete.

2019-01-06 17:55:15,713 INFO [Thread-4] mapred.LocalJobRunner (LocalJobRunner.java:runTasks(475)) - Waiting for reduce tasks

2019-01-06 17:55:15,714 INFO [pool-4-thread-1] mapred.LocalJobRunner (LocalJobRunner.java:run(329)) - Starting task: attempt_local1688756921_0001_r_000000_0

2019-01-06 17:55:15,735 INFO [pool-4-thread-1] output.FileOutputCommitter (FileOutputCommitter.java:<init>(123)) - File Output Committer Algorithm version is 1

2019-01-06 17:55:15,735 INFO [pool-4-thread-1] output.FileOutputCommitter (FileOutputCommitter.java:<init>(138)) - FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

2019-01-06 17:55:15,736 INFO [pool-4-thread-1] util.ProcfsBasedProcessTree (ProcfsBasedProcessTree.java:isAvailable(168)) - ProcfsBasedProcessTree currently is supported only on Linux.

2019-01-06 17:55:15,882 INFO [pool-4-thread-1] mapred.Task (Task.java:initialize(620)) - Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@79858512

2019-01-06 17:55:15,888 INFO [pool-4-thread-1] mapred.ReduceTask (ReduceTask.java:run(362)) - Using ShuffleConsumerPlugin: org.apache.hadoop.mapreduce.task.reduce.Shuffle@637843d4

2019-01-06 17:55:15,915 INFO [pool-4-thread-1] reduce.MergeManagerImpl (MergeManagerImpl.java:<init>(206)) - MergerManager: memoryLimit=2004562688, maxSingleShuffleLimit=501140672, mergeThreshold=1323011456, ioSortFactor=10, memToMemMergeOutputsThreshold=10

2019-01-06 17:55:15,920 INFO [EventFetcher for fetching Map Completion Events] reduce.EventFetcher (EventFetcher.java:run(61)) - attempt_local1688756921_0001_r_000000_0 Thread started: EventFetcher for fetching Map Completion Events

2019-01-06 17:55:16,046 INFO [main] mapreduce.Job (Job.java:monitorAndPrintJob(1411)) - Job job_local1688756921_0001 running in uber mode : false

2019-01-06 17:55:16,050 INFO [main] mapreduce.Job (Job.java:monitorAndPrintJob(1418)) - map 100% reduce 0%

2019-01-06 17:55:16,080 INFO [localfetcher#1] reduce.LocalFetcher (LocalFetcher.java:copyMapOutput(145)) - localfetcher#1 about to shuffle output of map attempt_local1688756921_0001_m_000000_0 decomp: 121 len: 125 to MEMORY

2019-01-06 17:55:16,095 INFO [localfetcher#1] reduce.InMemoryMapOutput (InMemoryMapOutput.java:doShuffle(93)) - Read 121 bytes from map-output for attempt_local1688756921_0001_m_000000_0

2019-01-06 17:55:16,098 INFO [localfetcher#1] reduce.MergeManagerImpl (MergeManagerImpl.java:closeInMemoryFile(321)) - closeInMemoryFile -> map-output of size: 121, inMemoryMapOutputs.size() -> 1, commitMemory -> 0, usedMemory ->121

2019-01-06 17:55:16,102 INFO [EventFetcher for fetching Map Completion Events] reduce.EventFetcher (EventFetcher.java:run(76)) - EventFetcher is interrupted.. Returning

2019-01-06 17:55:16,105 INFO [pool-4-thread-1] mapred.LocalJobRunner (LocalJobRunner.java:statusUpdate(618)) - 1 / 1 copied.

2019-01-06 17:55:16,106 INFO [pool-4-thread-1] reduce.MergeManagerImpl (MergeManagerImpl.java:finalMerge(693)) - finalMerge called with 1 in-memory map-outputs and 0 on-disk map-outputs

2019-01-06 17:55:16,172 INFO [pool-4-thread-1] mapred.Merger (Merger.java:merge(606)) - Merging 1 sorted segments

2019-01-06 17:55:16,173 INFO [pool-4-thread-1] mapred.Merger (Merger.java:merge(705)) - Down to the last merge-pass, with 1 segments left of total size: 116 bytes

2019-01-06 17:55:16,177 INFO [pool-4-thread-1] reduce.MergeManagerImpl (MergeManagerImpl.java:finalMerge(760)) - Merged 1 segments, 121 bytes to disk to satisfy reduce memory limit

2019-01-06 17:55:16,180 INFO [pool-4-thread-1] reduce.MergeManagerImpl (MergeManagerImpl.java:finalMerge(790)) - Merging 1 files, 125 bytes from disk

2019-01-06 17:55:16,181 INFO [pool-4-thread-1] reduce.MergeManagerImpl (MergeManagerImpl.java:finalMerge(805)) - Merging 0 segments, 0 bytes from memory into reduce

2019-01-06 17:55:16,182 INFO [pool-4-thread-1] mapred.Merger (Merger.java:merge(606)) - Merging 1 sorted segments

2019-01-06 17:55:16,185 INFO [pool-4-thread-1] mapred.Merger (Merger.java:merge(705)) - Down to the last merge-pass, with 1 segments left of total size: 116 bytes

2019-01-06 17:55:16,186 INFO [pool-4-thread-1] mapred.LocalJobRunner (LocalJobRunner.java:statusUpdate(618)) - 1 / 1 copied.

2019-01-06 17:55:16,197 INFO [pool-4-thread-1] Configuration.deprecation (Configuration.java:logDeprecation(1285)) - mapred.skip.on is deprecated. Instead, use mapreduce.job.skiprecords

2019-01-06 17:55:16,213 INFO [pool-4-thread-1] mapred.Task (Task.java:done(1105)) - Task:attempt_local1688756921_0001_r_000000_0 is done. And is in the process of committing

2019-01-06 17:55:16,224 INFO [pool-4-thread-1] mapred.LocalJobRunner (LocalJobRunner.java:statusUpdate(618)) - 1 / 1 copied.

2019-01-06 17:55:16,225 INFO [pool-4-thread-1] mapred.Task (Task.java:commit(1284)) - Task attempt_local1688756921_0001_r_000000_0 is allowed to commit now

2019-01-06 17:55:16,244 INFO [pool-4-thread-1] output.FileOutputCommitter (FileOutputCommitter.java:commitTask(582)) - Saved output of task 'attempt_local1688756921_0001_r_000000_0' to file:/e:/out/_temporary/0/task_local1688756921_0001_r_000000

2019-01-06 17:55:16,246 INFO [pool-4-thread-1] mapred.LocalJobRunner (LocalJobRunner.java:statusUpdate(618)) - reduce > reduce

2019-01-06 17:55:16,247 INFO [pool-4-thread-1] mapred.Task (Task.java:sendDone(1243)) - Task 'attempt_local1688756921_0001_r_000000_0' done.

2019-01-06 17:55:16,249 INFO [pool-4-thread-1] mapred.Task (Task.java:done(1139)) - Final Counters for attempt_local1688756921_0001_r_000000_0: Counters: 24

File System Counters

FILE: Number of bytes read=484

FILE: Number of bytes written=384894

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

Map-Reduce Framework

Combine input records=0

Combine output records=0

Reduce input groups=7

Reduce shuffle bytes=125

Reduce input records=10

Reduce output records=7

Spilled Records=10

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=44

Total committed heap usage (bytes)=192937984

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Output Format Counters

Bytes Written=68

2019-01-06 17:55:16,249 INFO [pool-4-thread-1] mapred.LocalJobRunner (LocalJobRunner.java:run(352)) - Finishing task: attempt_local1688756921_0001_r_000000_0

2019-01-06 17:55:16,250 INFO [Thread-4] mapred.LocalJobRunner (LocalJobRunner.java:runTasks(483)) - reduce task executor complete.

2019-01-06 17:55:17,052 INFO [main] mapreduce.Job (Job.java:monitorAndPrintJob(1418)) - map 100% reduce 100%

2019-01-06 17:55:17,054 INFO [main] mapreduce.Job (Job.java:monitorAndPrintJob(1429)) - Job job_local1688756921_0001 completed successfully

2019-01-06 17:55:17,080 INFO [main] mapreduce.Job (Job.java:monitorAndPrintJob(1436)) - Counters: 30

File System Counters

FILE: Number of bytes read=686

FILE: Number of bytes written=769595

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

Map-Reduce Framework

Map input records=5

Map output records=10

Map output bytes=99

Map output materialized bytes=125

Input split bytes=86

Combine input records=0

Combine output records=0

Reduce input groups=7

Reduce shuffle bytes=125

Reduce input records=10

Reduce output records=7

Spilled Records=20

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=44

Total committed heap usage (bytes)=385875968

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=62

File Output Format Counters

Bytes Written=68

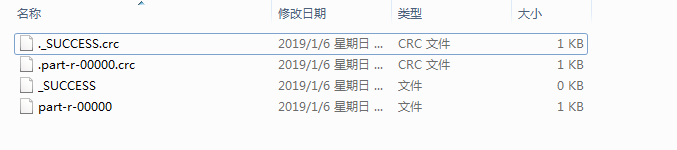

Open the e://out directory to see the generated files.

The output is in the part-r-00000 file.

The word counts are as follows:

aa 1

dilireba 1

hello 3

henshuai 1

hunter 1

tony 2

zz 1
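If the counts need to be consumed by another program, the result file can be read back line by line. The sketch below assumes the default text output, in which each line holds a key and a value separated by a tab character; `ResultParser` and `parse` are hypothetical names, and the file path is passed in as an argument rather than hard-coded.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical helper: parses "word<TAB>count" lines from a result file. */
class ResultParser {

    /** Parses tab-separated lines into a map that preserves file order. */
    static Map<String, Integer> parse(List<String> lines) {
        Map<String, Integer> counts = new LinkedHashMap<>();
        for (String line : lines) {
            if (line.trim().isEmpty()) {
                continue; // ignore blank lines
            }
            String[] parts = line.split("\t");
            counts.put(parts[0], Integer.parseInt(parts[1].trim()));
        }
        return counts;
    }

    public static void main(String[] args) throws IOException {
        // With no argument, fall back to two sample lines for demonstration
        List<String> lines = args.length > 0
                ? Files.readAllLines(Paths.get(args[0]))
                : Arrays.asList("hello\t3", "tony\t2");
        parse(lines).forEach((word, n) -> System.out.println(word + " = " + n));
    }
}
```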

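This article explains how to create and configure a MapReduce project in Eclipse: importing the required Hadoop libraries, implementing the Mapper and Reducer classes of the WordCount program, and running the MapReduce job.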
