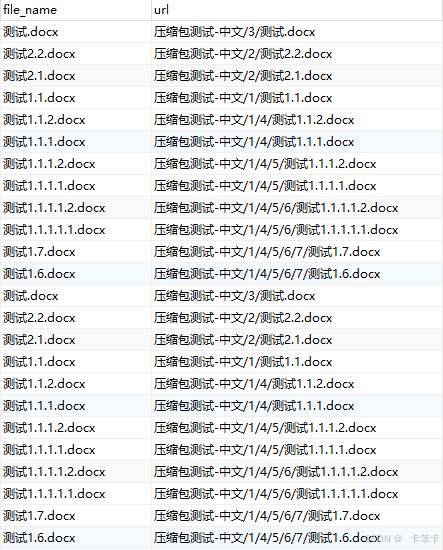

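The approach: record the path of every file inside the archive, upload the files to the server one at a time, and when downloading, reassemble them into a zip archive according to those recorded paths.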
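Below is a JDK-only sketch of this split-and-reassemble idea. It assumes the archive fits in memory and requires Java 9+ for `readAllBytes`. `split` records each file's path together with its bytes (in the post, each file goes to MongoDB GridFS rather than into a map), and `assemble` rebuilds a zip whose entry names are those paths. `ZipRoundTrip`, `split` and `assemble` are hypothetical names, not part of the project.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.zip.ZipEntry;
import java.util.zip.ZipInputStream;
import java.util.zip.ZipOutputStream;

public class ZipRoundTrip {

    // "Upload" side: record every file's path inside the archive together with its content
    static Map<String, byte[]> split(byte[] zipBytes) throws IOException {
        Map<String, byte[]> files = new LinkedHashMap<>();
        try (ZipInputStream zin = new ZipInputStream(new ByteArrayInputStream(zipBytes))) {
            ZipEntry entry;
            while ((entry = zin.getNextEntry()) != null) {
                if (!entry.isDirectory()) {            // folders are implied by the file paths
                    files.put(entry.getName(), zin.readAllBytes());
                }
            }
        }
        return files;
    }

    // "Download" side: rebuild the archive, using each recorded path as the entry name
    static byte[] assemble(Map<String, byte[]> files) throws IOException {
        ByteArrayOutputStream bos = new ByteArrayOutputStream();
        try (ZipOutputStream zout = new ZipOutputStream(bos)) { // UTF-8 entry names by default
            for (Map.Entry<String, byte[]> f : files.entrySet()) {
                zout.putNextEntry(new ZipEntry(f.getKey()));
                zout.write(f.getValue());
                zout.closeEntry();
            }
        }
        return bos.toByteArray();
    }

    public static void main(String[] args) throws IOException {
        Map<String, byte[]> original = new LinkedHashMap<>();
        original.put("1.pdf", "first".getBytes());
        original.put("1/2.pdf", "second".getBytes());
        Map<String, byte[]> restored = split(assemble(original));
        for (String path : restored.keySet()) {
            System.out.println(path + " -> " + new String(restored.get(path)));
        }
    }
}
```

No explicit directory entries are written. Most extractors create the `1/` folder from the entry name `1/2.pdf`. Empty folders are lost, however, because only files are recorded.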

Upload code

controller

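```java
/**
 * Upload the files of an archive, one multipart part per file
 *
 * @param url JSON array mapping each file name to its path inside the archive
 * @return the saved file record
 * @throws Exception
 */
@PostMapping("/translations/fileList")
public WebResult transByFileList(OutsideTransByFileVo vo, @RequestParam("url") String url, MultipartFile[] file) throws Exception {
    return outsideTransService.transByFileList(vo, url, file);
}
```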

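Example `url` parameter, where `name` is the original file name of each uploaded file and `url` is that file's path inside the archive: `[{"name":"1.pdf","url":"1.pdf"},{"name":"2.pdf","url":"1/2.pdf"}]`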
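The server uses the client-supplied `url` values directly as zip entry names. A value such as `../../x.sh` or `C:\x` would therefore produce an entry that can escape the target folder when the user extracts the archive ("zip slip"). The defensive sketch below assumes entry paths must always be relative and `/`-separated: it normalizes backslashes and rejects absolute paths and `..` segments before the value is stored. `EntryPaths.sanitize` is a hypothetical helper. Separately, `urlMap` is keyed by file name, so two files with the same name in different folders (for example `a/1.pdf` and `b/1.pdf`) overwrite each other. File names must therefore be unique within one upload.

```java
import java.util.ArrayList;
import java.util.List;

public class EntryPaths {

    /**
     * Turns a client-supplied relative path into a safe zip entry name.
     * Backslashes become '/', empty and "." segments are dropped,
     * and absolute paths or ".." segments are rejected.
     */
    static String sanitize(String url) {
        if (url == null || url.isEmpty()) {
            throw new IllegalArgumentException("empty path");
        }
        String p = url.replace('\\', '/');
        if (p.startsWith("/") || p.matches("^[A-Za-z]:.*")) {
            throw new IllegalArgumentException("absolute path: " + url);
        }
        List<String> parts = new ArrayList<>();
        for (String seg : p.split("/")) {
            if (seg.isEmpty() || seg.equals(".")) {
                continue;                       // collapse "a//b" and "./a"
            }
            if (seg.equals("..")) {
                throw new IllegalArgumentException("parent segment: " + url);
            }
            parts.add(seg);
        }
        if (parts.isEmpty()) {
            throw new IllegalArgumentException("no file name: " + url);
        }
        return String.join("/", parts);
    }

    public static void main(String[] args) {
        System.out.println(sanitize("1/2.pdf"));
        System.out.println(sanitize("1\\.\\2.pdf"));
    }
}
```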

service

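```java
@Transactional(rollbackFor = Exception.class)
public WebResult transByFileList(OutsideTransByFileVo vo, String url, MultipartFile[] files) throws Exception {
    if (null == files || files.length == 0) {
        return WebResult.failure("File content must not be empty!");
    }
    // Parse url: original file name -> path inside the archive
    JSONArray jsonArray = JSONObject.parseArray(url);
    Map<String, String> urlMap = new HashMap<>();
    for (int i = 0; i < jsonArray.size(); i++) {
        JSONObject item = jsonArray.getJSONObject(i);
        urlMap.put(item.getString("name"), item.getString("url"));
    }
    // Parent record for the whole archive. The original snippet used fileEntity without declaring it;
    // saving it first to obtain its id is an assumption (fields such as the name, presumably from vo, are not shown)
    FileEntity fileEntity = fileDao.save(new FileEntity());
    long size = 0;
    Integer wordCount = 0; // word counting is not shown in the original
    for (MultipartFile file : files) {
        size += file.getSize();
        // Store the file content in MongoDB GridFS
        FileInfo fileInfo = saveMongodb(file);
        // Save the file's position in the archive structure
        FileZipEntity zipEntity = new FileZipEntity();
        zipEntity.setFileId(fileEntity.getId());  // queried by findAllByFileId on download (assumed setter)
        zipEntity.setOrgFileId(fileInfo.getId()); // GridFS id read back via getOrgFileId (assumed getter on FileInfo)
        zipEntity.setUrl(urlMap.get(file.getOriginalFilename()));
        zipEntity.setFileName(file.getOriginalFilename());
        fileZipDao.save(zipEntity);
    }
    fileEntity.setFileSize(String.valueOf(size / 1024) + "KB");
    fileEntity.setWordCount(wordCount);
    FileEntity save = fileDao.save(fileEntity);
    return WebResult.success(save);
}
```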

Download endpoint

controller

```java
/**
 * Download an uploaded archive as a zip file
 */
@GetMapping(value = "/downloadFileZip")
public void downloadFileZip(@RequestParam(name = "id") String id, @RequestParam(name = "type") Integer type,
                            @RequestParam(name = "fileExt", defaultValue = "", required = false) String fileExt,
                            @RequestParam(name = "fileName", defaultValue = "", required = false) String fileName,
                            HttpServletRequest request, HttpServletResponse response) throws Exception {
    fileService.downloadFileZip(id, type, fileExt, fileName, request, response);
}
```

service

```java
public void downloadFileZip(String id, Integer type, String fileExt, String fileName,
                            HttpServletRequest request, HttpServletResponse response) throws IOException {
    // Parent record, needed for the zip name. The original used fileEntity without declaring it;
    // loading it through fileDao.findById is an assumption.
    FileEntity fileEntity = fileDao.findById(id).orElseThrow(() -> new FileNotFoundException(id));
    // File structure: GridFS file id -> path inside the archive
    List<FileZipEntity> zipEntityList = fileZipDao.findAllByFileId(id);
    Map<String, String> idsAndUrl = zipEntityList.stream()
            .collect(Collectors.toMap(FileZipEntity::getOrgFileId, FileZipEntity::getUrl));
    // Browser response headers (URLEncoder turns spaces into '+', so restore them as %20)
    response.setContentType("application/octet-stream");
    response.setHeader("Content-Disposition", "attachment;filename="
            + URLEncoder.encode(fileEntity.getFileName(), "UTF-8").replace("+", "%20"));
    try (ZipOutputStream zipOutputStream = new ZipOutputStream(new BufferedOutputStream(response.getOutputStream()))) {
        zipOutputStream.setMethod(ZipOutputStream.DEFLATED); // compression method
        zipOutputStream.setEncoding("UTF-8");                 // entry names (including Chinese paths) as UTF-8,
                                                              // so no browser-specific name encoding is needed here
        idsAndUrl.forEach((k, v) -> {
            try {
                GridFSFile gfsfile = mongoDbFileService.getFSFile(k);
                GridFsResource gridFsResource = mongoDbFileService.convertGridFSFile2Resource(gfsfile);
                // v is the recorded path, so the file is restored into its original folder
                ZipUtil.compressFileInputStream(gridFsResource.getInputStream(), zipOutputStream, v);
            } catch (Exception e) {
                e.printStackTrace();
            }
        });
    } // close() writes the zip central directory and flushes the response stream
}
```
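`URLEncoder.encode` implements HTML form encoding, so on its own it turns spaces into `+`, which browsers keep literally in the saved file name. The sketch below produces the RFC 6266 form, with an RFC 5987 `filename*=UTF-8''...` parameter plus a plain ASCII fallback. It assumes Java 10+ for the `Charset` overload of `URLEncoder.encode`. `DispositionHeader.attachment` is a hypothetical helper. Spring 5's `org.springframework.http.ContentDisposition` builder can produce a similar header.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

public class DispositionHeader {

    /** Builds an attachment header that carries a UTF-8 file name (RFC 6266 / RFC 5987). */
    static String attachment(String fileName) {
        // URLEncoder is form encoding: fix "+" (from spaces) and "*", which RFC 5987 does not allow unescaped
        String encoded = URLEncoder.encode(fileName, StandardCharsets.UTF_8)
                .replace("+", "%20")
                .replace("*", "%2A");
        // Plain ASCII fallback for clients that ignore filename*: non-ASCII, quotes and backslashes become '_'
        String fallback = fileName.replaceAll("[^\\x20-\\x7E]", "_")
                .replace("\"", "_")
                .replace("\\", "_");
        return "attachment; filename=\"" + fallback + "\"; filename*=UTF-8''" + encoded;
    }

    public static void main(String[] args) {
        System.out.println(attachment("a b*.zip"));
    }
}
```

In the service this would be used as `response.setHeader("Content-Disposition", DispositionHeader.attachment(fileEntity.getFileName()));`.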

ZipUtil

```java
package com.sunther.trans.util;

import org.apache.tools.zip.ZipEntry;
import org.apache.tools.zip.ZipOutputStream;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

import java.io.*;
import java.util.ArrayList;
import java.util.List;

/**
 * @author csb
 * @description: zip helpers; each entry is written under the path supplied by the caller
 * @date 2023/12/14 10:24
 */
public class ZipUtil {

    private static final Logger logger = LoggerFactory.getLogger(ZipUtil.class);

    // Directory separator inside a zip (zip entry names always use '/')
    private static final String PATCH = "/";

    // Base directory for the demo in main(); zip entry names should not start with '/'
    private static final String BASE_DIR = "b/";

    // Buffer size
    private static final int BUFFER = 2048;

    // Charset for entry names
    private static final String CHAR_SET = "UTF-8";

    /**
     * Compress a file or a directory into the zip stream.
     *
     * @param srcFile         file or directory to add
     * @param zipOutputStream target zip stream
     * @param basePath        path prefix of the entry inside the zip, e.g. "" or "docs/"
     */
    public static void compress(File srcFile, ZipOutputStream zipOutputStream, String basePath) throws IOException {
        if (srcFile.isDirectory()) {
            compressDir(srcFile, zipOutputStream, basePath);
        } else {
            compressFile(srcFile, zipOutputStream, basePath);
        }
    }

    private static void compressDir(File dir, ZipOutputStream zipOutputStream, String basePath) throws IOException {
        String dirPath = basePath + dir.getName() + PATCH;
        File[] files = dir.listFiles();
        if (files == null || files.length == 0) {
            // Empty directory: write an entry ending in '/' so the folder is kept
            zipOutputStream.putNextEntry(new ZipEntry(dirPath));
            zipOutputStream.closeEntry();
            return;
        }
        for (File child : files) {
            compress(child, zipOutputStream, dirPath);
        }
    }

    private static void compressFile(File file, ZipOutputStream zipOutputStream, String dir) throws IOException {
        compressFileInputStream(new FileInputStream(file), zipOutputStream, dir + file.getName());
    }

    /**
     * Write one entry named entryName (e.g. "1/2.pdf") with the content of inputStream,
     * then close inputStream. Entry name encoding is set on the stream via setEncoding.
     */
    public static void compressFileInputStream(InputStream inputStream, ZipOutputStream zipOutputStream, String entryName) throws IOException {
        try (BufferedInputStream bis = new BufferedInputStream(inputStream)) {
            zipOutputStream.putNextEntry(new ZipEntry(entryName));
            byte[] data = new byte[BUFFER];
            int count;
            while ((count = bis.read(data, 0, BUFFER)) != -1) {
                zipOutputStream.write(data, 0, count);
            }
            zipOutputStream.closeEntry();
        }
    }

    public static void main(String[] args) {
        List<File> files = new ArrayList<>();
        files.add(new File("D:\\test\\aa\\b\\1.doc"));
        files.add(new File("D:\\test\\aa\\11.docx"));
        // try-with-resources flushes and closes the zip even if compression fails
        try (ZipOutputStream zipOutputStream = new ZipOutputStream(new FileOutputStream("D:/test/aa/a.zip"))) {
            zipOutputStream.setEncoding(CHAR_SET);
            for (File file : files) {
                compress(file, zipOutputStream, BASE_DIR);
            }
        } catch (IOException e) {
            logger.error("Failed to create zip", e);
        }
    }
}
```
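Since Java 7, the JDK's own `java.util.zip.ZipOutputStream` writes entry names as UTF-8 by default. `compressFileInputStream` can therefore also be written without the Ant `org.apache.tools.zip` classes. The sketch below relies on that behavior and requires Java 9+ for `transferTo`; `JdkZipUtil` is a hypothetical name. It also demonstrates a JDK behavior that matters here: writing the same entry name a second time throws `ZipException`, so two stored files recorded with the same `url` cannot both end up in the archive.

```java
import java.io.BufferedInputStream;
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;
import java.util.zip.ZipEntry;
import java.util.zip.ZipException;
import java.util.zip.ZipInputStream;
import java.util.zip.ZipOutputStream;

public class JdkZipUtil {

    /** Copies inputStream into a new entry named entryName, then closes inputStream. */
    static void compressFileInputStream(InputStream inputStream, ZipOutputStream zos, String entryName)
            throws IOException {
        try (InputStream in = new BufferedInputStream(inputStream)) {
            zos.putNextEntry(new ZipEntry(entryName)); // ZipException if entryName was already written
            in.transferTo(zos);                        // InputStream.transferTo needs Java 9+
            zos.closeEntry();
        }
    }

    public static void main(String[] args) throws IOException {
        String path = "\u8d44\u6599/1.pdf"; // non-ASCII folder name, stored as UTF-8
        ByteArrayOutputStream bos = new ByteArrayOutputStream();
        try (ZipOutputStream zos = new ZipOutputStream(bos, StandardCharsets.UTF_8)) {
            compressFileInputStream(new ByteArrayInputStream(new byte[] {1, 2}), zos, path);
            try {
                compressFileInputStream(new ByteArrayInputStream(new byte[] {3}), zos, path);
            } catch (ZipException e) {
                System.out.println("second copy rejected: " + e.getMessage());
            }
        }
        try (ZipInputStream zis = new ZipInputStream(new ByteArrayInputStream(bos.toByteArray()),
                StandardCharsets.UTF_8)) {
            ZipEntry entry;
            while ((entry = zis.getNextEntry()) != null) {
                System.out.println(entry.getName().equals(path) ? "name round-trips" : "name changed");
            }
        }
    }
}
```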

mongodbUtil

```java
package com.sunther.trans.service;

import com.mongodb.client.gridfs.GridFSBucket;
import com.mongodb.client.gridfs.GridFSBuckets;
import com.mongodb.client.gridfs.GridFSDownloadStream;
import com.mongodb.client.gridfs.model.GridFSFile;
import org.bson.types.ObjectId;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.data.mongodb.MongoDbFactory;
import org.springframework.data.mongodb.core.query.Criteria;
import org.springframework.data.mongodb.core.query.Query;
import org.springframework.data.mongodb.gridfs.GridFsResource;
import org.springframework.data.mongodb.gridfs.GridFsTemplate;
import org.springframework.stereotype.Service;

import javax.annotation.Resource;
import java.io.IOException;
import java.io.InputStream;
import java.util.HashMap;

/**
 * File storage operations on MongoDB GridFS
 */
@Service
public class MongoDbFileService {

    @Autowired
    private GridFsTemplate gridFsTemplate;

    @Resource
    private MongoDbFactory mongoDbFactory;

    // Bucket used to open download streams. Declaring it as a @Bean in this class and injecting it
    // back into the same class is a self-reference that can fail when circular references are
    // disallowed; creating it on demand avoids that. If other classes inject GridFSBucket,
    // declare the bean in a @Configuration class instead.
    private GridFSBucket gridFSBucket() {
        return GridFSBuckets.create(mongoDbFactory.getDb());
    }

    /** Wrap a GridFS file in a Spring resource so its content can be streamed */
    public GridFsResource convertGridFSFile2Resource(GridFSFile gridFsFile) {
        GridFSDownloadStream gridFSDownloadStream = gridFSBucket().openDownloadStream(gridFsFile.getObjectId());
        return new GridFsResource(gridFsFile, gridFSDownloadStream);
    }

    /** Find a single file by its _id */
    public GridFSFile getFSFile(String fileid) {
        Query query = Query.query(Criteria.where("_id").is(fileid));
        return gridFsTemplate.findOne(query);
    }

    /** Find a single file by an arbitrary query */
    public GridFSFile getFSFile(Query query) {
        return gridFsTemplate.findOne(query);
    }

    public InputStream getFileInputStream(String fileid) throws IOException {
        return convertGridFSFile2Resource(getFSFile(fileid)).getInputStream();
    }

    public void delFile(String fileid) {
        gridFsTemplate.delete(Query.query(Criteria.where("_id").is(fileid)));
    }

    public ObjectId saveFile(InputStream inputStream, String fileName, HashMap metaData) {
        return gridFsTemplate.store(inputStream, fileName, metaData);
    }
}
```
