What is TBase (from https://www.bookstack.cn/read/TBase/196700)

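TBase is a relational database cluster platform that provides write reliability and multi-master data synchronization. TBase can be deployed on one or more hosts, and its data is stored across multiple physical machines. A table is stored in one of two ways, distributed or replicated. When a SQL query is sent to TBase, TBase automatically dispatches the query to the data nodes and collects the final result.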

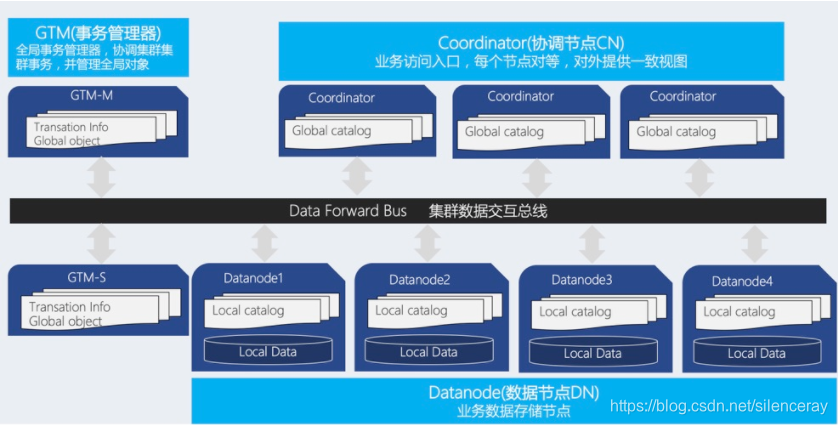

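TBase uses a distributed cluster architecture (see the figure above). The architecture is shared-nothing: nodes are independent and each processes its own data. Results may be aggregated upward or passed between nodes, and the processing units communicate over network protocols. This gives good parallelism and scalability, and it means a TBase cluster can be deployed on ordinary x86 servers.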

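The three main TBase components

- Coordinator: coordinator node (CN for short)

The entry point for application access, responsible for data distribution and query planning. Multiple CNs are peers, and each provides the same view of the database. Functionally, a CN stores only the system's global metadata, not the actual business data.

- Datanode: data node (DN for short)

Each DN stores a shard of the business data. Functionally, a DN executes the requests dispatched to it by the coordinators.

- GTM: Global Transaction Manager

Manages the cluster's transaction information as well as cluster-wide global objects such as sequences.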

Setting up a TBase cluster

OS: CentOS 7.4

Memory: 6 GB (at least 4 GB)

Reference: https://github.com/Tencent/TBase/wiki/1%E3%80%81TBase_Quick_Start

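Preliminary configuration

Firewall and SELinux

Switch SELinux to permissive mode immediately: setenforce 0

Keep SELinux disabled after a reboot:

vi /etc/sysconfig/selinux

Change the SELINUX=XXXXXX line to SELINUX=disabled

Stop the firewall: systemctl stop firewalld

Keep the firewall from starting at boot: systemctl disable firewalld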
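The SELinux edit can also be scripted. This is a minimal sketch assuming GNU sed; the function name `disable_selinux_config` is hypothetical. On CentOS 7, /etc/sysconfig/selinux is normally a symlink to /etc/selinux/config, and a plain `sed -i` would replace the symlink with a regular file and leave the real config untouched, so `--follow-symlinks` is used.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): persistently set SELINUX=disabled.
# Assumes GNU sed; --follow-symlinks edits the symlink target instead of
# replacing /etc/sysconfig/selinux with a regular file.
disable_selinux_config() {
    local cfg="${1:-/etc/sysconfig/selinux}"
    if [ ! -f "$cfg" ]; then
        echo "config file not found: $cfg" >&2
        return 1
    fi
    # Rewrite the SELINUX=<value> line; SELINUXTYPE=... does not match ^SELINUX=.
    sed -i --follow-symlinks 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
    # Succeed only if the file now contains SELINUX=disabled.
    grep -q '^SELINUX=disabled$' "$cfg"
}

# Usage (as root):
#   setenforce 0              # permissive until the next reboot
#   disable_selinux_config    # disabled from the next boot on
#   systemctl stop firewalld && systemctl disable firewalld
```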

1. Get the source code

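# Clone into /data/tbase/TBase-master, the path used as SOURCECODE_PATH in step 3

git clone https://github.com/Tencent/TBase /data/tbase/TBase-master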

2. Create directories and the user

# Required on every machine in the cluster

mkdir /data

mkdir -p /data/tbase/data/gtm

mkdir -p /data/tbase/data/coord

mkdir -p /data/tbase/data/dn001

mkdir -p /data/tbase/data/dn002

mkdir -p /data/tbase/TBase-master

mkdir -p /data/tbase/install
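The directory list above can be wrapped in one idempotent function, which is convenient when repeating it on each machine. A sketch only; `create_tbase_dirs` is a hypothetical name and the base path defaults to this article's /data/tbase. Because /data/tbase already exists when `useradd -d /data/tbase tbase` runs, useradd does not copy skeleton files or change ownership, so the usage note hands the tree to tbase explicitly.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): create the step-2 layout under a base directory.
# mkdir -p makes repeated runs harmless.
create_tbase_dirs() {
    local base="${1:-/data/tbase}"
    local d
    for d in data/gtm data/coord data/dn001 data/dn002 TBase-master install; do
        mkdir -p "$base/$d" || return 1
    done
}

# Usage on every machine (as root):
#   create_tbase_dirs /data/tbase
#   id tbase >/dev/null 2>&1 || useradd -d /data/tbase tbase
#   chown -R tbase:tbase /data/tbase   # useradd leaves an existing home owned by root
```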

useradd -d /data/tbase tbase

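# Set a password

passwd tbase

# /data/tbase was created by root before useradd ran, so hand it to tbase

chown -R tbase:tbase /data/tbase

3. Compile the source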

export SOURCECODE_PATH=/data/tbase/TBase-master

export INSTALL_PATH=/data/tbase/install

cd ${SOURCECODE_PATH}

rm -rf ${INSTALL_PATH}/tbase_bin_v2.0

chmod +x configure*

./configure --prefix=${INSTALL_PATH}/tbase_bin_v2.0 --enable-user-switch --with-openssl --with-ossp-uuid CFLAGS=-g

make clean

make -sj

make install

chmod +x contrib/pgxc_ctl/make_signature

cd contrib

make -sj

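make install

4. Cluster plan

The example below builds a cluster on two servers with 1 GTM master, 1 GTM slave, 2 CN masters (CN masters are peers, so no CN slaves are needed), 2 DN masters and 2 DN slaves. This is the smallest configuration that can survive the loss of a machine.

Machine 1: 192.168.199.240

Machine 2: 192.168.199.241

The cluster plan: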

| Node | IP | Data directory |
| --- | --- | --- |
| GTM master | 192.168.199.240 | /data/tbase/data/gtm |
| GTM slave | 192.168.199.241 | /data/tbase/data/gtm |
| CN1 | 192.168.199.240 | /data/tbase/data/coord |
| CN2 | 192.168.199.241 | /data/tbase/data/coord |
| DN1 master | 192.168.199.240 | /data/tbase/data/dn001 |
| DN1 slave | 192.168.199.241 | /data/tbase/data/dn001 |
| DN2 master | 192.168.199.241 | /data/tbase/data/dn002 |
| DN2 slave | 192.168.199.240 | /data/tbase/data/dn002 |
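The disaster-recovery property of this plan rests on every master having its slave on the other machine. As a sketch, the GTM and datanode placement from pgxc_ctl.conf in step 7 (gtmMasterServer/gtmSlaveServer, datanodeMasterServers/datanodeSlaveServers) can be checked mechanically; the arrays and the `check_ha_pairs` function are hypothetical, bash 4+ is assumed for associative arrays, and the CNs are left out because they have no slaves.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): every master must have a slave on a different host.
# Host values follow the GTM/datanode placement in pgxc_ctl.conf (step 7).
declare -A master_host=(
    [gtm]=192.168.199.240
    [dn001]=192.168.199.240
    [dn002]=192.168.199.241
)
declare -A slave_host=(
    [gtm]=192.168.199.241
    [dn001]=192.168.199.241
    [dn002]=192.168.199.240
)

check_ha_pairs() {
    local node rc=0
    for node in "${!master_host[@]}"; do
        if [ -z "${slave_host[$node]:-}" ]; then
            echo "no slave planned for $node" >&2
            rc=1
        elif [ "${master_host[$node]}" = "${slave_host[$node]}" ]; then
            echo "$node: master and slave share host ${master_host[$node]}" >&2
            rc=1
        fi
    done
    return "$rc"
}

# Usage:
#   check_ha_pairs && echo "each master/slave pair spans both machines"
```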

5. Passwordless SSH between the machines

# 240

su tbase

ssh-keygen -t rsa

ssh-copy-id -i ~/.ssh/id_rsa.pub tbase@192.168.199.241

# 241

su tbase

ssh-keygen -t rsa

ssh-copy-id -i ~/.ssh/id_rsa.pub tbase@192.168.199.240

6. Set environment variables

# Required on every machine in the cluster

[tbase@node1 ~]$ vim ~/.bashrc

export TBASE_HOME=/data/tbase/install/tbase_bin_v2.0

export PATH=$TBASE_HOME/bin:$PATH

export LD_LIBRARY_PATH=$TBASE_HOME/lib:${LD_LIBRARY_PATH}

7. Initialize the cluster
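Before writing pgxc_ctl.conf, it can help to confirm that the step-6 variables are actually active in the current shell, for example after `source ~/.bashrc` or a fresh login as tbase. A minimal sketch; `check_tbase_env` is a hypothetical name. It checks only the variables, since on machine 2 the binaries appear only after the deploy in step 8.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): verify TBASE_HOME, PATH and LD_LIBRARY_PATH.
check_tbase_env() {
    if [ -z "${TBASE_HOME:-}" ]; then
        echo "TBASE_HOME is not set" >&2
        return 1
    fi
    # Wrap in colons so only whole path components match.
    case ":$PATH:" in
        *":$TBASE_HOME/bin:"*) ;;
        *) echo "$TBASE_HOME/bin is not on PATH" >&2; return 1 ;;
    esac
    case ":${LD_LIBRARY_PATH:-}:" in
        *":$TBASE_HOME/lib:"*) ;;
        *) echo "$TBASE_HOME/lib is not on LD_LIBRARY_PATH" >&2; return 1 ;;
    esac
}

# Usage:
#   source ~/.bashrc
#   check_tbase_env && command -v pgxc_ctl   # pgxc_ctl resolves on the build machine
```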

[tbase@node1 etc]$ mkdir /data/tbase/pgxc_ctl

[tbase@node1 etc]$ cd /data/tbase/pgxc_ctl

[tbase@node1 pgxc_ctl]$ ll

total 0

[tbase@node1 pgxc_ctl]$ vim pgxc_ctl.conf

#!/bin/bash

pgxcInstallDir=/data/tbase/install/tbase_bin_v2.0

pgxcOwner=tbase

defaultDatabase=postgres

pgxcUser=$pgxcOwner

tmpDir=/tmp

localTmpDir=$tmpDir

configBackup=n

configBackupHost=pgxc-linker

configBackupDir=$HOME/pgxc

configBackupFile=pgxc_ctl.bak

#---- GTM ----------

gtmName=gtm

gtmMasterServer=192.168.199.240

gtmMasterPort=50001

gtmMasterDir=/data/tbase/data/gtm

gtmExtraConfig=none

gtmMasterSpecificExtraConfig=none

gtmSlave=y

gtmSlaveServer=192.168.199.241

gtmSlavePort=50001

gtmSlaveDir=/data/tbase/data/gtm

gtmSlaveSpecificExtraConfig=none

#---- Coordinators -------

coordMasterDir=/data/tbase/data/coord

coordArchLogDir=/data/tbase/data/coord_archlog

coordNames=(cn001 cn002 )

coordPorts=(30004 30004 )

poolerPorts=(31110 31110 )

coordPgHbaEntries=(0.0.0.0/0)

coordMasterServers=(192.168.199.240 192.168.199.241)

coordMasterDirs=($coordMasterDir $coordMasterDir)

coordMaxWALsernder=2

coordMaxWALSenders=($coordMaxWALsernder $coordMaxWALsernder )

coordSlave=n

coordSlaveSync=n

coordArchLogDirs=($coordArchLogDir $coordArchLogDir)

coordExtraConfig=coordExtraConfig

cat > $coordExtraConfig <<EOF

#================================================

# Added to all the coordinator postgresql.conf

# Original: $coordExtraConfig

include_if_exists = '/data/tbase/global/global_tbase.conf'

wal_level = replica

wal_keep_segments = 256

max_wal_senders = 4

archive_mode = on

archive_timeout = 1800

archive_command = 'echo 0'

log_truncate_on_rotation = on

log_filename = 'postgresql-%M.log'

log_rotation_age = 4h

log_rotation_size = 100MB

hot_standby = on

wal_sender_timeout = 30min

wal_receiver_timeout = 30min

shared_buffers = 1024MB

max_pool_size = 2000

log_statement = 'ddl'

log_destination = 'csvlog'

logging_collector = on

log_directory = 'pg_log'

listen_addresses = '*'

max_connections = 2000

EOF

coordSpecificExtraConfig=(none none)

coordExtraPgHba=coordExtraPgHba

cat > $coordExtraPgHba <<EOF

local all all trust

host all all 0.0.0.0/0 trust

host replication all 0.0.0.0/0 trust

host all all ::1/128 trust

host replication all ::1/128 trust

EOF

coordSpecificExtraPgHba=(none none)

coordAdditionalSlaves=n

cad1_Sync=n

#---- Datanodes ---------------------

dn1MstrDir=/data/tbase/data/dn001

dn2MstrDir=/data/tbase/data/dn002

dn1SlvDir=/data/tbase/data/dn001

dn2SlvDir=/data/tbase/data/dn002

dn1ALDir=/data/tbase/data/datanode_archlog

dn2ALDir=/data/tbase/data/datanode_archlog

primaryDatanode=dn001

datanodeNames=(dn001 dn002)

datanodePorts=(40004 40004)

datanodePoolerPorts=(41110 41110)

datanodePgHbaEntries=(0.0.0.0/0)

datanodeMasterServers=(192.168.199.240 192.168.199.241)

datanodeMasterDirs=($dn1MstrDir $dn2MstrDir)

dnWALSndr=4

datanodeMaxWALSenders=($dnWALSndr $dnWALSndr)

datanodeSlave=y

datanodeSlaveServers=(192.168.199.241 192.168.199.240)

datanodeSlavePorts=(50004 54004)

datanodeSlavePoolerPorts=(51110 51110)

datanodeSlaveSync=n

datanodeSlaveDirs=($dn1SlvDir $dn2SlvDir)

datanodeArchLogDirs=($dn1ALDir/dn001 $dn2ALDir/dn002)

datanodeExtraConfig=datanodeExtraConfig

cat > $datanodeExtraConfig <<EOF

#================================================

# Added to all the datanode postgresql.conf

# Original: $datanodeExtraConfig

include_if_exists = '/data/tbase/global/global_tbase.conf'

listen_addresses = '*'

wal_level = replica

wal_keep_segments = 256

max_wal_senders = 4

archive_mode = on

archive_timeout = 1800

archive_command = 'echo 0'

log_directory = 'pg_log'

logging_collector = on

log_truncate_on_rotation = on

log_filename = 'postgresql-%M.log'

log_rotation_age = 4h

log_rotation_size = 100MB

hot_standby = on

wal_sender_timeout = 30min

wal_receiver_timeout = 30min

shared_buffers = 1024MB

max_connections = 4000

max_pool_size = 4000

log_statement = 'ddl'

log_destination = 'csvlog'

wal_buffers = 1GB

EOF

datanodeSpecificExtraConfig=(none none)

datanodeExtraPgHba=datanodeExtraPgHba

cat > $datanodeExtraPgHba <<EOF

local all all trust

host all all 0.0.0.0/0 trust

host replication all 0.0.0.0/0 trust

host all all ::1/128 trust

host replication all ::1/128 trust

EOF

datanodeSpecificExtraPgHba=(none none)

datanodeAdditionalSlaves=n

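walArchive=n

8. Distribute the binary package

# Once the configuration file is ready on one node, the binaries must be deployed to every machine that hosts a node. This is done with the pgxc_ctl tool by running the deploy all command.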
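Because pgxc_ctl reads pgxc_ctl.conf every time it starts, including for deploy all, mismatched array lengths in the file (for example a node name without a matching port) are cheapest to catch before the first run. A sketch only: the helper names are hypothetical, and it compares array lengths rather than validating values. The file is sourced in a subshell inside a scratch directory, because its `cat > $coordExtraConfig <<EOF` heredocs write files into the current directory.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helpers): compare array lengths in pgxc_ctl.conf.

arr_len() { eval "echo \${#$1[@]}"; }   # length of the array named $1

# Every array named after the first must have as many entries as the first.
same_len() {
    local ref="$1" n name rc=0
    n=$(arr_len "$ref")
    shift
    for name in "$@"; do
        if [ "$(arr_len "$name")" -ne "$n" ]; then
            echo "$name has $(arr_len "$name") entries, $ref has $n" >&2
            rc=1
        fi
    done
    return "$rc"
}

check_pgxc_conf() {
    local conf scratch rc
    conf="$(readlink -f "$1")" || return 1
    [ -f "$conf" ] || { echo "no such file: $1" >&2; return 1; }
    scratch="$(mktemp -d)" || return 1
    (
        cd "$scratch" || exit 1
        source "$conf" >/dev/null || exit 1
        rc=0
        same_len coordNames coordPorts poolerPorts \
                 coordMasterServers coordMasterDirs || rc=1
        same_len datanodeNames datanodePorts datanodePoolerPorts \
                 datanodeMasterServers datanodeMasterDirs || rc=1
        if [ "${datanodeSlave:-n}" = y ]; then
            same_len datanodeNames datanodeSlaveServers datanodeSlavePorts \
                     datanodeSlavePoolerPorts datanodeSlaveDirs || rc=1
        fi
        exit "$rc"
    )
    rc=$?
    rm -rf "$scratch"
    return "$rc"
}

# Usage (as tbase on node1):
#   check_pgxc_conf /data/tbase/pgxc_ctl/pgxc_ctl.conf && echo "array lengths consistent"
```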

[tbase@node1 etc]$ pgxc_ctl

/bin/bash

Installing pgxc_ctl_bash script as /data/tbase/pgxc_ctl/pgxc_ctl_bash.

Installing pgxc_ctl_bash script as /data/tbase/pgxc_ctl/pgxc_ctl_bash.

Reading configuration using /data/tbase/pgxc_ctl/pgxc_ctl_bash --home /data/tbase/pgxc_ctl --configuration /data/tbase/pgxc_ctl/pgxc_ctl.conf

Finished reading configuration.

******** PGXC_CTL START ***************

Current directory: /data/tbase/pgxc_ctl

PGXC deploy all

Deploying Postgres-XL components to all the target servers.

Prepare tarball to deploy ...

Deploying to the server 192.168.199.240.

Deploying to the server 192.168.199.241.

Log in to every node and check that the binary package was distributed correctly:

[root@node2 install]# cd tbase_bin_v2.0/

[root@node2 tbase_bin_v2.0]# ll

total 12

drwxr-xr-x. 2 tbase tbase 4096 Nov 12 2020 bin

drwxr-xr-x. 4 tbase tbase 4096 Nov 12 2020 include

drwxr-xr-x. 4 tbase tbase 4096 Nov 12 2020 lib

drwxr-xr-x. 4 tbase tbase 35 Nov 12 2020 share

[root@node2 tbase_bin_v2.0]# pwd

/data/tbase/install/tbase_bin_v2.0
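The manual `ll` check can be scripted and run against both machines from node1. A sketch under stated assumptions: `check_install_dir` is a hypothetical name, the default path matches pgxcInstallDir in pgxc_ctl.conf, the four top-level directories are the ones in the listing above, and bin/postgres is used as a marker that the server binaries arrived. The loop relies on the passwordless SSH from step 5.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): verify a deployed TBase install tree.
check_install_dir() {
    local dir="${1:-/data/tbase/install/tbase_bin_v2.0}" sub rc=0
    for sub in bin include lib share; do
        if [ ! -d "$dir/$sub" ]; then
            echo "missing $dir/$sub" >&2
            rc=1
        fi
    done
    if [ ! -x "$dir/bin/postgres" ]; then
        echo "missing or non-executable $dir/bin/postgres" >&2
        rc=1
    fi
    return "$rc"
}

# Usage from node1 (ships the function definition to each host over SSH):
#   for h in 192.168.199.240 192.168.199.241; do
#       ssh tbase@"$h" "$(declare -f check_install_dir); check_install_dir" && echo "$h ok"
#   done
```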

Run the init all command to initialize the cluster:

[tbase@node1 pgxc_ctl]$ pgxc_ctl

/bin/bash

Installing pgxc_ctl_bash script as /data/tbase/pgxc_ctl/pgxc_ctl_bash.

Installing pgxc_ctl_bash script as /data/tbase/pgxc_ctl/pgxc_ctl_bash.

Reading configuration using /data/tbase/pgxc_ctl/pgxc_ctl_bash --home /data/tbase/pgxc_ctl --configuration /data/tbase/pgxc_ctl/pgxc_ctl.conf

Finished reading configuration.

******** PGXC_CTL START ***************

Current directory: /data/tbase/pgxc_ctl

PGXC init all

Initialize GTM master

The files belonging to this GTM system will be owned by user "tbase".

This user must also own the server process.

fixing permissions on existing directory /data/tbase/data/gtm ... ok

creating configuration files ... ok

creating xlog dir ... ok

Success.

1:1958545216:2020-11-12 17:33:45.003 CST -LOG: ControlData context:

LOCATION: GTM_PrintControlData, gtm_xlog.c:2055

2:1958545216:2020-11-12 17:33:45.003 CST -LOG: ControlData->gtm_control_version = 20180716

LOCATION: GTM_PrintControlData, gtm_xlog.c:2056

3:1958545216:2020-11-12 17:33:45.003 CST -LOG: ControlData->xlog_seg_size = 2097152

LOCATION: GTM_PrintControlData, gtm_xlog.c:2057

4:1958545216:2020-11-12 17:33:45.003 CST -LOG: ControlData->xlog_blcksz = 4096

LOCATION: GTM_PrintControlData, gtm_xlog.c:2058

5:1958545216:2020-11-12 17:33:45.003 CST -LOG: ControlData->state = 1

LOCATION: GTM_PrintControlData, gtm_xlog.c:2059

6:1958545216:2020-11-12 17:33:45.003 CST -LOG: ControlData->CurrBytePos = 4080

LOCATION: GTM_PrintControlData, gtm_xlog.c:2060

7:1958545216:2020-11-12 17:33:45.003 CST -LOG: ControlData->PrevBytePos = 4080

LOCATION: GTM_PrintControlData, gtm_xlog.c:2061

8:1958545216:2020-11-12 17:33:45.003 CST -LOG: ControlData->thisTimeLineID = 1

LOCATION: GTM_PrintControlData, gtm_xlog.c:2062

9:1958545216:2020-11-12 17:33:45.003 CST -LOG: ControlData->prevCheckPoint = 0/0

LOCATION: GTM_PrintControlData, gtm_xlog.c:2063

10:1958545216:2020-11-12 17:33:45.003 CST -LOG: ControlData->checkPoint = 0/1000

LOCATION: GTM_PrintControlData, gtm_xlog.c:2064

11:1958545216:2020-11-12 17:33:45.003 CST -LOG: ControlData->gts = 1000000000

LOCATION: GTM_PrintControlData, gtm_xlog.c:2065

12:1958545216:2020-11-12 17:33:45.003 CST -LOG: ControlData->time = 1605173624

LOCATION: GTM_PrintControlData, gtm_xlog.c:2066

waiting for server to shut down....TBase create 1 worker thread.

Start sever loop start thread count 9 running thread count 9.

TBase GTM is ready to go!!

done

server stopped

Done.

Start GTM master

server starting

1:15963968:2020-11-12 17:33:47.558 CST -LOG: ControlData context:

LOCATION: GTM_PrintControlData, gtm_xlog.c:2055

2:15963968:2020-11-12 17:33:47.558 CST -LOG: ControlData->gtm_control_version = 20180716

LOCATION: GTM_PrintControlData, gtm_xlog.c:2056

3:15963968:2020-11-12 17:33:47.558 CST -LOG: ControlData->xlog_seg_size = 2097152

LOCATION: GTM_PrintControlData, gtm_xlog.c:2057

4:15963968:2020-11-12 17:33:47.558 CST -LOG: ControlData->xlog_blcksz = 4096

LOCATION: GTM_PrintControlData, gtm_xlog.c:2058

5:15963968:2020-11-12 17:33:47.558 CST -LOG: ControlData->state = 1

LOCATION: GTM_PrintControlData, gtm_xlog.c:2059

6:15963968:2020-11-12 17:33:47.558 CST -LOG: ControlData->CurrBytePos = 4178000

LOCATION: GTM_PrintControlData, gtm_xlog.c:2060

7:15963968:2020-11-12 17:33:47.558 CST -LOG: ControlData->PrevBytePos = 4177920

LOCATION: GTM_PrintControlData, gtm_xlog.c:2061

8:15963968:2020-11-12 17:33:47.558 CST -LOG: ControlData->thisTimeLineID = 1

LOCATION: GTM_PrintControlData, gtm_xlog.c:2062

9:15963968:2020-11-12 17:33:47.558 CST -LOG: ControlData->prevCheckPoint = 0/200000

LOCATION: GTM_PrintControlData, gtm_xlog.c:2063

10:15963968:2020-11-12 17:33:47.558 CST -LOG: ControlData->checkPoint = 0/400000

LOCATION: GTM_PrintControlData, gtm_xlog.c:2064

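TBase is a relational database cluster platform that provides write reliability and multi-master data synchronization. It uses a shared-nothing distributed architecture and can be deployed on ordinary x86 servers. This article walks through building a TBase cluster, covering environment preparation, obtaining the source, compiling and installing, and cluster planning.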
