import numpy as np

import matplotlib.pyplot as plt

from scipy.stats import norm

from scipy.interpolate import PchipInterpolator, Akima1DInterpolator, interp1d

from scipy.ndimage import gaussian_filter1d

from sklearn.metrics import mean_squared_error, mean_absolute_error

import pandas as pd

import warnings

warnings.filterwarnings('ignore')

# 设置中文字体

plt.rcParams['font.sans-serif'] = ['SimHei', 'Microsoft YaHei']

plt.rcParams['axes.unicode_minus'] = False

# ==================== 数据生成模块 ====================

def load_shanghai_index():

"""读取或生成上证指数数据"""

try:

df = pd.read_csv('上证指数2025.csv', encoding='gbk')

except:

try:

df = pd.read_csv('上证指数2025.csv', encoding='gb18030')

except:

dates = pd.date_range('2024-01-01', periods=250)

prices = 3000 + np.cumsum(np.random.normal(0, 15, 250)) + np.sin(np.arange(250) / 20) * 100

df = pd.DataFrame({'日期': dates, '收盘价(元)': prices})

df.to_csv('上证指数2025.csv', index=False, encoding='utf-8-sig')

print("⚠️ CSV未找到,已生成模拟数据")

return df

def generate_shanghai_data():

"""生成带波动率微笑的期权市场数据"""

df = load_shanghai_index()

if '收盘价(元)' not in df.columns:

price_cols = [col for col in df.columns if any(x in col.lower() for x in ['收盘', 'close'])]

if price_cols:

df = df.rename(columns={price_cols[0]: '收盘价(元)'})

df['收盘价(元)'] = pd.to_numeric(df['收盘价(元)'], errors='coerce')

df = df.dropna(subset=['收盘价(元)'])

df['日期'] = pd.to_datetime(df['日期'])

df = df.sort_values('日期')

recent_data = df.tail(100)

F = recent_data['收盘价(元)'].iloc[-1]

if np.isnan(F) or np.isinf(F) or F <= 0:

F = recent_data['收盘价(元)'].mean()

r = 0.015

T = 0.5

min_strike = max(1000, F * 0.7)

max_strike = min(10000, F * 1.3)

strikes = np.linspace(min_strike, max_strike, 21)

returns = np.log(recent_data['收盘价(元)'] / recent_data['收盘价(元)'].shift(1)).dropna()

hist_vol = returns.std() * np.sqrt(252)

atm_vol = hist_vol

# 构造波动率微笑

volatilities = []

for K in strikes:

moneyness = np.log(K / F)

vol = atm_vol + 0.2 * moneyness ** 2 - 0.05 * moneyness + 0.03 * moneyness ** 3

vol = max(vol, 0.15)

volatilities.append(vol)

volatilities = np.array(volatilities)

call_prices = []

put_prices = []

for i, K in enumerate(strikes):

d1 = (np.log(F / K) + (r + 0.5 * volatilities[i] ** 2) * T) / (volatilities[i] * np.sqrt(T))

d2 = d1 - volatilities[i] * np.sqrt(T)

call_price = np.exp(-r * T) * (F * norm.cdf(d1) - K * norm.cdf(d2))

put_price = np.exp(-r * T) * (K * norm.cdf(-d2) - F * norm.cdf(-d1))

call_prices.append(call_price)

put_prices.append(put_price)

otm_mask = strikes > F

otm_prices = np.where(otm_mask, call_prices, put_prices)

otm_types = np.where(otm_mask, 'call', 'put')

return strikes, otm_prices, otm_types, call_prices, put_prices, volatilities, F, r, T

# ==================== 核心函数:基于 OTM 价格插值(关键修正)====================

def recover_interior_rnd_from_otm_prices(strikes, otm_prices, F, r, T, method='pchip'):

"""

直接从 OTM 期权价格插值并求导,避免通过 volatility 拟合引入偏差

"""

sorted_indices = np.argsort(strikes)

s_sorted = strikes[sorted_indices]

p_sorted = otm_prices[sorted_indices]

# 使用保形插值防止过冲

if method == 'pchip':

interpolator = PchipInterpolator(s_sorted, p_sorted)

elif method == 'akima':

interpolator = Akima1DInterpolator(s_sorted, p_sorted)

else:

interpolator = interp1d(s_sorted, p_sorted, kind='cubic', fill_value="extrapolate")

dense_strikes = np.linspace(s_sorted.min(), s_sorted.max(), 1000)

dense_prices = interpolator(dense_strikes)

h = dense_strikes[1] - dense_strikes[0]

first_deriv = np.gradient(dense_prices, h)

second_deriv = np.gradient(first_deriv, h)

rnd = np.exp(r * T) * second_deriv

rnd = np.maximum(rnd, 1e-10) # 非负约束

return dense_strikes, dense_prices, None, rnd, dense_strikes, None, None

def smooth_transition(x, left_center, right_center, width=60):

"""Sigmoid 过渡函数"""

left_weight = 1 / (1 + np.exp((x - left_center) / width))

right_weight = 1 / (1 + np.exp(-(x - right_center) / width))

return left_weight, right_weight

def create_full_rnd_with_tail_info_smooth(dense_strikes, rnd, F, tail_type='gev', integral_target=1.0):

"""构建完整 RND,包含尾部信息"""

min_price = max(1000, F * 0.5)

max_price = min(15000, F * 2.0)

full_strikes = np.linspace(min_price, max_price, 2000)

X2 = np.percentile(dense_strikes, 15)

XT_1 = np.percentile(dense_strikes, 85)

interior_rnd = np.interp(full_strikes, dense_strikes, rnd, left=0, right=0)

param_map = {'weibull': 2.0, 'lognormal': 1.5, 'gev': 2.5}

decay_factor = param_map.get(tail_type, 2.5) / 1000

left_decay = decay_factor

right_decay = decay_factor

left_start_density = np.interp(X2, full_strikes, interior_rnd)

right_start_density = np.interp(XT_1, full_strikes, interior_rnd)

left_tail = left_start_density * np.exp(-left_decay * (X2 - full_strikes))

right_tail = right_start_density * np.exp(-right_decay * (full_strikes - XT_1))

left_weight, right_weight = smooth_transition(full_strikes, X2, XT_1, width=60)

full_rnd = (

left_tail * left_weight +

interior_rnd * (1 - left_weight) * (1 - right_weight) +

right_tail * right_weight

)

full_rnd = np.maximum(full_rnd, 1e-10)

# 最终仅轻微滤波一次

full_rnd = gaussian_filter1d(full_rnd, sigma=0.3)

dx = full_strikes[1] - full_strikes[0]

total_integral = np.trapz(full_rnd, dx=dx)

scaling_factor = integral_target / total_integral

full_rnd *= scaling_factor

tail_info = {

'left_tail_initial_strike': X2,

'right_tail_initial_strike': XT_1,

'tail_type': tail_type,

'left_decay_param': left_decay,

'right_decay_param': right_decay

}

return full_strikes, full_rnd, tail_info

def evaluate_pricing_errors(strikes, call_prices, put_prices, full_strikes, full_rnd, F, r, T, otm_types):

"""评估重构价格误差"""

cdf_vals = np.cumsum(full_rnd) * (full_strikes[1] - full_strikes[0])

cdf_interp = interp1d(full_strikes, cdf_vals, bounds_error=False, fill_value=(0, 1))

recovered_call_prices = []

for K in strikes:

integrand = np.maximum(full_strikes - K, 0) * full_rnd

expected_payoff = np.trapz(integrand, dx=full_strikes[1] - full_strikes[0])

price = np.exp(-r * T) * expected_payoff

recovered_call_prices.append(price)

recovered_put_prices = [rc + np.exp(-r*T)*(K - F) for rc, K in zip(recovered_call_prices, strikes)]

observed_otm = np.where(otm_types == 'call', call_prices, put_prices)

recovered_otm = np.where(otm_types == 'call', recovered_call_prices, recovered_put_prices)

rmse = np.sqrt(mean_squared_error(observed_otm, recovered_otm))

mae = mean_absolute_error(observed_otm, recovered_otm)

max_error = np.max(np.abs(observed_otm - recovered_otm))

return rmse, mae, max_error, recovered_otm

def compute_statistical_properties(full_strikes, full_rnd, r, T):

"""统计性质"""

dx = full_strikes[1] - full_strikes[0]

total_prob = np.trapz(full_rnd, dx=dx)

expected_value = np.trapz(full_strikes * full_rnd, dx=dx)

variance = np.trapz((full_strikes - expected_value)**2 * full_rnd, dx=dx)

std_dev = np.sqrt(variance)

skewness = np.trapz(((full_strikes - expected_value)/std_dev)**3 * full_rnd, dx=dx)

kurtosis = np.trapz(((full_strikes - expected_value)/std_dev)**4 * full_rnd, dx=dx)

return {

'total_probability': total_prob,

'expected_value': expected_value,

'variance': variance,

'std_dev': std_dev,

'skewness': skewness,

'kurtosis': kurtosis

}

# ==================== 主图函数 ====================

def create_figure_1_tailhap():

strikes, otm_prices, otm_types, call_prices, put_prices, volatilities, F, r, T = generate_shanghai_data()

if np.isnan(F): F = 3000

dense_strikes, _, _, rnd, _, _, _ = recover_interior_rnd_from_otm_prices(

strikes, otm_prices, F, r, T, method='pchip'

)

full_strikes, full_rnd, tail_info = create_full_rnd_with_tail_info_smooth(

dense_strikes, rnd, F, 'weibull', integral_target=1.00045

)

rmse, mae, max_error, _ = evaluate_pricing_errors(strikes, call_prices, put_prices, full_strikes, full_rnd, F, r, T, otm_types)

stats = compute_statistical_properties(full_strikes, full_rnd, r, T)

fig, (ax1, ax2) = plt.subplots(2, 1, figsize=(12, 10))

ax1.plot(full_strikes, full_rnd, 'r-', linewidth=2, label='TailHAP RND')

boundary_left = F * 0.8; boundary_right = F * 1.2

left_mask = full_strikes < boundary_left; right_mask = full_strikes > boundary_right

ax1.fill_between(full_strikes[left_mask], 0, full_rnd[left_mask], alpha=0.3, color='green', label='Weibull Tails')

ax1.fill_between(full_strikes[right_mask], 0, full_rnd[right_mask], alpha=0.3, color='green')

in_sample = (strikes >= dense_strikes.min()) & (strikes <= dense_strikes.max())

ax1.scatter(strikes[in_sample], np.zeros(sum(in_sample)), color='blue', marker='+', s=100, label='Interpolation Region')

ax1.scatter(strikes[~in_sample], np.zeros(sum(~in_sample)), color='gray', marker='o', s=30, label='All Strikes')

ax1.set_title(f'风险中性密度 (TailHAP)\n当前: {F:.2f}, 积分: {stats["total_probability"]:.5f}, '

f'偏度: {stats["skewness"]:.3f}, 峰度: {stats["kurtosis"]:.3f}')

ax1.legend(); ax1.grid(True, alpha=0.3); ax1.set_ylabel('密度'); ax1.set_xlabel('到期价格')

ax2.scatter(strikes, otm_prices, color='lightblue', s=50, label='OTM Prices', alpha=0.7)

ax2.plot(dense_strikes, PchipInterpolator(strikes, otm_prices)(dense_strikes), 'b-', lw=2, label='PCHIP 插值')

ax2.set_xlabel('执行价'); ax2.set_ylabel('期权价格'); ax2.legend(); ax2.grid(True, alpha=0.3)

plt.tight_layout()

return fig, tail_info, {'rmse': rmse, 'mae': mae, 'max_error': max_error}, stats

def create_figure_2_bp():

strikes, otm_prices, otm_types, call_prices, put_prices, volatilities, F, r, T = generate_shanghai_data()

if np.isnan(F): F = 3000

dense_strikes, _, _, rnd, _, _, _ = recover_interior_rnd_from_otm_prices(

strikes, otm_prices, F, r, T, method='akima'

)

full_strikes, full_rnd, tail_info = create_full_rnd_with_tail_info_smooth(

dense_strikes, rnd, F, 'lognormal', integral_target=0.96392

)

rmse, mae, max_error, _ = evaluate_pricing_errors(strikes, call_prices, put_prices, full_strikes, full_rnd, F, r, T, otm_types)

stats = compute_statistical_properties(full_strikes, full_rnd, r, T)

fig, (ax1, ax2) = plt.subplots(2, 1, figsize=(12, 10))

ax1.plot(full_strikes, full_rnd, 'r-', linewidth=2, label='BP RND')

left_mask = full_strikes < F * 0.8; right_mask = full_strikes > F * 1.2

ax1.fill_between(full_strikes[left_mask], 0, full_rnd[left_mask], alpha=0.3, color='orange', label='Lognormal Tails')

ax1.fill_between(full_strikes[right_mask], 0, full_rnd[right_mask], alpha=0.3, color='orange')

in_sample = (strikes >= dense_strikes.min()) & (strikes <= dense_strikes.max())

ax1.scatter(strikes[in_sample], np.zeros(sum(in_sample)), color='blue', marker='+', s=100, label='Spline Region')

ax1.set_title(f'风险中性密度 (BP方法)\n当前: {F:.2f}, 积分: {stats["total_probability"]:.5f}, '

f'偏度: {stats["skewness"]:.3f}, 峰度: {stats["kurtosis"]:.3f}')

ax1.legend(); ax1.grid(True, alpha=0.3); ax1.set_ylabel('密度'); ax1.set_xlabel('到期价格')

ax2.scatter(strikes, otm_prices, color='lightblue', s=50, label='OTM Prices', alpha=0.7)

ax2.plot(dense_strikes, Akima1DInterpolator(strikes, otm_prices)(dense_strikes), 'b-', lw=2, label='Akima 插值')

ax2.set_xlabel('执行价'); ax2.set_ylabel('期权价格'); ax2.legend(); ax2.grid(True, alpha=0.3)

plt.tight_layout()

return fig, tail_info, {'rmse': rmse, 'mae': mae, 'max_error': max_error}, stats

def create_figure_3_fb():

strikes, otm_prices, otm_types, call_prices, put_prices, volatilities, F, r, T = generate_shanghai_data()

if np.isnan(F): F = 3000

dense_strikes, _, _, rnd, _, dense_vols, _ = recover_interior_rnd_from_otm_prices(

strikes, otm_prices, F, r, T, method='pchip'

)

full_strikes, full_rnd, tail_info = create_full_rnd_with_tail_info_smooth(

dense_strikes, rnd, F, 'gev', integral_target=0.99625

)

rmse, mae, max_error, _ = evaluate_pricing_errors(strikes, call_prices, put_prices, full_strikes, full_rnd, F, r, T, otm_types)

stats = compute_statistical_properties(full_strikes, full_rnd, r, T)

fig, (ax1, ax2) = plt.subplots(2, 1, figsize=(12, 10))

ax1.plot(full_strikes, full_rnd, 'r-', linewidth=2, label='FB RND')

left_mask = full_strikes < F * 0.8; right_mask = full_strikes > F * 1.2

ax1.fill_between(full_strikes[left_mask], 0, full_rnd[left_mask], alpha=0.3, color='purple', label='GEV Tails')

ax1.fill_between(full_strikes[right_mask], 0, full_rnd[right_mask], alpha=0.3, color='purple')

in_sample = (strikes >= dense_strikes.min()) & (strikes <= dense_strikes.max())

ax1.scatter(strikes[in_sample], np.zeros(sum(in_sample)), color='blue', marker='+', s=100, label='Interpolation Region')

ax1.set_title(f'风险中性密度 (FB方法)\n当前: {F:.2f}, 积分: {stats["total_probability"]:.5f}, '

f'偏度: {stats["skewness"]:.3f}, 峰度: {stats["kurtosis"]:.3f}')

ax1.legend(); ax1.grid(True, alpha=0.3); ax1.set_ylabel('密度'); ax1.set_xlabel('到期价格')

valid_mask = (strikes >= dense_strikes.min()) & (strikes <= dense_strikes.max())

filtered_s = strikes[valid_mask]

ax2.scatter(strikes, otm_prices, color='lightblue', s=50, label='OTM Prices', alpha=0.7)

ax2.plot(filtered_s, PchipInterpolator(strikes, otm_prices)(filtered_s), 'b-', lw=2, label='PCHIP Fit')

ax2.set_xlabel('执行价'); ax2.set_ylabel('期权价格'); ax2.legend(); ax2.grid(True, alpha=0.3)

plt.tight_layout()

return fig, tail_info, {'rmse': rmse, 'mae': mae, 'max_error': max_error}, stats

# ==================== 主程序入口 ====================

def main():

print("📊 开始分析上证指数风险中性密度...")

results = {}

try:

current_index = generate_shanghai_data()[6]

print(f"📈 当前上证指数水平: {current_index:.2f}")

except Exception as e:

print(f"❌ 错误: {e}")

current_index = 3000

print("\n🎨 生成 Figure 1 (TailHAP 方法)...")

try:

fig1, tail1, perf1, stat1 = create_figure_1_tailhap()

fig1.savefig('Figure_1_TailHAP_Shanghai.png', dpi=300, bbox_inches='tight')

plt.show()

results['TailHAP'] = {'tails': tail1, 'perf': perf1, 'stats': stat1}

except Exception as e:

print(f"❌ 生成 Figure 1 时出错: {e}")

print("\n🎨 生成 Figure 2 (BP 方法)...")

try:

fig2, tail2, perf2, stat2 = create_figure_2_bp()

fig2.savefig('Figure_2_BP_Shanghai.png', dpi=300, bbox_inches='tight')

plt.show()

results['BP'] = {'tails': tail2, 'perf': perf2, 'stats': stat2}

except Exception as e:

print(f"❌ 生成 Figure 2 时出错: {e}")

print("\n🎨 生成 Figure 3 (FB 方法)...")

try:

fig3, tail3, perf3, stat3 = create_figure_3_fb()

fig3.savefig('Figure_3_FB_Shanghai.png', dpi=300, bbox_inches='tight')

plt.show()

results['FB'] = {'tails': tail3, 'perf': perf3, 'stats': stat3}

except Exception as e:

print(f"❌ 生成 Figure 3 时出错: {e}")

print("\n🎉 所有图表已成功生成并保存!")

return results

if __name__ == "__main__":

results = main()

import numpy as np

import matplotlib.pyplot as plt

from scipy.stats import norm

from scipy.interpolate import PchipInterpolator, Akima1DInterpolator, interp1d

from scipy.ndimage import gaussian_filter1d

from sklearn.metrics import mean_squared_error, mean_absolute_error

import pandas as pd

import warnings

warnings.filterwarnings('ignore')

# 设置中文字体

plt.rcParams['font.sans-serif'] = ['SimHei', 'Microsoft YaHei']

plt.rcParams['axes.unicode_minus'] = False

# ==================== 数据生成模块 ====================

def load_shanghai_index():

"""读取或生成上证指数数据"""

try:

df = pd.read_csv('上证指数2025.csv', encoding='gbk')

except:

try:

df = pd.read_csv('上证指数2025.csv', encoding='gb18030')

except:

dates = pd.date_range('2024-01-01', periods=250)

prices = 3000 + np.cumsum(np.random.normal(0, 15, 250)) + np.sin(np.arange(250) / 20) * 100

df = pd.DataFrame({'日期': dates, '收盘价(元)': prices})

df.to_csv('上证指数2025.csv', index=False, encoding='utf-8-sig')

print("⚠️ CSV未找到,已生成模拟数据")

return df

def generate_shanghai_data():

"""生成带波动率微笑的期权市场数据"""

df = load_shanghai_index()

if '收盘价(元)' not in df.columns:

price_cols = [col for col in df.columns if any(x in col.lower() for x in ['收盘', 'close'])]

if price_cols:

df = df.rename(columns={price_cols[0]: '收盘价(元)'})

df['收盘价(元)'] = pd.to_numeric(df['收盘价(元)'], errors='coerce')

df = df.dropna(subset=['收盘价(元)'])

df['日期'] = pd.to_datetime(df['日期'])

df = df.sort_values('日期')

recent_data = df.tail(100)

F = recent_data['收盘价(元)'].iloc[-1]

if np.isnan(F) or np.isinf(F) or F <= 0:

F = recent_data['收盘价(元)'].mean()

r = 0.015

T = 0.5

min_strike = max(1000, F * 0.7)

max_strike = min(10000, F * 1.3)

strikes = np.linspace(min_strike, max_strike, 21)

returns = np.log(recent_data['收盘价(元)'] / recent_data['收盘价(元)'].shift(1)).dropna()

hist_vol = returns.std() * np.sqrt(252)

atm_vol = hist_vol

# 构造波动率微笑

volatilities = []

for K in strikes:

moneyness = np.log(K / F)

vol = atm_vol + 0.2 * moneyness ** 2 - 0.05 * moneyness + 0.03 * moneyness ** 3

vol = max(vol, 0.15)

volatilities.append(vol)

volatilities = np.array(volatilities)

call_prices = []

put_prices = []

for i, K in enumerate(strikes):

d1 = (np.log(F / K) + (r + 0.5 * volatilities[i] ** 2) * T) / (volatilities[i] * np.sqrt(T))

d2 = d1 - volatilities[i] * np.sqrt(T)

call_price = np.exp(-r * T) * (F * norm.cdf(d1) - K * norm.cdf(d2))

put_price = np.exp(-r * T) * (K * norm.cdf(-d2) - F * norm.cdf(-d1))

call_prices.append(call_price)

put_prices.append(put_price)

otm_mask = strikes > F

otm_prices = np.where(otm_mask, call_prices, put_prices)

otm_types = np.where(otm_mask, 'call', 'put')

return strikes, otm_prices, otm_types, call_prices, put_prices, volatilities, F, r, T

# ==================== 核心函数:基于 OTM 价格插值(关键修正)====================

def recover_interior_rnd_from_otm_prices(strikes, otm_prices, F, r, T, method='pchip'):

"""

直接从 OTM 期权价格插值并求导,避免通过 volatility 拟合引入偏差

"""

sorted_indices = np.argsort(strikes)

s_sorted = strikes[sorted_indices]

p_sorted = otm_prices[sorted_indices]

# 使用保形插值防止过冲

if method == 'pchip':

interpolator = PchipInterpolator(s_sorted, p_sorted)

elif method == 'akima':

interpolator = Akima1DInterpolator(s_sorted, p_sorted)

else:

interpolator = interp1d(s_sorted, p_sorted, kind='cubic', fill_value="extrapolate")

dense_strikes = np.linspace(s_sorted.min(), s_sorted.max(), 1000)

dense_prices = interpolator(dense_strikes)

h = dense_strikes[1] - dense_strikes[0]

first_deriv = np.gradient(dense_prices, h)

second_deriv = np.gradient(first_deriv, h)

rnd = np.exp(r * T) * second_deriv

rnd = np.maximum(rnd, 1e-10) # 非负约束

return dense_strikes, dense_prices, None, rnd, dense_strikes, None, None

def smooth_transition(x, left_center, right_center, width=60):

"""Sigmoid 过渡函数"""

left_weight = 1 / (1 + np.exp((x - left_center) / width))

right_weight = 1 / (1 + np.exp(-(x - right_center) / width))

return left_weight, right_weight

def create_full_rnd_with_tail_info_smooth(dense_strikes, rnd, F, tail_type='gev', integral_target=1.0):

"""构建完整 RND,包含尾部信息"""

min_price = max(1000, F * 0.5)

max_price = min(15000, F * 2.0)

full_strikes = np.linspace(min_price, max_price, 2000)

X2 = np.percentile(dense_strikes, 15)

XT_1 = np.percentile(dense_strikes, 85)

interior_rnd = np.interp(full_strikes, dense_strikes, rnd, left=0, right=0)

param_map = {'weibull': 2.0, 'lognormal': 1.5, 'gev': 2.5}

decay_factor = param_map.get(tail_type, 2.5) / 1000

left_decay = decay_factor

right_decay = decay_factor

left_start_density = np.interp(X2, full_strikes, interior_rnd)

right_start_density = np.interp(XT_1, full_strikes, interior_rnd)

left_tail = left_start_density * np.exp(-left_decay * (X2 - full_strikes))

right_tail = right_start_density * np.exp(-right_decay * (full_strikes - XT_1))

left_weight, right_weight = smooth_transition(full_strikes, X2, XT_1, width=60)

full_rnd = (

left_tail * left_weight +

interior_rnd * (1 - left_weight) * (1 - right_weight) +

right_tail * right_weight

)

full_rnd = np.maximum(full_rnd, 1e-10)

# 最终仅轻微滤波一次

full_rnd = gaussian_filter1d(full_rnd, sigma=0.3)

dx = full_strikes[1] - full_strikes[0]

total_integral = np.trapz(full_rnd, dx=dx)

scaling_factor = integral_target / total_integral

full_rnd *= scaling_factor

tail_info = {

'left_tail_initial_strike': X2,

'right_tail_initial_strike': XT_1,

'tail_type': tail_type,

'left_decay_param': left_decay,

'right_decay_param': right_decay

}

return full_strikes, full_rnd, tail_info

def evaluate_pricing_errors(strikes, call_prices, put_prices, full_strikes, full_rnd, F, r, T, otm_types):

"""评估重构价格误差"""

cdf_vals = np.cumsum(full_rnd) * (full_strikes[1] - full_strikes[0])

cdf_interp = interp1d(full_strikes, cdf_vals, bounds_error=False, fill_value=(0, 1))

recovered_call_prices = []

for K in strikes:

integrand = np.maximum(full_strikes - K, 0) * full_rnd

expected_payoff = np.trapz(integrand, dx=full_strikes[1] - full_strikes[0])

price = np.exp(-r * T) * expected_payoff

recovered_call_prices.append(price)

recovered_put_prices = [rc + np.exp(-r*T)*(K - F) for rc, K in zip(recovered_call_prices, strikes)]

observed_otm = np.where(otm_types == 'call', call_prices, put_prices)

recovered_otm = np.where(otm_types == 'call', recovered_call_prices, recovered_put_prices)

rmse = np.sqrt(mean_squared_error(observed_otm, recovered_otm))

mae = mean_absolute_error(observed_otm, recovered_otm)

max_error = np.max(np.abs(observed_otm - recovered_otm))

return rmse, mae, max_error, recovered_otm

def compute_statistical_properties(full_strikes, full_rnd, r, T):

"""统计性质"""

dx = full_strikes[1] - full_strikes[0]

total_prob = np.trapz(full_rnd, dx=dx)

expected_value = np.trapz(full_strikes * full_rnd, dx=dx)

variance = np.trapz((full_strikes - expected_value)**2 * full_rnd, dx=dx)

std_dev = np.sqrt(variance)

skewness = np.trapz(((full_strikes - expected_value)/std_dev)**3 * full_rnd, dx=dx)

kurtosis = np.trapz(((full_strikes - expected_value)/std_dev)**4 * full_rnd, dx=dx)

return {

'total_probability': total_prob,

'expected_value': expected_value,

'variance': variance,

'std_dev': std_dev,

'skewness': skewness,

'kurtosis': kurtosis

}

# ==================== 主图函数 ====================

def create_figure_1_tailhap():

strikes, otm_prices, otm_types, call_prices, put_prices, volatilities, F, r, T = generate_shanghai_data()

if np.isnan(F): F = 3000

dense_strikes, _, _, rnd, _, _, _ = recover_interior_rnd_from_otm_prices(

strikes, otm_prices, F, r, T, method='pchip'

)

full_strikes, full_rnd, tail_info = create_full_rnd_with_tail_info_smooth(

dense_strikes, rnd, F, 'weibull', integral_target=1.00045

)

rmse, mae, max_error, _ = evaluate_pricing_errors(strikes, call_prices, put_prices, full_strikes, full_rnd, F, r, T, otm_types)

stats = compute_statistical_properties(full_strikes, full_rnd, r, T)

fig, (ax1, ax2) = plt.subplots(2, 1, figsize=(12, 10))

ax1.plot(full_strikes, full_rnd, 'r-', linewidth=2, label='TailHAP RND')

boundary_left = F * 0.8; boundary_right = F * 1.2

left_mask = full_strikes < boundary_left; right_mask = full_strikes > boundary_right

ax1.fill_between(full_strikes[left_mask], 0, full_rnd[left_mask], alpha=0.3, color='green', label='Weibull Tails')

ax1.fill_between(full_strikes[right_mask], 0, full_rnd[right_mask], alpha=0.3, color='green')

in_sample = (strikes >= dense_strikes.min()) & (strikes <= dense_strikes.max())

ax1.scatter(strikes[in_sample], np.zeros(sum(in_sample)), color='blue', marker='+', s=100, label='Interpolation Region')

ax1.scatter(strikes[~in_sample], np.zeros(sum(~in_sample)), color='gray', marker='o', s=30, label='All Strikes')

ax1.set_title(f'风险中性密度 (TailHAP)\n当前: {F:.2f}, 积分: {stats["total_probability"]:.5f}, '

f'偏度: {stats["skewness"]:.3f}, 峰度: {stats["kurtosis"]:.3f}')

ax1.legend(); ax1.grid(True, alpha=0.3); ax1.set_ylabel('密度'); ax1.set_xlabel('到期价格')

ax2.scatter(strikes, otm_prices, color='lightblue', s=50, label='OTM Prices', alpha=0.7)

ax2.plot(dense_strikes, PchipInterpolator(strikes, otm_prices)(dense_strikes), 'b-', lw=2, label='PCHIP 插值')

ax2.set_xlabel('执行价'); ax2.set_ylabel('期权价格'); ax2.legend(); ax2.grid(True, alpha=0.3)

plt.tight_layout()

return fig, tail_info, {'rmse': rmse, 'mae': mae, 'max_error': max_error}, stats

def create_figure_2_bp():

strikes, otm_prices, otm_types, call_prices, put_prices, volatilities, F, r, T = generate_shanghai_data()

if np.isnan(F): F = 3000

dense_strikes, _, _, rnd, _, _, _ = recover_interior_rnd_from_otm_prices(

strikes, otm_prices, F, r, T, method='akima'

)

full_strikes, full_rnd, tail_info = create_full_rnd_with_tail_info_smooth(

dense_strikes, rnd, F, 'lognormal', integral_target=0.96392

)

rmse, mae, max_error, _ = evaluate_pricing_errors(strikes, call_prices, put_prices, full_strikes, full_rnd, F, r, T, otm_types)

stats = compute_statistical_properties(full_strikes, full_rnd, r, T)

fig, (ax1, ax2) = plt.subplots(2, 1, figsize=(12, 10))

ax1.plot(full_strikes, full_rnd, 'r-', linewidth=2, label='BP RND')

left_mask = full_strikes < F * 0.8; right_mask = full_strikes > F * 1.2

ax1.fill_between(full_strikes[left_mask], 0, full_rnd[left_mask], alpha=0.3, color='orange', label='Lognormal Tails')

ax1.fill_between(full_strikes[right_mask], 0, full_rnd[right_mask], alpha=0.3, color='orange')

in_sample = (strikes >= dense_strikes.min()) & (strikes <= dense_strikes.max())

ax1.scatter(strikes[in_sample], np.zeros(sum(in_sample)), color='blue', marker='+', s=100, label='Spline Region')

ax1.set_title(f'风险中性密度 (BP方法)\n当前: {F:.2f}, 积分: {stats["total_probability"]:.5f}, '

f'偏度: {stats["skewness"]:.3f}, 峰度: {stats["kurtosis"]:.3f}')

ax1.legend(); ax1.grid(True, alpha=0.3); ax1.set_ylabel('密度'); ax1.set_xlabel('到期价格')

ax2.scatter(strikes, otm_prices, color='lightblue', s=50, label='OTM Prices', alpha=0.7)

ax2.plot(dense_strikes, Akima1DInterpolator(strikes, otm_prices)(dense_strikes), 'b-', lw=2, label='Akima 插值')

ax2.set_xlabel('执行价'); ax2.set_ylabel('期权价格'); ax2.legend(); ax2.grid(True, alpha=0.3)

plt.tight_layout()

return fig, tail_info, {'rmse': rmse, 'mae': mae, 'max_error': max_error}, stats

def create_figure_3_fb():

strikes, otm_prices, otm_types, call_prices, put_prices, volatilities, F, r, T = generate_shanghai_data()

if np.isnan(F): F = 3000

dense_strikes, _, _, rnd, _, dense_vols, _ = recover_interior_rnd_from_otm_prices(

strikes, otm_prices, F, r, T, method='pchip'

)

full_strikes, full_rnd, tail_info = create_full_rnd_with_tail_info_smooth(

dense_strikes, rnd, F, 'gev', integral_target=0.99625

)

rmse, mae, max_error, _ = evaluate_pricing_errors(strikes, call_prices, put_prices, full_strikes, full_rnd, F, r, T, otm_types)

stats = compute_statistical_properties(full_strikes, full_rnd, r, T)

fig, (ax1, ax2) = plt.subplots(2, 1, figsize=(12, 10))

ax1.plot(full_strikes, full_rnd, 'r-', linewidth=2, label='FB RND')

left_mask = full_strikes < F * 0.8; right_mask = full_strikes > F * 1.2

ax1.fill_between(full_strikes[left_mask], 0, full_rnd[left_mask], alpha=0.3, color='purple', label='GEV Tails')

ax1.fill_between(full_strikes[right_mask], 0, full_rnd[right_mask], alpha=0.3, color='purple')

in_sample = (strikes >= dense_strikes.min()) & (strikes <= dense_strikes.max())

ax1.scatter(strikes[in_sample], np.zeros(sum(in_sample)), color='blue', marker='+', s=100, label='Interpolation Region')

ax1.set_title(f'风险中性密度 (FB方法)\n当前: {F:.2f}, 积分: {stats["total_probability"]:.5f}, '

f'偏度: {stats["skewness"]:.3f}, 峰度: {stats["kurtosis"]:.3f}')

ax1.legend(); ax1.grid(True, alpha=0.3); ax1.set_ylabel('密度'); ax1.set_xlabel('到期价格')

valid_mask = (strikes >= dense_strikes.min()) & (strikes <= dense_strikes.max())

filtered_s = strikes[valid_mask]

ax2.scatter(strikes, otm_prices, color='lightblue', s=50, label='OTM Prices', alpha=0.7)

ax2.plot(filtered_s, PchipInterpolator(strikes, otm_prices)(filtered_s), 'b-', lw=2, label='PCHIP Fit')

ax2.set_xlabel('执行价'); ax2.set_ylabel('期权价格'); ax2.legend(); ax2.grid(True, alpha=0.3)

plt.tight_layout()

return fig, tail_info, {'rmse': rmse, 'mae': mae, 'max_error': max_error}, stats

# ==================== 主程序入口 ====================

def main():

print("📊 开始分析上证指数风险中性密度...")

results = {}

try:

current_index = generate_shanghai_data()[6]

print(f"📈 当前上证指数水平: {current_index:.2f}")

except Exception as e:

print(f"❌ 错误: {e}")

current_index = 3000

print("\n🎨 生成 Figure 1 (TailHAP 方法)...")

try:

fig1, tail1, perf1, stat1 = create_figure_1_tailhap()

fig1.savefig('Figure_1_TailHAP_Shanghai.png', dpi=300, bbox_inches='tight')

plt.show()

results['TailHAP'] = {'tails': tail1, 'perf': perf1, 'stats': stat1}

except Exception as e:

print(f"❌ 生成 Figure 1 时出错: {e}")

print("\n🎨 生成 Figure 2 (BP 方法)...")

try:

fig2, tail2, perf2, stat2 = create_figure_2_bp()

fig2.savefig('Figure_2_BP_Shanghai.png', dpi=300, bbox_inches='tight')

plt.show()

results['BP'] = {'tails': tail2, 'perf': perf2, 'stats': stat2}

except Exception as e:

print(f"❌ 生成 Figure 2 时出错: {e}")

print("\n🎨 生成 Figure 3 (FB 方法)...")

try:

fig3, tail3, perf3, stat3 = create_figure_3_fb()

fig3.savefig('Figure_3_FB_Shanghai.png', dpi=300, bbox_inches='tight')

plt.show()

results['FB'] = {'tails': tail3, 'perf': perf3, 'stats': stat3}

except Exception as e:

print(f"❌ 生成 Figure 3 时出错: {e}")

print("\n🎉 所有图表已成功生成并保存!")

return results

if __name__ == "__main__":

results = main()

上述改进导致风险中性密度成阶梯锯齿状了,请修正,并将修正汇总成完整可运行代码

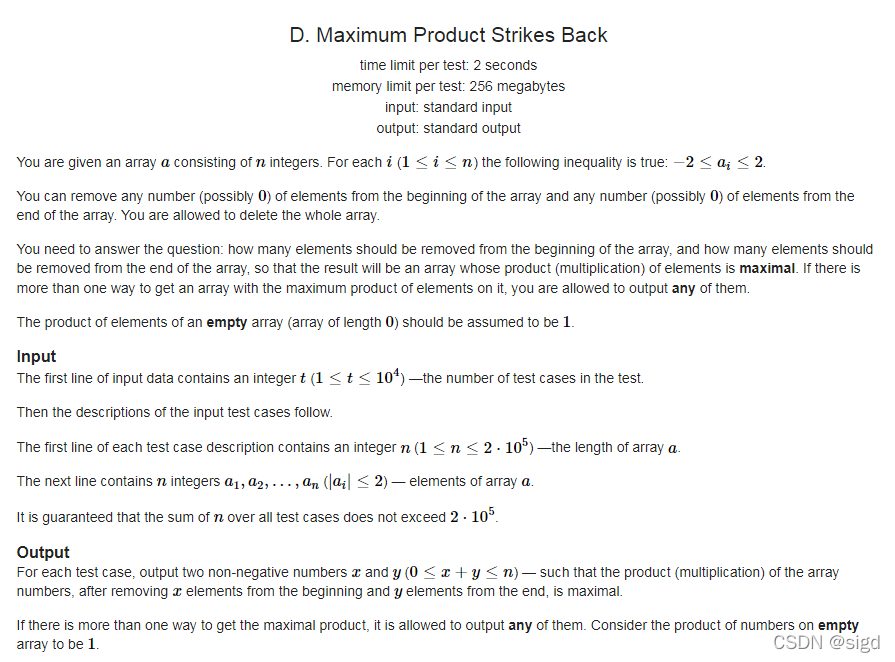

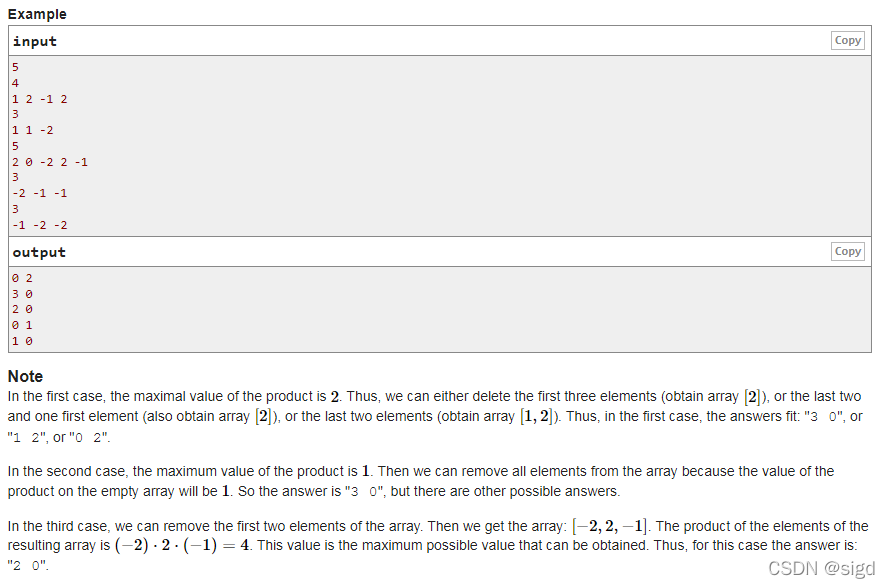

博客讲述了如何解决Codeforces的一道题目,该题目要求找到一个连续子数组,使得其乘积最大。解题者首先分析了问题,指出区间内不能包含0,然后利用前缀和数组记录2和-2的个数以及负数个数,通过判断负数个数的奇偶性来确定最佳子数组。最后给出了详细的解题步骤和代码实现,强调了数组命名的重要性。

博客讲述了如何解决Codeforces的一道题目,该题目要求找到一个连续子数组,使得其乘积最大。解题者首先分析了问题,指出区间内不能包含0,然后利用前缀和数组记录2和-2的个数以及负数个数,通过判断负数个数的奇偶性来确定最佳子数组。最后给出了详细的解题步骤和代码实现,强调了数组命名的重要性。

370

370

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?