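The article "What is vm.min_free_kbytes and how to tune it?" runs some interesting tests on min_free_kbytes. Reading it, I realized that this parameter affects not only the watermarks used by Linux memory reclaim (direct reclaim in particular) but also the system's available memory.

Some background: Linux reclaims memory in two ways. The first is background reclaim by kswapd. In early kernels kswapd woke up periodically, so this was also called periodic reclaim; that turned out to be unnecessary, and modern kernels instead wake kswapd from the page allocation path based on each zone's watermarks. The second is direct reclaim, also called synchronous reclaim: free memory has dropped to watermark[min], which means memory is extremely tight, so the process calling the page allocator is blocked until enough memory has been reclaimed. If reclaim still cannot free enough memory, the page allocator falls through to the OOM path via out_of_memory().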

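Once /proc/sys/vm/min_free_kbytes is set, watermark[low] and watermark[high] are derived from watermark[min]. The default formulas are as follows (newer kernels that provide vm.watermark_scale_factor may widen the gaps beyond these ratios):

watermark[min] = per_zone_min_free_pages (min_free_kbytes converted to pages)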

watermark[low] = watermark[min] * 5 / 4

watermark[high] = watermark[min] * 3 / 2
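As a worked example of the three formulas above, here is a minimal sketch that turns a min_free_kbytes value into watermarks expressed in pages. The function name `watermarks` is hypothetical, and two simplifying assumptions are made: pages are 4 KiB, and the whole value is assigned to a single zone (a real system splits it across zones in proportion to their size).

```shell
#!/bin/bash
# watermarks KBYTES [PAGE_KB]: print min/low/high in pages for one zone,
# using the classic ratios low = min * 5/4 and high = min * 3/2.
# Hypothetical helper; assumes a single zone and 4 KiB pages by default.
watermarks() {
    local kbytes="$1" page_kb="${2:-4}"
    local min=$(( kbytes / page_kb ))      # kB -> pages
    echo "min=${min} low=$(( min * 5 / 4 )) high=$(( min * 3 / 2 ))"
}

watermarks 67584   # prints: min=16896 low=21120 high=25344
```

With min_free_kbytes at 67584, the gap between low and min is only 4224 pages (about 16 MB), which is all the headroom kswapd has before allocations start hitting direct reclaim.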

You can verify these values in /proc/zoneinfo.
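One way to pull just the watermark lines out of /proc/zoneinfo is sketched below. The function name `zone_watermarks` is hypothetical, and the parsing assumes the usual layout in which each zone starts with a `Node N, zone NAME` line followed by indented `min`, `low` and `high` lines giving values in pages; the exact set of fields varies between kernel versions.

```shell
#!/bin/bash
# zone_watermarks [FILE]: print "<zone> <watermark> <pages>" for every zone.
# Hypothetical helper; reads /proc/zoneinfo unless another file is given.
zone_watermarks() {
    awk '
        # Remember the zone name from lines like "Node 0, zone   Normal".
        $1 == "Node" && $3 == "zone" { zone = $4 }
        # Watermark lines have the bare keyword as their first field.
        $1 == "min" || $1 == "low" || $1 == "high" { print zone, $1, $2 }
    ' "${1:-/proc/zoneinfo}"
}
```

Running `zone_watermarks` before and after changing min_free_kbytes shows how the new value is distributed across the zones.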

Setting min_free_kbytes either too high or too low causes performance problems: https://access.redhat.com/documentation/zh-cn/red_hat_enterprise_linux/6/html/performance_tuning_guide/s-memory-tunables

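If you find the system stalling or showing latency spikes because of direct reclaim (I/O metrics look abnormal and the load average rises when this happens), you need to raise min_free_kbytes. Adjust it gradually while watching pgscand/s and %vmeff in the output of sar -B. Why does this help? Because min = min_free_kbytes, low = 1.25 × min, and high = 1.5 × min. When free memory falls to low, kswapd is woken to reclaim in the background; flushing dirty pages and swapping out does cost disk I/O, but in the vast majority of cases it does not stall processes. When free memory falls to min, however, every process requesting physical memory at that moment is blocked in direct reclaim until enough has been reclaimed to satisfy its request. If min_free_kbytes is too small, the gap between low and min is small as well, so direct reclaim kicks in shortly after kswapd starts. Widening that gap gives background reclaim enough time to free memory and reduces the impact of direct reclaim.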
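If sar is not installed, the raw counters behind those columns can be read from /proc/vmstat. The sketch below is only an illustration: the function name `reclaim_counters` is hypothetical, and the counter names `pgscan_kswapd`, `pgscan_direct` and `allocstall*` are assumed to match recent kernels (older kernels split them per zone under different names).

```shell
#!/bin/bash
# reclaim_counters [FILE]: print the cumulative page-scan counters for
# background (kswapd) and direct reclaim, plus allocation-stall counters.
# Hypothetical helper; reads /proc/vmstat unless another file is given.
reclaim_counters() {
    awk '
        $1 == "pgscan_kswapd" || $1 == "pgscan_direct" || $1 ~ /^allocstall/
    ' "${1:-/proc/vmstat}"
}
```

The values are cumulative since boot, so call `reclaim_counters` before and after a workload: if pgscan_direct grew in between, processes spent time in direct reclaim during that window.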

Of course, a larger min_free_kbytes reduces the system's available memory (see the quoted article below), so it cannot be raised without limit. That is why you should adjust it gradually while observing sar -B.

Original article, "What is vm.min_free_kbytes and how to tune it?": https://linuxhint.com/vm_min_free_kbytes_sysctl/

What is vm.min_free_kbytes and how to tune it?

What is vm.min_free_kbytes sysctl tunable for linux kernel and what value should it be set to? We will study this parameter and how it impacts a running linux system in this article. We will test its impact on the OS page cache and on mallocs and what the system free command shows when this parameter is set. We will make some educated guesses on ideal values for this tunable and we will show how to set vm.min_free_kbytes permanently to survive reboots. So let’s go.

How vm.min_free_kbytes works

Memory allocations may be needed by the system in order to ensure proper functioning of the system itself. If the kernel allows all memory to be allocated it might struggle when needing memory for regular operations to keep the OS running smoothly. That is why the kernel provides the tunable vm.min_free_kbytes. The tunable will force the kernel’s memory manager to keep at least X amount of free memory. Here is the official definition from the linux kernel documentation: “This is used to force the Linux VM to keep a minimum number of kilobytes free. The VM uses this number to compute a watermark[WMARK_MIN] value for each lowmem zone in the system. Each lowmem zone gets a number of reserved free pages based proportionally on its size. Some minimal amount of memory is needed to satisfy PF_MEMALLOC allocations; if you set this to lower than 1024KB, your system will become subtly broken, and prone to deadlock under high loads. Setting this too high will OOM your machine instantly.“

Validating vm.min_free_kbytes Works

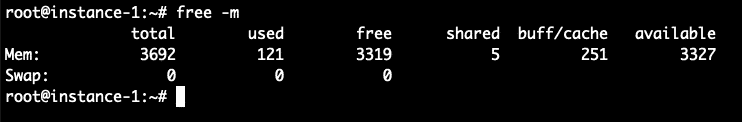

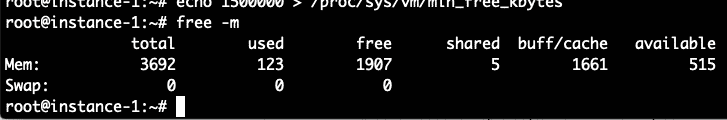

In order to test that the setting of min_free_kbytes is working as designed, I have created a linux virtual instance with only 3.75 GB of RAM. Use the free command below to analyze the system:

# free -m

The free command was run with the -m flag to print the values in MB. The total memory is 3.5 to 3.75 GB: 121 MB is used, 3.3 GB is free, and 251 MB is used by the buffer cache. 3.3 GB of memory is available.

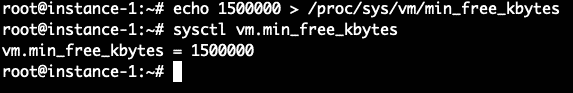

Now we are going to change the value of vm.min_free_kbytes and see what the impact is on the system memory. We will echo the new value to the proc virtual filesystem to change the kernel parameter value as per below:

# echo 1500000 > /proc/sys/vm/min_free_kbytes

# sysctl vm.min_free_kbytes

You can see that the parameter was changed to 1.5 GB approximately and has taken effect. Now let’s use the free command again to see any changes recognized by the system.

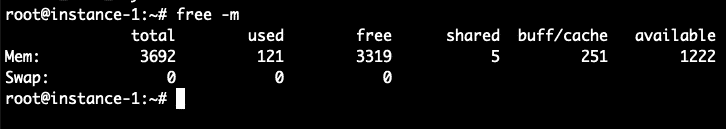

# free -m

The free memory and the buffer cache are unchanged by the command, but the amount of memory displayed as available has dropped from 3327 MB to 1222 MB. That is a reduction of about 2.1 GB, on the order of the 1.5 GB now reserved as minimum free memory.

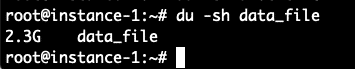

Now let’s create a 2GB data file and then see what reading that file into the buffer cache does to the values. Here is how to create a 2GB data file in 2 lines of bash script below. The script will generate a 35MB random file using the dd command and then copy it 70 times into a new data_file output:

# dd if=/dev/random of=/root/d1.txt count=1000000

# for i in `seq 1 70`; do echo $i; cat /root/d1.txt >> /root/data_file; done

Let’s read the file and ignore the contents by reading and redirecting the file to /dev/null as per below:

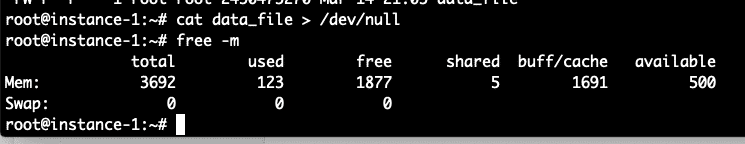

# cat data_file > /dev/null

Ok, what has happened to our system memory with this set of maneuvers? Let’s check it now:

# free -m

Let’s analyze the results above. We still have 1.8 GB of free memory, so the kernel has protected a large chunk of memory as reserved because of our min_free_kbytes setting. The buffer cache has used 1691 MB, which is less than the total size of our data file, 2.3 GB. Apparently the entire data_file could not be stored in cache due to the lack of available memory to use for the buffer cache. We can validate that the entire file is not stored in cache by timing repeated attempts to read the file. If it were cached, it would take a fraction of a second to read the file. Let’s try it.

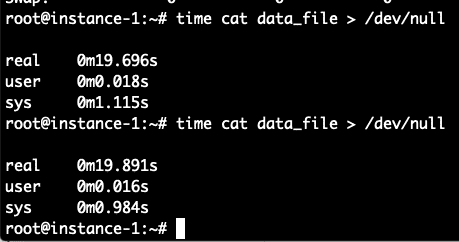

# time cat data_file > /dev/null

# time cat data_file > /dev/null

The file read took almost 20 seconds, which implies it’s almost certainly not all cached.

As one final validation let’s reduce the vm.min_free_kbytes to allow the page cache to have more room to operate and we can expect to see the cache working and the file read getting much faster.

# echo 67584 > /proc/sys/vm/min_free_kbytes

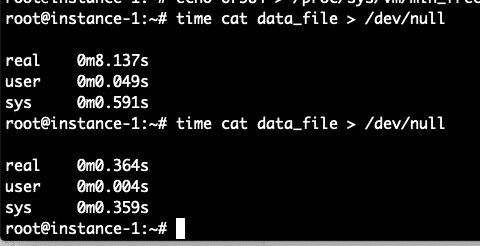

# time cat data_file > /dev/null

# time cat data_file > /dev/null

With the extra memory available for caching, the file read time dropped from 20 seconds to 0.364 seconds once the file was entirely in cache.

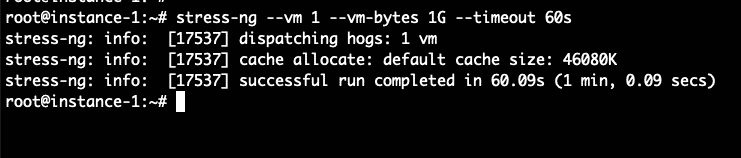

I am curious to do another experiment. What happens with malloc calls to allocate memory from a C program in the face of this really high vm.min_free_kbytes setting? Will it fail the malloc? Will the system die? First reset the vm.min_free_kbytes setting to the really high value to resume our experiments:

# echo 1500000 > /proc/sys/vm/min_free_kbytes

Let’s look again at our free memory:

Theoretically we have 1.9 GB free and 515 MB available. Let’s use a stress test program called stress-ng in order to use some memory and see where we fail. We will use the vm tester and try to allocate 1 GB of memory. Since we have only reserved 1.5 GB on a 3.75 GB system, I guess this should work.

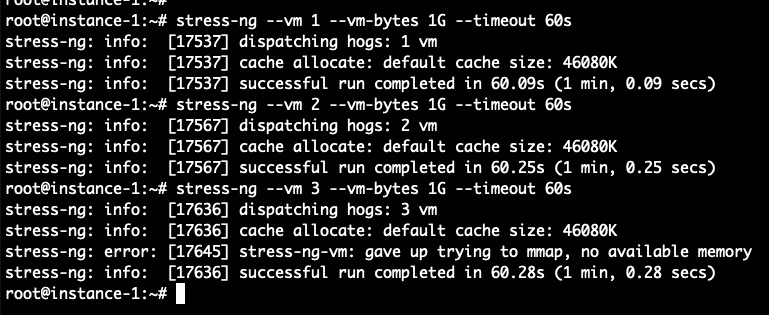

# stress-ng --vm 1 --vm-bytes 1G --timeout 60s

stress-ng: info: [17537] dispatching hogs: 1 vm

stress-ng: info: [17537] cache allocate: default cache size: 46080K

stress-ng: info: [17537] successful run completed in 60.09s (1 min, 0.09 secs)

Let’s try it again with more workers. We can try 1, 2, 3, 4 workers, and at some point it should fail. In my test it passed with 1 and 2 workers but failed with 3 workers.

# stress-ng --vm 2 --vm-bytes 1G --timeout 60s

# stress-ng --vm 3 --vm-bytes 1G --timeout 60s

Let’s reset the vm.min_free_kbytes to a low number and see if that helps us run 3 memory stressors with 1GB each on a 3.75GB system.

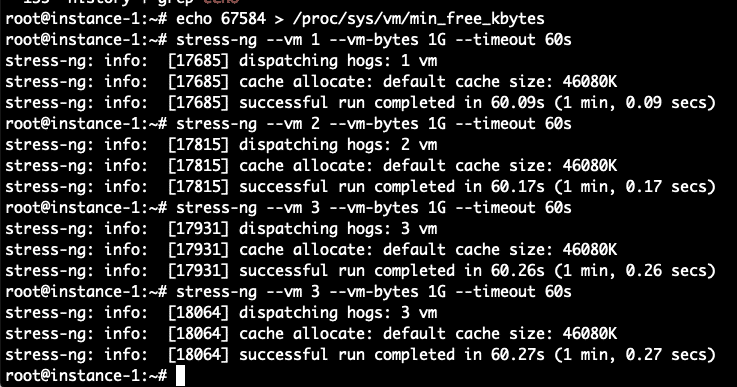

# echo 67584 > /proc/sys/vm/min_free_kbytes

# stress-ng --vm 3 --vm-bytes 1G --timeout 60s

This time it ran successfully without error; I tried it two times without problems. So I can conclude that more memory is available for malloc when the vm.min_free_kbytes value is set lower.

Default setting for vm.min_free_kbytes

The default value for the setting on my system is 67584, which is 66 MB or about 1.8% of RAM on the system. For safety reasons on a heavily thrashed system I would tend to increase it a bit, perhaps to 128 MB, to allow for more reserved free memory; however, for average usage the default value seems sensible enough. The official documentation warns about making the value too high. Setting it to 5 or 10% of the system RAM is probably not the intended usage of the setting, and is too high.

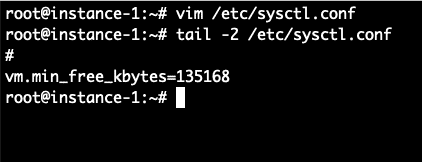

Setting vm.min_free_kbytes to survive reboots

To ensure the setting survives reboots and is not restored to its default value, make the sysctl setting persistent by putting the desired new value in the /etc/sysctl.conf file.
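A minimal sketch of that step follows. The function name `persist_min_free_kbytes` is hypothetical, the value 67584 is simply the default seen on the test system above, and the in-place edit assumes GNU sed. An existing entry is removed first so that the file ends up with a single `vm.min_free_kbytes` line.

```shell
#!/bin/bash
# persist_min_free_kbytes VALUE [FILE]: record the setting in a sysctl
# configuration file (default: /etc/sysctl.conf).
# Hypothetical helper; assumes GNU sed for the in-place edit.
persist_min_free_kbytes() {
    local value="$1" conf="${2:-/etc/sysctl.conf}"
    # Drop any previous entry so the setting appears only once.
    if [ -f "$conf" ]; then
        sed -i '/^[[:space:]]*vm\.min_free_kbytes[[:space:]]*=/d' "$conf"
    fi
    printf 'vm.min_free_kbytes = %s\n' "$value" >> "$conf"
}
```

Run as root, `persist_min_free_kbytes 67584` followed by `sysctl -p` writes the entry and then loads /etc/sysctl.conf, so the value applies immediately as well as after the next reboot.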

Conclusion

We have seen that the vm.min_free_kbytes linux kernel tunable can be modified and can reserve memory on the system in order to ensure the system is more stable especially during heavy usage and heavy memory allocations. The default settings might be a little too low, especially on high memory systems and should be considered to be increased carefully. We have seen that the memory reserved by this tunable prevents the OS cache from using all the memory and also prevents some malloc operations from using all the memory too.

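Summary: this post looks at how the Linux kernel parameter vm.min_free_kbytes works and how it affects memory management, direct reclaim in particular. Setting it too high or too low can cause performance problems. Raising min_free_kbytes can reduce the stalls caused by direct reclaim, but setting it too high reduces available memory. The effect of each adjustment can be monitored with sar -B.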
