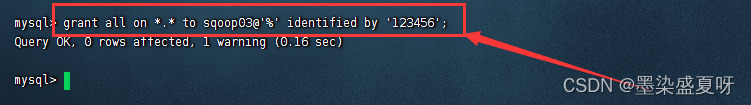

- In MySQL, create a user named sqoop03 with the password 123456

Start MySQL: support-files/mysql.server start

Log in to MySQL: mysql -u root -p

Create the user sqoop03: grant all on *.* to sqoop03@'%' identified by '123456';
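The `grant ... identified by` form above is accepted by MySQL 5.7 and earlier. MySQL 8.0 removed it, so there the user has to be created first and granted privileges in a second statement. A minimal sketch, assuming MySQL 8.0 and the same user name and password as above; the function name `create_user_sql` is made up for illustration, and it only prints the statements:

```shell
#!/bin/sh
# Print the MySQL 8.0 equivalent of the single GRANT statement above.
# Assumption: same user name, host wildcard, and password as in the text.
create_user_sql() {
  cat <<'SQL'
CREATE USER IF NOT EXISTS 'sqoop03'@'%' IDENTIFIED BY '123456';
GRANT ALL ON *.* TO 'sqoop03'@'%';
SQL
}

# Dry run: show the statements without touching the server.
create_user_sql
```

To apply them, the output can be piped into the client: `create_user_sql | mysql -u root -p`.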

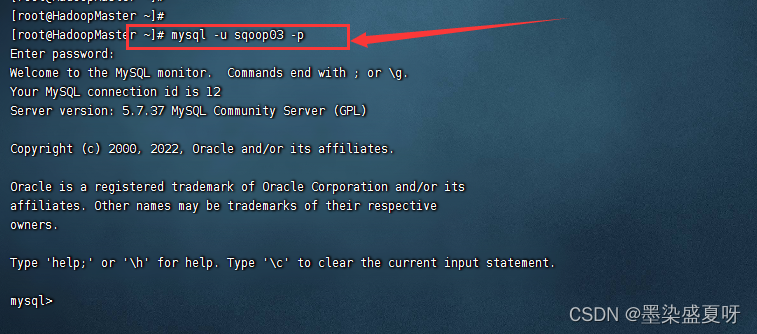

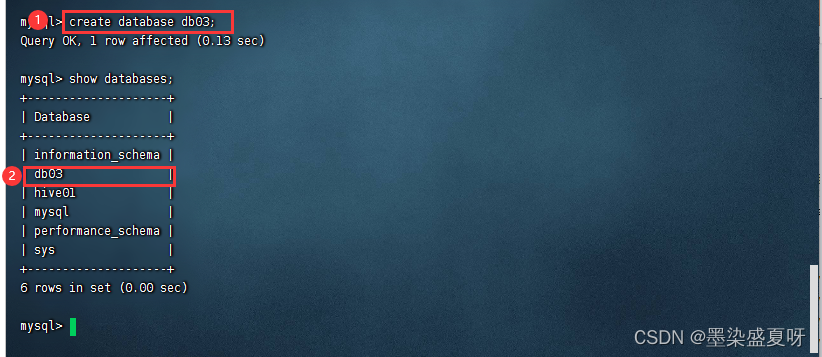

(1) Log in to MySQL as the sqoop03 user in order to create a database named db03:

mysql -u sqoop03 -p

(2) Create the database db03

create database db03;

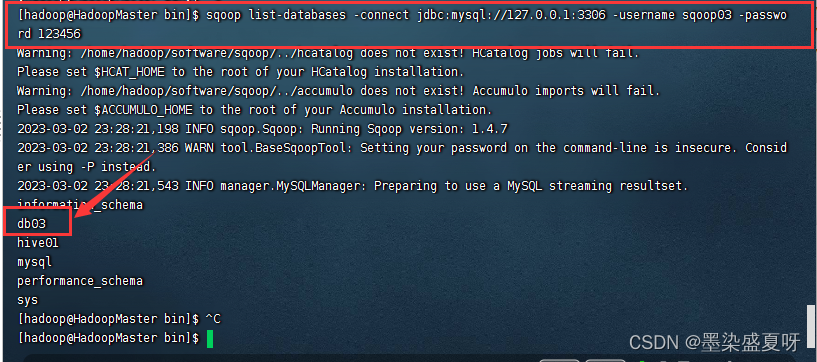

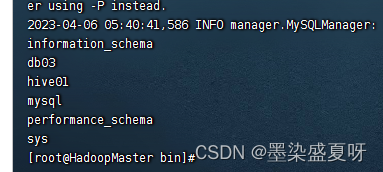

2. Use Sqoop to list the databases visible to the MySQL user sqoop03

Command: sqoop list-databases --connect jdbc:mysql://127.0.0.1:3306 --username sqoop03 --password 123456
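Sqoop logs a warning when the password is given on the command line and suggests `-P`, which prompts for it on the console instead. A small sketch of the same listing with `-P`, assuming the same JDBC URL and user as above; `list_databases_cmd` is a hypothetical helper that only prints the command:

```shell
#!/bin/sh
# Build the list-databases command with an interactive password prompt (-P)
# instead of a plain-text password argument.
# Assumption: same JDBC URL and user as in the command above.
list_databases_cmd() {
  printf '%s\n' "sqoop list-databases --connect jdbc:mysql://127.0.0.1:3306 --username sqoop03 -P"
}

# Dry run: print the command rather than executing it.
list_databases_cmd
```

Copying the printed line into a terminal runs the listing and asks for the password interactively.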

3. Create 10 tables in the database db03. Table and column names are up to you; each table must contain at least 5 rows

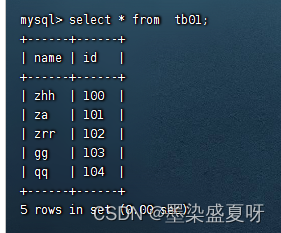

(1) Create the first table

mysql> use db03

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> create table tb01(name char(100),id char(100));

Query OK, 0 rows affected (0.75 sec)

mysql> insert into tb01 values('zhh','100');

Query OK, 1 row affected (0.16 sec)

mysql> insert into tb01 values('za','101');

Query OK, 1 row affected (0.09 sec)

mysql> insert into tb01 values('zrr','102');

Query OK, 1 row affected (0.02 sec)

mysql> insert into tb01 values('gg','103');

Query OK, 1 row affected (0.01 sec)

mysql> insert into tb01 values('qq','104');

Query OK, 1 row affected (0.04 sec)

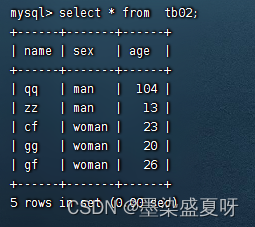

(2) Create the second table

mysql> create table tb02(name char(100),sex char(30),age int);

Query OK, 0 rows affected (0.26 sec)

mysql> insert into tb02 values('qq','man','104');

Query OK, 1 row affected (0.12 sec)

mysql> insert into tb02 values('zz','man','13');

Query OK, 1 row affected (0.00 sec)

mysql> insert into tb02 values('cf','woman','23');

Query OK, 1 row affected (0.01 sec)

mysql> insert into tb02 values('gg','woman','20');

Query OK, 1 row affected (0.02 sec)

mysql> insert into tb02 values('gf','woman','26');

Query OK, 1 row affected (0.00 sec)

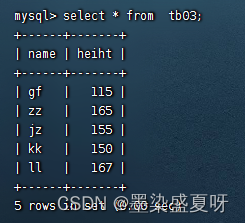

(3) Create the third table

mysql> create table tb03(name char(100),heiht float);

Query OK, 0 rows affected (0.26 sec)

mysql> insert into tb03 values('gf',115);

Query OK, 1 row affected (0.26 sec)

mysql> insert into tb03 values('zz',165);

Query OK, 1 row affected (0.06 sec)

mysql> insert into tb03 values('jz',155);

Query OK, 1 row affected (0.01 sec)

mysql> insert into tb03 values('kk',150);

Query OK, 1 row affected (0.00 sec)

mysql> insert into tb03 values('ll',167);

Query OK, 1 row affected (0.01 sec)

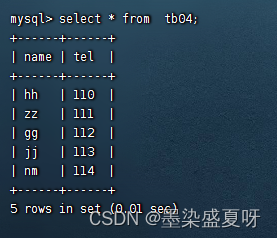

(4) Create the fourth table

mysql> create table tb04 (name char(100), tel char(30));

Query OK, 0 rows affected (0.11 sec)

mysql> insert into tb04 values('hh','110');

Query OK, 1 row affected (0.04 sec)

mysql> insert into tb04 values('zz','111');

Query OK, 1 row affected (0.01 sec)

mysql> insert into tb04 values('gg','112');

Query OK, 1 row affected (0.01 sec)

mysql> insert into tb04 values('jj','113');

Query OK, 1 row affected (0.01 sec)

mysql> insert into tb04 values('nm','114');

Query OK, 1 row affected (0.01 sec)

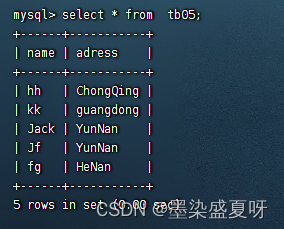

(5) Create the fifth table

mysql> create table tb05 (name char(100),adress char(100));

Query OK, 0 rows affected (0.08 sec)

mysql> insert into tb05 values('hh','ChongQing');

Query OK, 1 row affected (0.04 sec)

mysql> insert into tb05 values('kk','guangdong');

Query OK, 1 row affected (0.00 sec)

mysql> insert into tb05 values('Jack','YunNan');

Query OK, 1 row affected (0.01 sec)

mysql> insert into tb05 values('Jf','YunNan');

Query OK, 1 row affected (0.00 sec)

mysql> insert into tb05 values('fg','HeNan');

Query OK, 1 row affected (0.00 sec)

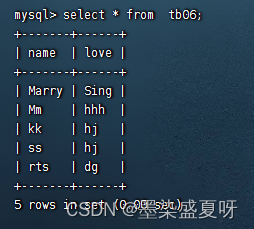

(6) Create the sixth table

mysql> create table tb06 (name char(100),love char(50));

Query OK, 0 rows affected (0.14 sec)

mysql> insert into tb06 values('Marry','Sing');

Query OK, 1 row affected (0.00 sec)

mysql> insert into tb06 values('Mm','hhh');

Query OK, 1 row affected (0.06 sec)

mysql> insert into tb06 values('kk','hj');

Query OK, 1 row affected (0.01 sec)

mysql> insert into tb06 values('ss','hj');

Query OK, 1 row affected (0.00 sec)

mysql> insert into tb06 values('rts','dg');

Query OK, 1 row affected (0.01 sec)

(7) Create the seventh table

mysql> create table tb07 (name char(100),academic char(50));

Query OK, 0 rows affected (0.14 sec)

mysql> insert into tb07 values('Marry','Senior');

Query OK, 1 row affected (0.01 sec)

mysql> insert into tb07 values('Mm','Senior');

Query OK, 1 row affected (0.00 sec)

mysql> insert into tb07 values('oo','Ungergraduate');

Query OK, 1 row affected (0.01 sec)

mysql> insert into tb07 values('hh','Ungergraduate');

Query OK, 1 row affected (0.01 sec)

mysql> insert into tb07 values('iu','Ungergraduate');

Query OK, 1 row affected (0.00 sec)

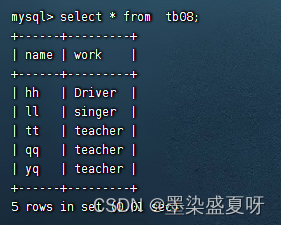

(8) Create the eighth table

mysql> create table table08 (name char(100),work char(50));

ERROR 1050 (42S01): Table 'table08' already exists

mysql> create table tb08 (name char(100),work char(50));

Query OK, 0 rows affected (0.14 sec)

mysql> insert into tb08 values('hh','Driver');

Query OK, 1 row affected (0.03 sec)

mysql> insert into tb08 values('ll','singer');

Query OK, 1 row affected (0.00 sec)

mysql> insert into tb08 values('tt','teacher');

Query OK, 1 row affected (0.01 sec)

mysql> insert into tb08 values('qq','teacher');

Query OK, 1 row affected (0.00 sec)

mysql> insert into tb08 values('yq','teacher');

Query OK, 1 row affected (0.00 sec)

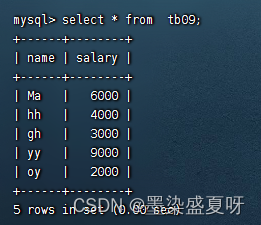

(9) Create the ninth table

mysql> create table tb09 (name char(100),salary int);

Query OK, 0 rows affected (0.23 sec)

mysql> insert into tb09 values('Ma',6000);

Query OK, 1 row affected (0.01 sec)

mysql> insert into tb09 values('hh',4000);

Query OK, 1 row affected (0.00 sec)

mysql> insert into tb09 values('gh',3000);

Query OK, 1 row affected (0.00 sec)

mysql> insert into tb09 values('yy',9000);

Query OK, 1 row affected (0.00 sec)

mysql> insert into tb09 values('oy',2000);

Query OK, 1 row affected (0.00 sec)

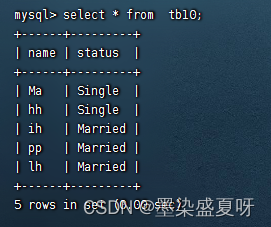

(10) Create the tenth table

mysql> create table tb10 (name char(100),status char(30));

Query OK, 0 rows affected (0.11 sec)

mysql> insert into tb10 values('Ma','Single');

Query OK, 1 row affected (0.09 sec)

mysql> insert into tb10 values('hh','Single');

Query OK, 1 row affected (0.00 sec)

mysql> insert into tb10 values('ih','Married');

Query OK, 1 row affected (0.01 sec)

mysql> insert into tb10 values('pp','Married');

Query OK, 1 row affected (0.00 sec)

mysql> insert into tb10 values('lh','Married');

Query OK, 1 row affected (0.01 sec)

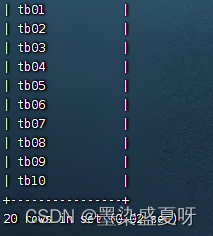

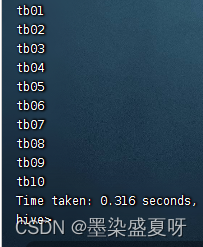

The 10 tables created are shown below:

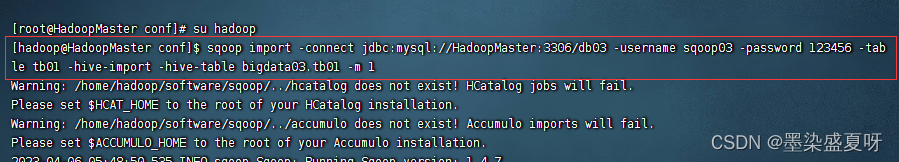
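Since each table is required to hold at least 5 rows, a quick count per table is a useful check before importing. The sketch below generates one `COUNT(*)` query for each of tb01 through tb10; the function name `row_count_sql` and the column aliases are illustrative only:

```shell
#!/bin/sh
# Print one COUNT(*) query per table tb01..tb10 so the "at least 5 rows"
# requirement can be checked in a single pass.
row_count_sql() {
  i=1
  while [ "$i" -le 10 ]; do
    # %02d pads the index to two digits: tb01, tb02, ..., tb10
    printf "SELECT 'tb%02d' AS tbl, COUNT(*) AS n FROM db03.tb%02d;\n" "$i" "$i"
    i=$((i + 1))
  done
}

# Dry run: show the generated queries.
row_count_sql
```

To run the queries, pipe them into the client: `row_count_sql | mysql -u sqoop03 -p`.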

4. Use the ETL tool Sqoop to import (synchronize) the structure and data of the 10 tables in the MySQL database db03 into Hive on the big data platform

(1) Run Sqoop (listing the databases confirms that it can reach MySQL):

[root@HadoopMaster bin]# ./sqoop list-databases --connect jdbc:mysql://HadoopMaster:3306/ --username sqoop03 --password 123456

Import command:

sqoop import --connect jdbc:mysql://HadoopMaster:3306/db03 --username sqoop03 --password 123456 --table tb01 --hive-import --hive-table bigdata03.tb01 -m 1

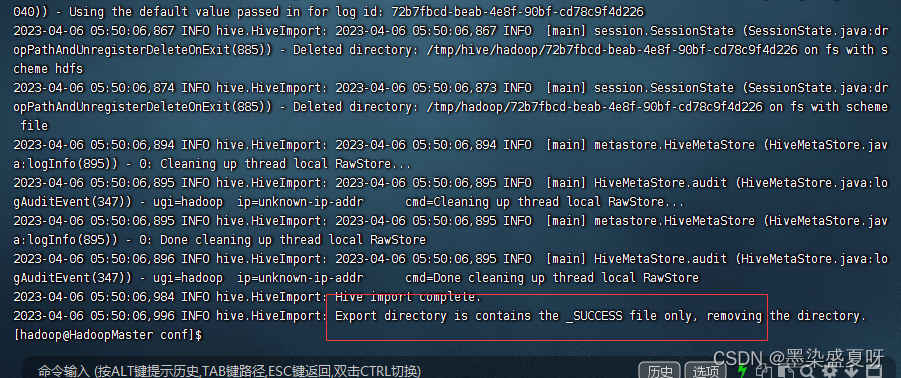

Output on success:

(Run the same command for each of the 10 tables in turn, changing only the table name.)
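Typing the import command ten times can be replaced by a loop. The sketch below prints one `sqoop import` command per table, assuming the same host, credentials, and Hive database (bigdata03, which is assumed to exist already) as in the command above; the function name `import_cmds` is illustrative. The `-m 1` option is kept because none of the tables defines a primary key, and without one Sqoop needs either `--split-by` or a single mapper:

```shell
#!/bin/sh
# Print one "sqoop import" command per table tb01..tb10.
# Assumptions: same host, credentials, and Hive database as in the text.
import_cmds() {
  i=1
  while [ "$i" -le 10 ]; do
    t=$(printf 'tb%02d' "$i")   # tb01, tb02, ..., tb10
    printf '%s\n' "sqoop import --connect jdbc:mysql://HadoopMaster:3306/db03 --username sqoop03 --password 123456 --table $t --hive-import --hive-table bigdata03.$t -m 1"
    i=$((i + 1))
  done
}

# Dry run: list the ten commands without executing them.
import_cmds
```

After reviewing the output, the commands can be executed with `import_cmds | sh`.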

5. Results:

hive> show tables;
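Because the import targeted `bigdata03`, `show tables` only lists the new tables when that database is selected first. A short sketch of a check script, assuming the Hive database name from the import command; `hive_check_hql` is a hypothetical helper that prints the HiveQL:

```shell
#!/bin/sh
# Print HiveQL that selects the target database, lists its tables,
# and spot-checks the row count of the first imported table.
# Assumption: the Hive database is bigdata03, as in the import command.
hive_check_hql() {
  cat <<'HQL'
USE bigdata03;
SHOW TABLES;
SELECT COUNT(*) FROM tb01;
HQL
}

# Dry run: show the statements.
hive_check_hql
```

One way to run it is to save the output with `hive_check_hql > check.hql` and then call `hive -f check.hql`.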

The same result viewed in the HDFS web UI on port 9870:

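A user named sqoop03 was created in MySQL with a password, along with the database db03. As sqoop03, 10 tables with different structures were then created in db03 and populated with data. Finally, Sqoop was used to import the structure and data of these tables into Hive on the Hadoop cluster.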
