19/05/27 08:08:39 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

19/05/27 08:08:39 INFO namenode.NameNode: createNameNode [-bootstrapStandby]

19/05/27 08:08:42 INFO ipc.Client: Retrying connect to server: hadoop01/192.168.100.12:9000. Already tried 0 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

19/05/27 08:08:43 INFO ipc.Client: Retrying connect to server: hadoop01/192.168.100.12:9000. Already tried 1 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

19/05/27 08:08:44 INFO ipc.Client: Retrying connect to server: hadoop01/192.168.100.12:9000. Already tried 2 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

19/05/27 08:08:45 INFO ipc.Client: Retrying connect to server: hadoop01/192.168.100.12:9000. Already tried 3 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

19/05/27 08:08:46 INFO ipc.Client: Retrying connect to server: hadoop01/192.168.100.12:9000. Already tried 4 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

19/05/27 08:08:47 INFO ipc.Client: Retrying connect to server: hadoop01/192.168.100.12:9000. Already tried 5 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

19/05/27 08:08:48 INFO ipc.Client: Retrying connect to server: hadoop01/192.168.100.12:9000. Already tried 6 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

19/05/27 08:08:49 INFO ipc.Client: Retrying connect to server: hadoop01/192.168.100.12:9000. Already tried 7 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

19/05/27 08:08:50 INFO ipc.Client: Retrying connect to server: hadoop01/192.168.100.12:9000. Already tried 8 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

19/05/27 08:08:51 INFO ipc.Client: Retrying connect to server: hadoop01/192.168.100.12:9000. Already tried 9 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

19/05/27 08:08:51 FATAL ha.BootstrapStandby: Unable to fetch namespace information from active NN at hadoop01/192.168.100.12:9000: Call From hadoop02/192.168.100.13 to hadoop01:9000 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused

19/05/27 08:08:51 INFO util.ExitUtil: Exiting with status 2

19/05/27 08:08:51 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at hadoop02/192.168.100.13

************************************************************/

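Solution:

"Connection refused" usually means nothing was listening on hadoop01:9000. Typically the NameNode on hadoop01 was not running yet, and `-bootstrapStandby` needs that NameNode to be up. The workaround used here was to copy the NameNode metadata directory between the two NameNode hosts with scp instead: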

scp -r hadoop02:/apps/hadoop/data/dfs/ hadoop01:/root/apps/hadoop/data/
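The log shows the client giving up after its configured 10 retries at 1-second intervals. Before retrying `-bootstrapStandby`, it can help to confirm that hadoop01:9000 accepts connections at all. Below is a minimal sketch, assuming bash (for its `/dev/tcp` redirection) and GNU coreutils `timeout`. `wait_for_port` is a hypothetical helper name and is not part of Hadoop:

```bash
#!/usr/bin/env bash
# Hypothetical helper: wait until host:port accepts a TCP connection.
# Returns 0 as soon as a connection succeeds, 1 after max_tries failed attempts.
wait_for_port() {
  local host=$1 port=$2 max_tries=${3:-10} i=1
  while [ "$i" -le "$max_tries" ]; do
    # bash's /dev/tcp pseudo-path opens a TCP connection;
    # timeout caps each attempt at 2 seconds.
    if timeout 2 bash -c ": < /dev/tcp/$host/$port" 2>/dev/null; then
      return 0
    fi
    # Pause between attempts, but not after the last one.
    if [ "$i" -lt "$max_tries" ]; then
      sleep 1
    fi
    i=$((i + 1))
  done
  return 1
}

# Example (on hadoop02): only bootstrap once the NameNode on hadoop01 is reachable.
#   wait_for_port hadoop01 9000 10 && hdfs namenode -bootstrapStandby
```

If the check keeps failing, start the NameNode on hadoop01 first, or check for a firewall between the hosts, before running the bootstrap again.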

Error when dynamically adding a DataNode:

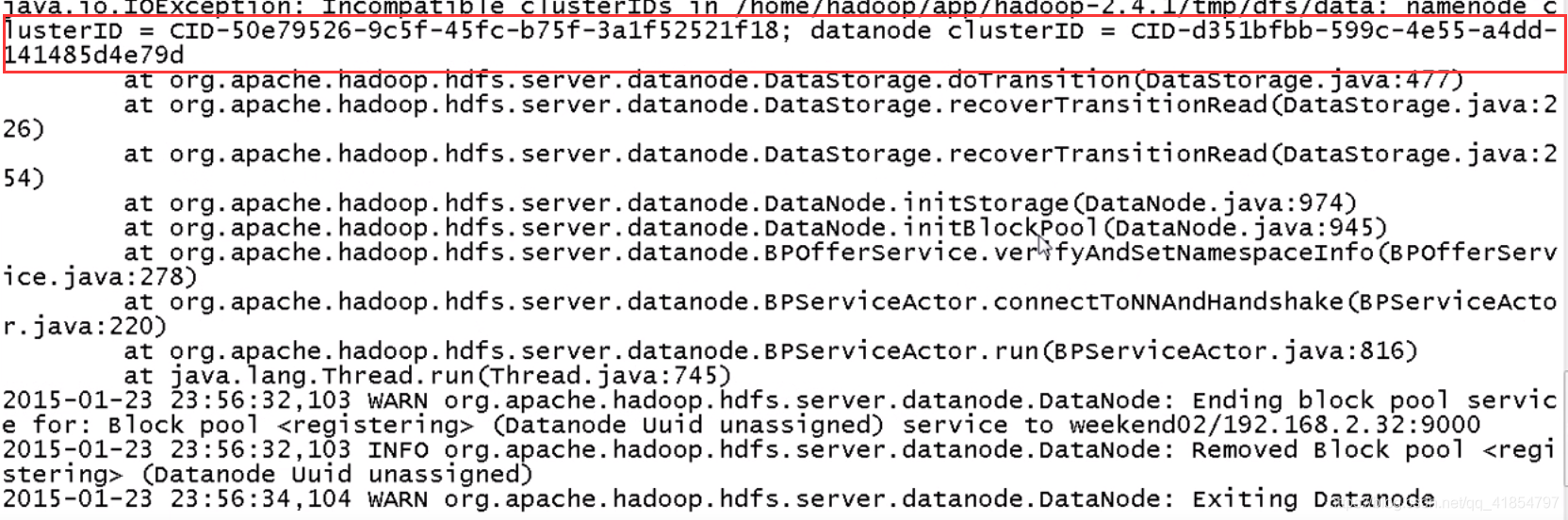

The cause turned out to be a clusterID mismatch!

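Deleting the hadoop/tmp directory on the DataNode fixes it. With default settings, this directory holds the DataNode's storage directory, including the VERSION file that records the stale clusterID. Start the DataNode again afterwards so that it registers with the NameNode and picks up the NameNode's clusterID. This also discards any block data stored on that node.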
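To confirm the mismatch before deleting anything, you can compare the clusterID lines in the NameNode's and the DataNode's `current/VERSION` files. Below is a minimal sketch, assuming both VERSION files are readable from where the script runs. The function names and paths are hypothetical:

```bash
#!/usr/bin/env bash
# Print the clusterID stored in a Hadoop storage VERSION file
# (a properties file containing a line such as clusterID=CID-...).
get_cluster_id() {
  sed -n 's/^clusterID=//p' "$1"
}

# Compare the NameNode's and a DataNode's clusterID.
# Returns 0 when they match, 1 (with a message on stderr) otherwise.
cluster_ids_match() {
  local nn_id dn_id
  nn_id=$(get_cluster_id "$1")
  dn_id=$(get_cluster_id "$2")
  if [ -n "$nn_id" ] && [ "$nn_id" = "$dn_id" ]; then
    return 0
  fi
  echo "clusterID mismatch: NameNode=$nn_id DataNode=$dn_id" >&2
  return 1
}

# Example with placeholder paths (adjust to dfs.namenode.name.dir / dfs.datanode.data.dir):
#   cluster_ids_match /path/to/name/current/VERSION <(ssh datanode-host cat /path/to/data/current/VERSION)
```

A mismatch reported here matches the situation in the screenshot, where deleting the DataNode's storage directory is the fix.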

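In short, two errors came up while setting up Hadoop HA. First, bootstrapping the standby NameNode on hadoop02 (`-bootstrapStandby`) failed because the connection to the active NameNode at hadoop01:9000 was refused. Second, dynamically adding a DataNode failed because the DataNode's clusterID did not match the NameNode's. The second problem was fixed by deleting hadoop/tmp on the DataNode, so that it picks up the NameNode's clusterID when it starts again.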
