0

import requests

import jieba.analyse

import codecs

from wordcloud import WordCloud

import matplotlib.pyplot as plt

import matplotlib.image as mpimg

import re

jieba 和 词云都要额外导入

1 先爬

def get_user_info(uid):

# 发送请求

result = requests.get('https://m.weibo.cn/api/container/getIndex?type=uid&value={}'.format(uid))

json_data = result.json()

# 获取微博信息中json内容

userinfo = {

'name': json_data['data']['userInfo']['screen_name'], # 获取用户头像

'description': json_data['data']['userInfo']['description'], # 获取用户描述

'follow_count': json_data['data']['userInfo']['follow_count'], # 获取关注数

'followers_count': json_data['data']['userInfo']['followers_count'], # 获取粉丝数

'profile_image_url': json_data['data']['userInfo']['profile_image_url'], # 获取头像

'verified_reason': json_data['data']['userInfo']['verified_reason'], # 认证信息

'containerid': json_data['data']['tabsInfo']['tabs'][1]['containerid'] # 此字段在获取博文中需要

}

# 获取性别,微博中m表示男性,f表示女性

if json_data['data']['userInfo']['gender'] == 'm':

gender = '男'

elif json_data['data']['userInfo']['gender'] == 'f':

gender = '女'

else:

gender = '未知'

userinfo['gender'] = gender

return userinfo

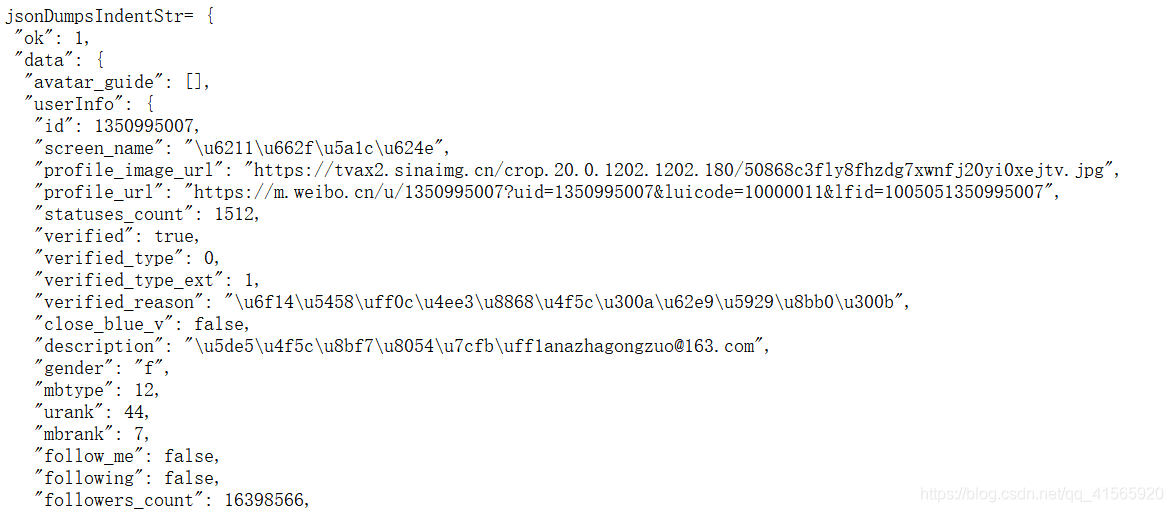

调试时可以用来查看树形的字典更加直观

#demoDictList is the value we want format to output

jsonDumpsIndentStr = json.dumps(json_data, indent=1);

print(jsonDumpsIndentStr)

2 持续爬—翻页

def get_all_post(uid,containerid):

# 从第一页开始

page = 1

count = 0

# 这个用来存放博文列表

posts = []

while True:

# 请求博文列表

result = requests.get('https://m.weibo.cn/api/container/getIndex?type=uid&value={}&containerid={}&page={}'.format(uid, containerid, page))

json_data = result.json()

# 当博文获取完毕,退出循环

if not json_data['data']['cards']:

break

if page >= 3:

break

# 循环将新的博文加入列表

for i in json_data['data']['cards']:

try:

posts.append(i['mblog']['text'])

count += 1

except KeyError:

continue

# 停顿半秒,避免被反爬虫

# sleep(0.5)

#print('正在抓取第{}页,已将捕获{}条微博'.format(page, count))

# 跳转至下一页

page += 1

# 返回所有博文

return posts

第0页和第1页一样,有的没有i[‘mblog’][‘text’],except KeyError continue

3

# 获取古力娜扎信息

#userinfo = get_user_info('1350995007')

# 信息如下

#print(userinfo)

posts = get_all_post('1350995007', '1076031350995007')

# 查看博文条数

# print(len(posts))

# 显示前3个

# print(posts[:3], sep='-----')

with codecs.open('weibo1.txt', 'w', encoding='utf-8') as f:

f.write("\n".join(posts))

4 处理爬下来的文本

def clean_html(raw_html):

pattern = re.compile(r'<.*?>|转发微博|//:|Repost|,|?|。|、|分享图片|回复@.*?:|//@.*')

text = re.sub(pattern, '', raw_html)

return text

txt = []

with codecs.open("weibo1.txt", 'r', encoding="utf-8") as f:

for text in f.readlines():

text = clean_html(text)

txt.append(text)

# print(txt)

with codecs.open('after_filter_words.txt', 'w', encoding='utf-8')as f:

f.write(''.join(txt))

txt这个list变量在两个文本中中介

写入list并且换行

5

data = []

with codecs.open("after_filter_words.txt", 'r', encoding="utf-8") as f:

for text in f.readlines():

print(text)

data.extend(jieba.analyse.extract_tags(text, topK=20))

data = " ".join(data)

print('------------------------------------')

print(data)

# mask_img = imageio.imread('./52f90c9a5131c.jpg', flatten=True)

mask_img = mpimg.imread('./52f90c9a5131c.jpg')

plt.imshow(mask_img)

wordcloud = WordCloud(

font_path='msyh.ttc',

background_color='white',

mask=mask_img

).generate(data)

plt.imshow(wordcloud, interpolation="bilinear")

plt.show()

6 过程中的错误

- 生成词云时:ValueError: We need at least 1 word to plot a word cloud, got 0.生成的after_filter_word格式有问题,改成UTF-8编码没有效果,后来随便复制一句话进去成功,注意文本文档的编码方式

- 导入词云背景图:image is deprecated! toimage is deprecated in SciPy 1.0.0, and will be removed in 1.2.0. 解决:import matplotlib.image as mpimg .mpimg.imread()来导

2020

2020

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?