Install MySQL

For installing MySQL, see https://blog.youkuaiyun.com/qq_38821502/article/details/90053717

Install Hive

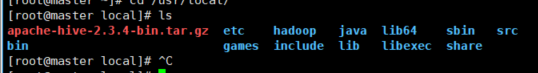

Switch to the /usr/local/ directory:

cd /usr/local/

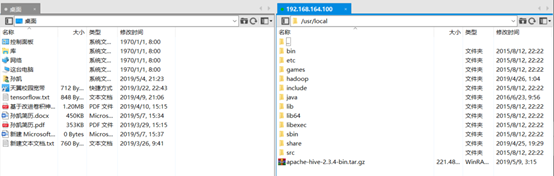

Upload the Hive installation package (apache-hive-2.3.4-bin.tar.gz) to this directory.

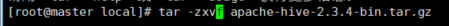

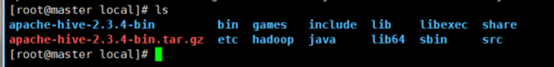

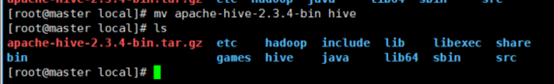

Extract Hive:

tar -zxvf apache-hive-2.3.4-bin.tar.gz

Rename the directory:

mv apache-hive-2.3.4-bin hive

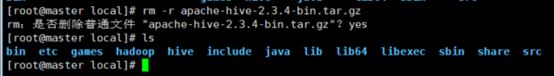

Delete the Hive tarball:

rm apache-hive-2.3.4-bin.tar.gz
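The upload, extract, rename, and delete steps above can be collected into one small shell function. This is a sketch, assuming the tarball is named apache-hive-2.3.4-bin.tar.gz and unpacks to a top-level apache-hive-2.3.4-bin/ directory, as in the commands above; the function name `unpack_hive` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract a Hive tarball into a prefix directory,
# rename the versioned directory to "hive", then delete the tarball.
unpack_hive() {
  local tarball="${1:-apache-hive-2.3.4-bin.tar.gz}"
  local prefix="${2:-/usr/local}"
  local name
  # Assumption: the archive's top-level directory equals the file name minus .tar.gz
  name=$(basename "$tarball" .tar.gz)
  # Refuse to overwrite (or move into) an existing hive directory
  if [ -e "$prefix/hive" ]; then
    echo "refusing to overwrite $prefix/hive" >&2
    return 1
  fi
  tar -zxf "$tarball" -C "$prefix" || return 1
  mv "$prefix/$name" "$prefix/hive" || return 1
  rm -f "$tarball"
}
```

For example, `unpack_hive /usr/local/apache-hive-2.3.4-bin.tar.gz /usr/local` leaves Hive in /usr/local/hive.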

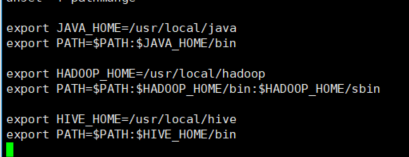

Configure the environment variables (/etc/profile is a file, so there is no trailing slash):

vi /etc/profile

Make the environment variables take effect:

source /etc/profile
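The exact lines added to /etc/profile appear only in the screenshots. A common setup, assumed here rather than taken from the article, exports HIVE_HOME and puts $HIVE_HOME/bin on the PATH. The hypothetical function below appends those two lines only if HIVE_HOME is not exported yet, so running it twice does not duplicate them.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append HIVE_HOME and PATH exports to a profile file
# (default /etc/profile). Assumption: Hive lives in /usr/local/hive.
add_hive_profile() {
  local profile="${1:-/etc/profile}"
  local hive_home="${2:-/usr/local/hive}"
  # Skip if a HIVE_HOME export is already present (keeps the edit idempotent)
  if ! grep -q '^export HIVE_HOME=' "$profile" 2>/dev/null; then
    {
      echo "export HIVE_HOME=${hive_home}"
      # Single quotes keep $PATH and $HIVE_HOME literal in the file
      echo 'export PATH=$PATH:$HIVE_HOME/bin'
    } >> "$profile"
  fi
}
```

Run it as root, for example `add_hive_profile /etc/profile /usr/local/hive`, then `source /etc/profile`.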

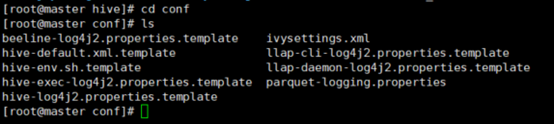

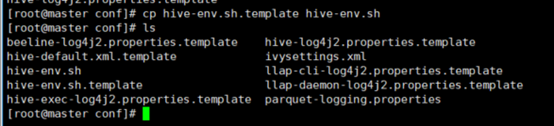

Switch to Hive's conf directory:

cd /usr/local/hive/conf

Copy hive-env.sh.template to hive-env.sh:

cp hive-env.sh.template hive-env.sh

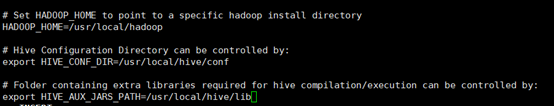

Edit hive-env.sh:

vi hive-env.sh

Set the HADOOP_HOME, HIVE_CONF_DIR, and HIVE_AUX_JARS_PATH paths.
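The values chosen for these three variables are only visible in the screenshots. As a sketch, assuming Hadoop is installed in /usr/local/hadoop (an assumption; adjust it) and Hive in /usr/local/hive as above, the lines could be appended with a hypothetical helper like this:

```shell
#!/usr/bin/env bash
# Hypothetical helper: append the three hive-env.sh variables to a file.
# Assumed defaults: Hadoop in /usr/local/hadoop, Hive in /usr/local/hive.
write_hive_env() {
  local target="${1:-/usr/local/hive/conf/hive-env.sh}"
  local hadoop_home="${2:-/usr/local/hadoop}"
  local hive_home="${3:-/usr/local/hive}"
  # Unquoted EOF so the shell expands the paths before writing them
  cat >> "$target" <<EOF
export HADOOP_HOME=${hadoop_home}
export HIVE_CONF_DIR=${hive_home}/conf
export HIVE_AUX_JARS_PATH=${hive_home}/lib
EOF
}
```

For example, `write_hive_env /usr/local/hive/conf/hive-env.sh /usr/local/hadoop /usr/local/hive`.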

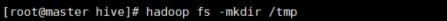

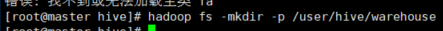

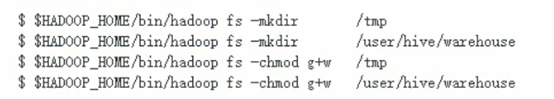

Create two HDFS directories:

hadoop fs -mkdir /tmp

hadoop fs -mkdir -p /user/hive/warehouse
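The Apache Hive Getting Started guide additionally makes both directories group-writable with `chmod g+w`. A sketch that runs all four commands is below; `-p` avoids an error if /tmp already exists, and HADOOP_BIN is a hypothetical override so the commands can be printed instead of executed.

```shell
#!/usr/bin/env bash
# Sketch: create the HDFS directories Hive uses and make them group-writable.
# HADOOP_BIN is a hypothetical override (e.g. HADOOP_BIN=echo for a dry run).
create_hive_hdfs_dirs() {
  local hadoop="${HADOOP_BIN:-hadoop}"
  "$hadoop" fs -mkdir -p /tmp || return 1
  "$hadoop" fs -mkdir -p /user/hive/warehouse || return 1
  "$hadoop" fs -chmod g+w /tmp || return 1
  "$hadoop" fs -chmod g+w /user/hive/warehouse || return 1
}
```

With HDFS running, call `create_hive_hdfs_dirs`; `HADOOP_BIN=echo create_hive_hdfs_dirs` only prints the arguments.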


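Create hive-site.xml from the template (the settings below are critical; a mistake in any of them can cause all kinds of errors):

cp hive-default.xml.template hive-site.xml

Then locate each of the following properties in hive-site.xml and set its value as shown.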

Replace master, root, and tsk007 with your own MySQL host, user name, and password. Inside XML, each `&` in the JDBC URL must be written as `&amp;`.

<property>
  <name>javax.jdo.option.ConnectionURL</name>
  <value>jdbc:mysql://master:3306/metastore?createDatabaseIfNotExist=true&amp;useSSL=false&amp;useUnicode=true&amp;characterEncoding=utf-8</value>
  <description>JDBC connect string for a JDBC metastore</description>
</property>
<property>
  <name>javax.jdo.option.ConnectionDriverName</name>
  <value>com.mysql.jdbc.Driver</value>
  <description>Driver class name for a JDBC metastore</description>
</property>
<property>
  <name>javax.jdo.option.ConnectionPassword</name>
  <value>tsk007</value>
  <description>password to use against metastore database</description>
</property>
<property>
  <name>javax.jdo.option.ConnectionUserName</name>
  <value>root</value>
  <description>Username to use against metastore database</description>
</property>
<property>
  <name>hive.exec.local.scratchdir</name>
  <value>/usr/local/hive/tmp/${user.name}</value>
  <description>Local scratch space for Hive jobs</description>
</property>
<property>
  <name>hive.downloaded.resources.dir</name>
  <value>/usr/local/hive/tmp/${hive.session.id}_resources</value>
  <description>Temporary local directory for added resources in the remote file system.</description>
</property>
<property>
  <name>hive.server2.logging.operation.log.location</name>
  <value>/usr/local/hive/tmp/root/operation_logs</value>
  <description>Top level directory where operation logs are stored if logging functionality is enabled</description>
</property>
<property>
  <name>hive.querylog.location</name>
  <value>/usr/local/hive/tmp/root</value>
  <description>Location of Hive run time structured log file</description>
</property>
<property>
  <name>datanucleus.schema.autoCreateAll</name>
  <value>true</value>
  <description>Auto creates necessary schema on a startup if one doesn't exist. Set this to false, after creating it once. To enable auto create also set hive.metastore.schema.verification=false. Auto creation is not recommended for production use cases, run schematool command instead.</description>
</property>
<property>
  <name>hive.metastore.schema.verification</name>
  <value>false</value>
  <description>
    Enforce metastore schema version consistency.
    True: Verify that version information stored in metastore is compatible with the one from Hive jars. Also disable automatic
    schema migration attempt. Users are required to manually migrate schema after Hive upgrade which ensures
    proper metastore schema migration. (Default)
    False: Warn if the version information stored in metastore doesn't match with the one in Hive jars.
  </description>
</property>
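Because hive-site.xml is XML, every `&` separating JDBC URL parameters must be written as `&amp;`; a bare `&` makes the file fail to parse when Hive loads its configuration. The hypothetical helper below escapes a raw URL before it is pasted into the `<value>` element.

```shell
#!/usr/bin/env bash
# Hypothetical helper: escape the XML special characters &, < and > in a string.
# & is replaced first so the &lt; / &gt; entities produced later are not escaped again.
xml_escape() {
  printf '%s\n' "$1" | sed -e 's/&/\&amp;/g' -e 's/</\&lt;/g' -e 's/>/\&gt;/g'
}
```

Pass the raw, unescaped URL; running it on an already escaped value would turn `&amp;` into `&amp;amp;`.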

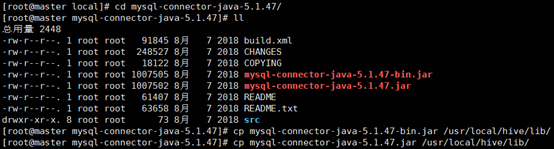

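- Upload mysql-connector-java-5.1.47.tar.gz, then extract it:

tar -zxvf mysql-connector-java-5.1.47.tar.gz

- Switch to the extracted directory and copy the jar into the lib directory of the Hive installation:

cd mysql-connector-java-5.1.47

cp mysql-connector-java-5.1.47-bin.jar /usr/local/hive/lib/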
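The extract-and-copy steps above can be sketched as one function. It assumes, as the commands above do, that the archive unpacks to mysql-connector-java-5.1.47/ containing mysql-connector-java-5.1.47-bin.jar; the function name and its temporary working directory are hypothetical choices.

```shell
#!/usr/bin/env bash
# Hypothetical helper: unpack MySQL Connector/J in a temp dir and copy its jar
# into Hive's lib/ directory. Assumed layout: mysql-connector-java-<ver>/...-bin.jar
install_mysql_connector() {
  local tarball="${1:-mysql-connector-java-5.1.47.tar.gz}"
  local hive_lib="${2:-/usr/local/hive/lib}"
  local version="${3:-5.1.47}"
  local workdir
  workdir=$(mktemp -d) || return 1
  tar -zxf "$tarball" -C "$workdir" || { rm -rf "$workdir"; return 1; }
  cp "$workdir/mysql-connector-java-${version}/mysql-connector-java-${version}-bin.jar" "$hive_lib/" \
    || { rm -rf "$workdir"; return 1; }
  # Remove the extracted files; only the copied jar is kept
  rm -rf "$workdir"
}
```

For example, `install_mysql_connector mysql-connector-java-5.1.47.tar.gz /usr/local/hive/lib`.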

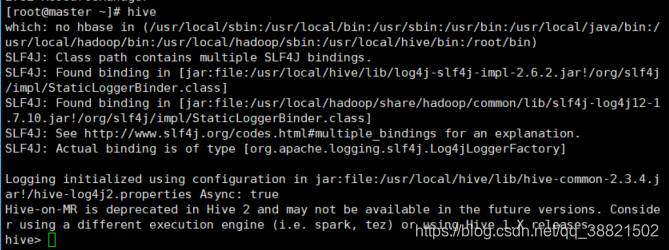

- Initialize the metastore database: schematool -dbType mysql -initSchema

- Start Hive: hive
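After initialization, `schematool -dbType mysql -info` prints the metastore connection details and schema version, a quick way to confirm the schema was created in MySQL before starting Hive. The sketch below chains the two schematool calls; SCHEMATOOL_BIN is a hypothetical override for dry runs.

```shell
#!/usr/bin/env bash
# Sketch: initialize the metastore schema in MySQL, then print its version info.
# SCHEMATOOL_BIN is a hypothetical override (e.g. SCHEMATOOL_BIN=echo for a dry run).
init_metastore() {
  local schematool="${SCHEMATOOL_BIN:-schematool}"
  "$schematool" -dbType mysql -initSchema || return 1
  "$schematool" -dbType mysql -info
}
```

Run `-initSchema` only once per metastore database; re-running it against an existing schema fails because the tables already exist.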

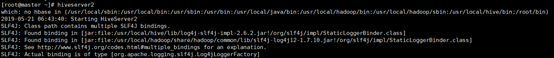

HiveServer2

In Hive's hive-site.xml, add or modify the following property, replacing 192.168.164.100 with the IP address of your HiveServer2 host:

<property>
  <name>hive.server2.thrift.bind.host</name>
  <value>192.168.164.100</value>
  <description>Bind host on which to run the HiveServer2 Thrift service.</description>
</property>

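Add the following two properties to Hadoop's core-site.xml so that root may act as a proxy user for HiveServer2 connections, then restart Hadoop:

- hadoop.proxyuser.root.hosts
- hadoop.proxyuser.root.groups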
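The values of these two properties appear only in the screenshots. A frequently used permissive setting, assumed here and suitable only for test clusters, is `*` for both, which lets root impersonate users from any host and any group. The hypothetical helper prints the corresponding core-site.xml snippet for a given proxy user:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print core-site.xml proxy-user properties for one user.
# Assumption: "*" (any host, any group), which is only appropriate for test setups.
proxyuser_xml() {
  local user="${1:-root}"
  cat <<EOF
<property>
  <name>hadoop.proxyuser.${user}.hosts</name>
  <value>*</value>
</property>
<property>
  <name>hadoop.proxyuser.${user}.groups</name>
  <value>*</value>
</property>
EOF
}
```

Paste the output of `proxyuser_xml root` inside the `<configuration>` element of core-site.xml.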

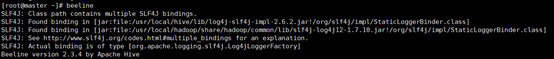

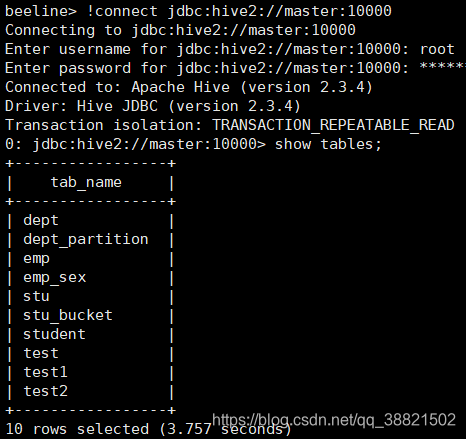

Start HiveServer2:

hiveserver2

Then, in another terminal, start the Beeline client:

beeline
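To connect, Beeline needs a JDBC URL of the form jdbc:hive2://host:port/database. HiveServer2 listens on port 10000 by default (hive.server2.thrift.port); the host below is the bind address configured above, while the `default` database and the helper name are assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the HiveServer2 JDBC URL that "beeline -u" expects.
# Defaults: bind host from hive-site.xml above, port 10000, database "default".
hive_jdbc_url() {
  local host="${1:-192.168.164.100}"
  local port="${2:-10000}"
  local db="${3:-default}"
  printf 'jdbc:hive2://%s:%s/%s\n' "$host" "$port" "$db"
}
```

For example, start HiveServer2 in the background with `nohup hiveserver2 > hiveserver2.log 2>&1 &` and, once it is listening, connect with `beeline -u "$(hive_jdbc_url)" -n root`.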

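This article walks through installing and configuring Hive on Linux: uploading and extracting the installation package, configuring environment variables, creating the HDFS directories, editing hive-site.xml to use MySQL as the metastore, and configuring HiveServer2 and the Beeline client.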
