1 Non-linear Activations

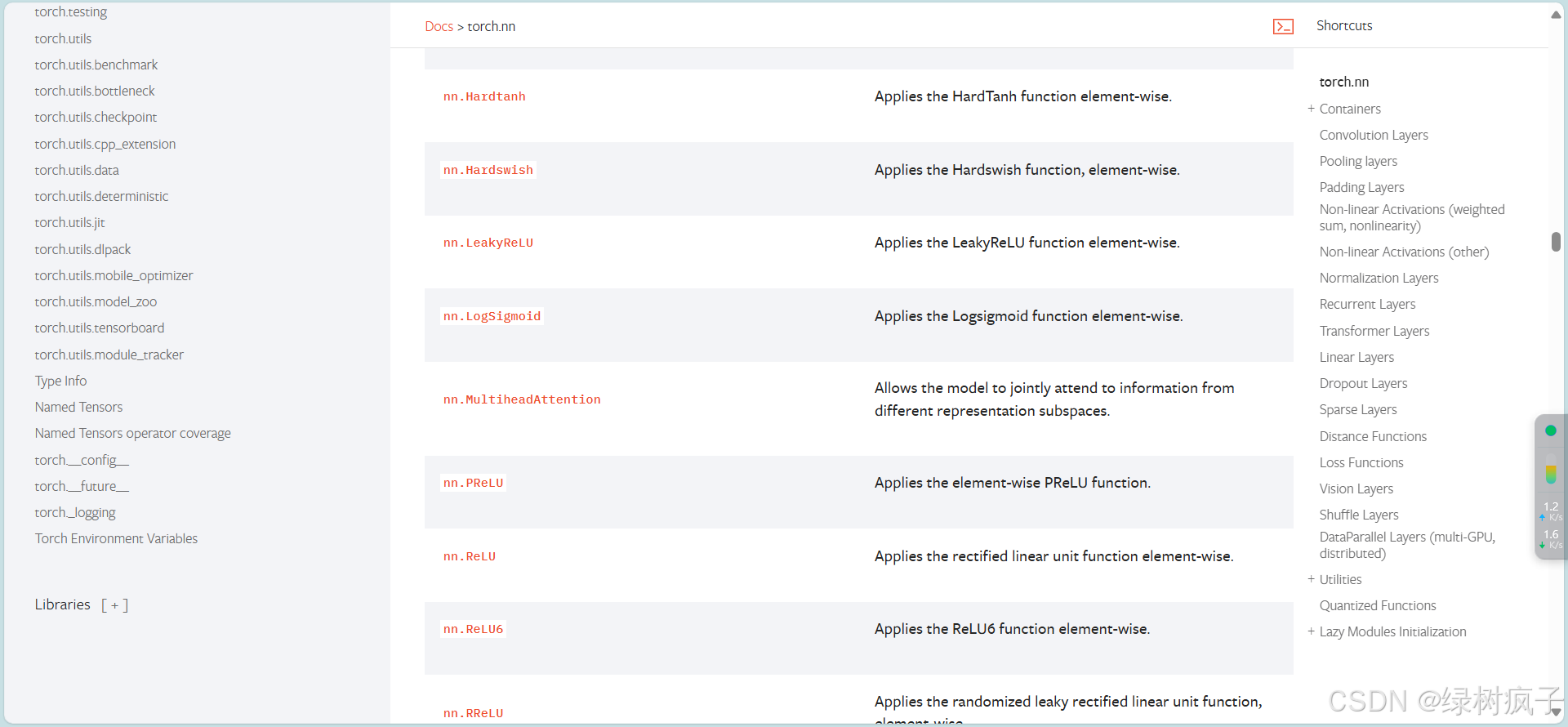

1.1 Some common non-linear activations:

ReLU (Rectified Linear Unit), the rectified linear function

Sigmoid
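For reference, both activations are applied element-wise with the standard definitions below. For example, ReLU maps -0.5 to 0 and leaves 3 unchanged, while σ(0) = 0.5.

```latex
\mathrm{ReLU}(x) = \max(0, x), \qquad
\mathrm{Sigmoid}(x) = \sigma(x) = \frac{1}{1 + e^{-x}} \in (0, 1)
```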

1.2 Code walkthrough:

1.2.1 ReLU

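```python
import torch
from torch import nn
from torch.nn import ReLU

input = torch.tensor([[1, -0.5],
                      [-1, 3]])

# Reshape to (N, C, H, W) = (1, 1, 2, 2) to mimic a batch of one single-channel
# 2x2 image; -1 lets PyTorch infer the batch size N
input = torch.reshape(input, (-1, 1, 2, 2))
print(input.shape)  # torch.Size([1, 1, 2, 2])

class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.relu1 = ReLU()

    # forward() is a minimal sketch (assumed): apply the ReLU layer element-wise
    def forward(self, input):
        output = self.relu1(input)
        return output

tudui = Tudui()
output = tudui(input)
print(output)
```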
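A minimal self-contained sketch comparing the module form (`nn.ReLU`, plus `nn.Sigmoid` from the list in 1.1) with PyTorch's functional equivalents `torch.nn.functional.relu` and `torch.sigmoid`, on the same 2×2 input. It also illustrates the `inplace` flag of `nn.ReLU`; the variable names are illustrative only.

```python
import torch
from torch import nn
import torch.nn.functional as F

# Same 2x2 input as above, reshaped to (N, C, H, W) = (1, 1, 2, 2)
x = torch.tensor([[1.0, -0.5],
                  [-1.0, 3.0]]).reshape(-1, 1, 2, 2)

# Module form: suitable as a named layer inside an nn.Module
relu_module = nn.ReLU()
sigmoid_module = nn.Sigmoid()

relu_out = relu_module(x)        # max(0, x): negatives become 0
sigmoid_out = sigmoid_module(x)  # 1 / (1 + exp(-x)): every value lands in (0, 1)

# Functional form computes the same element-wise results
assert torch.equal(relu_out, F.relu(x))
assert torch.allclose(sigmoid_out, torch.sigmoid(x))

# inplace=True overwrites its input tensor instead of allocating a new one,
# so we apply it to a copy to keep x intact
y = x.clone()
nn.ReLU(inplace=True)(y)
assert torch.equal(y, relu_out)  # y itself now holds the ReLU result
assert x.min() < 0               # the original x still has its negative entries

print(relu_out.reshape(2, 2))
print(sigmoid_out.reshape(2, 2))
```

The module form is convenient when the activation should appear as a layer in a model definition; the functional form is handy directly inside `forward()`. `inplace=True` saves memory but destroys the input values, so it is best avoided when that tensor is still needed later.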
