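This program uses two main sites: East Money (东方财富网) and Baidu Gupiao (百度股市通). Following a requests-bs4-re pipeline, it crawls the information for every stock listed on East Money and saves it to a newly created TXT file on the local D: drive.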

1. Fetch a URL and get its HTML page:

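```python
import requests

def getHTMLText(url, code="utf-8"):
    try:
        r = requests.get(url, timeout=30)  # timeout so a stalled connection cannot hang the crawler
        r.raise_for_status()               # raise an HTTPError for 4xx/5xx responses
        r.encoding = code                  # use the caller-supplied encoding directly
        return r.text
    except requests.RequestException:
        return ""                          # an empty string signals that the request failed
```

The encoding defaults to "utf-8". Passing the encoding explicitly like this crawls faster than the following version, which lets requests guess the encoding through `r.apparent_encoding`:

```python
import requests

def getHTMLText(url):
    try:
        r = requests.get(url, timeout=30)
        r.raise_for_status()
        r.encoding = r.apparent_encoding   # detected from the response body, which costs extra time
        return r.text
    except requests.RequestException:
        return ""
```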
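Why the encoding argument matters can be seen without any network access. The sketch below encodes a sample Chinese string as GB2312 and decodes it both with the right codec and as UTF-8, which is roughly what happens when `r.encoding` does not match the page (and why `getStockList` later passes "GB2312" for the East Money page). `decode_as` is a hypothetical helper and the sample string is only an illustration.

```python
def decode_as(raw: bytes, encoding: str) -> str:
    """Decode raw page bytes the way r.text does once r.encoding is set."""
    # errors="replace" substitutes U+FFFD for undecodable bytes instead of raising
    return raw.decode(encoding, errors="replace")


# Hypothetical page fragment stored as GB2312 bytes
raw = "东方财富网".encode("gb2312")

print(decode_as(raw, "gb2312"))  # the original text
print(decode_as(raw, "utf-8"))   # garbled: the wrong codec was chosen
```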

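2. On East Money, extract the trailing code segment of each Shanghai and Shenzhen stock link; these codes are later appended to the Baidu stock URL to look up each stock's information: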

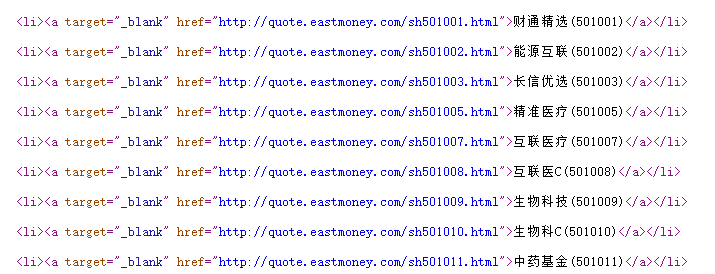

Viewing the source code of the East Money page (part of it is shown in the figure):

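The regular expression in the following code matches "sh" or "sz" followed by six digits and appends each match to the list `lst`: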
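The pattern can be tried on its own before looking at the full function. The href values below are hypothetical examples of the link shape described above; `[s][hz]\d{6}` is equivalent to `s[hz]\d{6}`, and a link without a code simply yields an empty list.

```python
import re

# Hypothetical href values shaped like links on the East Money stock list page
hrefs = [
    "http://quote.eastmoney.com/sh600000.html",
    "http://quote.eastmoney.com/sz000001.html",
    "http://quote.eastmoney.com/center/",   # no stock code: findall returns []
]

codes = []
for href in hrefs:
    found = re.findall(r"[s][hz]\d{6}", href)
    if found:  # same effect as indexing [0] and catching IndexError
        codes.append(found[0])

print(codes)  # ['sh600000', 'sz000001']
```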

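```python
import re
from bs4 import BeautifulSoup

# Relies on getHTMLText() from step 1
def getStockList(lst, stockURL):
    html = getHTMLText(stockURL, "GB2312")  # the East Money list page is decoded as GB2312
    soup = BeautifulSoup(html, 'html.parser')
    for i in soup.find_all('a'):
        try:
            href = i.attrs['href']
            # keep the first "sh"/"sz" + 6-digit code found in the link
            lst.append(re.findall(r"[s][hz]\d{6}", href)[0])
        except (KeyError, IndexError):
            # KeyError: <a> without href; IndexError: href contains no stock code
            continue
```

3. Append the extracted codes to the Baidu stock URL to query each stock's full information: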

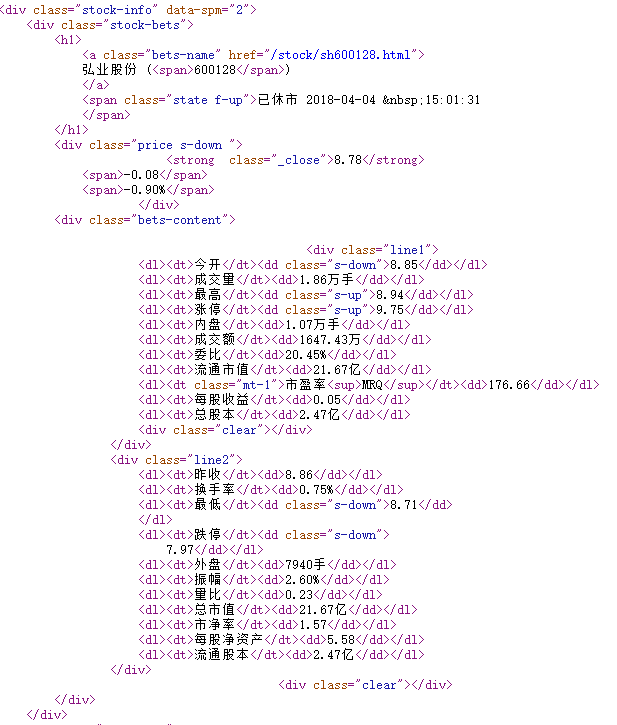

First, look up one stock on Baidu Gupiao and view its page source, shown in the figure:

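The stock's basic information clearly sits in the `<dt>` and `<dd>` tags, so the contents of the two tags are stored in a dictionary and saved locally. The code also shows a progress bar to improve the user experience. (Do not run this program in IDLE: there the progress bar does not refresh in place on the same line; instead, each time a stock's information is saved, the output jumps to a new line.)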
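The `<dt>`/`<dd>` pairing and the progress line can be sketched against a small hypothetical snippet. The markup below only mimics the structure described above (a `stock-bets` div with a `bets-name` element and `<dt>`/`<dd>` pairs); the real Baidu page may differ, and `parse_stock`, `progress_line`, and all sample values are illustrative.

```python
from bs4 import BeautifulSoup

# Hypothetical markup modeled on the structure described above
SAMPLE = """
<div class="stock-bets">
  <a class="bets-name">DEMO (sh000000)</a>
  <dl><dt>open</dt><dd>1.00</dd></dl>
  <dl><dt>close</dt><dd>2.00</dd></dl>
</div>
"""

def parse_stock(html):
    soup = BeautifulSoup(html, "html.parser")
    stock_info = soup.find("div", attrs={"class": "stock-bets"})
    name = stock_info.find_all(attrs={"class": "bets-name"})[0]
    info = {"股票名称": name.text.split()[0]}  # 股票名称 = "stock name"
    # pair each <dt> label with the <dd> value at the same position
    for key, val in zip(stock_info.find_all("dt"), stock_info.find_all("dd")):
        info[key.text] = val.text
    return info

def progress_line(count, total):
    # "\r" returns the cursor to the line start so a terminal overwrites the
    # previous percentage; as noted above, IDLE does not redraw it in place
    return "\r当前进度: {:.2f}%".format(count * 100 / total)  # 当前进度 = "progress"

print(parse_stock(SAMPLE))
for i in range(1, 4):
    print(progress_line(i, 3), end="")
print()
```

Using `zip` rather than indexing `valueList[i]` means a page with fewer `<dd>` than `<dt>` elements is truncated instead of raising an `IndexError`.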

Then write the code that crawls the stock information:

```python
from bs4 import BeautifulSoup

# Relies on getHTMLText() from step 1
def getStockInfo(lst, stockURL, fpath):
    count = 0
    for stock in lst:
        url = stockURL + stock + ".html"
        html = getHTMLText(url)
        try:
            if html == "":
                continue  # request failed; the finally block still advances the progress bar
            infoDict = {}
            soup = BeautifulSoup(html, 'html.parser')
            stockInfo = soup.find('div', attrs={'class': 'stock-bets'})
            name = stockInfo.find_all(attrs={'class': 'bets-name'})[0]
            infoDict.update({'股票名称': name.text.split()[0]})  # 股票名称 = "stock name"
            keyList = stockInfo.find_all('dt')    # field labels
            valueList = stockInfo.find_all('dd')  # field values
            for key, val in zip(keyList, valueList):
                infoDict[key.text] = val.text
            with open(fpath, 'a', encoding='utf-8') as f:
                f.write(str(infoDict) + '\n')     # one dict literal per line
        except (AttributeError, IndexError):
            # AttributeError: no stock-bets div (find returned None); IndexError: no name
            continue
        finally:
            count += 1
            # "\r" redraws the progress on the same line (当前进度 = "progress")
            print("\r当前进度: {:.2f}%".format(count * 100 / len(lst)), end="")
```

4. Finally, here is the complete code for this experiment:

import requests

from bs4 import BeautifulSoup

import traceback

import re

def getHTMLText(url, code="utf-8"):

try:

r = requests.get(url)

r.raise_for_status()

r.encoding = code

return r.text

except:

return ""

def getStockList(lst, stockURL):

html = getHTMLText(stockURL, "GB2312")

soup = BeautifulSoup(html, 'html.parser')

a = soup.find_all('a')

for i in a:

try:

href = i.attrs['href']

lst.append(re.findall(r"[s][hz]\d{6}", href)[0])

except:

continue

def getStockInfo(lst, stockURL, fpath):

count = 0

for stock in lst:

url = stockURL + stock + ".html"

html = getHTMLText(url)

try:

if html=="":

continue

infoDict = {}

soup = BeautifulSoup(html, 'html.parser')

stockInfo = soup.find('div',attrs={'class':'stock-bets'})

name = stockInfo.find_all(attrs={'class':'bets-name'})[0]

infoDict.update({'股票名称': name.text.split()[0]})

keyList = stockInfo.find_all('dt')

valueList = stockInfo.find_all('dd')

for i in range(len(keyList)):

key = keyList[i].text

val = valueList[i].text

infoDict[key] = val

with open(fpath, 'a', encoding='utf-8') as f:

f.write( str(infoDict) + '\n' )

count = count + 1

print("\r当前进度: {:.2f}%".format(count*100/len(lst)),end="")

except:

count = count + 1

print("\r当前进度: {:.2f}%".format(count*100/len(lst)),end="")

continue

def main():

stock_list_url = 'http://quote.eastmoney.com/stocklist.html'

stock_info_url = 'https://gupiao.baidu.com/stock/'

output_file = 'D:/BaiduStockInfo.txt'

slist=[]

getStockList(slist, stock_list_url)

getStockInfo(slist, stock_info_url, output_file)

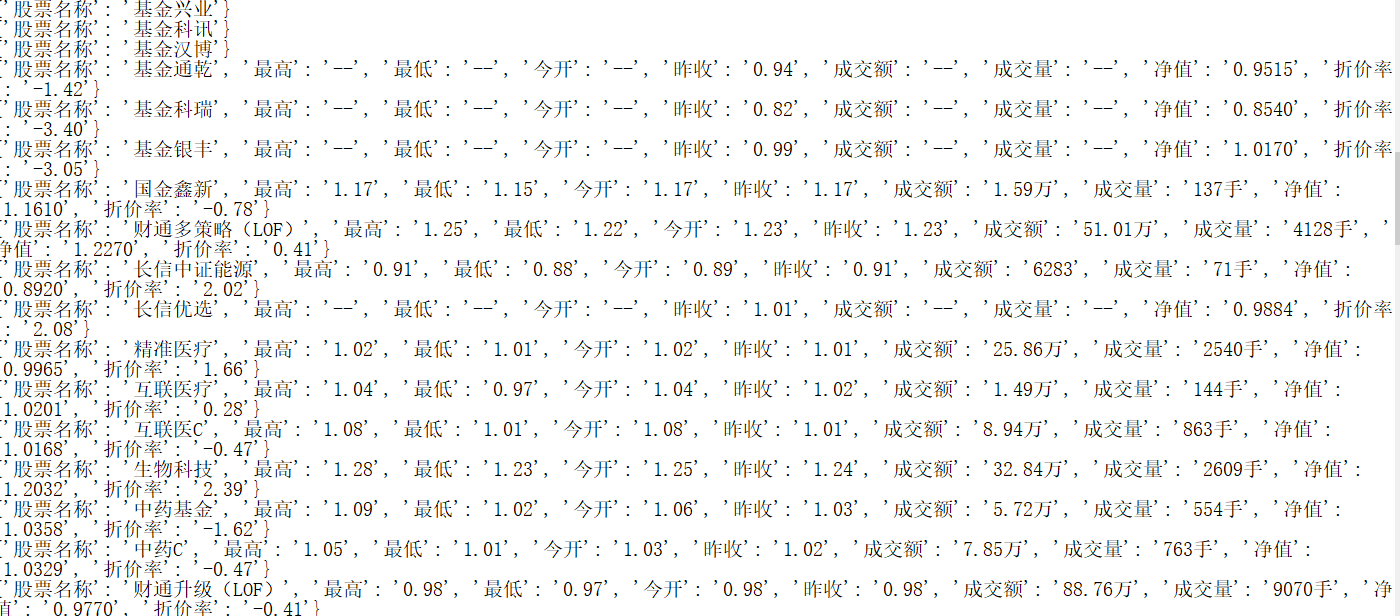

main()以下是程序运行的截图:

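Because some stocks listed on East Money no longer exist, their Baidu Gupiao pages are empty, so the records scraped for them contain only the stock name and no detailed information.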
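Because each record is written with `str(infoDict)`, every line of the output file is a Python dict literal and can be read back with `ast.literal_eval`. A minimal sketch, assuming the file format produced by `getStockInfo`, that separates the name-only records (the missing stocks) from complete ones; `load_records` and `split_name_only` are hypothetical helpers, and the demo writes hypothetical records to a temporary file instead of `D:/BaiduStockInfo.txt`.

```python
import ast
import os
import tempfile

def load_records(fpath):
    """Parse one dict literal per line, as written by f.write(str(infoDict) + '\n')."""
    records = []
    with open(fpath, encoding="utf-8") as f:
        for line in f:
            line = line.strip()
            if line:
                records.append(ast.literal_eval(line))  # evaluates literals only, not arbitrary code
    return records

def split_name_only(records):
    """Separate records holding only '股票名称' (stock name) from complete ones."""
    name_only = [r for r in records if set(r) == {"股票名称"}]
    full = [r for r in records if set(r) != {"股票名称"}]
    return name_only, full

# Demo with hypothetical records in a temporary file
with tempfile.NamedTemporaryFile("w", encoding="utf-8", suffix=".txt", delete=False) as tmp:
    tmp.write(str({"股票名称": "DEMO1"}) + "\n")
    tmp.write(str({"股票名称": "DEMO2", "open": "1.00"}) + "\n")
    path = tmp.name

name_only, full = split_name_only(load_records(path))
os.remove(path)
print(len(name_only), len(full))  # 1 1
```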

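This article presented a Python crawler that scrapes stock URLs from East Money, extracts Shanghai and Shenzhen stock codes with a regular expression, and combines them with Baidu Gupiao to query each stock's full information. The program uses the requests, bs4, and re libraries, saves the scraped data to a local TXT file, and shows a progress bar to improve the user experience. Because information for some stocks is missing, the actual results may contain only the stock name.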

