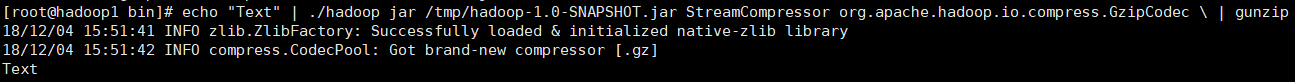

1. Compressing data read from standard input and writing it to standard output

Run StreamCompressor with GzipCodec as the codec to compress the string "Text", then pipe the result to gunzip, which reads it from standard input and decompresses it.

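```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.io.IOUtils;
import org.apache.hadoop.io.compress.CompressionCodec;
import org.apache.hadoop.io.compress.CompressionOutputStream;
import org.apache.hadoop.util.ReflectionUtils;

public class StreamCompressor {

  public static void main(String[] args) throws Exception {
    // Fully qualified codec class, e.g. org.apache.hadoop.io.compress.GzipCodec
    String codecClassname = args[0];
    Class<?> codecClass = Class.forName(codecClassname);
    Configuration conf = new Configuration();
    // ReflectionUtils also injects the Configuration into the codec
    CompressionCodec codec = (CompressionCodec)
        ReflectionUtils.newInstance(codecClass, conf);

    // Wrap stdout in a compressing stream and copy stdin into it
    CompressionOutputStream out = codec.createOutputStream(System.out);
    IOUtils.copyBytes(System.in, out, 4096, false);
    // finish() writes the remaining compressed data without closing System.out
    out.finish();
  }
}
```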
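GzipCodec produces standard gzip data, which is why gunzip can decompress StreamCompressor's output. The round trip can be sketched with only the JDK's `java.util.zip` classes; this is an illustration under that assumption and does not use Hadoop's codec API. The class name `GzipRoundTrip` is hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.zip.GZIPInputStream;
import java.util.zip.GZIPOutputStream;

public class GzipRoundTrip {

  // Compress into gzip format. finish() writes the gzip trailer; StreamCompressor
  // stops there to keep System.out open. Closing here is harmless because
  // ByteArrayOutputStream.close() is a no-op.
  static byte[] compress(byte[] data) {
    ByteArrayOutputStream sink = new ByteArrayOutputStream();
    try (GZIPOutputStream gz = new GZIPOutputStream(sink)) {
      gz.write(data);
      gz.finish();
    } catch (IOException e) {
      throw new UncheckedIOException(e);
    }
    return sink.toByteArray();
  }

  // Decompress gzip data, which is what gunzip does with the piped bytes.
  static byte[] decompress(byte[] gzipped) {
    try (InputStream in = new GZIPInputStream(new ByteArrayInputStream(gzipped))) {
      return in.readAllBytes(); // Java 9+
    } catch (IOException e) {
      throw new UncheckedIOException(e);
    }
  }

  public static void main(String[] args) {
    byte[] gz = compress("Text\n".getBytes(StandardCharsets.UTF_8));
    // Every gzip stream starts with the magic bytes 0x1f 0x8b
    System.out.printf("magic: %02x %02x%n", gz[0] & 0xff, gz[1] & 0xff);
    System.out.print(new String(decompress(gz), StandardCharsets.UTF_8));
  }
}
```

Because the format is plain gzip, bytes written this way and bytes written by GzipCodec can both be read by gunzip.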

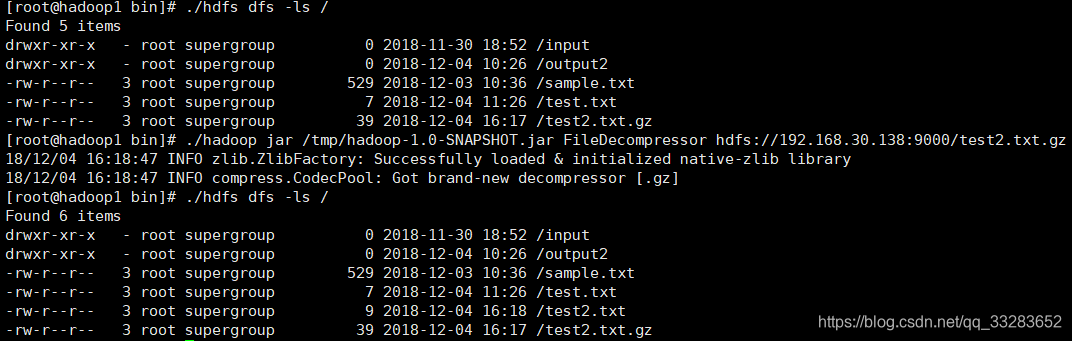

2. Decompressing a file with a codec chosen from its file extension

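```java
import java.io.InputStream;
import java.io.OutputStream;
import java.net.URI;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;
import org.apache.hadoop.io.compress.CompressionCodec;
import org.apache.hadoop.io.compress.CompressionCodecFactory;

public class FileDecompressor {

  public static void main(String[] args) throws Exception {
    String uri = args[0];
    Configuration conf = new Configuration();
    FileSystem fs = FileSystem.get(URI.create(uri), conf);

    Path inputPath = new Path(uri);
    // Infer the codec from the file extension (e.g. .gz -> GzipCodec)
    CompressionCodecFactory factory = new CompressionCodecFactory(conf);
    CompressionCodec codec = factory.getCodec(inputPath);
    if (codec == null) {
      System.err.println("No codec found for " + uri);
      System.exit(1);
    }

    // The output file is the input path with the codec's extension removed
    String outputUri =
        CompressionCodecFactory.removeSuffix(uri, codec.getDefaultExtension());

    InputStream in = null;
    OutputStream out = null;
    try {
      in = codec.createInputStream(fs.open(inputPath));
      out = fs.create(new Path(outputUri));
      IOUtils.copyBytes(in, out, conf);
    } finally {
      IOUtils.closeStream(in);
      IOUtils.closeStream(out);
    }
  }
}
```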
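CompressionCodecFactory picks a codec by matching the end of the file name against each available codec's default extension, and `removeSuffix` strips that extension to build the output name. A simplified sketch of that lookup in plain Java follows; the class `SuffixCodecLookup` and its hard-coded table are hypothetical (the real factory builds its table from the codec classes in the configuration), though the extensions listed are the defaults of Hadoop's built-in codecs.

```java
import java.util.LinkedHashMap;
import java.util.Map;

public class SuffixCodecLookup {

  // Hypothetical table: default extension -> codec class name
  static final Map<String, String> CODECS = new LinkedHashMap<>();
  static {
    CODECS.put(".gz", "org.apache.hadoop.io.compress.GzipCodec");
    CODECS.put(".bz2", "org.apache.hadoop.io.compress.BZip2Codec");
    CODECS.put(".deflate", "org.apache.hadoop.io.compress.DefaultCodec");
    CODECS.put(".snappy", "org.apache.hadoop.io.compress.SnappyCodec");
  }

  // Return the matching extension (longest match wins), or null when no
  // codec applies, mirroring getCodec() returning null.
  static String findExtension(String fileName) {
    String best = null;
    for (String ext : CODECS.keySet()) {
      if (fileName.endsWith(ext) && (best == null || ext.length() > best.length())) {
        best = ext;
      }
    }
    return best;
  }

  // Strip the suffix if present, the same idea as CompressionCodecFactory.removeSuffix
  static String removeSuffix(String fileName, String suffix) {
    return fileName.endsWith(suffix)
        ? fileName.substring(0, fileName.length() - suffix.length())
        : fileName;
  }

  public static void main(String[] args) {
    String uri = "file:///tmp/file.gz";
    String ext = findExtension(uri);
    if (ext == null) {
      System.err.println("No codec found for " + uri);
      return;
    }
    System.out.println(CODECS.get(ext));
    System.out.println(removeSuffix(uri, ext));
  }
}
```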

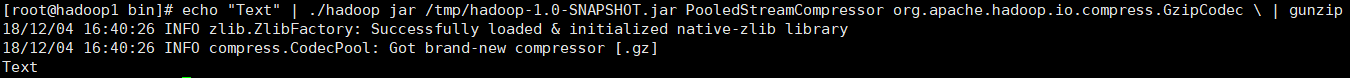

3. Using a compressor pool (CodecPool) to compress data read from standard input and write it to standard output

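```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.io.IOUtils;
import org.apache.hadoop.io.compress.CodecPool;
import org.apache.hadoop.io.compress.CompressionCodec;
import org.apache.hadoop.io.compress.CompressionOutputStream;
import org.apache.hadoop.io.compress.Compressor;
import org.apache.hadoop.util.ReflectionUtils;

public class PooledStreamCompressor {

  public static void main(String[] args) throws Exception {
    String codecClassname = args[0];
    Class<?> codecClass = Class.forName(codecClassname);
    Configuration conf = new Configuration();
    CompressionCodec codec = (CompressionCodec)
        ReflectionUtils.newInstance(codecClass, conf);
    Compressor compressor = null;
    try {
      // Borrow a Compressor from the pool instead of creating a new one
      compressor = CodecPool.getCompressor(codec);
      CompressionOutputStream out =
          codec.createOutputStream(System.out, compressor);
      IOUtils.copyBytes(System.in, out, 4096, false);
      out.finish();
    } finally {
      // Always hand the Compressor back so later streams can reuse it
      CodecPool.returnCompressor(compressor);
    }
  }
}
```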
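CodecPool saves the cost of creating a new compressor for every stream by lending out instances and taking them back. The same borrow/return pattern can be sketched with the JDK's `Deflater`; `DeflaterPool` below is a hypothetical illustration, not Hadoop's CodecPool implementation.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayDeque;
import java.util.zip.Deflater;

public class DeflaterPool {

  private final ArrayDeque<Deflater> idle = new ArrayDeque<>();
  int created = 0; // number of Deflaters actually allocated

  // Borrow an idle Deflater, creating one only when the pool is empty
  synchronized Deflater get() {
    Deflater d = idle.poll();
    if (d == null) {
      d = new Deflater();
      created++;
    }
    return d;
  }

  // Reset and keep a Deflater for reuse; null is ignored so a
  // finally block is safe even if get() was never reached
  synchronized void release(Deflater d) {
    if (d != null) {
      d.reset();
      idle.push(d);
    }
  }

  // Compress data with a pooled Deflater, returning it in finally
  static byte[] compress(DeflaterPool pool, byte[] data) {
    Deflater d = null;
    try {
      d = pool.get();
      d.setInput(data);
      d.finish();
      ByteArrayOutputStream out = new ByteArrayOutputStream();
      byte[] buf = new byte[4096];
      while (!d.finished()) {
        int n = d.deflate(buf);
        out.write(buf, 0, n);
      }
      return out.toByteArray();
    } finally {
      pool.release(d);
    }
  }

  public static void main(String[] args) {
    DeflaterPool pool = new DeflaterPool();
    byte[] data = "Text".getBytes(StandardCharsets.UTF_8);
    for (int i = 0; i < 3; i++) {
      compress(pool, data);
    }
    // Sequential use needs only one Deflater
    System.out.println("Deflaters created: " + pool.created);
  }
}
```

Resetting before reuse is what makes pooling safe: a reused instance behaves like a fresh one, so identical input gives identical output.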

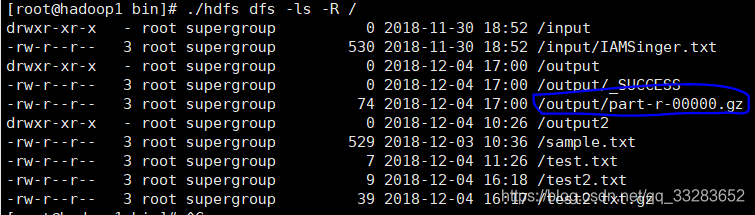

4. Using compression in MapReduce

Compressing the job output:

```shell
./hadoop jar /tmp/hadoop-1.0-SNAPSHOT.jar HotSearch /input/IAMSinger.txt /output/
```

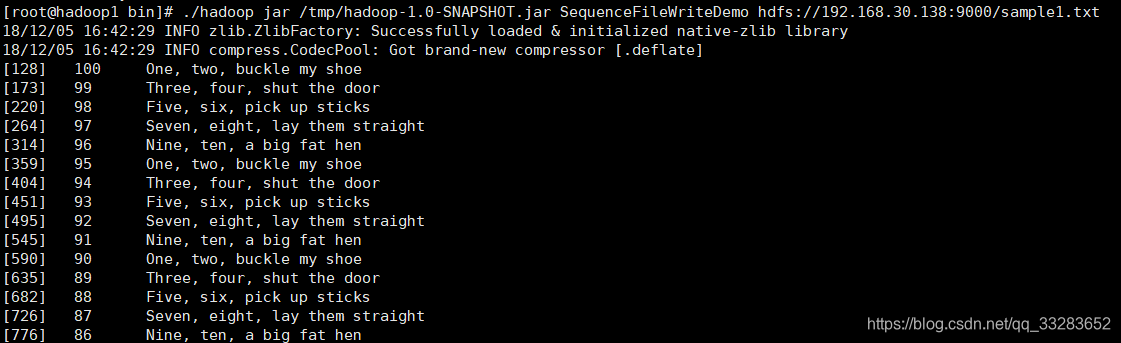

5. Handling small files with SequenceFile (writing and reading)

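```java
import java.io.IOException;
import java.net.URI;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.SequenceFile;
import org.apache.hadoop.io.Text;

public class SequenceFileWriteDemo {

  private static final String[] DATA = {
      "One, two, buckle my shoe",
      "Three, four, shut the door",
      "Five, six, pick up sticks",
      "Seven, eight, lay them straight",
      "Nine, ten, a big fat hen"
  };

  public static void main(String[] args) throws IOException {
    String uri = args[0];
    Configuration conf = new Configuration();
    FileSystem fs = FileSystem.get(URI.create(uri), conf);
    Path path = new Path(uri);

    IntWritable key = new IntWritable();
    Text value = new Text();
    SequenceFile.Writer writer = null;
    try {
      // This overload is deprecated in Hadoop 2.x in favor of the
      // Writer.Option-based createWriter(conf, ...)
      writer = SequenceFile.createWriter(fs, conf, path,
          key.getClass(), value.getClass());
      for (int i = 0; i < 100; i++) {
        key.set(100 - i);                    // keys run from 100 down to 1
        value.set(DATA[i % DATA.length]);    // values cycle through DATA
        // getLength() is the current file position, i.e. this record's offset
        System.out.printf("[%s]\t%s\t%s\n", writer.getLength(), key, value);
        writer.append(key, value);
      }
    } finally {
      IOUtils.closeStream(writer);
    }
  }
}
```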
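SequenceFile helps with small files by packing many key-value records into one large file, so HDFS and MapReduce handle one file instead of many tiny ones. The writer loop above (append records, printing the current length as each record's offset) can be sketched with a plain `DataOutputStream`. The class `RecordPackDemo` and its length-prefixed layout are hypothetical simplifications; the real SequenceFile format also has a header, sync markers and optional compression.

```java
import java.io.ByteArrayOutputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.List;

public class RecordPackDemo {

  private static final String[] DATA = {
      "One, two, buckle my shoe",
      "Three, four, shut the door",
      "Five, six, pick up sticks",
      "Seven, eight, lay them straight",
      "Nine, ten, a big fat hen"
  };

  // Hypothetical layout: int key, then the value via writeUTF
  // (2-byte length + modified UTF-8). Each record's start offset is
  // added to `offsets`, like printing writer.getLength() before append().
  static byte[] pack(int count, List<Integer> offsets) {
    ByteArrayOutputStream bytes = new ByteArrayOutputStream();
    try (DataOutputStream out = new DataOutputStream(bytes)) {
      for (int i = 0; i < count; i++) {
        offsets.add(out.size());
        out.writeInt(100 - i);
        out.writeUTF(DATA[i % DATA.length]);
      }
    } catch (IOException e) {
      // Not expected for an in-memory stream, but the API declares it
      throw new UncheckedIOException(e);
    }
    return bytes.toByteArray();
  }

  public static void main(String[] args) {
    List<Integer> offsets = new ArrayList<>();
    byte[] packed = pack(100, offsets);
    System.out.println("first offsets: " + offsets.subList(0, 3));
    System.out.println("100 records in one blob of " + packed.length + " bytes");
  }
}
```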

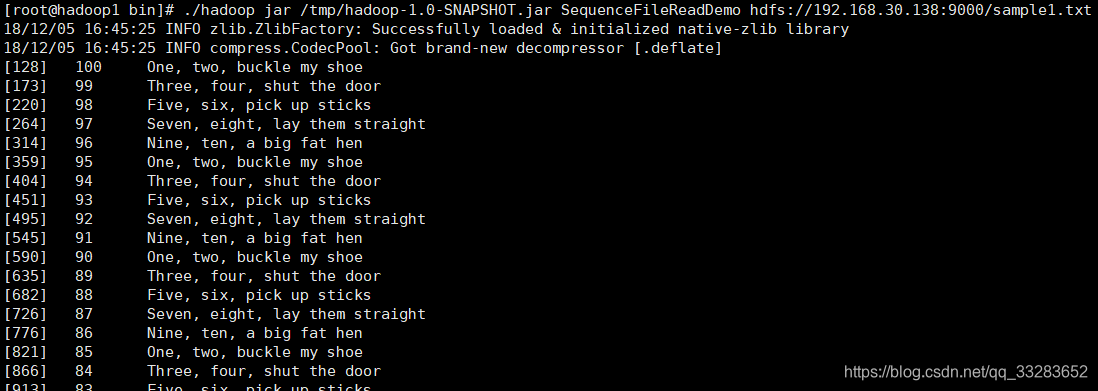

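```java
import java.io.IOException;
import java.net.URI;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;
import org.apache.hadoop.io.SequenceFile;
import org.apache.hadoop.io.Writable;
import org.apache.hadoop.util.ReflectionUtils;

public class SequenceFileReadDemo {

  public static void main(String[] args) throws IOException {
    String uri = args[0];
    Configuration conf = new Configuration();
    FileSystem fs = FileSystem.get(URI.create(uri), conf);
    Path path = new Path(uri);

    SequenceFile.Reader reader = null;
    try {
      reader = new SequenceFile.Reader(fs, path, conf);
      // Instantiate keys/values of the types recorded in the file header
      Writable key = (Writable)
          ReflectionUtils.newInstance(reader.getKeyClass(), conf);
      Writable value = (Writable)
          ReflectionUtils.newInstance(reader.getValueClass(), conf);
      long position = reader.getPosition();
      while (reader.next(key, value)) {
        // "*" marks a record where the preceding next() passed a sync mark
        String syncSeen = reader.syncSeen() ? "*" : "";
        System.out.printf("[%s%s]\t%s\t%s\n", position, syncSeen, key, value);
        position = reader.getPosition(); // beginning of next record
      }
    } finally {
      IOUtils.closeStream(reader);
    }
  }
}
```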
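The `*` in the reader's output marks records that follow a sync point. Sync markers let a reader that starts at an arbitrary byte offset, such as the start of an input split, skip forward to the next record boundary. A simplified sketch of the idea follows: every few records the writer emits an escape value (-1) and then a 16-byte marker, and the reader scans for that pattern before it starts reading. In a real SequenceFile the marker is a random per-file value stored in the header; the fixed marker, the layout and the class name `SyncScanDemo` here are hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class SyncScanDemo {

  static final int SYNC_ESCAPE = -1;        // never a valid key in this sketch
  static final byte[] SYNC = new byte[16];  // hypothetical fixed marker
  static { Arrays.fill(SYNC, (byte) 0xFE); }

  // Write keys 1..count, emitting escape + marker before every `interval`-th record
  static byte[] write(int count, int interval) {
    ByteArrayOutputStream bytes = new ByteArrayOutputStream();
    try (DataOutputStream out = new DataOutputStream(bytes)) {
      for (int key = 1; key <= count; key++) {
        if ((key - 1) % interval == 0) {
          out.writeInt(SYNC_ESCAPE);
          out.write(SYNC);
        }
        out.writeInt(key);
        out.writeUTF("value-" + key);
      }
    } catch (IOException e) {
      throw new UncheckedIOException(e);
    }
    return bytes.toByteArray();
  }

  // Offset of the first escape + marker at or after `from`, or -1 if none
  static int nextSync(byte[] data, int from) {
    byte[] pattern = new byte[4 + SYNC.length];
    Arrays.fill(pattern, 0, 4, (byte) 0xFF); // big-endian int -1
    System.arraycopy(SYNC, 0, pattern, 4, SYNC.length);
    for (int i = Math.max(from, 0); i + pattern.length <= data.length; i++) {
      if (Arrays.equals(data, i, i + pattern.length, pattern, 0, pattern.length)) {
        return i;
      }
    }
    return -1;
  }

  // Read every key from the first sync point at or after `offset`
  static List<Integer> readFrom(byte[] data, int offset) {
    List<Integer> keys = new ArrayList<>();
    int start = nextSync(data, offset);
    if (start < 0) {
      return keys;
    }
    try (DataInputStream in = new DataInputStream(
        new ByteArrayInputStream(data, start, data.length - start))) {
      while (in.available() > 0) {
        int key = in.readInt();
        if (key == SYNC_ESCAPE) {
          in.readFully(new byte[SYNC.length]); // skip the marker itself
          continue;
        }
        in.readUTF(); // value, unused here
        keys.add(key);
      }
    } catch (IOException e) {
      throw new UncheckedIOException(e);
    }
    return keys;
  }

  public static void main(String[] args) {
    byte[] data = write(10, 4); // sync points before keys 1, 5 and 9
    // Byte 30 falls inside record 1, so reading resumes at the sync before key 5
    System.out.println(readFrom(data, 30));
  }
}
```

The escape value works only because it can never be a valid record start; SequenceFile relies on the same idea by writing -1 where a record length would otherwise appear.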

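This article covers compression in the Hadoop ecosystem: compression streams, decompressing files, compressor pools and compressing MapReduce job output. It also shows how to use SequenceFile to handle small files, giving practical compression options for big-data processing.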
