Table of Contents

- Robotic Assembling

- Model-based

- Imagination-Augmented Agents for Deep Reinforcement Learning (NIPS 2017)

- Neural Network Dynamics for Model-Based Deep Reinforcement Learning with Model-Free Fine-Tuning (ICRA 2018)

- Uncertainty-driven Imagination for Continuous Deep Reinforcement Learning (CoRL 2017)

- Model-Based Value Expansion for Efficient Model-Free Reinforcement Learning (ICML 2018)

- Model-Ensemble Trust-Region Policy Optimization (ICLR 2018)

- Sample-Efficient Reinforcement Learning with Stochastic Ensemble Value Expansion (NeurIPS 2018)

- Model-Based Reinforcement Learning via Meta-Policy Optimization (CoRL 2018)

- When to Trust Your Model: Model-Based Policy Optimization (2019)

- Meta Learning

Robotic Assembling

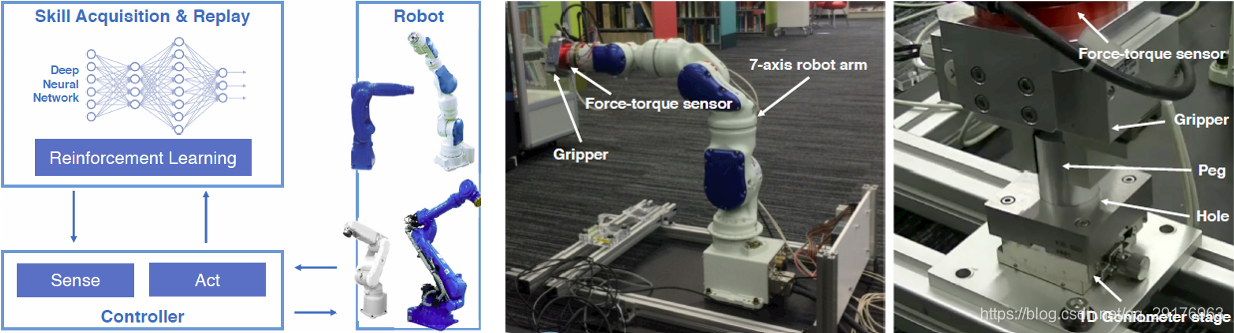

Deep Reinforcement Learning for High Precision Assembly Tasks (IROS 2017)

[Blog][Paper][Video]

Abstract:

High-precision assembly of mechanical parts requires accuracy exceeding the robot's precision. Conventional part-mating methods used in current manufacturing require tedious tuning of numerous parameters before deployment. We show how a robot can successfully perform a tight-clearance peg-in-hole task by training a recurrent neural network with reinforcement learning. In addition to saving manual effort, the proposed technique also shows robustness against position and angle errors in the peg-in-hole task. The neural network learns to take the optimal action by observing the robot's sensors to estimate the system state. The advantages of the proposed method are validated experimentally on a 7-axis articulated robot arm.

Model-based

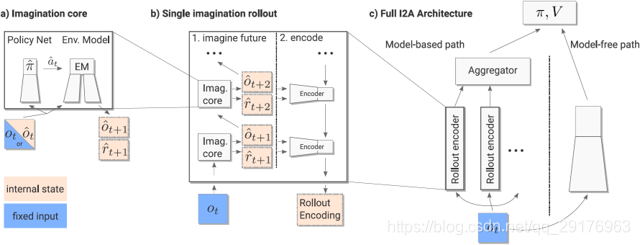

Imagination-Augmented Agents for Deep Reinforcement Learning (NIPS 2017)

[Blog][Paper]

Abstract:

We introduce Imagination-Augmented Agents (I2As), a novel architecture for deep reinforcement learning combining model-free and model-based aspects. In contrast to most existing model-based reinforcement learning and planning methods, which prescribe how a model should be used to arrive at a policy, I2As learn to interpret predictions from a trained environment model to construct implicit plans in arbitrary ways, by using the predictions as additional context in deep policy networks. I2As show improved data efficiency, performance, and robustness to model misspecification compared to several strong baselines.
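To make the idea of "using the predictions as additional context" more concrete, here is a minimal NumPy sketch. It is not the architecture from the paper: the environment model, rollout policy, encoder, and policy head are all hypothetical stand-ins with random, untrained weights, and every name and dimension is an assumption chosen for illustration. It only shows the data flow: one imagined rollout per candidate first action, each rollout summarised into a code, and the codes concatenated with the raw state before the policy head.

```python
import numpy as np

rng = np.random.default_rng(0)

# All sizes below are illustrative assumptions, not values from the paper.
STATE_DIM, N_ACTIONS, ROLLOUT_LEN, CODE_DIM = 4, 3, 5, 8

# Hypothetical stand-in for a trained environment model:
# one fixed random linear map per action, squashed by tanh.
A = rng.normal(scale=0.3, size=(N_ACTIONS, STATE_DIM, STATE_DIM))
W_roll = rng.normal(scale=0.3, size=(N_ACTIONS, STATE_DIM))
W_enc = rng.normal(scale=0.3, size=(CODE_DIM, STATE_DIM + CODE_DIM))
W_pi = rng.normal(scale=0.3, size=(N_ACTIONS, STATE_DIM + N_ACTIONS * CODE_DIM))


def env_model(state, action):
    """Predict the next state for a (state, action) pair."""
    return np.tanh(A[action] @ state)


def rollout_policy(state):
    """Hypothetical cheap policy used only inside imagined rollouts."""
    return int(np.argmax(W_roll @ state))


def imagine(state, first_action):
    """Roll the model forward ROLLOUT_LEN steps, forcing the first action."""
    states = []
    s = env_model(state, first_action)
    states.append(s)
    for _ in range(ROLLOUT_LEN - 1):
        s = env_model(s, rollout_policy(s))
        states.append(s)
    return states


def encode_rollout(states):
    """Summarise an imagined trajectory with a tiny recurrent encoder."""
    h = np.zeros(CODE_DIM)
    for s in reversed(states):  # process the trajectory back to front
        h = np.tanh(W_enc @ np.concatenate([s, h]))
    return h


def policy(state):
    """Action probabilities from the raw state plus the rollout codes."""
    codes = [encode_rollout(imagine(state, a)) for a in range(N_ACTIONS)]
    features = np.concatenate([state] + codes)  # model-free + imagined context
    logits = W_pi @ features
    exp = np.exp(logits - logits.max())  # numerically stable softmax
    return exp / exp.sum()


probs = policy(np.ones(STATE_DIM))
```

Because the policy head sees the rollout codes only as extra input features, training is free to learn how much weight to give them, which is one way to read the abstract's claim of robustness to model misspecification.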

Neural Network Dynamics for Model-Based Deep Reinforcement Learning with Model-Free Fine-Tuning (ICRA 2018)

[Blog][Paper][Video][Code]

Abstract:

Model-free deep reinforcement learning algorithms have been shown to be capable of learning a wide range of robotic skills, but typically require a very large number of samples to achieve good performance. Model-based algorithms, in principle, can provide for much more efficient learning, but have proven difficult to extend to expressive, high-capacity models such as deep neural networks. In this work, we demonstrate that medium-sized neural network models can in fact be combined w
