- Install sbt

- Create the application

- Package the Scala program with sbt

- Run the program with spark-submit

- Install IDEA

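Install sbt

Create the installation directory and copy the sbt launcher JAR into it (`~/下载` is the Downloads folder on a Chinese-locale desktop):

```shell
sudo mkdir /usr/local/sbt
sudo chown -R hadoop /usr/local/sbt   # the user in this walkthrough is hadoop
cd /usr/local/sbt
cp ~/下载/sbt-launch.jar .
# ./sbt is a launcher script created in this directory (e.g. with vim) containing:
#   #!/bin/bash
#   java -jar "$(dirname "$0")/sbt-launch.jar" "$@"
chmod u+x ./sbt
```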

Check that sbt works:

```
hadoop@dhjvirtualmachine:/usr/local/sbt$ ./sbt sbt-version
[info] Set current project to sbt (in build file:/usr/local/sbt/)
[warn] The `-` command is deprecated in favor of `onFailure` and will be removed in 0.14.0
hadoop@dhjvirtualmachine:/usr/local/sbt$ ./sbt sbt-version
[info] Set current project to sbt (in build file:/usr/local/sbt/)
[info] 0.13.11
```

The first run printed only a deprecation warning. Running the same command again printed the installed sbt version, 0.13.11.

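Create the application

In a terminal, run the following commands to create a folder `sparkapp` as the application's root directory:

```shell
cd ~                                  # go to the user's home directory
mkdir ./sparkapp                      # create the application root directory
mkdir -p ./sparkapp/src/main/scala    # create the required folder structure
```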

Create the source file:

```shell
vim ./sparkapp/src/main/scala/SimpleApp.scala
```

```scala
/* SimpleApp.scala */
import org.apache.spark.SparkContext
import org.apache.spark.SparkContext._
import org.apache.spark.SparkConf

object SimpleApp {
  def main(args: Array[String]): Unit = {
    val logFile = "file:///usr/local/spark/README.md" // Should be some file on your system
    val conf = new SparkConf().setAppName("Simple Application")
    val sc = new SparkContext(conf)
    // Read the file with at least 2 partitions; cache it because it is scanned twice
    val logData = sc.textFile(logFile, 2).cache()
    // Count lines containing "a" and lines containing "b" (case-sensitive)
    val numAs = logData.filter(line => line.contains("a")).count()
    val numBs = logData.filter(line => line.contains("b")).count()
    println("Lines with a: %s, Lines with b: %s".format(numAs, numBs))
    // Release cluster resources before exiting
    sc.stop()
  }
}
```
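The counting logic in SimpleApp is just a predicate applied to each line. You can therefore sanity-check it on an ordinary Scala collection before submitting the JAR. The sketch below assumes a few in-memory sample lines in place of README.md. `LocalLineCount` and `countContaining` are hypothetical names, and no Spark classes are involved.

```scala
// Plain-Scala check of SimpleApp's filter/count logic; no Spark required.
// LocalLineCount and countContaining are hypothetical names for illustration.
object LocalLineCount {
  // Number of lines containing the substring s (case-sensitive), mirroring
  // logData.filter(line => line.contains(s)).count() in SimpleApp
  def countContaining(lines: Seq[String], s: String): Long =
    lines.count(line => line.contains(s)).toLong

  def main(args: Array[String]): Unit = {
    // Hypothetical sample lines standing in for README.md
    val lines = Seq("Apache Spark", "build tools", "sbt", "hello")
    val numAs = countContaining(lines, "a")
    val numBs = countContaining(lines, "b")
    println("Lines with a: %s, Lines with b: %s".format(numAs, numBs)) // prints "Lines with a: 1, Lines with b: 2"
  }
}
```

The predicate is kept in a pure function so it can be tested without starting a SparkContext.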

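Package the Scala program with sbt

In `./sparkapp`, create a new file `simple.sbt` that declares the project and its Spark dependency:

```scala
name := "Simple Project"

version := "1.0"

scalaVersion := "2.10.5"

libraryDependencies += "org.apache.spark" %% "spark-core" % "1.6.2"
```

`scalaVersion` must match the Scala version your Spark installation was built with. The prebuilt Spark 1.6.2 packages use Scala 2.10.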
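The `%%` operator in `simple.sbt` appends the Scala binary version to the artifact name. This is why `scalaVersion` and the Spark build must agree. As a sketch, with `scalaVersion := "2.10.5"` the dependency line is equivalent to writing the suffix out by hand:

```scala
// Equivalent to "org.apache.spark" %% "spark-core" % "1.6.2" when scalaVersion is 2.10.x:
// %% adds the "_2.10" suffix, so both forms resolve the same artifact
libraryDependencies += "org.apache.spark" % "spark-core_2.10" % "1.6.2"
```

If `scalaVersion` were set to a 2.11 release instead, `%%` would request `spark-core_2.11`.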

Check the directory structure:

```shell
cd ~/sparkapp
find .
```

```
./simple.sbt
./src
./src/main
./src/main/scala
./src/main/scala/SimpleApp.scala
```

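Package the application (the first run downloads the dependencies and can take a while):

```shell
hadoop@dhjvirtualmachine:~/sparkapp$ /usr/local/sbt/sbt package
```

On success, the JAR is written to `~/sparkapp/target/scala-2.10/simple-project_2.10-1.0.jar`.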
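The JAR path passed to `spark-submit` follows from the settings in `simple.sbt`. sbt lower-cases `name`, replaces runs of non-word characters with `-`, then appends the Scala binary version and `version`. The helper below is a hypothetical sketch of that default naming convention. It assumes sbt 0.13-style defaults and a Scala 2.x `scalaVersion`, and it is not an sbt API.

```scala
// Hypothetical helper reproducing sbt's default `package` output path
// (assumption: sbt 0.13-style defaults, Scala 2.x versions). Not an sbt API.
object SbtJarName {
  // Lower-case the project name and turn runs of non-word characters into "-"
  def normalize(name: String): String =
    name.toLowerCase(java.util.Locale.ENGLISH).replaceAll("""\W+""", "-")

  // Scala 2.x binary version: the first two components, e.g. "2.10.5" -> "2.10"
  def binaryVersion(scalaVersion: String): String =
    scalaVersion.split('.').take(2).mkString(".")

  // target/scala-<binary>/<normalized name>_<binary>-<version>.jar
  def jarPath(name: String, version: String, scalaVersion: String): String = {
    val bin = binaryVersion(scalaVersion)
    s"target/scala-$bin/${normalize(name)}_$bin-$version.jar"
  }

  def main(args: Array[String]): Unit =
    println(jarPath("Simple Project", "1.0", "2.10.5")) // prints "target/scala-2.10/simple-project_2.10-1.0.jar"
}
```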

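Run the program with spark-submit

Spark writes its log messages to stderr. The command below therefore merges stderr into stdout (`2>&1`) and uses `grep` to keep only the result line:

```shell
/usr/local/spark/bin/spark-submit --class "SimpleApp" /home/hadoop/sparkapp/target/scala-2.10/simple-project_2.10-1.0.jar 2>&1 | grep "Lines with a:"
```

Output:

```
Lines with a: 58, Lines with b: 2617/12/27 23:43:23 INFO scheduler.TaskSetManager: Finished task 1.0 in stage 1.0 (TID 3) in 65 ms on localhost (2/2)
```

The result is `Lines with a: 58, Lines with b: 26`. The text after it, starting with the timestamp `17/12/27 23:43:23`, is a Spark INFO log line. It landed on the same output line because stdout and stderr were merged.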

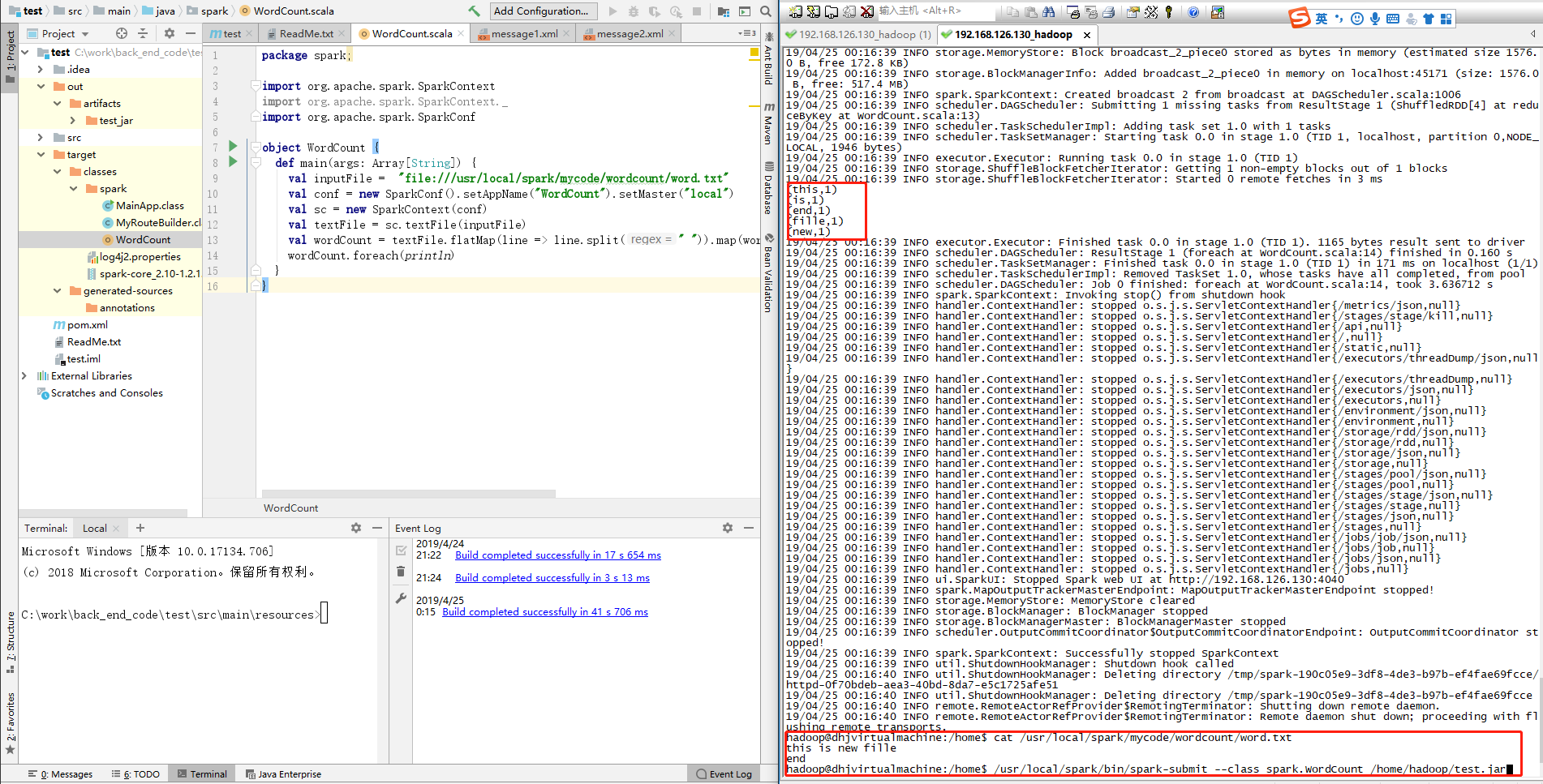
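The `(2/2)` at the end of the spark-submit output line is consistent with the `2` passed to `sc.textFile(logFile, 2)`. Each `count()` runs one task per partition, each task counts its own partition, and the driver adds up the partial counts. The plain-Scala sketch below illustrates that idea only. It is not Spark's implementation, and `PartitionedCountSketch`, `partition` and `countWhere` are hypothetical names.

```scala
// Plain-Scala illustration of a partitioned count (not Spark's implementation):
// count each partition independently, then sum the partial counts.
object PartitionedCountSketch {
  // Hypothetical helper: split data into at most numPartitions chunks of similar size
  def partition[A](data: Seq[A], numPartitions: Int): List[Seq[A]] = {
    require(numPartitions > 0, "numPartitions must be positive")
    val chunkSize = math.max(1, math.ceil(data.size.toDouble / numPartitions).toInt)
    data.grouped(chunkSize).toList
  }

  // One "task" per partition counts matching elements; the "driver" sums them
  def countWhere[A](data: Seq[A], numPartitions: Int)(p: A => Boolean): Long = {
    val partialCounts = partition(data, numPartitions).map(part => part.count(p).toLong)
    partialCounts.sum
  }

  def main(args: Array[String]): Unit = {
    val lines = Seq("a1", "b", "a2", "a3", "c")
    // 2 partitions: [a1, b, a2] -> 2 and [a3, c] -> 1, summed to 3
    println(countWhere(lines, 2)(_.contains("a")))
  }
}
```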

Packaging a Scala program with IDEA and Maven to run on Spark

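This article explains how to develop a standalone Spark application. Starting from installing sbt, it walks through creating a Scala application, packaging it with sbt, and running it with the `spark-submit` command. It also mentions packaging with IDEA and Maven as a way to run Scala programs on Spark.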
