2025-07-02 11:15:25,551 INFO - task run command: sudo -u hadoop -E bash /tmp/dolphinscheduler/exec/process/hadoop/16836554651104/18167664743392_10/32581/56672/32581_56672.command

2025-07-02 11:15:25,552 INFO - process start, process id is: 1190

2025-07-02 11:15:26,553 INFO - -> /usr/lib/dolphinscheduler/worker-server/conf/dolphinscheduler_env.sh: line 23: export: `zookeeper.quorum=': not a valid identifier

/usr/lib/dolphinscheduler/worker-server/conf/dolphinscheduler_env.sh: line 23: export: `dominos-usdp-fun01:2181,dominos-usdp-fun02:2181,dominos-usdp-fun03:2181': not a valid identifier

2025-07-02 11:15:31,554 INFO - -> SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/lib/hadoop/lib/slf4j-reload4j-1.7.36.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/lib/hive/lib/log4j-slf4j-impl-2.17.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Reload4jLoggerFactory]

2025-07-02 11:15:31,226 INFO [main] conf.HiveConf (HiveConf.java:findConfigFile(187)) - Found configuration file file:/etc/hive/conf/hive-site.xml

2025-07-02 11:15:32,554 INFO - -> 2025-07-02 11:15:32,428 main ERROR Cannot access RandomAccessFile java.io.FileNotFoundException: /data/log/hive/hive.log (Permission denied) java.io.FileNotFoundException: /data/log/hive/hive.log (Permission denied)

at java.io.RandomAccessFile.open0(Native Method)

at java.io.RandomAccessFile.open(RandomAccessFile.java:316)

at java.io.RandomAccessFile.<init>(RandomAccessFile.java:243)

at java.io.RandomAccessFile.<init>(RandomAccessFile.java:124)

at org.apache.logging.log4j.core.appender.rolling.RollingRandomAccessFileManager$RollingRandomAccessFileManagerFactory.createManager(RollingRandomAccessFileManager.java:232)

at org.apache.logging.log4j.core.appender.rolling.RollingRandomAccessFileManager$RollingRandomAccessFileManagerFactory.createManager(RollingRandomAccessFileManager.java:204)

at org.apache.logging.log4j.core.appender.AbstractManager.getManager(AbstractManager.java:114)

at org.apache.logging.log4j.core.appender.OutputStreamManager.getManager(OutputStreamManager.java:100)

at org.apache.logging.log4j.core.appender.rolling.RollingRandomAccessFileManager.getRollingRandomAccessFileManager(RollingRandomAccessFileManager.java:107)

at org.apache.logging.log4j.core.appender.RollingRandomAccessFileAppender$Builder.build(RollingRandomAccessFileAppender.java:132)

at org.apache.logging.log4j.core.appender.RollingRandomAccessFileAppender$Builder.build(RollingRandomAccessFileAppender.java:53)

at org.apache.logging.log4j.core.config.plugins.util.PluginBuilder.build(PluginBuilder.java:122)

at org.apache.logging.log4j.core.config.AbstractConfiguration.createPluginObject(AbstractConfiguration.java:1120)

at org.apache.logging.log4j.core.config.AbstractConfiguration.createConfiguration(AbstractConfiguration.java:1045)

at org.apache.logging.log4j.core.config.AbstractConfiguration.createConfiguration(AbstractConfiguration.java:1037)

at org.apache.logging.log4j.core.config.AbstractConfiguration.doConfigure(AbstractConfiguration.java:651)

at org.apache.logging.log4j.core.config.AbstractConfiguration.initialize(AbstractConfiguration.java:247)

at org.apache.logging.log4j.core.config.AbstractConfiguration.start(AbstractConfiguration.java:293)

at org.apache.logging.log4j.core.LoggerContext.setConfiguration(LoggerContext.java:626)

at org.apache.logging.log4j.core.LoggerContext.start(LoggerContext.java:302)

at org.apache.logging.log4j.core.async.AsyncLoggerContext.start(AsyncLoggerContext.java:87)

at org.apache.logging.log4j.core.impl.Log4jContextFactory.getContext(Log4jContextFactory.java:242)

at org.apache.logging.log4j.core.config.Configurator.initialize(Configurator.java:159)

at org.apache.logging.log4j.core.config.Configurator.initialize(Configurator.java:131)

at org.apache.logging.log4j.core.config.Configurator.initialize(Configurator.java:101)

at org.apache.logging.log4j.core.config.Configurator.initialize(Configurator.java:210)

at org.apache.hadoop.hive.common.LogUtils.initHiveLog4jDefault(LogUtils.java:173)

at org.apache.hadoop.hive.common.LogUtils.initHiveLog4jCommon(LogUtils.java:106)

at org.apache.hadoop.hive.common.LogUtils.initHiveLog4jCommon(LogUtils.java:98)

at org.apache.hadoop.hive.common.LogUtils.initHiveLog4j(LogUtils.java:81)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:699)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:683)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:323)

at org.apache.hadoop.util.RunJar.main(RunJar.java:236)

2025-07-02 11:15:32,430 main ERROR Could not create plugin of type class org.apache.logging.log4j.core.appender.RollingRandomAccessFileAppender for element RollingRandomAccessFile: java.lang.IllegalStateException: ManagerFactory [org.apache.logging.log4j.core.appender.rolling.RollingRandomAccessFileManager$RollingRandomAccessFileManagerFactory@5ef6ae06] unable to create manager for [/data/log/hive/hive.log] with data [org.apache.logging.log4j.core.appender.rolling.RollingRandomAccessFileManager$FactoryData@55dfebeb] java.lang.IllegalStateException: ManagerFactory [org.apache.logging.log4j.core.appender.rolling.RollingRandomAccessFileManager$RollingRandomAccessFileManagerFactory@5ef6ae06] unable to create manager for [/data/log/hive/hive.log] with data [org.apache.logging.log4j.core.appender.rolling.RollingRandomAccessFileManager$FactoryData@55dfebeb]

at org.apache.logging.log4j.core.appender.AbstractManager.getManager(AbstractManager.java:116)

at org.apache.logging.log4j.core.appender.OutputStreamManager.getManager(OutputStreamManager.java:100)

at org.apache.logging.log4j.core.appender.rolling.RollingRandomAccessFileManager.getRollingRandomAccessFileManager(RollingRandomAccessFileManager.java:107)

at org.apache.logging.log4j.core.appender.RollingRandomAccessFileAppender$Builder.build(RollingRandomAccessFileAppender.java:132)

at org.apache.logging.log4j.core.appender.RollingRandomAccessFileAppender$Builder.build(RollingRandomAccessFileAppender.java:53)

at org.apache.logging.log4j.core.config.plugins.util.PluginBuilder.build(PluginBuilder.java:122)

at org.apache.logging.log4j.core.config.AbstractConfiguration.createPluginObject(AbstractConfiguration.java:1120)

at org.apache.logging.log4j.core.config.AbstractConfiguration.createConfiguration(AbstractConfiguration.java:1045)

at org.apache.logging.log4j.core.config.AbstractConfiguration.createConfiguration(AbstractConfiguration.java:1037)

at org.apache.logging.log4j.core.config.AbstractConfiguration.doConfigure(AbstractConfiguration.java:651)

at org.apache.logging.log4j.core.config.AbstractConfiguration.initialize(AbstractConfiguration.java:247)

at org.apache.logging.log4j.core.config.AbstractConfiguration.start(AbstractConfiguration.java:293)

at org.apache.logging.log4j.core.LoggerContext.setConfiguration(LoggerContext.java:626)

at org.apache.logging.log4j.core.LoggerContext.start(LoggerContext.java:302)

at org.apache.logging.log4j.core.async.AsyncLoggerContext.start(AsyncLoggerContext.java:87)

at org.apache.logging.log4j.core.impl.Log4jContextFactory.getContext(Log4jContextFactory.java:242)

at org.apache.logging.log4j.core.config.Configurator.initialize(Configurator.java:159)

at org.apache.logging.log4j.core.config.Configurator.initialize(Configurator.java:131)

at org.apache.logging.log4j.core.config.Configurator.initialize(Configurator.java:101)

at org.apache.logging.log4j.core.config.Configurator.initialize(Configurator.java:210)

at org.apache.hadoop.hive.common.LogUtils.initHiveLog4jDefault(LogUtils.java:173)

at org.apache.hadoop.hive.common.LogUtils.initHiveLog4jCommon(LogUtils.java:106)

at org.apache.hadoop.hive.common.LogUtils.initHiveLog4jCommon(LogUtils.java:98)

at org.apache.hadoop.hive.common.LogUtils.initHiveLog4j(LogUtils.java:81)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:699)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:683)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:323)

at org.apache.hadoop.util.RunJar.main(RunJar.java:236)

2025-07-02 11:15:32,431 main ERROR Unable to invoke factory method in class org.apache.logging.log4j.core.appender.RollingRandomAccessFileAppender for element RollingRandomAccessFile: java.lang.IllegalStateException: No factory method found for class org.apache.logging.log4j.core.appender.RollingRandomAccessFileAppender java.lang.IllegalStateException: No factory method found for class org.apache.logging.log4j.core.appender.RollingRandomAccessFileAppender

at org.apache.logging.log4j.core.config.plugins.util.PluginBuilder.findFactoryMethod(PluginBuilder.java:236)

at org.apache.logging.log4j.core.config.plugins.util.PluginBuilder.build(PluginBuilder.java:134)

at org.apache.logging.log4j.core.config.AbstractConfiguration.createPluginObject(AbstractConfiguration.java:1120)

at org.apache.logging.log4j.core.config.AbstractConfiguration.createConfiguration(AbstractConfiguration.java:1045)

at org.apache.logging.log4j.core.config.AbstractConfiguration.createConfiguration(AbstractConfiguration.java:1037)

at org.apache.logging.log4j.core.config.AbstractConfiguration.doConfigure(AbstractConfiguration.java:651)

at org.apache.logging.log4j.core.config.AbstractConfiguration.initialize(AbstractConfiguration.java:247)

at org.apache.logging.log4j.core.config.AbstractConfiguration.start(AbstractConfiguration.java:293)

at org.apache.logging.log4j.core.LoggerContext.setConfiguration(LoggerContext.java:626)

at org.apache.logging.log4j.core.LoggerContext.start(LoggerContext.java:302)

at org.apache.logging.log4j.core.async.AsyncLoggerContext.start(AsyncLoggerContext.java:87)

at org.apache.logging.log4j.core.impl.Log4jContextFactory.getContext(Log4jContextFactory.java:242)

at org.apache.logging.log4j.core.config.Configurator.initialize(Configurator.java:159)

at org.apache.logging.log4j.core.config.Configurator.initialize(Configurator.java:131)

at org.apache.logging.log4j.core.config.Configurator.initialize(Configurator.java:101)

at org.apache.logging.log4j.core.config.Configurator.initialize(Configurator.java:210)

at org.apache.hadoop.hive.common.LogUtils.initHiveLog4jDefault(LogUtils.java:173)

at org.apache.hadoop.hive.common.LogUtils.initHiveLog4jCommon(LogUtils.java:106)

at org.apache.hadoop.hive.common.LogUtils.initHiveLog4jCommon(LogUtils.java:98)

at org.apache.hadoop.hive.common.LogUtils.initHiveLog4j(LogUtils.java:81)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:699)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:683)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:323)

at org.apache.hadoop.util.RunJar.main(RunJar.java:236)

2025-07-02 11:15:32,432 main ERROR Null object returned for RollingRandomAccessFile in Appenders.

2025-07-02 11:15:32,432 main ERROR Unable to locate appender "DRFA" for logger config "root"

Hive Session ID = 63fc22ae-87a3-4d13-b59e-6ea5a99a9941

2025-07-02 11:15:32,528 INFO [main] SessionState (SessionState.java:printInfo(1227)) - Hive Session ID = 63fc22ae-87a3-4d13-b59e-6ea5a99a9941

2025-07-02 11:15:33,555 INFO - ->

Logging initialized using configuration in file:/etc/hive/conf/hive-log4j2.properties Async: true

2025-07-02 11:15:32,577 INFO [main] SessionState (SessionState.java:printInfo(1227)) -

Logging initialized using configuration in file:/etc/hive/conf/hive-log4j2.properties Async: true

2025-07-02 11:15:34,556 INFO - -> 2025-07-02 11:15:33,630 INFO [main] session.SessionState (SessionState.java:createPath(790)) - Created HDFS directory: /tmp/hive/hadoop/63fc22ae-87a3-4d13-b59e-6ea5a99a9941

2025-07-02 11:15:33,652 INFO [main] session.SessionState (SessionState.java:createPath(790)) - Created local directory: /tmp/hadoop/63fc22ae-87a3-4d13-b59e-6ea5a99a9941

2025-07-02 11:15:33,659 INFO [main] session.SessionState (SessionState.java:createPath(790)) - Created HDFS directory: /tmp/hive/hadoop/63fc22ae-87a3-4d13-b59e-6ea5a99a9941/_tmp_space.db

2025-07-02 11:15:33,691 INFO [main] tez.TezSessionState (TezSessionState.java:openInternal(277)) - User of session id 63fc22ae-87a3-4d13-b59e-6ea5a99a9941 is hadoop

2025-07-02 11:15:33,714 INFO [main] tez.DagUtils (DagUtils.java:localizeResource(1159)) - Localizing resource because it does not exist: file:/usr/lib/hive/auxlib/hudi-hadoop-mr-bundle-0.13.0.jar to dest: hdfs://dominos-usdp-v3-fun/tmp/hive/hadoop/_tez_session_dir/63fc22ae-87a3-4d13-b59e-6ea5a99a9941-resources/hudi-hadoop-mr-bundle-0.13.0.jar

2025-07-02 11:15:34,365 INFO [main] tez.DagUtils (DagUtils.java:createLocalResource(842)) - Resource modification time: 1751426134293 for hdfs://dominos-usdp-v3-fun/tmp/hive/hadoop/_tez_session_dir/63fc22ae-87a3-4d13-b59e-6ea5a99a9941-resources/hudi-hadoop-mr-bundle-0.13.0.jar

2025-07-02 11:15:34,384 INFO [main] tez.DagUtils (DagUtils.java:localizeResource(1159)) - Localizing resource because it does not exist: file:/usr/lib/hive/auxlib/hudi-hive-sync-bundle-0.13.0.jar to dest: hdfs://dominos-usdp-v3-fun/tmp/hive/hadoop/_tez_session_dir/63fc22ae-87a3-4d13-b59e-6ea5a99a9941-resources/hudi-hive-sync-bundle-0.13.0.jar

2025-07-02 11:15:35,557 INFO - -> 2025-07-02 11:15:34,776 INFO [main] tez.DagUtils (DagUtils.java:createLocalResource(842)) - Resource modification time: 1751426134737 for hdfs://dominos-usdp-v3-fun/tmp/hive/hadoop/_tez_session_dir/63fc22ae-87a3-4d13-b59e-6ea5a99a9941-resources/hudi-hive-sync-bundle-0.13.0.jar

2025-07-02 11:15:34,851 INFO [main] tez.TezSessionState (TezSessionState.java:openInternal(288)) - Created new resources: null

2025-07-02 11:15:34,854 INFO [main] tez.DagUtils (DagUtils.java:getHiveJarDirectory(1058)) - Jar dir is null / directory doesn't exist. Choosing HIVE_INSTALL_DIR - /user/hadoop/.hiveJars

2025-07-02 11:15:35,179 INFO [main] tez.TezSessionState (TezSessionState.java:getSha(854)) - Computed sha: 3420a6126cfea97266fe35b708da5d5f95a5b158cad390dc4124081a39cf906f for file: file:/usr/lib/hive/lib/hive-exec-3.1.3.jar of length: 40.36MB in 321 ms

2025-07-02 11:15:35,191 INFO [main] tez.DagUtils (DagUtils.java:createLocalResource(842)) - Resource modification time: 1715146950410 for hdfs://dominos-usdp-v3-fun/user/hadoop/.hiveJars/hive-exec-3.1.3-3420a6126cfea97266fe35b708da5d5f95a5b158cad390dc4124081a39cf906f.jar

2025-07-02 11:15:35,240 INFO [main] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.task.io.sort.mb, mr initial value=100, tez(original):tez.runtime.io.sort.mb=null, tez(final):tez.runtime.io.sort.mb=100

2025-07-02 11:15:35,241 INFO [main] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.reduce.shuffle.read.timeout, mr initial value=180000, tez(original):tez.runtime.shuffle.read.timeout=null, tez(final):tez.runtime.shuffle.read.timeout=180000

2025-07-02 11:15:35,241 INFO [main] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.job.speculative.minimum-allowed-tasks, mr initial value=10, tez(original):tez.am.minimum.allowed.speculative.tasks=null, tez(final):tez.am.minimum.allowed.speculative.tasks=10

2025-07-02 11:15:35,241 INFO [main] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.ifile.readahead.bytes, mr initial value=4194304, tez(original):tez.runtime.ifile.readahead.bytes=null, tez(final):tez.runtime.ifile.readahead.bytes=4194304

2025-07-02 11:15:35,241 INFO [main] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.shuffle.ssl.enabled, mr initial value=false, tez(original):tez.runtime.shuffle.ssl.enable=null, tez(final):tez.runtime.shuffle.ssl.enable=false

2025-07-02 11:15:35,241 INFO [main] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.map.sort.spill.percent, mr initial value=0.80, tez(original):tez.runtime.sort.spill.percent=null, tez(final):tez.runtime.sort.spill.percent=0.80

2025-07-02 11:15:35,241 INFO [main] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.job.speculative.speculative-cap-running-tasks, mr initial value=0.1, tez(original):tez.am.proportion.running.tasks.speculatable=null, tez(final):tez.am.proportion.running.tasks.speculatable=0.1

2025-07-02 11:15:35,241 INFO [main] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.job.speculative.speculative-cap-total-tasks, mr initial value=0.01, tez(original):tez.am.proportion.total.tasks.speculatable=null, tez(final):tez.am.proportion.total.tasks.speculatable=0.01

2025-07-02 11:15:35,241 INFO [main] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.ifile.readahead, mr initial value=true, tez(original):tez.runtime.ifile.readahead=null, tez(final):tez.runtime.ifile.readahead=true

2025-07-02 11:15:35,241 INFO [main] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.reduce.shuffle.merge.percent, mr initial value=0.66, tez(original):tez.runtime.shuffle.merge.percent=null, tez(final):tez.runtime.shuffle.merge.percent=0.66

2025-07-02 11:15:35,241 INFO [main] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.reduce.shuffle.parallelcopies, mr initial value=50, tez(original):tez.runtime.shuffle.parallel.copies=null, tez(final):tez.runtime.shuffle.parallel.copies=50

2025-07-02 11:15:35,241 INFO [main] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.job.speculative.retry-after-speculate, mr initial value=15000, tez(original):tez.am.soonest.retry.after.speculate=null, tez(final):tez.am.soonest.retry.after.speculate=15000

2025-07-02 11:15:35,241 INFO [main] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.job.reduce.slowstart.completedmaps, mr initial value=0.95, tez(original):tez.shuffle-vertex-manager.min-src-fraction=null, tez(final):tez.shuffle-vertex-manager.min-src-fraction=0.95

2025-07-02 11:15:35,241 INFO [main] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.reduce.shuffle.memory.limit.percent, mr initial value=0.25, tez(original):tez.runtime.shuffle.memory.limit.percent=null, tez(final):tez.runtime.shuffle.memory.limit.percent=0.25

2025-07-02 11:15:35,241 INFO [main] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.job.speculative.retry-after-no-speculate, mr initial value=1000, tez(original):tez.am.soonest.retry.after.no.speculate=null, tez(final):tez.am.soonest.retry.after.no.speculate=1000

2025-07-02 11:15:35,241 INFO [main] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.task.io.sort.factor, mr initial value=100, tez(original):tez.runtime.io.sort.factor=null, tez(final):tez.runtime.io.sort.factor=100

2025-07-02 11:15:35,241 INFO [main] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.map.output.compress, mr initial value=false, tez(original):tez.runtime.compress=null, tez(final):tez.runtime.compress=false

2025-07-02 11:15:35,242 INFO [main] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.reduce.shuffle.connect.timeout, mr initial value=180000, tez(original):tez.runtime.shuffle.connect.timeout=null, tez(final):tez.runtime.shuffle.connect.timeout=180000

2025-07-02 11:15:35,242 INFO [main] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.reduce.input.buffer.percent, mr initial value=0.0, tez(original):tez.runtime.task.input.post-merge.buffer.percent=null, tez(final):tez.runtime.task.input.post-merge.buffer.percent=0.0

2025-07-02 11:15:35,242 INFO [main] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.map.output.compress.codec, mr initial value=org.apache.hadoop.io.compress.DefaultCodec, tez(original):tez.runtime.compress.codec=null, tez(final):tez.runtime.compress.codec=org.apache.hadoop.io.compress.DefaultCodec

2025-07-02 11:15:35,242 INFO [main] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.task.merge.progress.records, mr initial value=10000, tez(original):tez.runtime.merge.progress.records=null, tez(final):tez.runtime.merge.progress.records=10000

2025-07-02 11:15:35,242 INFO [main] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):map.sort.class, mr initial value=org.apache.hadoop.util.QuickSort, tez(original):tez.runtime.internal.sorter.class=null, tez(final):tez.runtime.internal.sorter.class=org.apache.hadoop.util.QuickSort

2025-07-02 11:15:35,242 INFO [main] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.reduce.shuffle.input.buffer.percent, mr initial value=0.70, tez(original):tez.runtime.shuffle.fetch.buffer.percent=null, tez(final):tez.runtime.shuffle.fetch.buffer.percent=0.70

2025-07-02 11:15:35,242 INFO [main] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.job.counters.max, mr initial value=120, tez(original):tez.counters.max=null, tez(final):tez.counters.max=120

2025-07-02 11:15:35,242 INFO [main] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.job.hdfs-servers, mr initial value=hdfs://dominos-usdp-v3-fun, tez(original):tez.job.fs-servers=null, tez(final):tez.job.fs-servers=hdfs://dominos-usdp-v3-fun

2025-07-02 11:15:35,242 INFO [main] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.job.queuename, mr initial value=default, tez(original):tez.queue.name=default, tez(final):tez.queue.name=default

2025-07-02 11:15:35,242 INFO [main] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.job.maxtaskfailures.per.tracker, mr initial value=3, tez(original):tez.am.maxtaskfailures.per.node=null, tez(final):tez.am.maxtaskfailures.per.node=3

2025-07-02 11:15:35,242 INFO [main] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.task.timeout, mr initial value=600000, tez(original):tez.task.timeout-ms=null, tez(final):tez.task.timeout-ms=600000

2025-07-02 11:15:35,242 INFO [main] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):yarn.app.mapreduce.am.job.task.listener.thread-count, mr initial value=30, tez(original):tez.am.task.listener.thread-count=null, tez(final):tez.am.task.listener.thread-count=30

2025-07-02 11:15:35,261 INFO [main] sqlstd.SQLStdHiveAccessController (SQLStdHiveAccessController.java:<init>(96)) - Created SQLStdHiveAccessController for session context : HiveAuthzSessionContext [sessionString=63fc22ae-87a3-4d13-b59e-6ea5a99a9941, clientType=HIVECLI]

2025-07-02 11:15:35,263 WARN [main] session.SessionState (SessionState.java:setAuthorizerV2Config(950)) - METASTORE_FILTER_HOOK will be ignored, since hive.security.authorization.manager is set to instance of HiveAuthorizerFactory.

2025-07-02 11:15:35,328 INFO [main] metastore.HiveMetaStoreClient (HiveMetaStoreClient.java:open(441)) - Trying to connect to metastore with URI thrift://dc3-dominos-usdp-fun01:9083

2025-07-02 11:15:35,350 INFO [main] metastore.HiveMetaStoreClient (HiveMetaStoreClient.java:open(517)) - Opened a connection to metastore, current connections: 1

2025-07-02 11:15:35,358 INFO [main] metastore.HiveMetaStoreClient (HiveMetaStoreClient.java:open(570)) - Connected to metastore.

2025-07-02 11:15:35,358 INFO [main] metastore.RetryingMetaStoreClient (RetryingMetaStoreClient.java:<init>(97)) - RetryingMetaStoreClient proxy=class org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient ugi=hadoop (auth:SIMPLE) retries=1 delay=1 lifetime=0

2025-07-02 11:15:36,558 INFO - -> 2025-07-02 11:15:35,864 INFO [main] counters.Limits (Limits.java:init(61)) - Counter limits initialized with parameters: GROUP_NAME_MAX=256, MAX_GROUPS=500, COUNTER_NAME_MAX=64, MAX_COUNTERS=1200

2025-07-02 11:15:35,864 INFO [main] counters.Limits (Limits.java:init(61)) - Counter limits initialized with parameters: GROUP_NAME_MAX=256, MAX_GROUPS=500, COUNTER_NAME_MAX=64, MAX_COUNTERS=120

2025-07-02 11:15:35,864 INFO [main] client.TezClient (TezClient.java:<init>(210)) - Tez Client Version: [ component=tez-api, version=0.10.2, revision=22f46fe39a7cf99b24275304e99867b9135caba2, SCM-URL=scm:git:https://gitbox.apache.org/repos/asf/tez.git, buildTime=2023-02-08T02:24:56Z, buildUser=jenkins, buildJavaVersion=1.8.0_362 ]

2025-07-02 11:15:35,864 INFO [main] tez.TezSessionState (TezSessionState.java:openInternal(363)) - Opening new Tez Session (id: 63fc22ae-87a3-4d13-b59e-6ea5a99a9941, scratch dir: hdfs://dominos-usdp-v3-fun/tmp/hive/hadoop/_tez_session_dir/63fc22ae-87a3-4d13-b59e-6ea5a99a9941)

2025-07-02 11:15:35,884 INFO [main] conf.HiveConf (HiveConf.java:getLogIdVar(5037)) - Using the default value passed in for log id: 63fc22ae-87a3-4d13-b59e-6ea5a99a9941

2025-07-02 11:15:35,884 INFO [main] session.SessionState (SessionState.java:updateThreadName(441)) - Updating thread name to 63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main

2025-07-02 11:15:35,954 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] metastore.HiveMetaStoreClient (HiveMetaStoreClient.java:isCompatibleWith(346)) - Mestastore configuration metastore.filter.hook changed from org.apache.hadoop.hive.ql.security.authorization.plugin.AuthorizationMetaStoreFilterHook to org.apache.hadoop.hive.metastore.DefaultMetaStoreFilterHookImpl

2025-07-02 11:15:35,958 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] metastore.HiveMetaStoreClient (HiveMetaStoreClient.java:close(600)) - Closed a connection to metastore, current connections: 0

2025-07-02 11:15:36,152 INFO [Tez session start thread] impl.TimelineReaderClientImpl (TimelineReaderClientImpl.java:serviceInit(97)) - Initialized TimelineReader URI=http://dc3-dominos-usdp-fun02:8198/ws/v2/timeline/, clusterId=dominos-usdp-v3-fun

2025-07-02 11:15:36,342 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] metastore.HiveMetaStoreClient (HiveMetaStoreClient.java:open(441)) - Trying to connect to metastore with URI thrift://dc3-dominos-usdp-fun01:9083

2025-07-02 11:15:36,344 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] metastore.HiveMetaStoreClient (HiveMetaStoreClient.java:open(517)) - Opened a connection to metastore, current connections: 1

2025-07-02 11:15:36,345 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] metastore.HiveMetaStoreClient (HiveMetaStoreClient.java:open(570)) - Connected to metastore.

2025-07-02 11:15:36,345 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] metastore.RetryingMetaStoreClient (RetryingMetaStoreClient.java:<init>(97)) - RetryingMetaStoreClient proxy=class org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient ugi=hadoop (auth:SIMPLE) retries=1 delay=1 lifetime=0

Hive Session ID = 4caadf81-0f27-469e-8de0-87e177d910e3

2025-07-02 11:15:36,366 INFO [pool-7-thread-1] SessionState (SessionState.java:printInfo(1227)) - Hive Session ID = 4caadf81-0f27-469e-8de0-87e177d910e3

2025-07-02 11:15:36,385 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] conf.HiveConf (HiveConf.java:getLogIdVar(5037)) - Using the default value passed in for log id: 63fc22ae-87a3-4d13-b59e-6ea5a99a9941

2025-07-02 11:15:36,386 INFO [pool-7-thread-1] session.SessionState (SessionState.java:createPath(790)) - Created HDFS directory: /tmp/hive/hadoop/4caadf81-0f27-469e-8de0-87e177d910e3

2025-07-02 11:15:36,412 INFO [pool-7-thread-1] session.SessionState (SessionState.java:createPath(790)) - Created local directory: /tmp/hadoop/4caadf81-0f27-469e-8de0-87e177d910e3

2025-07-02 11:15:36,420 INFO [pool-7-thread-1] session.SessionState (SessionState.java:createPath(790)) - Created HDFS directory: /tmp/hive/hadoop/4caadf81-0f27-469e-8de0-87e177d910e3/_tmp_space.db

2025-07-02 11:15:36,420 INFO [pool-7-thread-1] tez.TezSessionState (TezSessionState.java:openInternal(277)) - User of session id 4caadf81-0f27-469e-8de0-87e177d910e3 is hadoop

2025-07-02 11:15:36,441 INFO [pool-7-thread-1] tez.DagUtils (DagUtils.java:localizeResource(1159)) - Localizing resource because it does not exist: file:/usr/lib/hive/auxlib/hudi-hadoop-mr-bundle-0.13.0.jar to dest: hdfs://dominos-usdp-v3-fun/tmp/hive/hadoop/_tez_session_dir/4caadf81-0f27-469e-8de0-87e177d910e3-resources/hudi-hadoop-mr-bundle-0.13.0.jar

2025-07-02 11:15:36,455 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] ql.Driver (Driver.java:compile(554)) - Compiling command(queryId=hadoop_20250702111536_a8fe6b15-57e0-4288-895c-6d4f8fd58503): ALTER TABLE ddp_dmo_dwd.DWD_OrdCusSrvDetail DROP IF EXISTS PARTITION(DT='')

2025-07-02 11:15:36,484 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] hooks.ATSHook (ATSHook.java:<init>(146)) - Created ATS Hook

2025-07-02 11:15:36,484 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] hooks.ATSHook (ATSHook.java:<init>(146)) - Created ATS Hook

2025-07-02 11:15:36,484 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] hooks.ATSHook (ATSHook.java:<init>(146)) - Created ATS Hook

2025-07-02 11:15:37,561 INFO - -> 2025-07-02 11:15:36,669 INFO [Tez session start thread] client.AHSProxy (AHSProxy.java:createAHSProxy(43)) - Connecting to Application History server at dc3-dominos-usdp-fun01/10.30.10.60:10200

2025-07-02 11:15:36,686 INFO [Tez session start thread] client.TezClient (TezClient.java:start(388)) - Session mode. Starting session.

2025-07-02 11:15:36,727 INFO [Tez session start thread] client.ConfiguredRMFailoverProxyProvider (ConfiguredRMFailoverProxyProvider.java:performFailover(100)) - Failing over to rm-dc3-dominos-usdp-fun01

2025-07-02 11:15:36,809 INFO [Tez session start thread] client.TezClientUtils (TezClientUtils.java:setupTezJarsLocalResources(180)) - Using tez.lib.uris value from configuration: hdfs:////dominos-usdp-v3-fun/tez/tez.tar.gz

2025-07-02 11:15:36,809 INFO [Tez session start thread] client.TezClientUtils (TezClientUtils.java:setupTezJarsLocalResources(182)) - Using tez.lib.uris.classpath value from configuration: null

2025-07-02 11:15:36,880 INFO [Tez session start thread] client.TezClient (TezCommonUtils.java:createTezSystemStagingPath(123)) - Tez system stage directory hdfs://dominos-usdp-v3-fun/tmp/hive/hadoop/_tez_session_dir/63fc22ae-87a3-4d13-b59e-6ea5a99a9941/.tez/application_1740624029612_5078 doesn't exist and is created

2025-07-02 11:15:36,913 INFO [Tez session start thread] conf.Configuration (Configuration.java:getConfResourceAsInputStream(2845)) - resource-types.xml not found

2025-07-02 11:15:36,914 INFO [Tez session start thread] resource.ResourceUtils (ResourceUtils.java:addResourcesFileToConf(476)) - Unable to find 'resource-types.xml'.

2025-07-02 11:15:36,948 INFO [Tez session start thread] Configuration.deprecation (Configuration.java:logDeprecation(1441)) - yarn.resourcemanager.system-metrics-publisher.enabled is deprecated. Instead, use yarn.system-metrics-publisher.enabled

2025-07-02 11:15:37,000 INFO [pool-7-thread-1] tez.DagUtils (DagUtils.java:createLocalResource(842)) - Resource modification time: 1751426136955 for hdfs://dominos-usdp-v3-fun/tmp/hive/hadoop/_tez_session_dir/4caadf81-0f27-469e-8de0-87e177d910e3-resources/hudi-hadoop-mr-bundle-0.13.0.jar

2025-07-02 11:15:37,005 INFO [pool-7-thread-1] tez.DagUtils (DagUtils.java:localizeResource(1159)) - Localizing resource because it does not exist: file:/usr/lib/hive/auxlib/hudi-hive-sync-bundle-0.13.0.jar to dest: hdfs://dominos-usdp-v3-fun/tmp/hive/hadoop/_tez_session_dir/4caadf81-0f27-469e-8de0-87e177d910e3-resources/hudi-hive-sync-bundle-0.13.0.jar

2025-07-02 11:15:38,562 INFO - -> 2025-07-02 11:15:37,601 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] metastore.HiveMetaStoreClient (HiveMetaStoreClient.java:isCompatibleWith(346)) - Mestastore configuration metastore.filter.hook changed from org.apache.hadoop.hive.metastore.DefaultMetaStoreFilterHookImpl to org.apache.hadoop.hive.ql.security.authorization.plugin.AuthorizationMetaStoreFilterHook

2025-07-02 11:15:37,602 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] metastore.HiveMetaStoreClient (HiveMetaStoreClient.java:close(600)) - Closed a connection to metastore, current connections: 0

2025-07-02 11:15:37,603 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] ql.Driver (Driver.java:checkConcurrency(285)) - Concurrency mode is disabled, not creating a lock manager

2025-07-02 11:15:37,610 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] metastore.HiveMetaStoreClient (HiveMetaStoreClient.java:open(441)) - Trying to connect to metastore with URI thrift://dc3-dominos-usdp-fun02:9083

2025-07-02 11:15:37,615 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] metastore.HiveMetaStoreClient (HiveMetaStoreClient.java:open(517)) - Opened a connection to metastore, current connections: 1

2025-07-02 11:15:37,620 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] metastore.HiveMetaStoreClient (HiveMetaStoreClient.java:open(570)) - Connected to metastore.

2025-07-02 11:15:37,621 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] metastore.RetryingMetaStoreClient (RetryingMetaStoreClient.java:<init>(97)) - RetryingMetaStoreClient proxy=class org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient ugi=hadoop (auth:SIMPLE) retries=1 delay=1 lifetime=0

2025-07-02 11:15:37,676 INFO [Tez session start thread] impl.YarnClientImpl (YarnClientImpl.java:submitApplication(338)) - Submitted application application_1740624029612_5078

2025-07-02 11:15:37,685 INFO [Tez session start thread] client.TezClient (TezClient.java:start(404)) - The url to track the Tez Session: http://dc3-dominos-usdp-fun01:8088/proxy/application_1740624029612_5078/

2025-07-02 11:15:38,143 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] ql.Driver (Driver.java:compile(666)) - Semantic Analysis Completed (retrial = false)

2025-07-02 11:15:38,145 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] ql.Driver (Driver.java:getSchema(374)) - Returning Hive schema: Schema(fieldSchemas:null, properties:null)

2025-07-02 11:15:38,149 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] ql.Driver (Driver.java:compile(781)) - Completed compiling command(queryId=hadoop_20250702111536_a8fe6b15-57e0-4288-895c-6d4f8fd58503); Time taken: 1.723 seconds

2025-07-02 11:15:38,150 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] reexec.ReExecDriver (ReExecDriver.java:run(156)) - Execution #1 of query

2025-07-02 11:15:38,150 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] ql.Driver (Driver.java:checkConcurrency(285)) - Concurrency mode is disabled, not creating a lock manager

2025-07-02 11:15:38,150 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] ql.Driver (Driver.java:execute(2255)) - Executing command(queryId=hadoop_20250702111536_a8fe6b15-57e0-4288-895c-6d4f8fd58503): ALTER TABLE ddp_dmo_dwd.DWD_OrdCusSrvDetail DROP IF EXISTS PARTITION(DT='')

2025-07-02 11:15:38,153 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] hooks.ATSHook (ATSHook.java:setupAtsExecutor(115)) - Creating ATS executor queue with capacity 64

2025-07-02 11:15:38,177 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] impl.TimelineClientImpl (TimelineClientImpl.java:serviceInit(130)) - Timeline service address: dc3-dominos-usdp-fun01:8188

2025-07-02 11:15:38,295 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] ql.Driver (Driver.java:launchTask(2662)) - Starting task [Stage-0:DDL] in serial mode

2025-07-02 11:15:38,414 INFO [ATS Logger 0] hooks.ATSHook (ATSHook.java:createTimelineDomain(155)) - ATS domain created:hive_63fc22ae-87a3-4d13-b59e-6ea5a99a9941(hadoop,hadoop)

2025-07-02 11:15:38,528 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] ql.Driver (Driver.java:execute(2531)) - Completed executing command(queryId=hadoop_20250702111536_a8fe6b15-57e0-4288-895c-6d4f8fd58503); Time taken: 0.378 seconds

OK

2025-07-02 11:15:38,528 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] ql.Driver (SessionState.java:printInfo(1227)) - OK

2025-07-02 11:15:38,529 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] ql.Driver (Driver.java:checkConcurrency(285)) - Concurrency mode is disabled, not creating a lock manager

Time taken: 2.104 seconds

2025-07-02 11:15:38,529 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] CliDriver (SessionState.java:printInfo(1227)) - Time taken: 2.104 seconds

2025-07-02 11:15:38,530 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] conf.HiveConf (HiveConf.java:getLogIdVar(5037)) - Using the default value passed in for log id: 63fc22ae-87a3-4d13-b59e-6ea5a99a9941

2025-07-02 11:15:38,530 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] session.SessionState (SessionState.java:resetThreadName(452)) - Resetting thread name to main

2025-07-02 11:15:38,530 INFO [main] conf.HiveConf (HiveConf.java:getLogIdVar(5037)) - Using the default value passed in for log id: 63fc22ae-87a3-4d13-b59e-6ea5a99a9941

2025-07-02 11:15:38,530 INFO [main] session.SessionState (SessionState.java:updateThreadName(441)) - Updating thread name to 63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main

2025-07-02 11:15:38,533 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] ql.Driver (Driver.java:compile(554)) - Compiling command(queryId=hadoop_20250702111538_f08ba63c-7b09-48c8-86fe-f29aa249329c):

ALTER TABLE ddp_dmo_dwd.DWD_OrdCusSrvDetail ADD IF NOT EXISTS PARTITION(DT='')

2025-07-02 11:15:38,551 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] hooks.ATSHook (ATSHook.java:<init>(146)) - Created ATS Hook

2025-07-02 11:15:38,551 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] hooks.ATSHook (ATSHook.java:<init>(146)) - Created ATS Hook

2025-07-02 11:15:38,551 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] hooks.ATSHook (ATSHook.java:<init>(146)) - Created ATS Hook

2025-07-02 11:15:38,559 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] ql.Driver (Driver.java:checkConcurrency(285)) - Concurrency mode is disabled, not creating a lock manager

2025-07-02 11:15:39,286 INFO - process has exited. execute path:/tmp/dolphinscheduler/exec/process/hadoop/16836554651104/18167664743392_10/32581/56672, processId:1190 ,exitStatusCode:1 ,processWaitForStatus:true ,processExitValue:1

2025-07-02 11:15:39,287 INFO - Send task execute result to master, the current task status: TaskExecutionStatus{code=6, desc='failure'}

2025-07-02 11:15:39,287 INFO - Remove the current task execute context from worker cache

2025-07-02 11:15:39,287 INFO - The current execute mode isn't develop mode, will clear the task execute file: /tmp/dolphinscheduler/exec/process/hadoop/16836554651104/18167664743392_10/32581/56672

2025-07-02 11:15:39,288 INFO - Success clear the task execute file: /tmp/dolphinscheduler/exec/process/hadoop/16836554651104/18167664743392_10/32581/56672

2025-07-02 11:15:39,562 INFO - -> 2025-07-02 11:15:38,630 INFO [pool-7-thread-1] tez.DagUtils (DagUtils.java:createLocalResource(842)) - Resource modification time: 1751426138580 for hdfs://dominos-usdp-v3-fun/tmp/hive/hadoop/_tez_session_dir/4caadf81-0f27-469e-8de0-87e177d910e3-resources/hudi-hive-sync-bundle-0.13.0.jar

2025-07-02 11:15:38,630 INFO [pool-7-thread-1] tez.TezSessionState (TezSessionState.java:openInternal(288)) - Created new resources: null

2025-07-02 11:15:38,644 INFO [pool-7-thread-1] tez.DagUtils (DagUtils.java:getHiveJarDirectory(1058)) - Jar dir is null / directory doesn't exist. Choosing HIVE_INSTALL_DIR - /user/hadoop/.hiveJars

2025-07-02 11:15:38,666 INFO [pool-7-thread-1] tez.DagUtils (DagUtils.java:createLocalResource(842)) - Resource modification time: 1715146950410 for hdfs://dominos-usdp-v3-fun/user/hadoop/.hiveJars/hive-exec-3.1.3-3420a6126cfea97266fe35b708da5d5f95a5b158cad390dc4124081a39cf906f.jar

2025-07-02 11:15:38,697 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] ql.Driver (Driver.java:compile(666)) - Semantic Analysis Completed (retrial = false)

2025-07-02 11:15:38,698 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] ql.Driver (Driver.java:getSchema(374)) - Returning Hive schema: Schema(fieldSchemas:null, properties:null)

2025-07-02 11:15:38,698 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] ql.Driver (Driver.java:compile(781)) - Completed compiling command(queryId=hadoop_20250702111538_f08ba63c-7b09-48c8-86fe-f29aa249329c); Time taken: 0.165 seconds

2025-07-02 11:15:38,698 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] reexec.ReExecDriver (ReExecDriver.java:run(156)) - Execution #1 of query

2025-07-02 11:15:38,698 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] ql.Driver (Driver.java:checkConcurrency(285)) - Concurrency mode is disabled, not creating a lock manager

2025-07-02 11:15:38,698 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] ql.Driver (Driver.java:execute(2255)) - Executing command(queryId=hadoop_20250702111538_f08ba63c-7b09-48c8-86fe-f29aa249329c):

ALTER TABLE ddp_dmo_dwd.DWD_OrdCusSrvDetail ADD IF NOT EXISTS PARTITION(DT='')

2025-07-02 11:15:38,700 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] ql.Driver (Driver.java:launchTask(2662)) - Starting task [Stage-0:DDL] in serial mode

2025-07-02 11:15:38,726 INFO [pool-7-thread-1] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.task.io.sort.mb, mr initial value=100, tez(original):tez.runtime.io.sort.mb=null, tez(final):tez.runtime.io.sort.mb=100

2025-07-02 11:15:38,726 INFO [pool-7-thread-1] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.reduce.shuffle.read.timeout, mr initial value=180000, tez(original):tez.runtime.shuffle.read.timeout=null, tez(final):tez.runtime.shuffle.read.timeout=180000

2025-07-02 11:15:38,726 INFO [pool-7-thread-1] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.job.speculative.minimum-allowed-tasks, mr initial value=10, tez(original):tez.am.minimum.allowed.speculative.tasks=null, tez(final):tez.am.minimum.allowed.speculative.tasks=10

2025-07-02 11:15:38,726 INFO [pool-7-thread-1] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.ifile.readahead.bytes, mr initial value=4194304, tez(original):tez.runtime.ifile.readahead.bytes=null, tez(final):tez.runtime.ifile.readahead.bytes=4194304

2025-07-02 11:15:38,726 INFO [pool-7-thread-1] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.shuffle.ssl.enabled, mr initial value=false, tez(original):tez.runtime.shuffle.ssl.enable=null, tez(final):tez.runtime.shuffle.ssl.enable=false

2025-07-02 11:15:38,726 INFO [pool-7-thread-1] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.map.sort.spill.percent, mr initial value=0.80, tez(original):tez.runtime.sort.spill.percent=null, tez(final):tez.runtime.sort.spill.percent=0.80

2025-07-02 11:15:38,726 INFO [pool-7-thread-1] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.job.speculative.speculative-cap-running-tasks, mr initial value=0.1, tez(original):tez.am.proportion.running.tasks.speculatable=null, tez(final):tez.am.proportion.running.tasks.speculatable=0.1

2025-07-02 11:15:38,726 INFO [pool-7-thread-1] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.job.speculative.speculative-cap-total-tasks, mr initial value=0.01, tez(original):tez.am.proportion.total.tasks.speculatable=null, tez(final):tez.am.proportion.total.tasks.speculatable=0.01

2025-07-02 11:15:38,726 INFO [pool-7-thread-1] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.ifile.readahead, mr initial value=true, tez(original):tez.runtime.ifile.readahead=null, tez(final):tez.runtime.ifile.readahead=true

2025-07-02 11:15:38,726 INFO [pool-7-thread-1] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.reduce.shuffle.merge.percent, mr initial value=0.66, tez(original):tez.runtime.shuffle.merge.percent=null, tez(final):tez.runtime.shuffle.merge.percent=0.66

2025-07-02 11:15:38,726 INFO [pool-7-thread-1] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.reduce.shuffle.parallelcopies, mr initial value=50, tez(original):tez.runtime.shuffle.parallel.copies=null, tez(final):tez.runtime.shuffle.parallel.copies=50

2025-07-02 11:15:38,726 INFO [pool-7-thread-1] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.job.speculative.retry-after-speculate, mr initial value=15000, tez(original):tez.am.soonest.retry.after.speculate=null, tez(final):tez.am.soonest.retry.after.speculate=15000

2025-07-02 11:15:38,726 INFO [pool-7-thread-1] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.job.reduce.slowstart.completedmaps, mr initial value=0.95, tez(original):tez.shuffle-vertex-manager.min-src-fraction=null, tez(final):tez.shuffle-vertex-manager.min-src-fraction=0.95

2025-07-02 11:15:38,727 INFO [pool-7-thread-1] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.reduce.shuffle.memory.limit.percent, mr initial value=0.25, tez(original):tez.runtime.shuffle.memory.limit.percent=null, tez(final):tez.runtime.shuffle.memory.limit.percent=0.25

2025-07-02 11:15:38,727 INFO [pool-7-thread-1] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.job.speculative.retry-after-no-speculate, mr initial value=1000, tez(original):tez.am.soonest.retry.after.no.speculate=null, tez(final):tez.am.soonest.retry.after.no.speculate=1000

2025-07-02 11:15:38,727 INFO [pool-7-thread-1] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.task.io.sort.factor, mr initial value=100, tez(original):tez.runtime.io.sort.factor=null, tez(final):tez.runtime.io.sort.factor=100

2025-07-02 11:15:38,727 INFO [pool-7-thread-1] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.map.output.compress, mr initial value=false, tez(original):tez.runtime.compress=null, tez(final):tez.runtime.compress=false

2025-07-02 11:15:38,727 INFO [pool-7-thread-1] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.reduce.shuffle.connect.timeout, mr initial value=180000, tez(original):tez.runtime.shuffle.connect.timeout=null, tez(final):tez.runtime.shuffle.connect.timeout=180000

2025-07-02 11:15:38,727 INFO [pool-7-thread-1] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.reduce.input.buffer.percent, mr initial value=0.0, tez(original):tez.runtime.task.input.post-merge.buffer.percent=null, tez(final):tez.runtime.task.input.post-merge.buffer.percent=0.0

2025-07-02 11:15:38,727 INFO [pool-7-thread-1] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.map.output.compress.codec, mr initial value=org.apache.hadoop.io.compress.DefaultCodec, tez(original):tez.runtime.compress.codec=null, tez(final):tez.runtime.compress.codec=org.apache.hadoop.io.compress.DefaultCodec

2025-07-02 11:15:38,727 INFO [pool-7-thread-1] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.task.merge.progress.records, mr initial value=10000, tez(original):tez.runtime.merge.progress.records=null, tez(final):tez.runtime.merge.progress.records=10000

2025-07-02 11:15:38,727 INFO [pool-7-thread-1] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):map.sort.class, mr initial value=org.apache.hadoop.util.QuickSort, tez(original):tez.runtime.internal.sorter.class=null, tez(final):tez.runtime.internal.sorter.class=org.apache.hadoop.util.QuickSort

2025-07-02 11:15:38,727 INFO [pool-7-thread-1] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.reduce.shuffle.input.buffer.percent, mr initial value=0.70, tez(original):tez.runtime.shuffle.fetch.buffer.percent=null, tez(final):tez.runtime.shuffle.fetch.buffer.percent=0.70

2025-07-02 11:15:38,727 INFO [pool-7-thread-1] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.job.counters.max, mr initial value=120, tez(original):tez.counters.max=null, tez(final):tez.counters.max=120

2025-07-02 11:15:38,727 INFO [pool-7-thread-1] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.job.hdfs-servers, mr initial value=hdfs://dominos-usdp-v3-fun, tez(original):tez.job.fs-servers=null, tez(final):tez.job.fs-servers=hdfs://dominos-usdp-v3-fun

2025-07-02 11:15:38,727 INFO [pool-7-thread-1] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.job.queuename, mr initial value=default, tez(original):tez.queue.name=default, tez(final):tez.queue.name=default

2025-07-02 11:15:38,727 INFO [pool-7-thread-1] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.job.maxtaskfailures.per.tracker, mr initial value=3, tez(original):tez.am.maxtaskfailures.per.node=null, tez(final):tez.am.maxtaskfailures.per.node=3

2025-07-02 11:15:38,727 INFO [pool-7-thread-1] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):mapreduce.task.timeout, mr initial value=600000, tez(original):tez.task.timeout-ms=null, tez(final):tez.task.timeout-ms=600000

2025-07-02 11:15:38,727 INFO [pool-7-thread-1] tez.TezSessionState (TezSessionState.java:setupTezParamsBasedOnMR(562)) - Config: mr(unset):yarn.app.mapreduce.am.job.task.listener.thread-count, mr initial value=30, tez(original):tez.am.task.listener.thread-count=null, tez(final):tez.am.task.listener.thread-count=30

2025-07-02 11:15:38,731 INFO [pool-7-thread-1] sqlstd.SQLStdHiveAccessController (SQLStdHiveAccessController.java:<init>(96)) - Created SQLStdHiveAccessController for session context : HiveAuthzSessionContext [sessionString=4caadf81-0f27-469e-8de0-87e177d910e3, clientType=HIVECLI]

2025-07-02 11:15:38,731 WARN [pool-7-thread-1] session.SessionState (SessionState.java:setAuthorizerV2Config(950)) - METASTORE_FILTER_HOOK will be ignored, since hive.security.authorization.manager is set to instance of HiveAuthorizerFactory.

2025-07-02 11:15:38,735 INFO [pool-7-thread-1] metastore.HiveMetaStoreClient (HiveMetaStoreClient.java:open(441)) - Trying to connect to metastore with URI thrift://dc3-dominos-usdp-fun02:9083

2025-07-02 11:15:38,739 INFO [pool-7-thread-1] metastore.HiveMetaStoreClient (HiveMetaStoreClient.java:open(517)) - Opened a connection to metastore, current connections: 2

2025-07-02 11:15:38,745 INFO [pool-7-thread-1] metastore.HiveMetaStoreClient (HiveMetaStoreClient.java:open(570)) - Connected to metastore.

2025-07-02 11:15:38,745 INFO [pool-7-thread-1] metastore.RetryingMetaStoreClient (RetryingMetaStoreClient.java:<init>(97)) - RetryingMetaStoreClient proxy=class org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient ugi=hadoop (auth:SIMPLE) retries=1 delay=1 lifetime=0

2025-07-02 11:15:38,750 INFO [pool-7-thread-1] client.TezClient (TezClient.java:<init>(210)) - Tez Client Version: [ component=tez-api, version=0.10.2, revision=22f46fe39a7cf99b24275304e99867b9135caba2, SCM-URL=scm:git:https://gitbox.apache.org/repos/asf/tez.git, buildTime=2023-02-08T02:24:56Z, buildUser=jenkins, buildJavaVersion=1.8.0_362 ]

2025-07-02 11:15:38,750 INFO [pool-7-thread-1] tez.TezSessionState (TezSessionState.java:openInternal(363)) - Opening new Tez Session (id: 4caadf81-0f27-469e-8de0-87e177d910e3, scratch dir: hdfs://dominos-usdp-v3-fun/tmp/hive/hadoop/_tez_session_dir/4caadf81-0f27-469e-8de0-87e177d910e3)

2025-07-02 11:15:38,773 INFO [pool-7-thread-1] impl.TimelineReaderClientImpl (TimelineReaderClientImpl.java:serviceInit(97)) - Initialized TimelineReader URI=http://dc3-dominos-usdp-fun02:8198/ws/v2/timeline/, clusterId=dominos-usdp-v3-fun

2025-07-02 11:15:38,779 ERROR [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] exec.DDLTask (DDLTask.java:failed(927)) - Failed

org.apache.hadoop.hive.ql.metadata.HiveException: partition spec is invalid; field dt does not exist or is empty

at org.apache.hadoop.hive.ql.metadata.Partition.createMetaPartitionObject(Partition.java:129)

at org.apache.hadoop.hive.ql.metadata.Hive.convertAddSpecToMetaPartition(Hive.java:2525)

at org.apache.hadoop.hive.ql.metadata.Hive.createPartitions(Hive.java:2466)

at org.apache.hadoop.hive.ql.exec.DDLTask.addPartitions(DDLTask.java:1320)

at org.apache.hadoop.hive.ql.exec.DDLTask.execute(DDLTask.java:466)

at org.apache.hadoop.hive.ql.exec.Task.executeTask(Task.java:210)

at org.apache.hadoop.hive.ql.exec.TaskRunner.runSequential(TaskRunner.java:97)

at org.apache.hadoop.hive.ql.Driver.launchTask(Driver.java:2664)

at org.apache.hadoop.hive.ql.Driver.execute(Driver.java:2335)

at org.apache.hadoop.hive.ql.Driver.runInternal(Driver.java:2011)

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1709)

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1703)

at org.apache.hadoop.hive.ql.reexec.ReExecDriver.run(ReExecDriver.java:157)

at org.apache.hadoop.hive.ql.reexec.ReExecDriver.run(ReExecDriver.java:218)

at org.apache.hadoop.hive.cli.CliDriver.processLocalCmd(CliDriver.java:239)

at org.apache.hadoop.hive.cli.CliDriver.processCmd(CliDriver.java:188)

at org.apache.hadoop.hive.cli.CliDriver.processLine(CliDriver.java:402)

at org.apache.hadoop.hive.cli.CliDriver.processLine(CliDriver.java:335)

at org.apache.hadoop.hive.cli.CliDriver.executeDriver(CliDriver.java:787)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:759)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:683)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:323)

at org.apache.hadoop.util.RunJar.main(RunJar.java:236)

2025-07-02 11:15:38,790 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] reexec.ReOptimizePlugin (ReOptimizePlugin.java:run(70)) - ReOptimization: retryPossible: false

FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask. partition spec is invalid; field dt does not exist or is empty

2025-07-02 11:15:38,791 ERROR [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] ql.Driver (SessionState.java:printError(1250)) - FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask. partition spec is invalid; field dt does not exist or is empty

2025-07-02 11:15:38,792 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] ql.Driver (Driver.java:execute(2531)) - Completed executing command(queryId=hadoop_20250702111538_f08ba63c-7b09-48c8-86fe-f29aa249329c); Time taken: 0.094 seconds

2025-07-02 11:15:38,792 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] ql.Driver (Driver.java:checkConcurrency(285)) - Concurrency mode is disabled, not creating a lock manager

2025-07-02 11:15:38,793 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] conf.HiveConf (HiveConf.java:getLogIdVar(5037)) - Using the default value passed in for log id: 63fc22ae-87a3-4d13-b59e-6ea5a99a9941

2025-07-02 11:15:38,793 INFO [63fc22ae-87a3-4d13-b59e-6ea5a99a9941 main] session.SessionState (SessionState.java:resetThreadName(452)) - Resetting thread name to main

2025-07-02 11:15:38,793 INFO [main] conf.HiveConf (HiveConf.java:getLogIdVar(5037)) - Using the default value passed in for log id: 63fc22ae-87a3-4d13-b59e-6ea5a99a9941

2025-07-02 11:15:38,799 INFO [main] tez.TezSessionPoolManager (TezSessionPoolManager.java:closeIfNotDefault(351)) - Closing tez session if not default: sessionId=63fc22ae-87a3-4d13-b59e-6ea5a99a9941, queueName=null, user=hadoop, doAs=false, isOpen=false, isDefault=false

2025-07-02 11:15:38,800 INFO [Tez session start thread] client.TezClient (TezClient.java:stop(731)) - Shutting down Tez Session, sessionName=HIVE-63fc22ae-87a3-4d13-b59e-6ea5a99a9941, applicationId=application_1740624029612_5078

2025-07-02 11:15:38,815 INFO [Tez session start thread] client.TezClient (TezClient.java:stop(777)) - Could not connect to AM, killing session via YARN, sessionName=HIVE-63fc22ae-87a3-4d13-b59e-6ea5a99a9941, applicationId=application_1740624029612_5078

2025-07-02 11:15:38,824 INFO [main] tez.TezSessionState (TezSessionState.java:cleanupDagResources(721)) - Attemting to clean up resources for 63fc22ae-87a3-4d13-b59e-6ea5a99a9941: hdfs://dominos-usdp-v3-fun/tmp/hive/hadoop/_tez_session_dir/63fc22ae-87a3-4d13-b59e-6ea5a99a9941-resources; 0 additional files, 2 localized resources

2025-07-02 11:15:38,839 INFO [pool-7-thread-1] client.AHSProxy (AHSProxy.java:createAHSProxy(43)) - Connecting to Application History server at dc3-dominos-usdp-fun01/10.30.10.60:10200

2025-07-02 11:15:38,840 INFO [pool-7-thread-1] client.TezClient (TezClient.java:start(388)) - Session mode. Starting session.

2025-07-02 11:15:38,840 INFO [pool-7-thread-1] client.ConfiguredRMFailoverProxyProvider (ConfiguredRMFailoverProxyProvider.java:performFailover(100)) - Failing over to rm-dc3-dominos-usdp-fun01

2025-07-02 11:15:38,851 INFO [pool-7-thread-1] client.TezClientUtils (TezClientUtils.java:setupTezJarsLocalResources(180)) - Using tez.lib.uris value from configuration: hdfs:////dominos-usdp-v3-fun/tez/tez.tar.gz

2025-07-02 11:15:38,851 INFO [pool-7-thread-1] client.TezClientUtils (TezClientUtils.java:setupTezJarsLocalResources(182)) - Using tez.lib.uris.classpath value from configuration: null

2025-07-02 11:15:38,855 INFO [main] session.SessionState (SessionState.java:dropPathAndUnregisterDeleteOnExit(885)) - Deleted directory: /tmp/hive/hadoop/63fc22ae-87a3-4d13-b59e-6ea5a99a9941 on fs with scheme hdfs

2025-07-02 11:15:38,856 INFO [main] session.SessionState (SessionState.java:dropPathAndUnregisterDeleteOnExit(885)) - Deleted directory: /tmp/hadoop/63fc22ae-87a3-4d13-b59e-6ea5a99a9941 on fs with scheme file

2025-07-02 11:15:38,857 INFO [main] metastore.HiveMetaStoreClient (HiveMetaStoreClient.java:close(600)) - Closed a connection to metastore, current connections: 1

2025-07-02 11:15:39,565 INFO - FINALIZE_SESSION
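The log above shows both statements running with an empty partition value (`DT=''`). The `DROP IF EXISTS` returns OK, while the `ADD IF NOT EXISTS` fails with `partition spec is invalid; field dt does not exist or is empty`, and the task exits with status 1. One plausible cause, which the log alone does not confirm, is that the parameter meant to supply `DT` was never substituted before the command ran. Below is a minimal sketch of a guard that refuses to build the DDL when the value is empty. The helper name `build_partition_ddl` and the `YYYY-MM-DD` format check are assumptions for illustration only; they are not part of Hive or DolphinScheduler.

```python
import re
from typing import List

# Assumed partition format (YYYY-MM-DD); adjust to the table's real convention.
_DT_PATTERN = re.compile(r"^\d{4}-\d{2}-\d{2}$")


def build_partition_ddl(table: str, dt: str) -> List[str]:
    """Hypothetical helper: return the DROP/ADD partition statements,
    raising ValueError instead of emitting PARTITION(DT='')."""
    dt = (dt or "").strip()
    if not dt:
        # This is the situation seen in the log: DT resolved to an empty string.
        raise ValueError("DT is empty; check the scheduler parameter that supplies it")
    if not _DT_PATTERN.match(dt):
        raise ValueError(f"unexpected DT value: {dt!r}")
    return [
        f"ALTER TABLE {table} DROP IF EXISTS PARTITION(DT='{dt}')",
        f"ALTER TABLE {table} ADD IF NOT EXISTS PARTITION(DT='{dt}')",
    ]


if __name__ == "__main__":
    for stmt in build_partition_ddl("ddp_dmo_dwd.DWD_OrdCusSrvDetail", "2025-07-01"):
        print(stmt + ";")
```

Failing before Hive starts keeps the error next to the real cause (the missing parameter) instead of surfacing later as a `DDLTask` exception.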

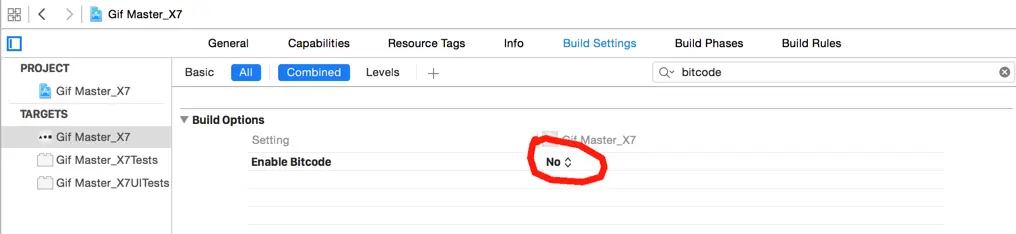

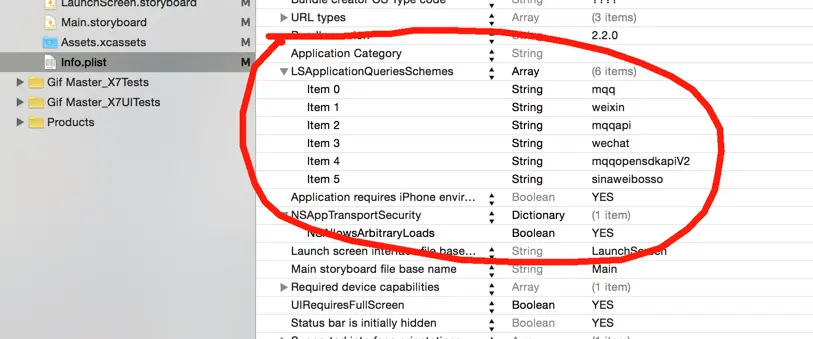
