最近在学习scrapy框架中写爬虫,被坑爹的空格键和tab键给害惨了。上代码:

错误代码:

import scrapy

import re

class GithubSpider(scrapy.Spider):

name = 'Github'

allowed_domains = ['github.com']

start_urls = ['https://github.com/login']

def parse(self, response):

authenticity_token = response.xpath("//input[@name='authenticity_token']/@value").extract_first()

utf8 = response.xpath("//input[@name='utf8']/@value").extract_first()

commit = response.xpath("//input[@name='commit']/@value").extract_first()

post_data = dict(

login="noobpythoner",

password="zhoudawei123",

authenticity_token=authenticity_token,

utf8=utf8,

commit=commit

)

yield scrapy.FormRequest(

"https: // github.com / session",

formdata=post_data,

callback=self.after_login

)

def after_login(self, response):

# with open("./a.html", "wb", encoding="utf-8") as file:

# file.write(response.body.decode())

print(re.findall("noobpythoner|NoobPythoner", response.body.decode()))

# print(response.body.decode())

上述代码错误之处在于: scrapy.FormRequest()函数的第一个参数,这是直接从浏览器复制过来的Url地址,正是由于url地址中的空格导致了请求不成功,返回结果没有任何数据:

正确的代码:

import scrapy

import re

class GithubSpider(scrapy.Spider):

name = 'Github'

allowed_domains = ['github.com']

start_urls = ['https://github.com/login']

def parse(self, response):

authenticity_token = response.xpath("//input[@name='authenticity_token']/@value").extract_first()

utf8 = response.xpath("//input[@name='utf8']/@value").extract_first()

commit = response.xpath("//input[@name='commit']/@value").extract_first()

post_data = dict(

login="noobpythoner",

password="zhoudawei123",

authenticity_token=authenticity_token,

utf8=utf8,

commit=commit

)

yield scrapy.FormRequest(

"https://github.com/session",

formdata=post_data,

callback=self.after_login

)

def after_login(self, response):

# with open("./a.html", "wb", encoding="utf-8") as file:

# file.write(response.body.decode())

print(re.findall("noobpythoner|NoobPythoner", response.body.decode()))

# print(response.body.decode())

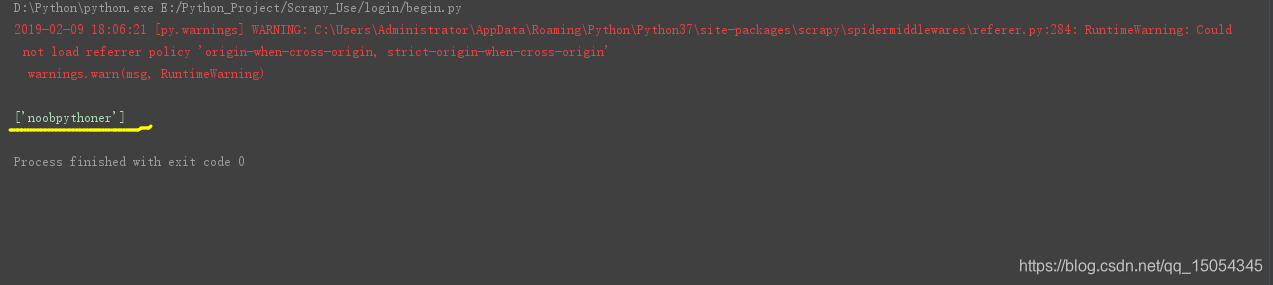

这下输出结果如下:

本文分享了使用Scrapy框架进行爬虫开发时遇到的问题,特别是关于如何正确处理URL中的空格,避免爬虫请求失败。通过对比错误与正确代码,详细解释了问题所在及解决方法。

本文分享了使用Scrapy框架进行爬虫开发时遇到的问题,特别是关于如何正确处理URL中的空格,避免爬虫请求失败。通过对比错误与正确代码,详细解释了问题所在及解决方法。

4238

4238

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?