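1. Overview

This article simulates a batch of data and processes it with several versions of a Python script that combine multiprocessing, multithreading and coroutines. It then compares how long each version takes on the same data, to show how much each of these techniques actually speeds things up.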

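2. Data

The input is a batch of simulated crawler data. The business logic is simple: extract every suggestion (autocomplete term) from the data and assign each one a weight.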

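2.1 Raw data

- The data is split by language, with one directory per language. This article simulates four directories: en, de, es and tr

- Each directory contains 10 JSON files: data1.json to data10.json

- Each JSON file has 200,000 lines and is about 80 MB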

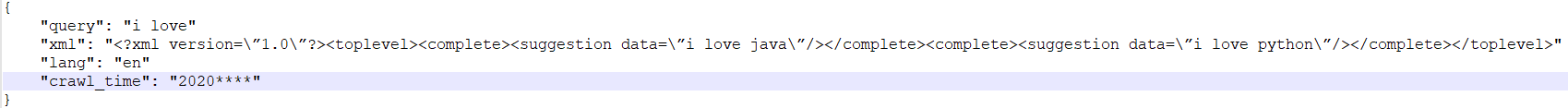

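- Each line is one JSON record with the following structure:

Each record has an xml field. The xml field contains one firstlevel tag, and a firstlevel tag can contain several complete tags. Each complete tag corresponds to one suggestion, which is stored in the data attribute of its suggestion child tag. Besides xml, the scripts below also read each record's query field and its crawl_time field, whose first four characters are the year.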
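To make the structure concrete, here is a hypothetical record. The field names (query, crawl_time, xml) are the ones the scripts read, but the values and the exact tag layout are invented for illustration. The scripts themselves extract suggestions with a regular expression. This sketch uses `xml.etree.ElementTree` instead, which assumes the xml field is well-formed XML.

```python
import json
import xml.etree.ElementTree as ET

# Hypothetical sample line: real records may carry more fields and attributes
SAMPLE_LINE = json.dumps({
    "query": "pyth",
    "crawl_time": "2019-06-01 12:00:00",
    "xml": (
        "<firstlevel>"
        '<complete><suggestion data="python"/></complete>'
        '<complete><suggestion data="python tutorial"/></complete>'
        '<complete><suggestion data="learn python"/></complete>'
        "</firstlevel>"
    ),
})


def extract_suggestions(line):
    """Return the data attribute of every <suggestion> tag in the record's xml field."""
    record = json.loads(line)
    root = ET.fromstring(record["xml"])  # the <firstlevel> element
    return [node.get("data").strip() for node in root.iter("suggestion")]


print(extract_suggestions(SAMPLE_LINE))
# ['python', 'python tutorial', 'learn python']
```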

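2.2 Final data

Each language directory produces one output file. For example, the en directory produces an en_suggestion file that collects every suggestion from every file in that directory, one per line, in the format: suggestion\tweight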
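The weight is computed inside `process()` in the scripts below. Here is the same rule restated as standalone functions (the names `weight` and `format_line` exist only for this illustration). It starts from 1000, subtracts the query length and the record's age in years, and subtracts another 500 when the suggestion does not start with the query.

```python
import datetime


def weight(word, query, crawl_time, now_year=None):
    """Same formula as process(): 1000 - len(query) - age_in_years - prefix_penalty."""
    if now_year is None:
        now_year = datetime.datetime.now().year
    age_in_years = now_year - int(crawl_time[0:4])  # crawl_time starts with the year
    prefix_penalty = 0 if word.startswith(query) else 500
    return 1000 - len(query) - age_in_years - prefix_penalty


def format_line(word, query, crawl_time, now_year=None):
    """One output line: the suggestion, a tab, then the weight."""
    return "%s\t%d\n" % (word, weight(word, query, crawl_time, now_year))


# Fixing now_year keeps the example reproducible
print(repr(format_line("python tutorial", "pyth", "2019-06-01", now_year=2020)))
# 'python tutorial\t995\n'
print(repr(format_line("learn python", "pyth", "2019-06-01", now_year=2020)))
# 'learn python\t495\n'
```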

3. Environment

The scripts were run on the following machine:

- Python: 3.7

- CPU: 2 cores, 4 threads

- OS: Windows 10

- RAM: 12 GB

4. Getting started

4.1 Version one: single process + single thread

All of the code runs in the main thread of the main process (log prefix [MainProcess - MainThread]). Total time of three runs:

| Run | Total time (s) |
|---|---|
| 1 | 263.16 |
| 2 | 236.77 |
| 3 | 232.80 |
| Average | 244.24 |

```python
import datetime
import json
import logging
import os
import re
import time

DATA_PATH = "./crawl_data/"
OUT_PATH = "./processed_data/"

logging.basicConfig(
    level=logging.INFO,
    filename="script_v1.log",
    filemode="a",
    format="[%(processName)s - %(threadName)s] [%(lineno)d] [%(message)s]",
)


def write(lang, words_list):
    # exist_ok=True: no error if the output directory already exists
    os.makedirs(OUT_PATH, mode=0o700, exist_ok=True)
    path = os.path.normpath(os.path.join(OUT_PATH, lang + "_suggestion"))
    with open(path, mode="w", encoding="utf8") as f:
        for words in words_list:
            f.writelines(words)  # each item is already "suggestion\tweight\n"


def process(path, lines):
    # Turn raw JSON lines into "suggestion\tweight\n" output lines
    failed_line = 0
    res = []
    for line in lines:
        try:
            data = json.loads(line)
            query = data["query"]
            xml = data["xml"]
            crawl_time = data["crawl_time"]
            # Age of the record in years; crawl_time starts with a 4-digit year
            year = datetime.datetime.now().year - int(crawl_time[0:4])
            # Every data="..." value that follows "suggestion " in the xml string
            words = re.finditer(r"(?<=(suggestion data=\")).*?(?=(\"))", xml, re.I)
            for match in words:
                word = match.group().strip()
                # Shorter queries, newer records and prefix matches get higher weights
                weight = 1000 - len(query) - year - (0 if word.startswith(query) else 500)
                res.append("%s\t%d\n" % (word, weight))
        except Exception as e:
            logging.error("[" + path + "] failed to process line: " + str(e))
            failed_line += 1
    if failed_line:
        logging.warning("[" + path + "] failed lines: " + str(failed_line))
    return res


def read(root, files):
    res = []
    for file in files:
        path = os.path.normpath(os.path.join(root, file))
        with open(path, mode="r", encoding="utf8") as f:
            lines = f.readlines()
        logging.debug("[" + path + "] total lines: " + str(len(lines)))
        res.append(process(path, lines))
    return res


def main():
    data_root = os.path.normpath(DATA_PATH)
    for root, dirs, files in os.walk(DATA_PATH):
        root = os.path.normpath(root)
        # Only the per-language sub-directories (en, de, ...) hold data files
        if root == data_root or not files:
            continue
        logging.debug("[" + root + "] total files: " + str(len(files)))
        words_list = read(root, files)
        write(os.path.basename(root), words_list)


if __name__ == "__main__":
    start_time = time.time()
    main()
    logging.info("total elapsed: " + str(time.time() - start_time) + " s")
```

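4.2 Version two: single process + multiple threads

The Python interpreter most of us use is CPython, and CPython's memory management is not thread-safe. CPython therefore has a GIL (global interpreter lock), which prevents multiple threads from executing Python bytecode at the same time, so only one thread runs Python code at any given moment. Because switching between threads also costs time, multithreaded code can even end up slower than single-threaded code.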
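A minimal way to see this on a standard (GIL-enabled) CPython build is to run the same pure-Python loop four times in a row, then in four threads. The function names and loop size are arbitrary choices for this sketch. Absolute times depend on the machine, but the threaded run should not be meaningfully faster than the sequential one.

```python
import threading
import time


def count_down(n):
    # Pure-Python CPU-bound loop: the thread holds the GIL while running bytecode
    while n > 0:
        n -= 1


def run_sequential(n, workers):
    start = time.perf_counter()
    for _ in range(workers):
        count_down(n)
    return time.perf_counter() - start


def run_threaded(n, workers):
    start = time.perf_counter()
    threads = [threading.Thread(target=count_down, args=(n,)) for _ in range(workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return time.perf_counter() - start


if __name__ == "__main__":
    n, workers = 2_000_000, 4
    print(f"sequential: {run_sequential(n, workers):.2f} s")
    print(f"4 threads:  {run_threaded(n, workers):.2f} s")
```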

Version one was modified so that each language directory is handled by its own thread. Per-directory times (from each thread's log line) and the total, in seconds:

| Run | de | en | es | tr | Total |
|---|---|---|---|---|---|
| 1 | 236.12 | 235.63 | 237.12 | 238.19 | 238.61 |
| 2 | 241.35 | 242.34 | 241.35 | 241.21 | 242.69 |
| 3 | 241.62 | 241.92 | 241.16 | 242.71 | 243.15 |

Average total: 241.49 s. Version one's three runs average 244.24 s, so the difference is only 2.75 s: four threads bring essentially no speedup.

```python
import datetime
import json
import logging
import os
import re
import threading
import time

DATA_PATH = "./crawl_data/"
OUT_PATH = "./processed_data/"

logging.basicConfig(
    level=logging.INFO,
    filename="script_v2.log",
    filemode="a",
    format="[%(processName)s - %(threadName)s] [%(lineno)d] [%(message)s]",
)


def write(lang, words_list):
    # exist_ok=True avoids a race when several threads create the directory at once
    os.makedirs(OUT_PATH, mode=0o700, exist_ok=True)
    path = os.path.normpath(os.path.join(OUT_PATH, lang + "_suggestion"))
    with open(path, mode="w", encoding="utf8") as f:
        for words in words_list:
            f.writelines(words)  # each item is already "suggestion\tweight\n"


def process(path, lines):
    # Turn raw JSON lines into "suggestion\tweight\n" output lines
    failed_line = 0
    res = []
    for line in lines:
        try:
            data = json.loads(line)
            query = data["query"]
            xml = data["xml"]
            crawl_time = data["crawl_time"]
            # Age of the record in years; crawl_time starts with a 4-digit year
            year = datetime.datetime.now().year - int(crawl_time[0:4])
            words = re.finditer(r"(?<=(suggestion data=\")).*?(?=(\"))", xml, re.I)
            for match in words:
                word = match.group().strip()
                weight = 1000 - len(query) - year - (0 if word.startswith(query) else 500)
                res.append("%s\t%d\n" % (word, weight))
        except Exception as e:
            logging.error("[" + path + "] failed to process line: " + str(e))
            failed_line += 1
    if failed_line:
        logging.warning("[" + path + "] failed lines: " + str(failed_line))
    return res


def read(root, files):
    res = []
    for file in files:
        path = os.path.normpath(os.path.join(root, file))
        with open(path, mode="r", encoding="utf8") as f:
            lines = f.readlines()
        logging.debug("[" + path + "] total lines: " + str(len(lines)))
        res.append(process(path, lines))
    return res


def multiple_thread(language, root, files):
    # One thread reads, processes and writes one whole language directory
    thread_start_time = time.time()
    words_list = read(root, files)
    write(language, words_list)
    logging.info("[" + root + "] elapsed: " + str(time.time() - thread_start_time) + " s")


def main():
    data_root = os.path.normpath(DATA_PATH)
    threads = []
    for root, dirs, files in os.walk(DATA_PATH):
        root = os.path.normpath(root)
        # Only the per-language sub-directories (en, de, ...) hold data files
        if root == data_root or not files:
            continue
        logging.debug("[" + root + "] total files: " + str(len(files)))
        threads.append(threading.Thread(target=multiple_thread,
                                        args=(os.path.basename(root), root, files)))
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()


if __name__ == "__main__":
    start_time = time.time()
    main()
    logging.info("total elapsed: " + str(time.time() - start_time) + " s")
```

So is multithreading no better than a single thread?

Not necessarily. Only in CPU-bound code can multithreading end up slower than a single thread. In IO-bound code, a thread blocked on IO releases the GIL: while it waits there is plenty of time to switch threads, and other threads can run for the rest of the blocking period. That is where multithreading pulls ahead of a single thread.

For example, after adding a 5-second sleep to every thread, the total time grows by roughly one sleep period, not by the 20 seconds (4 × 5) that four sleeps would cost if they ran one after another. Times from a single run, in seconds:

| Directory | Time (s) |
|---|---|
| de | 248.39 |
| en | 247.75 |
| es | 248.77 |
| tr | 247.21 |
| Total | 249.15 |

Compared with the 241.49 s average without the sleep, the total is 7.66 s higher, much closer to one 5-second sleep than to 20 s.

```python
import datetime
import json
import logging
import os
import re
import threading
import time

DATA_PATH = "./crawl_data/"
OUT_PATH = "./processed_data/"

logging.basicConfig(
    level=logging.INFO,
    filename="script_v2_1.log",
    filemode="a",
    format="[%(processName)s - %(threadName)s] [%(lineno)d] [%(message)s]",
)


def write(lang, words_list):
    # exist_ok=True avoids a race when several threads create the directory at once
    os.makedirs(OUT_PATH, mode=0o700, exist_ok=True)
    path = os.path.normpath(os.path.join(OUT_PATH, lang + "_suggestion"))
    with open(path, mode="w", encoding="utf8") as f:
        for words in words_list:
            f.writelines(words)  # each item is already "suggestion\tweight\n"


def process(path, lines):
    # Turn raw JSON lines into "suggestion\tweight\n" output lines
    failed_line = 0
    res = []
    for line in lines:
        try:
            data = json.loads(line)
            query = data["query"]
            xml = data["xml"]
            crawl_time = data["crawl_time"]
            # Age of the record in years; crawl_time starts with a 4-digit year
            year = datetime.datetime.now().year - int(crawl_time[0:4])
            words = re.finditer(r"(?<=(suggestion data=\")).*?(?=(\"))", xml, re.I)
            for match in words:
                word = match.group().strip()
                weight = 1000 - len(query) - year - (0 if word.startswith(query) else 500)
                res.append("%s\t%d\n" % (word, weight))
        except Exception as e:
            logging.error("[" + path + "] failed to process line: " + str(e))
            failed_line += 1
    if failed_line:
        logging.warning("[" + path + "] failed lines: " + str(failed_line))
    return res


def read(root, files):
    res = []
    for file in files:
        path = os.path.normpath(os.path.join(root, file))
        with open(path, mode="r", encoding="utf8") as f:
            lines = f.readlines()
        logging.debug("[" + path + "] total lines: " + str(len(lines)))
        res.append(process(path, lines))
    return res


def multiple_thread(language, root, files):
    thread_start_time = time.time()
    # Sleep 5 s in every thread; time.sleep releases the GIL, like blocking IO does
    time.sleep(5.0)
    words_list = read(root, files)
    write(language, words_list)
    logging.info("[" + root + "] elapsed: " + str(time.time() - thread_start_time) + " s")


def main():
    data_root = os.path.normpath(DATA_PATH)
    threads = []
    for root, dirs, files in os.walk(DATA_PATH):
        root = os.path.normpath(root)
        # Only the per-language sub-directories (en, de, ...) hold data files
        if root == data_root or not files:
            continue
        logging.debug("[" + root + "] total files: " + str(len(files)))
        threads.append(threading.Thread(target=multiple_thread,
                                        args=(os.path.basename(root), root, files)))
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()


if __name__ == "__main__":
    start_time = time.time()
    main()
    logging.info("total elapsed: " + str(time.time() - start_time) + " s")
```
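The same effect can be reproduced without the data files. In this sketch (`fake_io` and `run_threads` are illustrative names), `time.sleep` stands in for blocking IO. Like blocking file or socket calls, it releases the GIL, so four threads that each sleep 0.5 s should finish in roughly 0.5 s rather than 2 s.

```python
import threading
import time


def fake_io(seconds):
    # time.sleep releases the GIL while waiting, as blocking IO calls do
    time.sleep(seconds)


def run_threads(n_threads, seconds):
    """Start n_threads threads that each block for `seconds`; return the wall-clock time."""
    start = time.perf_counter()
    threads = [threading.Thread(target=fake_io, args=(seconds,)) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return time.perf_counter() - start


elapsed = run_threads(4, 0.5)
print(f"4 threads x 0.5 s of blocking took {elapsed:.2f} s")
```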

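4.3 Version three: multiple processes

Because of the GIL, multiple processes are the more reliable choice. Each process has its own interpreter and its own GIL, so processes can run in parallel on different CPU cores whether the work is CPU-bound or IO-bound.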
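The principle can be sketched with `multiprocessing.Pool`, using an arbitrary pure-Python loop as the CPU-bound work (names and sizes are illustrative). Each worker runs in its own process with its own GIL, so on a multi-core machine the loops can run in parallel. The `__main__` guard is required when workers are started with the "spawn" method, the default on Windows and macOS, because each worker re-imports the module.

```python
import os
import time
from multiprocessing import Pool


def count_down(n):
    # CPU-bound loop; inside a worker it only uses that process's own GIL
    while n > 0:
        n -= 1
    return os.getpid()  # report which process did the work


def run_pool(n, workers):
    """Run `workers` loops of size n in a pool of `workers` processes; return (seconds, pids)."""
    start = time.perf_counter()
    with Pool(workers) as pool:
        pids = pool.map(count_down, [n] * workers)
    return time.perf_counter() - start, pids


if __name__ == "__main__":
    seconds, pids = run_pool(2_000_000, 4)
    print(f"4 loops in a process pool took {seconds:.2f} s")
    print("worker PIDs:", sorted(set(pids)))
```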

Replacing the threads of version two with processes (one multiprocessing.Pool worker per directory; the log shows each directory handled by its own SpawnPoolWorker process) gives these times, in seconds:

| Run | de | en | es | tr | Total |
|---|---|---|---|---|---|
| 1 | 116.03 | 117.70 | 115.60 | 116.70 | 118.28 |
| 2 | 120.20 | 120.24 | 119.82 | 120.17 | 121.07 |
| 3 | 117.35 | 116.59 | 117.09 | 116.32 | 117.96 |

Average total: 119.11 s, 125.13 s less than version one (whose three runs average 244.24 s). The code:

```python
import datetime
import json
import logging
import os
import re
import time
from multiprocessing import Pool

DATA_PATH = "./crawl_data/"
OUT_PATH = "./processed_data/"

# With the "spawn" start method (Windows), workers re-import this module,
# so this also runs in every worker and their log lines go to the same file
logging.basicConfig(
    level=logging.INFO,
    filename="script_v3.log",
    filemode="a",
    format="[%(processName)s - %(threadName)s] [%(lineno)d] [%(message)s]",
)


def write(lang, words_list):
    # exist_ok=True avoids a race when several processes create the directory at once
    os.makedirs(OUT_PATH, mode=0o700, exist_ok=True)
    path = os.path.normpath(os.path.join(OUT_PATH, lang + "_suggestion"))
    with open(path, mode="w", encoding="utf8") as f:
        for words in words_list:
            f.writelines(words)  # each item is already "suggestion\tweight\n"


def process(path, lines):
    # Turn raw JSON lines into "suggestion\tweight\n" output lines
    failed_line = 0
    res = []
    for line in lines:
        try:
            data = json.loads(line)
            query = data["query"]
            xml = data["xml"]
            crawl_time = data["crawl_time"]
            # Age of the record in years; crawl_time starts with a 4-digit year
            year = datetime.datetime.now().year - int(crawl_time[0:4])
            words = re.finditer(r"(?<=(suggestion data=\")).*?(?=(\"))", xml, re.I)
            for match in words:
                word = match.group().strip()
                weight = 1000 - len(query) - year - (0 if word.startswith(query) else 500)
                res.append("%s\t%d\n" % (word, weight))
        except Exception as e:
            logging.error("[" + path + "] failed to process line: " + str(e))
            failed_line += 1
    if failed_line:
        logging.warning("[" + path + "] failed lines: " + str(failed_line))
    return res


def read(root, files):
    res = []
    for file in files:
        path = os.path.normpath(os.path.join(root, file))
        with open(path, mode="r", encoding="utf8") as f:
            lines = f.readlines()
        logging.debug("[" + path + "] total lines: " + str(len(lines)))
        res.append(process(path, lines))
    return res


def multiple_process(language, root, files):
    # One worker process reads, processes and writes one whole language directory
    process_start_time = time.time()
    words_list = read(root, files)
    write(language, words_list)
    logging.info("[" + root + "] elapsed: " + str(time.time() - process_start_time) + " s")


def main():
    data_root = os.path.normpath(DATA_PATH)
    tasks = []
    for root, dirs, files in os.walk(DATA_PATH):
        root = os.path.normpath(root)
        # Only the per-language sub-directories (en, de, ...) hold data files
        if root == data_root or not files:
            continue
        logging.debug("[" + root + "] total files: " + str(len(files)))
        tasks.append((os.path.basename(root), root, files))
    if tasks:
        # One worker process per language directory
        pool = Pool(len(tasks))
        async_results = [pool.apply_async(multiple_process, args=task) for task in tasks]
        pool.close()
        pool.join()
        for result in async_results:
            result.get()  # re-raise worker exceptions instead of losing them silently


# The guard is required on Windows, where workers are started with "spawn"
if __name__ == "__main__":
    start_time = time.time()
    main()
    logging.info("total elapsed: " + str(time.time() - start_time) + " s")
```

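Background: CPU cores, hardware threads and Python multiprocessing

- Dual-core CPU: this does not mean two CPUs, but one CPU with two physical cores. All computation happens in the cores.

- Dual-core, four-thread CPU: with hyper-threading, each physical core uses its otherwise idle resources to present an extra virtual (logical) core, so to the operating system a dual-core, four-thread CPU looks like 4 cores that can run tasks at the same time. The limitation is that when a physical core and its virtual sibling need the same execution resource at the same moment, one of them has to wait.

- Python multiprocessing: however many processes a program starts, they run concurrently. Concurrency does not mean every process executes at the same instant: at most one process per logical core (4 on this machine) runs at any moment, and the operating system switches rapidly between the rest so that they appear to run simultaneously.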
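Python reports the number of logical cores through `os.cpu_count()` (4 on a dual-core, four-thread CPU). Version three sizes its pool as one process per directory. A common variant, shown here as a hypothetical helper, caps the pool at the number of logical cores, since CPU-bound processes beyond that can only take turns.

```python
import os


def pool_size(num_tasks, cpu_count=None):
    """Hypothetical helper: one worker per task, capped at the number of logical CPUs."""
    if cpu_count is None:
        cpu_count = os.cpu_count() or 1  # os.cpu_count() may return None
    return max(1, min(num_tasks, cpu_count))


print("logical CPUs:", os.cpu_count())
print(pool_size(4, cpu_count=4))   # 4 language directories on a 4-thread CPU -> 4
print(pool_size(10, cpu_count=4))  # more tasks than logical CPUs -> 4
```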

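4.4 Version four: multiple processes + coroutines

In the multiprocessing version, one process handles all files of one directory. Going a step further, each file in a directory could get its own thread, but here I use coroutines instead of threads: one coroutine (a gevent greenlet) per file. There are two reasons. Python multithreading is limited by the GIL as described above, and this program is not IO-bound; and coroutines are lighter than threads, because switching between them happens in user space without the operating system's scheduler.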
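gevent greenlets are cooperative: a running greenlet keeps control until it reaches a yield point, such as `gevent.sleep()` or cooperative (gevent or monkey-patched) socket IO. The sketch below illustrates the same scheduling rule with the standard library's `asyncio` instead of gevent, so it runs without extra packages. Two coroutines without a yield point run one after the other, and only an explicit `await` lets them interleave.

```python
import asyncio


async def worker(name, steps, log, yield_between):
    for i in range(steps):
        log.append(f"{name}{i}")  # stands in for a chunk of CPU-bound work
        if yield_between:
            await asyncio.sleep(0)  # explicit yield point: lets other coroutines run


async def run(yield_between):
    log = []
    await asyncio.gather(worker("a", 3, log, yield_between),
                         worker("b", 3, log, yield_between))
    return log


print(asyncio.run(run(False)))  # ['a0', 'a1', 'a2', 'b0', 'b1', 'b2']
print(asyncio.run(run(True)))   # ['a0', 'b0', 'a1', 'b1', 'a2', 'b2']
```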

Per-file (coroutine) and per-directory (process) times from the log of a single run, in seconds:

| File | de | en | es | tr |
|---|---|---|---|---|
| data1.json | 9.60 | 9.74 | 9.66 | 9.60 |
| data2.json | 10.75 | 10.75 | 10.66 | 10.83 |
| data3.json | 10.68 | 10.74 | 10.68 | 10.76 |
| data4.json | 10.74 | 10.69 | 10.68 | 10.66 |
| data5.json | 10.86 | 10.85 | 10.92 | 10.99 |
| data6.json | 11.27 | 11.26 | 11.25 | 11.22 |
| data7.json | 11.46 | 11.60 | 11.52 | 11.45 |
| data8.json | 10.83 | 10.82 | 10.99 | 10.87 |
| data9.json | 10.83 | 10.81 | 10.76 | 10.77 |
| data10.json | 9.80 | 9.65 | 9.77 | 9.80 |
| Whole directory (process) | 113.77 | 113.79 | 113.80 | 113.93 |

Total: 114.69 s, 4.42 s less than version three's 119.11 s average.

Because the data-processing code in this program is small, the result is close to plain multiprocessing. The per-file times also add up to roughly the per-directory time, which means the greenlets ran one after another: gevent only switches greenlets at yield points, and this code has none. Coroutines are not free either, so when the processing code is very small, a coroutine version can, just like multithreading versus a single thread, even be slightly slower than plain multiprocessing. In real production code, though, the processing logic is rarely that small.

```python
import datetime
import json
import logging
import os
import re
import time
from multiprocessing import Pool

import gevent

DATA_PATH = "./crawl_data/"
OUT_PATH = "./processed_data/"

logging.basicConfig(
    level=logging.INFO,
    filename="script_v4.log",
    filemode="a",
    format="[%(processName)s - %(threadName)s] [%(lineno)d] [%(message)s]",
)


def write(lang, words_list):
    # exist_ok=True avoids a race when several processes create the directory at once
    os.makedirs(OUT_PATH, mode=0o700, exist_ok=True)
    path = os.path.normpath(os.path.join(OUT_PATH, lang + "_suggestion"))
    with open(path, mode="w", encoding="utf8") as f:
        for words in words_list:
            f.writelines(words)  # each item is already "suggestion\tweight\n"


def process(path, lines):
    # Turn raw JSON lines into "suggestion\tweight\n" output lines
    failed_line = 0
    res = []
    for line in lines:
        try:
            data = json.loads(line)
            query = data["query"]
            xml = data["xml"]
            crawl_time = data["crawl_time"]
            # Age of the record in years; crawl_time starts with a 4-digit year
            year = datetime.datetime.now().year - int(crawl_time[0:4])
            words = re.finditer(r"(?<=(suggestion data=\")).*?(?=(\"))", xml, re.I)
            for match in words:
                word = match.group().strip()
                weight = 1000 - len(query) - year - (0 if word.startswith(query) else 500)
                res.append("%s\t%d\n" % (word, weight))
        except Exception as e:
            logging.error("[" + path + "] failed to process line: " + str(e))
            failed_line += 1
    if failed_line:
        logging.warning("[" + path + "] failed lines: " + str(failed_line))
    return res


def read(path):
    with open(path, mode="r", encoding="utf8") as f:
        return f.readlines()


def multiple_coroutine(path):
    # One greenlet per file: read the file and process all of its lines
    coroutine_start_time = time.time()
    lines = read(path)
    res = process(path, lines)
    logging.info("[" + path + "] coroutine elapsed: " + str(time.time() - coroutine_start_time) + " s")
    return res


def multiple_process(language, root, files):
    process_start_time = time.time()
    coroutines = [gevent.spawn(multiple_coroutine, os.path.normpath(os.path.join(root, file)))
                  for file in files]
    # raise_error=True re-raises a failed greenlet's exception instead of leaving value=None
    gevent.joinall(coroutines, raise_error=True)
    res = [g.value for g in coroutines]
    if res:
        write(language, res)
    logging.info("[" + root + "] process elapsed: " + str(time.time() - process_start_time) + " s")


def main():
    data_root = os.path.normpath(DATA_PATH)
    tasks = []
    for root, dirs, files in os.walk(DATA_PATH):
        root = os.path.normpath(root)
        # Only the per-language sub-directories (en, de, ...) hold data files
        if root == data_root or not files:
            continue
        logging.debug("[" + root + "] total files: " + str(len(files)))
        tasks.append((os.path.basename(root), root, files))
    if tasks:
        # One worker process per language directory
        pool = Pool(len(tasks))
        async_results = [pool.apply_async(multiple_process, args=task) for task in tasks]
        pool.close()
        pool.join()
        for result in async_results:
            result.get()  # re-raise worker exceptions instead of losing them silently


if __name__ == "__main__":
    start_time = time.time()
    main()
    logging.info("total elapsed: " + str(time.time() - start_time) + " s")
```

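4.5 Version five: multiple processes + main coroutines + child coroutines

Small amounts of data are processed faster than large ones, so it is worth going one step further and splitting up the data each unit of work handles. In version four, one coroutine processes one file, i.e. 200,000 lines in one go. Now each of those coroutines spawns a set of child coroutines that process 2,000 lines each: one file maps to one main coroutine, and each main coroutine runs many child coroutines.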
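The splitting step itself is simple. Version five does it inside `read()`. An equivalent generator-based sketch follows; the name `batched` is illustrative. Python 3.12 added `itertools.batched`, which yields tuples, but this article targets Python 3.7.

```python
from itertools import islice


def batched(lines, size):
    """Yield successive lists of at most `size` items; the last one may be shorter."""
    it = iter(lines)
    while True:
        batch = list(islice(it, size))
        if not batch:
            return
        yield batch


batches = list(batched(range(4500), 2000))
print([len(b) for b in batches])  # [2000, 2000, 500]
```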

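For this test I reduced the directories to two (en and de) and increased data1.json to data10.json to 400,000 lines each. Total times in seconds:

| Version | Run 1 | Run 2 | Run 3 | Average |
|---|---|---|---|---|
| Multiple processes + main coroutines | 206.96 | 220.19 | 222.28 | 216.48 |
| Multiple processes + main coroutines + child coroutines | 188.78 | 172.37 | 186.73 | 182.63 |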

```python
import datetime
import json
import logging
import os
import re
import time
from multiprocessing import Pool

import gevent

DATA_PATH = "./crawl_data/"
OUT_PATH = "./processed_data/"
BATCH_COUNT = 2000  # lines handled by one child coroutine

logging.basicConfig(
    level=logging.INFO,
    filename="script_v5.log",
    filemode="a",
    format="[%(processName)s - %(threadName)s] [%(lineno)d] [%(message)s]",
)


def write(lang, words_list):
    # exist_ok=True avoids a race when several processes create the directory at once
    os.makedirs(OUT_PATH, mode=0o700, exist_ok=True)
    path = os.path.normpath(os.path.join(OUT_PATH, lang + "_suggestion"))
    with open(path, mode="w", encoding="utf8") as f:
        for words in words_list:
            f.writelines(words)  # each item is already "suggestion\tweight\n"


def process(path, lines):
    # Turn raw JSON lines into "suggestion\tweight\n" output lines
    failed_line = 0
    res = []
    for line in lines:
        try:
            data = json.loads(line)
            query = data["query"]
            xml = data["xml"]
            crawl_time = data["crawl_time"]
            # Age of the record in years; crawl_time starts with a 4-digit year
            year = datetime.datetime.now().year - int(crawl_time[0:4])
            words = re.finditer(r"(?<=(suggestion data=\")).*?(?=(\"))", xml, re.I)
            for match in words:
                word = match.group().strip()
                weight = 1000 - len(query) - year - (0 if word.startswith(query) else 500)
                res.append("%s\t%d\n" % (word, weight))
        except Exception as e:
            logging.error("[" + path + "] failed to process line: " + str(e))
            failed_line += 1
    if failed_line:
        logging.warning("[" + path + "] failed lines: " + str(failed_line))
    return res


def read(path):
    with open(path, mode="r", encoding="utf8") as f:
        lines = f.readlines()
    # Split the file into batches of at most BATCH_COUNT lines
    return [lines[i:i + BATCH_COUNT] for i in range(0, len(lines), BATCH_COUNT)]


def child_coroutine(path, lines, child_coroutine_id):
    # Processes one batch of lines
    child_coroutine_start_time = time.time()
    res = process(path, lines)
    logging.info("[" + path + "] child coroutine-" + str(child_coroutine_id) + " elapsed: "
                 + str(time.time() - child_coroutine_start_time) + " s")
    return res


def main_coroutine(path, main_coroutine_id):
    # One main coroutine per file; it fans the file's batches out to child coroutines
    main_coroutine_start_time = time.time()
    lines_list = read(path)
    child_coroutines = [gevent.spawn(child_coroutine, path, lines, i + 1)
                        for i, lines in enumerate(lines_list)]
    gevent.joinall(child_coroutines, raise_error=True)
    res = []
    for g in child_coroutines:
        res.extend(g.value)
    logging.info("[" + path + "] main coroutine-" + str(main_coroutine_id) + " elapsed: "
                 + str(time.time() - main_coroutine_start_time) + " s")
    return res


def multiple_process(language, root, files):
    process_start_time = time.time()
    coroutines = [gevent.spawn(main_coroutine, os.path.normpath(os.path.join(root, file)), i + 1)
                  for i, file in enumerate(files)]
    gevent.joinall(coroutines, raise_error=True)
    res = [g.value for g in coroutines]
    if res:
        write(language, res)
    logging.info("[" + root + "] process elapsed: " + str(time.time() - process_start_time) + " s")


def main():
    data_root = os.path.normpath(DATA_PATH)
    tasks = []
    for root, dirs, files in os.walk(DATA_PATH):
        root = os.path.normpath(root)
        # Only the per-language sub-directories (en, de, ...) hold data files
        if root == data_root or not files:
            continue
        logging.debug("[" + root + "] total files: " + str(len(files)))
        tasks.append((os.path.basename(root), root, files))
    if tasks:
        # One worker process per language directory
        pool = Pool(len(tasks))
        async_results = [pool.apply_async(multiple_process, args=task) for task in tasks]
        pool.close()
        pool.join()
        for result in async_results:
            result.get()  # re-raise worker exceptions instead of losing them silently


if __name__ == "__main__":
    start_time = time.time()
    main()
    logging.info("total elapsed: " + str(time.time() - start_time) + " s")
```

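A bigger batch is not automatically better, and neither is a smaller one; the batch size has to be tuned for the actual data and machine.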
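One way to do that tuning is to time the same work at several batch sizes. The harness below is a hypothetical sketch. `process_batch` stands for whatever per-batch function is being tuned (in version five, the work of one child coroutine), and the candidate sizes are arbitrary. It times plain sequential batch processing only, so it does not capture greenlet-spawning overhead.

```python
import time


def time_batch_sizes(process_batch, lines, sizes):
    """Return {batch_size: seconds} for running process_batch over `lines` in batches."""
    timings = {}
    for size in sizes:
        start = time.perf_counter()
        for i in range(0, len(lines), size):
            process_batch(lines[i:i + size])
        timings[size] = time.perf_counter() - start
    return timings


# Stand-in workload: total characters per batch (replace with the real processing)
sample = ["x" * 50] * 100_000
results = time_batch_sizes(lambda batch: sum(map(len, batch)), sample, [500, 2000, 10000])
for size, seconds in results.items():
    print(f"batch size {size:>6}: {seconds:.3f} s")
```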

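By comparing single-threaded, multithreaded, multiprocess and coroutine-based versions of the same data-processing job, this article shows how to make data processing faster. In these experiments, multiprocessing roughly halved the processing time, and splitting each file into batches handled by child coroutines cut a further 16% or so in the larger test (216.48 s to 182.63 s). Used sensibly, multiprocessing and coroutines can noticeably speed up the processing of large data volumes.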

