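While learning Spark, I wondered whether I could implement the registration and heartbeat mechanism between Spark's underlying master and worker myself. The code below is the result, with detailed comments.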

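Let's go straight to the code.

You need the Scala SDK, preferably in a Maven project, with the Akka dependencies added. After that, the code can be copied and used directly; I have tested it myself.

pom.xml: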

<!-- Define some constants -->
<properties>
  <encoding>UTF-8</encoding>
  <scala.version>2.11.8</scala.version>
  <scala.compat.version>2.11</scala.compat.version>
  <akka.version>2.4.17</akka.version>
</properties>

<dependencies>
  <!-- Scala dependency -->
  <dependency>
    <!-- org.scala-lang -->
    <groupId>org.scala-lang</groupId>
    <artifactId>scala-library</artifactId>
    <version>${scala.version}</version>
  </dependency>
  <dependency>
    <groupId>com.typesafe.akka</groupId>
    <artifactId>akka-actor_${scala.compat.version}</artifactId>
    <version>${akka.version}</version>
  </dependency>
  <dependency>
    <groupId>com.typesafe.akka</groupId>
    <artifactId>akka-remote_${scala.compat.version}</artifactId>
    <version>${akka.version}</version>
  </dependency>
</dependencies>

<!-- Plugins -->
<build>
  <!-- Locations of the main and test source directories -->
  <sourceDirectory>src/main/scala</sourceDirectory>
  <testSourceDirectory>src/test/scala</testSourceDirectory>
  <plugins>
    <plugin>
      <groupId>net.alchim31.maven</groupId>
      <artifactId>scala-maven-plugin</artifactId>
      <version>3.2.2</version>
      <executions>
        <execution>
          <goals>
            <goal>compile</goal>
            <goal>testCompile</goal>
          </goals>
          <configuration>
            <args>
              <arg>-dependencyfile</arg>
              <arg>${project.build.directory}/.scala_dependencies</arg>
            </args>
          </configuration>
        </execution>
      </executions>
    </plugin>
    <plugin>
      <groupId>org.apache.maven.plugins</groupId>
      <artifactId>maven-shade-plugin</artifactId>
      <version>2.4.3</version>
      <executions>
        <execution>
          <phase>package</phase>
          <goals>
            <goal>shade</goal>
          </goals>
          <configuration>
            <filters>
              <filter>
                <artifact>*:*</artifact>
                <excludes>
                  <exclude>META-INF/*.SF</exclude>
                  <exclude>META-INF/*.DSA</exclude>
                  <exclude>META-INF/*.RSA</exclude>
                </excludes>
              </filter>
            </filters>
            <transformers>
              <transformer implementation="org.apache.maven.plugins.shade.resource.AppendingTransformer">
                <resource>reference.conf</resource>
              </transformer>
              <!-- Specify the class that contains the main method -->
              <transformer implementation="org.apache.maven.plugins.shade.resource.ManifestResourceTransformer">
                <mainClass>xxx</mainClass>
              </transformer>
            </transformers>
          </configuration>
        </execution>
      </executions>
    </plugin>
  </plugins>
</build>

SparkMaster

package com.lxb.sparkmasterworker.master

import akka.actor.{Actor, ActorRef, ActorSystem, Props}
import com.lxb.sparkmasterworker.commen.{HeartBeat, RegisterInfoSuccess, RegisterMessage, RemoveTimeOutWorker, StartTimeOutWorker, WorkerInfo}
import com.typesafe.config.ConfigFactory

import scala.collection.mutable
import scala.concurrent.duration._

class SparkMaster extends Actor {

  // Map that stores the information of every registered worker, keyed by worker id
  val workers = mutable.Map[String, WorkerInfo]()

  override def receive: Receive = {
    case "start" => {
      println("master started working...")
      // Kick off the periodic timeout check
      self ! StartTimeOutWorker
    }
    case RegisterMessage(id, cpu, ram) => {
      // Check whether this id is already registered
      if (!workers.contains(id)) { // not registered yet, so register it
        workers += (id -> new WorkerInfo(id, cpu, ram))
        println("workers=" + workers)
        sender() ! RegisterInfoSuccess
      }
    }

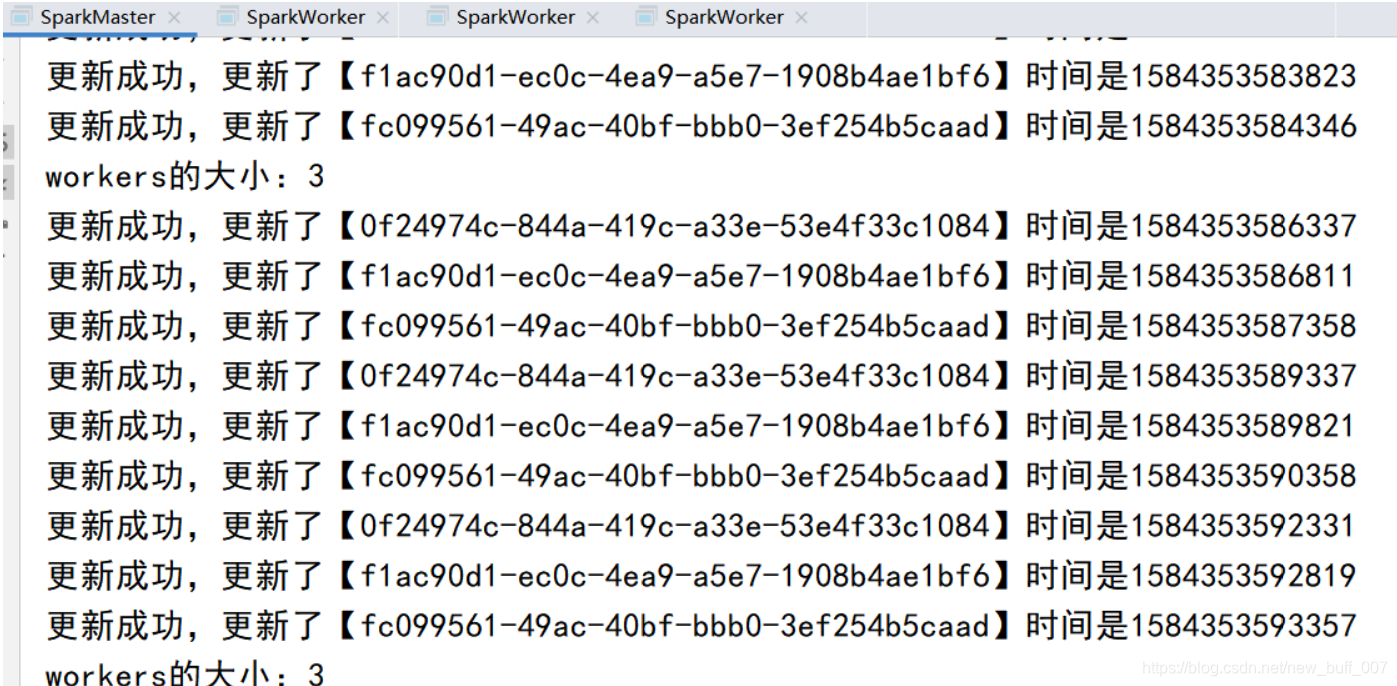

    case HeartBeat(id) => {
      // Ignore heartbeats from unknown ids, e.g. a worker that was already removed as timed out
      workers.get(id).foreach { workerInfo =>
        workerInfo.lastHeartBeat = System.currentTimeMillis()
        println("updated heartbeat of [" + id + "] at " + workerInfo.lastHeartBeat)
      }
    }
    // Start the timeout check
    case StartTimeOutWorker => {
      import context.dispatcher
      // Send RemoveTimeOutWorker to self every 9 seconds, starting immediately
      context.system.scheduler.schedule(0.millis, 9000.millis, self, RemoveTimeOutWorker)
    }
    case RemoveTimeOutWorker => {
      // Take all WorkerInfo values
      val workerInfos = workers.values
      // Current time
      val nowTime = System.currentTimeMillis()
      // Workers send a heartbeat every 3 seconds. A worker whose last heartbeat is
      // more than 6 seconds old is treated as timed out: filter those out first,
      // then remove them from the map. The check itself runs every 9 seconds.
      workerInfos.filter(workerInfo => nowTime - workerInfo.lastHeartBeat > 6000)
        .foreach(workerInfo => workers.remove(workerInfo.id))
      println("number of workers: " + workers.size)
    }
  }
}

object SparkMaster {
  def main(args: Array[String]): Unit = {
    if (args.length != 3) {
      println("Usage: masterHost masterPort masterName")
      // Exit
      sys.exit()
    }
    val host = args(0)
    val port = args(1)
    val name = args(2)
    val config = ConfigFactory.parseString(
      s"""
         |akka.actor.provider="akka.remote.RemoteActorRefProvider"
         |akka.remote.netty.tcp.hostname=$host
         |akka.remote.netty.tcp.port=$port
         |""".stripMargin)
    // Create the ActorSystem
    val sparkMaster: ActorSystem = ActorSystem("SparkMaster", config)
    val sparkMasterRef: ActorRef = sparkMaster.actorOf(Props[SparkMaster], s"${name}")
    sparkMasterRef ! "start"
  }
}
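The timeout rule used by the master can be tried out without Akka. The following is a minimal sketch using only the Scala standard library; `WorkerRecord`, `TimeoutSketch` and `removeExpired` are hypothetical names introduced for illustration and are not part of the article's code. It passes the current time in as a parameter so the 6-second rule can be checked with fixed numbers.

```scala
import scala.collection.mutable

// Hypothetical stand-in for the article's WorkerInfo, reduced to the fields used here.
final class WorkerRecord(val id: String, var lastHeartBeat: Long)

object TimeoutSketch {
  // Removes every worker whose last heartbeat is older than timeoutMillis
  // and returns the ids that were removed.
  def removeExpired(workers: mutable.Map[String, WorkerRecord],
                    now: Long,
                    timeoutMillis: Long): Set[String] = {
    // Collect the expired ids first, so the map is not modified while it is traversed.
    val expired = workers.values
      .filter(w => now - w.lastHeartBeat > timeoutMillis)
      .map(_.id)
      .toSet
    expired.foreach(id => workers.remove(id))
    expired
  }

  def main(args: Array[String]): Unit = {
    val workers = mutable.Map(
      "fresh" -> new WorkerRecord("fresh", 10000L), // heartbeat 2 seconds before "now"
      "stale" -> new WorkerRecord("stale", 1000L)   // heartbeat 11 seconds before "now"
    )
    val removed = removeExpired(workers, now = 12000L, timeoutMillis = 6000L)
    println("removed: " + removed.mkString(", "))
    println("remaining: " + workers.keys.mkString(", "))
  }
}
```

Because the check in the article runs every 9 seconds while the threshold is 6 seconds, a worker that stops sending heartbeats may stay in the map for up to roughly 15 seconds after its last heartbeat.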

SparkWorker

package com.lxb.sparkmasterworker.worker

import akka.actor.{Actor, ActorRef, ActorSelection, ActorSystem, Props}
import com.lxb.sparkmasterworker.commen.{HeartBeat, HeartBeatInfo, RegisterInfoSuccess, RegisterMessage}
import com.typesafe.config.ConfigFactory

import scala.concurrent.duration._

class SparkWorker(masterHost: String, masterPort: Int, masterName: String) extends Actor {

  var masterProxy: ActorSelection = _

  // Generate a UUID to use as this worker's id
  val id = java.util.UUID.randomUUID().toString

  override def preStart(): Unit = {
    masterProxy = context.actorSelection(s"akka.tcp://SparkMaster@$masterHost:$masterPort/user/${masterName}")
    println(masterProxy)
  }

  override def receive: Receive = {
    case "start" => {
      println("worker is registering...")
      masterProxy ! RegisterMessage(id, 8, 8 * 1024)
    }
    case RegisterInfoSuccess => {
      println("worker with id = [" + id + "] registered successfully")
      // Schedule the periodic heartbeat reminder
      import context.dispatcher
      // 0.millis: run the first time immediately
      // 3000.millis: then repeat every three seconds
      // self, HeartBeatInfo: the receiver (this actor itself) and the message to send
      context.system.scheduler.schedule(0.millis, 3000.millis, self, HeartBeatInfo)
    }
    case HeartBeatInfo => {
      // Send this worker's id to the master
      masterProxy ! HeartBeat(id)
    }
  }
}

object SparkWorker {
  def main(args: Array[String]): Unit = {
    if (args.length != 6) {
      println("Usage: clientHost clientPort workerName masterHost masterPort masterName")
      sys.exit()
    }
    val clientHost = args(0)
    val clientPort = args(1)
    val workerName = args(2)
    val masterHost = args(3)
    val masterPort = args(4)
    val masterName = args(5)
    // stripMargin removes the leading whitespace and the margin character ('|' by default)
    // from every line; a different margin character can be passed as an argument
    val config = ConfigFactory.parseString(
      s"""
         |akka.actor.provider="akka.remote.RemoteActorRefProvider"
         |akka.remote.netty.tcp.hostname=$clientHost
         |akka.remote.netty.tcp.port=$clientPort
         |""".stripMargin)
    // Create the ActorSystem
    val sparkWorkerSystem: ActorSystem = ActorSystem("SparkWorker", config)
    // Create the actor
    val actorRef: ActorRef = sparkWorkerSystem.actorOf(Props(new SparkWorker(masterHost, masterPort.toInt, masterName)), s"${workerName}")
    actorRef ! "start"
  }
}
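To see what the configuration string passed to `ConfigFactory.parseString` actually looks like, here is a small standard-library sketch of the `stripMargin` step. `StripMarginSketch` and `remoteConfig` are hypothetical names, and the host and port are placeholder values chosen for illustration.

```scala
object StripMarginSketch {
  // Builds the same three settings as the main methods in this article.
  def remoteConfig(host: String, port: Int): String =
    s"""
       |akka.actor.provider="akka.remote.RemoteActorRefProvider"
       |akka.remote.netty.tcp.hostname=$host
       |akka.remote.netty.tcp.port=$port
       |""".stripMargin

  def main(args: Array[String]): Unit = {
    // 127.0.0.1 and 10001 are placeholders, not values taken from the article.
    val text = remoteConfig("127.0.0.1", 10001)
    // After stripMargin, the indentation and the leading '|' are gone from every line.
    text.split("\n").filter(_.nonEmpty).foreach(line => println("[" + line + "]"))
    // A custom margin character can be passed explicitly.
    println("  #custom margin".stripMargin('#'))
  }
}
```

Keeping the settings in one interpolated string means the host and port from the program arguments end up in the Akka configuration without a separate `application.conf` file.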

MessageProtocol

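package com.lxb.sparkmasterworker.commen

// Registration message sent from a worker to the master
case class RegisterMessage(id: String, cpu: Int, ram: Int)

// Worker information kept by the master. It is kept separate from RegisterMessage
// because it may be extended later. The parameters are vals so that the master
// can read workerInfo.id.
class WorkerInfo(val id: String, val cpu: Int, val ram: Int) {
  var lastHeartBeat: Long = System.currentTimeMillis()
}

// Reply sent by the master after a successful registration
case object RegisterInfoSuccess

// Reminder a worker sends to itself to trigger a heartbeat
case object HeartBeatInfo

// Heartbeat sent from a worker to the master
case class HeartBeat(id: String)

// Message the master sends to itself to start the periodic timeout check
case object StartTimeOutWorker

// Message the master sends to itself to remove workers whose heartbeat has timed out
case object RemoveTimeOutWorker

Parameter configuration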

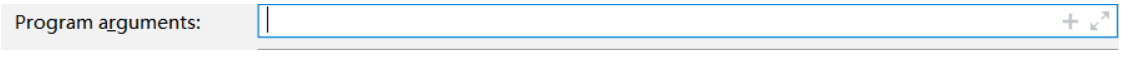

(1) Select SparkMaster.

(2) Click Edit Configurations.

(3) Set the program arguments and click Apply.

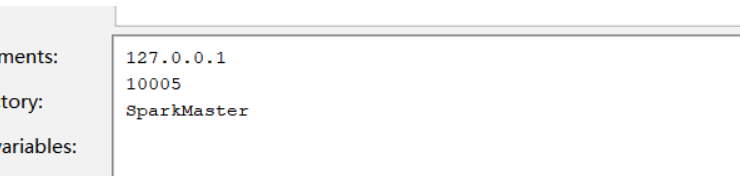

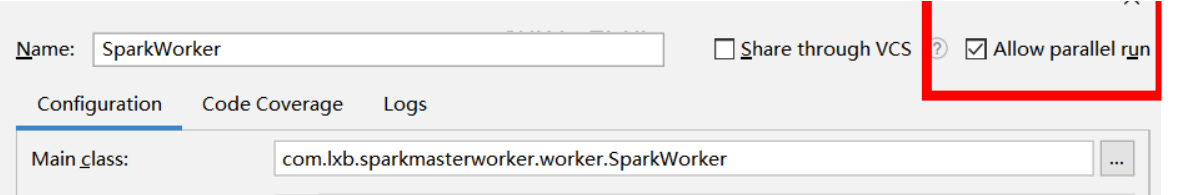

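(4) Select SparkWorker.

(5) Same as step (2).

(6) Set the program arguments and click Apply.

(7) If starting a new instance requires stopping the one that is already running, change the setting below; ticking the checkbox is enough.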
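When filling in the program arguments, the last three arguments of SparkWorker have to repeat the three arguments of SparkMaster, because the worker uses them to build the master's actor path. The sketch below illustrates this with the standard library only; `ArgsSketch` and `masterPath` are hypothetical names, and all hosts, ports and actor names are placeholders rather than the values from the screenshots.

```scala
object ArgsSketch {
  // Mirrors the path that SparkWorker builds in preStart.
  def masterPath(masterHost: String, masterPort: Int, masterName: String): String =
    s"akka.tcp://SparkMaster@$masterHost:$masterPort/user/$masterName"

  def main(args: Array[String]): Unit = {
    // Placeholder arguments: masterHost masterPort masterName
    val masterArgs = Array("127.0.0.1", "10005", "SparkMaster01")
    // Placeholder arguments: clientHost clientPort workerName masterHost masterPort masterName
    val workerArgs = Array("127.0.0.1", "10001", "SparkWorker01", "127.0.0.1", "10005", "SparkMaster01")

    // The worker can only find the master if these three values match.
    val consistent = workerArgs.drop(3).toSeq == masterArgs.toSeq
    println("arguments consistent: " + consistent)
    println(masterPath(workerArgs(3), workerArgs(4).toInt, workerArgs(5)))
  }
}
```

To start several workers on one machine, each would presumably need its own clientPort, since two actor systems cannot bind to the same port.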
