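1. Create the Hadoop account

Create a hadoop user that uses /bin/bash as its login shell.

# Create the hadoop user

sudo useradd -m hadoop -s /bin/bash

# Set a password for the hadoop user

sudo passwd hadoop

# Grant the hadoop user administrator (sudo) privileges
# (on distributions without a sudo group, such as the RHEL family, the equivalent is: sudo usermod -aG wheel hadoop)

sudo adduser hadoop sudo

Grant ownership of the software directory

chown hadoop:hadoop /home/software/*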

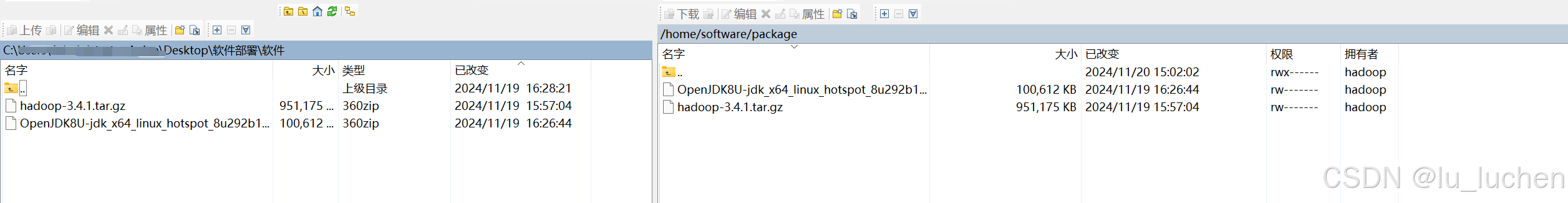

2. Software preparation

The JDK must be installed first.

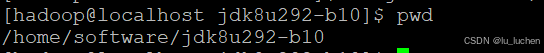

Path the JDK was extracted to

Environment variables

vi /etc/profile

export JAVA_HOME=/home/software/jdk8u292-b10

export PATH=$JAVA_HOME/bin:$PATH

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

Apply the environment variables

source /etc/profile

Verify that the JDK is in effect

java -version
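A slightly more defensive check can confirm that JAVA_HOME really points at a JDK before moving on. This is a minimal sketch: `check_jdk` is a hypothetical helper name, not part of the JDK or Hadoop, and it only tests that an executable `bin/java` exists under the given directory.

```shell
# check_jdk is a hypothetical helper (an assumption for illustration).
# $1 = JDK install directory; defaults to $JAVA_HOME when omitted.
check_jdk() {
    jdk_dir=${1:-${JAVA_HOME:-}}
    if [ -z "$jdk_dir" ]; then
        echo "JAVA_HOME is not set" >&2
        return 1
    fi
    if [ ! -x "$jdk_dir/bin/java" ]; then
        echo "no executable java under $jdk_dir/bin" >&2
        return 1
    fi
    # Print the version reported by that specific JDK.
    "$jdk_dir/bin/java" -version
}
```

After `source /etc/profile`, calling `check_jdk` with no argument checks the directory named by JAVA_HOME.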

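3. Install Hadoop

Hadoop's start scripts log in over SSH, so passwordless login must be configured.

# Log in to the local machine over SSH

ssh localhost

cd ~/.ssh/

# Generate a key pair with ssh-keygen

ssh-keygen -t rsa

# Add the public key to the authorized keys; after this, ssh localhost no longer asks for a password

cat ./id_rsa.pub >> ./authorized_keys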
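If `ssh localhost` still asks for a password after this step, file permissions are a common cause: with sshd's default StrictModes setting, keys are ignored when `~/.ssh` or `authorized_keys` is writable by other users. The sketch below is one way to make the append step repeatable and set the permissions at the same time. `authorize_key` is a hypothetical helper name introduced for illustration.

```shell
# authorize_key is a hypothetical helper (an assumption for illustration).
# $1 = public key file, $2 = authorized_keys file
authorize_key() {
    pub=$1
    auth=$2
    auth_dir=$(dirname "$auth")
    mkdir -p "$auth_dir" && chmod 700 "$auth_dir"
    touch "$auth"
    # Append only if this exact key line is not already present,
    # so running the step twice does not duplicate the key.
    grep -qxF -e "$(cat "$pub")" "$auth" || cat "$pub" >> "$auth"
    chmod 600 "$auth"
}
```

For the setup above, the call would be `authorize_key ~/.ssh/id_rsa.pub ~/.ssh/authorized_keys`.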

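Extract Hadoop and configure its environment variables

# Extract Hadoop

tar -zxvf hadoop-3.4.1.tar.gz -C /home/software/

# Rename the Hadoop directory (full paths, so the command works from any directory)

mv /home/software/hadoop-3.4.1 /home/software/hadoop

# Change the ownership of the directory

sudo chown -R hadoop /home/software/hadoop

# Add the Hadoop environment variables

vi /etc/profile

# Add the following lines and save

export HADOOP_HOME=/home/software/hadoop

export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin

# Check that the extracted Hadoop works

cd /home/software/hadoop

./bin/hadoop version

# Output like the following means Hadoop is usable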

Hadoop 3.4.1

Source code repository https://github.com/apache/hadoop.git -r 4d7825309348956336b8f06a08322b78422849b1

Compiled by mthakur on 2024-10-09T14:57Z

Compiled on platform linux-x86_64

Compiled with protoc 3.23.4

From source with checksum 7292fe9dba5e2e44e3a9f763fce3e680

This command was run using /home/software/hadoop/share/hadoop/common/hadoop-common-3.4.1.jar

Point Hadoop at the Java environment

Edit hadoop-env.sh: vi /home/software/hadoop/etc/hadoop/hadoop-env.sh

export JAVA_HOME=/home/software/jdk8u292-b10
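The same line can also be written without opening an editor, which helps when the setup is repeated. A minimal sketch, assuming a hypothetical helper named `set_export` (not part of Hadoop): it removes any existing uncommented `export VAR=` line from the file and appends the new one, so running it twice leaves a single entry.

```shell
# set_export is a hypothetical helper (an assumption for illustration).
# $1 = file to edit, $2 = variable name, $3 = value
set_export() {
    file=$1
    var=$2
    val=$3
    tmp=$(mktemp) || return 1
    # Keep every line except an existing "export VAR=..." line.
    grep -v "^export ${var}=" "$file" > "$tmp" 2>/dev/null
    printf 'export %s=%s\n' "$var" "$val" >> "$tmp"
    # Write back through the original file so its owner and mode are kept.
    cat "$tmp" > "$file" && rm -f "$tmp"
}
```

For this step the call would be `set_export /home/software/hadoop/etc/hadoop/hadoop-env.sh JAVA_HOME /home/software/jdk8u292-b10`. Commented-out lines such as `# export JAVA_HOME=` are left untouched.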

Configuration files for pseudo-distributed mode

Edit core-site.xml: vi /home/software/hadoop/etc/hadoop/core-site.xml

The path below is under the Hadoop installation directory: <value>file:/home/software/hadoop/tmp</value>

<configuration>

<property>

<name>hadoop.tmp.dir</name>

<value>file:/home/software/hadoop/tmp</value>

<description>A base for other temporary directories.</description>

</property>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

</property>

</configuration>

Edit hdfs-site.xml: vi /home/software/hadoop/etc/hadoop/hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/home/software/hadoop/tmp/dfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/home/software/hadoop/tmp/dfs/data</value>

</property>

</configuration>
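Before starting anything, it can be worth reading the values back out of the two files to catch typos. Hadoop's own `hdfs getconf -confKey fs.defaultFS` reports the effective value. The sketch below is a plain-text alternative that needs nothing but awk. `get_prop` is a hypothetical helper name, and it assumes the layout used above, with `<name>` and `<value>` on separate lines; it is not a real XML parser.

```shell
# get_prop is a hypothetical helper (an assumption for illustration).
# $1 = config file, $2 = property name
# Assumes <name> and <value> sit on separate lines, as in the files above.
get_prop() {
    awk -v name="$2" '
        index($0, "<name>" name "</name>") { found = 1; next }
        found && /<value>/ {
            sub(/.*<value>/, "")      # strip everything up to the opening tag
            sub(/<\/value>.*/, "")    # strip the closing tag and the rest
            print
            exit
        }
    ' "$1"
}
```

For example, `get_prop /home/software/hadoop/etc/hadoop/core-site.xml fs.defaultFS` should print the hdfs:// address configured above, and an unknown property name prints nothing.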

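Start the Hadoop services

Prefer ./sbin/stop-all.sh for stopping Hadoop, and avoid killing individual process IDs or using killall. Killing the processes by hand can disturb Hadoop's DFS-related files; afterwards a restart appears to succeed and the DFS daemons show as started, but ip:9870 still cannot be reached.

# Go to the Hadoop directory

cd /home/software/hadoop

# Format the NameNode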

./bin/hdfs namenode -format
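Formatting is meant to be done once. Formatting again gives the NameNode a new cluster ID, and a DataNode that still holds data from the old one will then refuse to start. One way to guard against an accidental second format is to look for the `current/VERSION` file that a formatted NameNode directory contains. A minimal sketch, where `namenode_needs_format` is a hypothetical helper name:

```shell
# namenode_needs_format is a hypothetical helper (an assumption for illustration).
# $1 = NameNode directory (the dfs.namenode.name.dir path without "file:")
# Succeeds (returns 0) only when the directory has not been formatted yet.
namenode_needs_format() {
    name_dir=$1
    [ ! -f "$name_dir/current/VERSION" ]
}
```

With the paths used in this guide, the format step could be wrapped as `namenode_needs_format /home/software/hadoop/tmp/dfs/name && ./bin/hdfs namenode -format`.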

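# There are two ways to start Hadoop

# Start only the NameNode and DataNode daemons

./sbin/start-dfs.sh

# Or use start-all.sh to start all Hadoop daemons (HDFS and YARN)

./sbin/start-all.sh

# Use stop-all.sh to stop all Hadoop daemons

./sbin/stop-all.sh

# Output like the following appears after starting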

WARNING: Attempting to start all Apache Hadoop daemons as hadoop in 10 seconds.

WARNING: This is not a recommended production deployment configuration.

WARNING: Use CTRL-C to abort.

Starting namenodes on [localhost]

localhost:

localhost: Authorized users only. All activities may be monitored and reported.

Starting datanodes

localhost:

localhost: Authorized users only. All activities may be monitored and reported.

Starting secondary namenodes [localhost]

localhost:

localhost: Authorized users only. All activities may be monitored and reported.

# Once startup finishes, use the jps command to check whether the daemons started

jps

# Output like the following appears

18976 ResourceManager

18720 SecondaryNameNode

19108 NodeManager

19511 Jps

18414 DataNode

18223 NameNode
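Reading the jps list by eye works, but a missing daemon is easy to overlook. The sketch below reads jps output on standard input and reports which of the five daemons shown above are absent. `check_daemons` is a hypothetical helper name, and the expected list assumes everything was started with start-all.sh.

```shell
# check_daemons is a hypothetical helper (an assumption for illustration).
# Reads jps output on stdin, prints each expected daemon that is missing,
# and returns non-zero if any is missing.
check_daemons() {
    jps_out=$(cat)
    missing=0
    for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
        # Compare against the whole second field, so that "NameNode"
        # is not satisfied by a "SecondaryNameNode" line.
        if ! printf '%s\n' "$jps_out" | awk -v d="$d" '$2 == d { ok = 1 } END { exit !ok }'; then
            echo "missing: $d"
            missing=1
        fi
    done
    return "$missing"
}
```

Typical use is `jps | check_daemons`. If only start-dfs.sh was run, ResourceManager and NodeManager will be reported as missing, which is expected in that case.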

Firewall settings:

# Open port 9870

sudo firewall-cmd --zone=public --add-port=9870/tcp --permanent

# Reload the firewall so the rule takes effect

sudo firewall-cmd --reload

# Check the firewall state

sudo firewall-cmd --state

# Start the firewall and enable it at boot

sudo systemctl start firewalld

sudo systemctl enable firewalld

# List the open ports

sudo firewall-cmd --list-ports

# Stop the firewall

sudo systemctl stop firewalld
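If the firewall is kept running instead of stopped, each web port used in this guide needs its own rule, including 8088 for the second page opened from the host. A minimal sketch that applies the same firewall-cmd call to a list of ports: `open_ports` and the `DRY_RUN` variable are hypothetical names introduced here, and with `DRY_RUN` set the commands are only printed.

```shell
# open_ports and DRY_RUN are hypothetical names (assumptions for illustration).
# Arguments: one or more TCP port numbers.
# With DRY_RUN set to a non-empty value the commands are printed, not executed.
open_ports() {
    for port in "$@"; do
        ${DRY_RUN:+echo} sudo firewall-cmd --zone=public --add-port="${port}/tcp" --permanent
    done
    # Reload once at the end so all permanent rules take effect together.
    ${DRY_RUN:+echo} sudo firewall-cmd --reload
}
```

Running `DRY_RUN=1 open_ports 9870 8088` shows the three commands that `open_ports 9870 8088` would execute.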

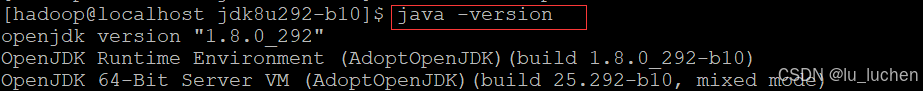

On the host machine, open <VM IP>:9870 in a browser

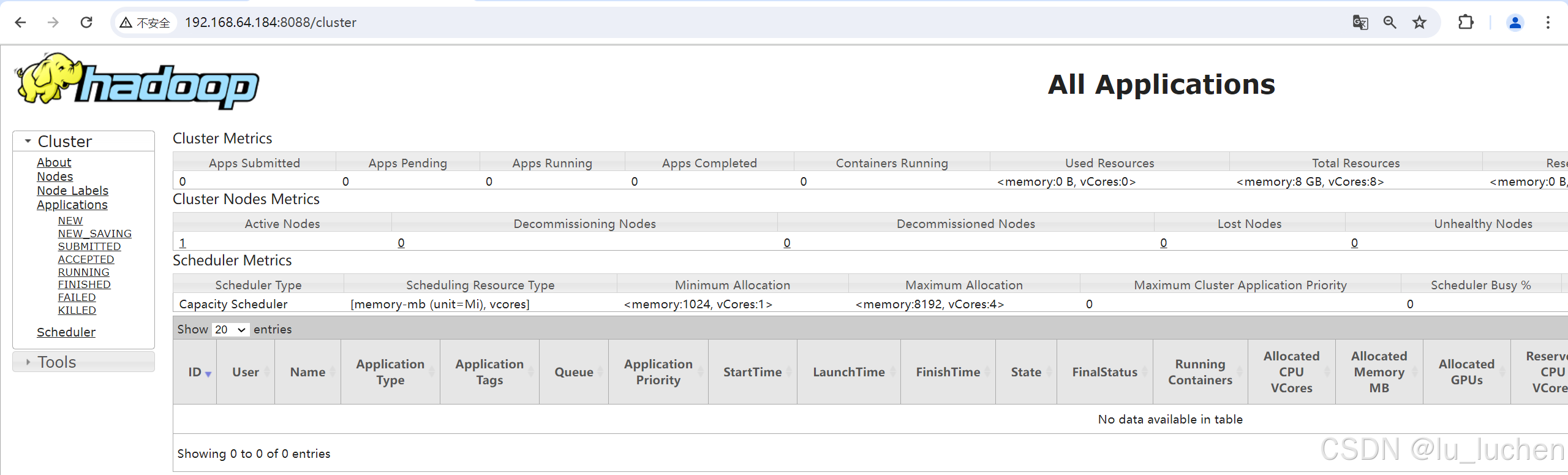

On the host machine, open <VM IP>:8088 in a browser
