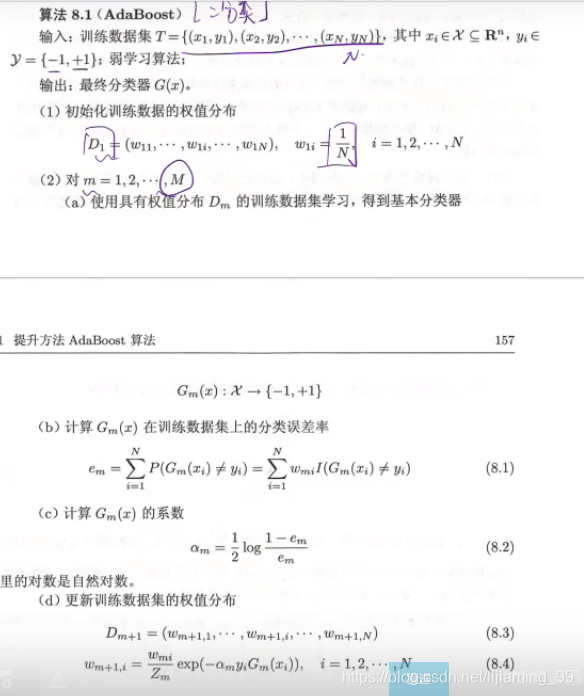

There are M models (weak classifiers) in total.
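For reference, this is how the M models are combined in the example code below: training accumulates `aggClassEst += alpha * classEst`, and `adaClassify` returns `sign(aggClassEst)`. The notation ($\alpha_m$ for the weight of the m-th model, $G_m(x) \in \{-1, +1\}$ for its prediction, $e_m$ for its weighted error rate) is ours and is assumed to match the images:

$$
f(x) = \sum_{m=1}^{M} \alpha_m G_m(x), \qquad G(x) = \operatorname{sign}\big(f(x)\big), \qquad \alpha_m = \frac{1}{2}\ln\frac{1 - e_m}{e_m}
$$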

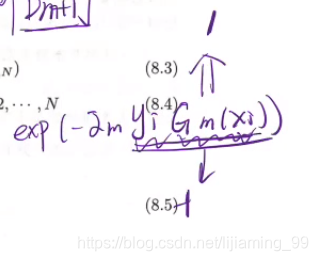

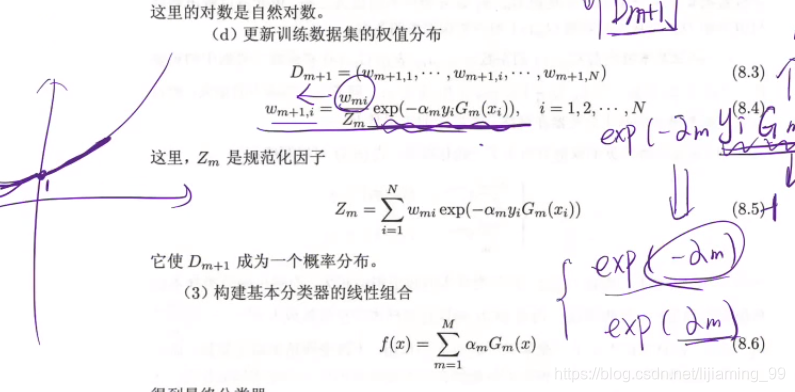

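Indicator function: it equals 1 when a sample is misclassified (the condition holds) and 0 when it is classified correctly (the condition does not hold).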

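For a correct prediction, the product of the label and the prediction is always 1, because both are 1 (1×1) or both are -1 ((-1)×(-1)).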
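The indicator function and the product rule can be checked numerically. This is a minimal sketch, assuming labels and predictions are stored as ±1 NumPy arrays; the helper `weighted_error` and the prediction vector `pred` are hypothetical (the labels are those of the simple dataset in the example below):

```python
import numpy as np

def weighted_error(w, y, pred):
    """Weighted error rate e = sum_i w_i * I(pred_i != y_i)."""
    indicator = (pred != y).astype(float)  # 1 if misclassified, 0 if correct
    return float(np.sum(w * indicator))

y = np.array([1, 1, -1, -1, 1])      # true labels
pred = np.array([-1, 1, -1, -1, 1])  # made-up predictions: only the first sample is wrong
w = np.full(5, 0.2)                  # uniform initial weights 1/m

# correct predictions give y * pred == 1 (1*1 or (-1)*(-1)); wrong ones give -1
print(y * pred)                    # [-1  1  1  1  1]
print(weighted_error(w, y, pred))  # 0.2
```

Because `y * pred == -1` exactly when `pred != y`, the indicator can be computed either way.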

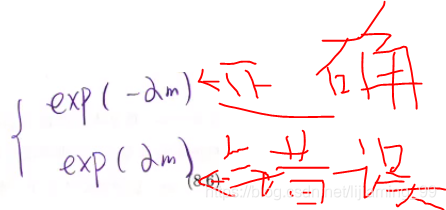

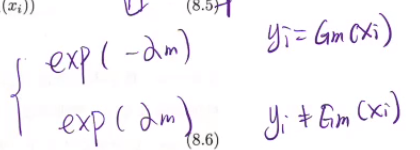

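For a correctly classified sample the update factor is less than 1, so its weight decreases.

For a misclassified sample the update factor is greater than 1, so its weight increases.

Both statements assume the weak classifier does better than chance (weighted error rate below 0.5), so that its weight α is positive.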
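A minimal sketch of one reweighting step, assuming the standard update w_i ← w_i · exp(-α · y_i · G(x_i)) / Z with α = ½ · ln((1 - e) / e), which is the formula used in `adaBoostTrainDS` below. The function name `update_weights` and the sample values are hypothetical:

```python
import numpy as np

def update_weights(w, y, pred):
    """One AdaBoost reweighting step for +/-1 labels y and predictions pred."""
    err = np.sum(w[pred != y])                            # weighted error rate e
    alpha = 0.5 * np.log((1.0 - err) / max(err, 1e-16))   # weight of this model's vote
    factor = np.exp(-alpha * y * pred)  # exp(-alpha) < 1 if correct, exp(alpha) > 1 if wrong
    new_w = w * factor
    return alpha, factor, new_w / new_w.sum()             # divide by Z so weights sum to 1

y = np.array([1, 1, -1, -1, 1])
pred = np.array([-1, 1, -1, -1, 1])  # only the first sample is misclassified
w = np.full(5, 0.2)

alpha, factor, new_w = update_weights(w, y, pred)
print(round(float(alpha), 4))  # 0.6931 (= ln 2, since e = 0.2)
print(new_w)                   # the misclassified sample now carries half of the total weight
```

With e = 0.2, correct samples are multiplied by 0.5 and the wrong one by 2; after normalisation the weights become 0.5 and 0.125 × 4.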

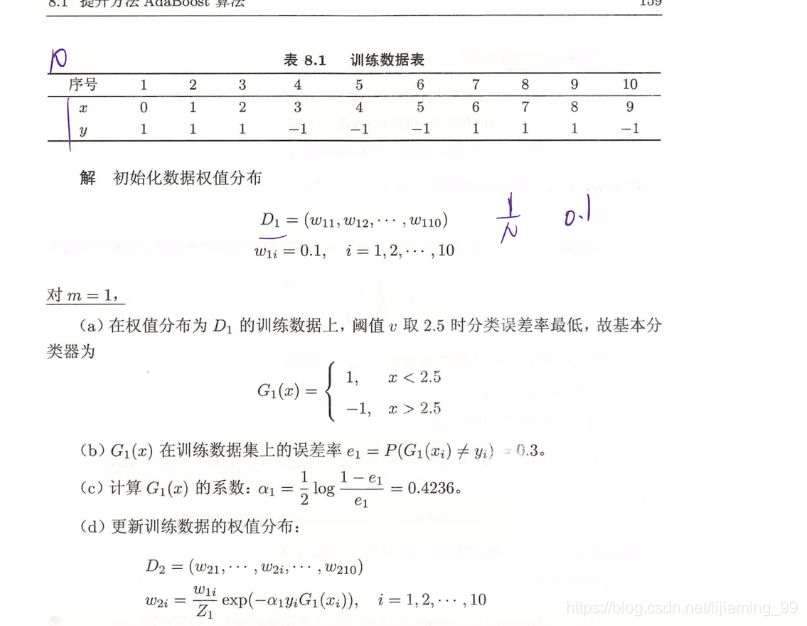

Example:

```python
# coding=utf-8
from numpy import *
import matplotlib.pyplot as plt

# Note: asmatrix is used instead of mat, because np.mat was removed in NumPy 2.0
# (mat was only an alias of asmatrix, so behaviour is unchanged).


# Simple dataset
def loadSimpData():
    datMat = matrix([[1., 2.1],
                     [2., 1.1],
                     [1.3, 1.],
                     [1., 1.],
                     [2., 1.]])
    classLabels = [1.0, 1.0, -1.0, -1.0, 1.0]
    return datMat, classLabels


# Applying AdaBoost to a harder dataset
# Generic data-loading function
def loadDataSet(fileName):
    '''
    Load a training dataset.
    Input: name of the tab-separated file holding the data
    Output: list of feature rows and list of class labels
    '''
    with open(fileName) as fr:
        lines = fr.readlines()
    numFeat = len(lines[0].split('\t'))  # number of fields per line (the last one is the label)
    dataMat = []
    labelMat = []
    for line in lines:
        curLine = line.strip().split('\t')
        lineArr = [float(curLine[i]) for i in range(numFeat - 1)]
        dataMat.append(lineArr)
        labelMat.append(float(curLine[-1]))
    return dataMat, labelMat


# Classify data by comparing one feature against a threshold
def stumpClassify(dataMatrix, dimen, threshVal, threshIneq):
    '''
    dataMatrix: feature matrix built from the feature data
    dimen: index of the feature (column) to test, one of range(shape(dataMatrix)[1])
    threshVal: threshold
    threshIneq: 'less_than' or 'greater_than', i.e. which side of the threshold gets -1
    '''
    retArray = ones((shape(dataMatrix)[0], 1))
    # set the values on the chosen side of the threshold to -1
    if threshIneq == 'less_than':
        retArray[dataMatrix[:, dimen] <= threshVal] = -1.0
    else:
        retArray[dataMatrix[:, dimen] > threshVal] = -1.0
    return retArray


# Find the best decision stump (best = lowest weighted error rate)
def buildStump(dataArr, classLabels, D):
    '''
    dataArr: feature data
    classLabels: label data
    D: weight vector over the samples
    '''
    dataMatrix = asmatrix(dataArr)
    labelMat = asmatrix(classLabels).T
    m, n = shape(dataMatrix)
    numSteps = 10.0
    bestStump = {}
    bestClasEst = asmatrix(zeros((m, 1)))
    minError = inf  # minimum weighted error, initialised to infinity
    for i in range(n):  # loop over all features
        rangeMin = dataMatrix[:, i].min()
        rangeMax = dataMatrix[:, i].max()
        stepSize = (rangeMax - rangeMin) / numSteps  # step size based on this feature's range
        for j in range(-1, int(numSteps) + 1):  # candidate thresholds from rangeMin - stepSize to rangeMax
            for inequal in ['less_than', 'greater_than']:  # try both sides of the threshold
                threshVal = rangeMin + float(j) * stepSize  # set the threshold
                predictedVals = stumpClassify(dataMatrix, i, threshVal, inequal)  # predict
                errArr = asmatrix(ones((m, 1)))  # start with every sample marked as an error (1)
                errArr[predictedVals == labelMat] = 0  # correct predictions become 0
                # this is where the weak learner interacts with AdaBoost:
                # weight vector x error vector = weighted error rate
                weightedError = (D.T * errArr)[0, 0]  # 1x1 matrix -> scalar
                if weightedError < minError:
                    minError = weightedError  # keep the smallest error so far
                    bestClasEst = predictedVals.copy()  # its class predictions
                    # save this decision stump
                    bestStump['dim'] = i
                    bestStump['thresh'] = threshVal
                    bestStump['ineq'] = inequal
    # return the best stump (a dict), its weighted error rate and its class estimates
    return bestStump, minError, bestClasEst


# Full AdaBoost training with decision stumps as weak learners
def adaBoostTrainDS(dataArr, classLabels, numIt=40):
    '''
    dataArr: feature data
    classLabels: label data
    numIt: maximum number of iterations
    '''
    weakClassArr = []  # one stump dict per iteration, each with an added 'alpha' key
    m = shape(dataArr)[0]  # number of samples (rows)
    D = asmatrix(ones((m, 1)) / m)  # initialise every sample weight to 1/m
    aggClassEst = asmatrix(zeros((m, 1)))  # running weighted sum of the weak-classifier outputs
    for i in range(numIt):
        # build a decision stump using the current weights D
        bestStump, error, classEst = buildStump(dataArr, classLabels, D)  # classEst: weak-classifier predictions
        # print("D:", D.T)
        # alpha is the weight of this classifier's vote
        alpha = float(0.5 * log((1.0 - error) / max(error, 1e-16)))  # 1e-16 avoids division by zero
        bestStump['alpha'] = alpha  # store alpha in the dict
        weakClassArr.append(bestStump)  # save this iteration's classifier
        # print("classEst: ", classEst.T)  # this classifier's predictions for the samples
        # compute the new weights D for the next iteration
        expon = multiply(-1 * alpha * asmatrix(classLabels).T, classEst)
        D = multiply(D, exp(expon))  # unnormalised weights w_i * exp(-alpha * y_i * G(x_i))
        D = D / D.sum()  # normalise; D.sum() is the normalisation factor Z
        # accumulate the weighted vote (combination strategy: weighted majority vote)
        aggClassEst += alpha * classEst
        # print("aggClassEst: ", aggClassEst.T)
        aggErrors = multiply(sign(aggClassEst) != asmatrix(classLabels).T, ones((m, 1)))
        errorRate = aggErrors.sum() / m
        print("total error: ", errorRate)
        if errorRate == 0.0:  # stop once the training error reaches 0
            break
    return weakClassArr, aggClassEst


# Test AdaBoost: classify new samples with the trained weak classifiers
def adaClassify(datToClass, classifierArr):
    '''
    datToClass: samples to classify
    classifierArr: list of trained weak classifiers
    '''
    dataMatrix = asmatrix(datToClass)  # convert the samples to a NumPy matrix
    m = shape(dataMatrix)[0]
    aggClassEst = asmatrix(zeros((m, 1)))
    for classifier in classifierArr:  # loop over all weak classifiers
        classEst = stumpClassify(dataMatrix, classifier['dim'],
                                 classifier['thresh'], classifier['ineq'])
        aggClassEst += classifier['alpha'] * classEst  # weighted vote of all classifiers
        # print(aggClassEst)  # running result after each classifier
    return sign(aggClassEst)  # 1 where the sum is positive, -1 where it is negative


def plotROC(predStrengths, classLabels):
    cur = (1.0, 1.0)  # cursor: the curve is drawn from (1, 1) down to (0, 0)
    ySum = 0.0  # accumulates column heights for the AUC
    numPosClas = sum(array(classLabels) == 1.0)
    yStep = 1 / float(numPosClas)
    xStep = 1 / float(len(classLabels) - numPosClas)
    sortedIndices = predStrengths.argsort()  # indices in ascending order of score
    fig = plt.figure()
    fig.clf()
    ax = plt.subplot(111)
    # loop through all the samples, drawing a line segment at each point
    for index in sortedIndices.tolist()[0]:
        if classLabels[index] == 1.0:
            delX = 0
            delY = yStep
        else:
            delX = xStep
            delY = 0
            ySum += cur[1]  # add a column of height cur[1] only on steps along x
        # draw line from cur to (cur[0]-delX, cur[1]-delY)
        ax.plot([cur[0], cur[0] - delX], [cur[1], cur[1] - delY], c='b')
        cur = (cur[0] - delX, cur[1] - delY)
    ax.plot([0, 1], [0, 1], 'b--')
    plt.xlabel('False positive rate')
    plt.ylabel('True positive rate')
    plt.title('ROC curve for AdaBoost horse colic detection system')
    ax.axis([0, 1, 0, 1])
    plt.show()
    print("the Area Under the Curve is: ", ySum * xStep)


if __name__ == '__main__':
    # simple dataset
    print('=' * 10 + ' simple dataset ' + '=' * 10)
    d, c = loadSimpData()  # collect the data
    weakClassArr1, aggClassEst1 = adaBoostTrainDS(d, c)  # train
    testArr1 = [0, 0]
    prediction1 = adaClassify(testArr1, weakClassArr1)  # test (assuming the test sample is [0, 0])
    print('prediction for [0, 0]:', prediction1)
    plotROC(aggClassEst1.T, c)
    print()

    # harder dataset
    # print('=' * 10 + ' harder dataset (load training and test data) ' + '=' * 10)
    # datArr, labelArr = loadDataSet('horseColicTraining2.txt')
    # testArr, testLabelArr = loadDataSet('horseColicTest2.txt')
    # weakClassArr, aggClassEst = adaBoostTrainDS(datArr, labelArr)  # train
    # prediction = adaClassify(testArr, weakClassArr)  # test (predict)
    # errArr = multiply(prediction != asmatrix(testLabelArr).T, ones((len(testArr), 1))).sum()  # number of errors
    # errorRate = errArr / len(testArr)  # average error rate
    # print('average error rate on the test set:', errorRate)
    # plotROC(aggClassEst.T, labelArr)
```
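`plotROC` computes the AUC by walking the ROC staircase from (1, 1) and adding the current true-positive rate each time it steps left (once per negative sample). When no two scores tie, this equals the fraction of (positive, negative) pairs in which the positive sample gets the higher score. The sketch below uses that pairwise definition as an independent check; `auc_pairwise`, `auc_staircase` and the scores are hypothetical, not part of the original script, and `auc_pairwise` counts ties as half a pair, a convention the staircase walk does not follow exactly.

```python
import numpy as np

def auc_pairwise(scores, labels):
    """AUC as P(score of a random positive > score of a random negative)."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=float)
    pos = scores[labels == 1.0]
    neg = scores[labels != 1.0]
    diff = pos[:, None] - neg[None, :]  # every (positive, negative) pair
    wins = (diff > 0).sum() + 0.5 * (diff == 0).sum()
    return wins / (len(pos) * len(neg))

def auc_staircase(scores, labels):
    """Mirrors the staircase walk in plotROC, without the plotting."""
    labels = np.asarray(labels, dtype=float)
    num_pos = np.sum(labels == 1.0)
    y_step = 1.0 / num_pos
    x_step = 1.0 / (len(labels) - num_pos)
    cur_y, y_sum = 1.0, 0.0
    for idx in np.argsort(scores):  # ascending score
        if labels[idx] == 1.0:
            cur_y -= y_step         # positive: step down
        else:
            y_sum += cur_y          # negative: step left, add a column of height cur_y
    return y_sum * x_step

scores = [0.9, -0.3, 0.4, -1.2, 0.1]  # made-up aggregated AdaBoost scores
labels = [1.0, 1.0, -1.0, -1.0, 1.0]
print(auc_pairwise(scores, labels), auc_staircase(scores, labels))  # both 2/3, up to rounding
```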
