I. Installing Kafka on macOS

1. Install Kafka

$ brew install kafka

This step also installs ZooKeeper automatically as a dependency.

2. Installation locations

/usr/local/Cellar/zookeeper

/usr/local/Cellar/kafka

3. Configuration file locations

/usr/local/etc/kafka/zookeeper.properties

/usr/local/etc/kafka/server.properties

Note: run all of the following commands from the /usr/local/Cellar/kafka/1.0.0/bin directory (replace 1.0.0 with the version you installed).

4. Start ZooKeeper

zookeeper-server-start /usr/local/etc/kafka/zookeeper.properties &

5. Start the Kafka server

kafka-server-start /usr/local/etc/kafka/server.properties &
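Because both processes are started in the background with &, it can be useful to confirm that they are actually listening before moving on. The following is a minimal sketch, assuming the default ports used in this article (2181 for ZooKeeper, 9092 for Kafka). PortCheck and isListening are hypothetical names introduced for illustration and are not part of Kafka.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical helper, not part of Kafka: checks whether something accepts TCP connections.
final class PortCheck {

    /** Returns true if a TCP connection to host:port succeeds within timeoutMillis. */
    static boolean isListening(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            return false; // connection refused, host unreachable, or timed out
        }
    }

    public static void main(String[] args) {
        // 2181 and 9092 are the ports used by the commands in this article.
        System.out.println("ZooKeeper (2181): " + isListening("localhost", 2181, 1000));
        System.out.println("Kafka     (9092): " + isListening("localhost", 9092, 1000));
    }
}
```

An open port only shows that a process is accepting connections; it does not prove the broker is healthy, so treat this as a quick sanity check.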

6. Create a topic

kafka-topics --create --zookeeper localhost:2181 --replication-factor 1 --partitions 1 --topic test1

7. List the existing topics

kafka-topics --list --zookeeper localhost:2181

8. Produce messages

kafka-console-producer --broker-list localhost:9092 --topic test1

9. Consume messages

kafka-console-consumer --bootstrap-server 127.0.0.1:9092 --topic test1 --from-beginning

Note: --from-beginning makes the consumer start from the first message in the topic.
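The effect of --from-beginning can be pictured with a toy model. The sketch below is an in-memory stand-in for a single partition and is not Kafka's API; OffsetDemo and its methods are hypothetical names. It assumes a consumer with no previously committed offset, which either starts at offset 0 or at the current end of the log.

```java
import java.util.ArrayList;
import java.util.List;

// Toy in-memory model of a single partition; NOT Kafka's API.
final class OffsetDemo {
    private final List<String> log = new ArrayList<>();

    void append(String message) {
        log.add(message);
    }

    /** Offset a new consumer starts from: 0 with --from-beginning, otherwise the current end of the log. */
    int startOffset(boolean fromBeginning) {
        return fromBeginning ? 0 : log.size();
    }

    /** Messages visible to a consumer that started at the given offset. */
    List<String> readFrom(int offset) {
        return new ArrayList<>(log.subList(offset, log.size()));
    }

    public static void main(String[] args) {
        OffsetDemo partition = new OffsetDemo();
        partition.append("m0");
        partition.append("m1");
        int earliest = partition.startOffset(true);  // behaves like --from-beginning
        int latest = partition.startOffset(false);   // behaves like the default
        partition.append("m2");                      // arrives after both consumers started
        System.out.println(partition.readFrom(earliest)); // [m0, m1, m2]
        System.out.println(partition.readFrom(latest));   // [m2]
    }
}
```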

II. Integrating Kafka with Spring Boot

1. Dependencies in pom.xml

<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>2.1.3.RELEASE</version>
        <relativePath/>
    </parent>
    <groupId>com.ligh</groupId>
    <artifactId>Springboot-kafka</artifactId>
    <version>0.0.1-SNAPSHOT</version>
    <name>Springboot-kafka</name>
    <properties>
        <java.version>1.8</java.version>
    </properties>
    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <scope>test</scope>
        </dependency>
        <dependency>
            <groupId>org.projectlombok</groupId>
            <artifactId>lombok</artifactId>
            <optional>true</optional>
        </dependency>
        <dependency>
            <groupId>org.springframework.kafka</groupId>
            <artifactId>spring-kafka</artifactId>
        </dependency>
        <dependency>
            <groupId>com.google.code.gson</groupId>
            <artifactId>gson</artifactId>
            <version>2.8.2</version>
        </dependency>
    </dependencies>
    <build>
        <plugins>
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
            </plugin>
        </plugins>
    </build>
</project>

2. Add the following settings to application.properties

#============== kafka ===================
# Kafka broker address(es); separate multiple addresses with commas
spring.kafka.bootstrap-servers=127.0.0.1:9092

#=============== producer =======================
spring.kafka.producer.retries=0
# Upper bound, in bytes, on the size of a batch of records sent to one partition
spring.kafka.producer.batch-size=16384
# Total memory, in bytes, available to the producer for buffering unsent records
spring.kafka.producer.buffer-memory=33554432
# Serializers for the message key and value
spring.kafka.producer.key-serializer=org.apache.kafka.common.serialization.StringSerializer
spring.kafka.producer.value-serializer=org.apache.kafka.common.serialization.StringSerializer

#=============== consumer =======================
# Default consumer group id
spring.kafka.consumer.group-id=test-consumer-group
spring.kafka.consumer.auto-offset-reset=earliest
spring.kafka.consumer.enable-auto-commit=true
spring.kafka.consumer.auto-commit-interval=100
# Deserializers for the message key and value
spring.kafka.consumer.key-deserializer=org.apache.kafka.common.serialization.StringDeserializer
spring.kafka.consumer.value-deserializer=org.apache.kafka.common.serialization.StringDeserializer
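Two of these settings are easy to misread: bootstrap-servers accepts a comma-separated list, and batch-size and buffer-memory are byte counts rather than message counts. The sketch below illustrates how the values can be interpreted using only java.util.Properties. It is not how Spring Boot binds them internally; KafkaPropsDemo and its helper methods are hypothetical names, and the second broker address is made up for the example.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.Arrays;
import java.util.List;
import java.util.Properties;

// Illustration only: Spring Boot does its own binding of these properties.
final class KafkaPropsDemo {

    /** Parses properties-file text into a Properties object. */
    static Properties parse(String text) throws IOException {
        Properties props = new Properties();
        props.load(new StringReader(text));
        return props;
    }

    /** Splits the comma-separated bootstrap-servers value into host:port entries. */
    static List<String> brokers(Properties props) {
        String value = props.getProperty("spring.kafka.bootstrap-servers", "");
        return Arrays.asList(value.trim().split("\\s*,\\s*"));
    }

    /** Reads a byte-valued property and converts it to KiB. */
    static long kib(Properties props, String key) {
        return Long.parseLong(props.getProperty(key).trim()) / 1024;
    }

    public static void main(String[] args) throws IOException {
        Properties props = parse(
            "spring.kafka.bootstrap-servers=127.0.0.1:9092,127.0.0.1:9093\n" // second broker is hypothetical
          + "spring.kafka.producer.batch-size=16384\n"
          + "spring.kafka.producer.buffer-memory=33554432\n");
        System.out.println(brokers(props));                                    // [127.0.0.1:9092, 127.0.0.1:9093]
        System.out.println(kib(props, "spring.kafka.producer.batch-size"));    // 16
        System.out.println(kib(props, "spring.kafka.producer.buffer-memory")); // 32768
    }
}
```

In other words, the values above configure a 16 KiB batch limit and a 32 MiB send buffer.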

3. Create the Message entity class

package com.ligh.domain;

import lombok.Data;

import java.util.Date;

@Data
public class Message {

    private Long id;        // message id
    private String msg;     // message body
    private Date sendTime;  // send timestamp
}
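The Message class above declares no methods of its own, yet the producer in step 4 calls setId, setMsg, and setSendTime on it. Those methods come from Lombok's @Data annotation. As a rough sketch, a hand-written class with comparable behavior might look like the following. MessagePlain is a hypothetical name, and the class is declared final to keep equals simple, whereas the code Lombok actually generates differs in detail.

```java
import java.util.Date;
import java.util.Objects;

// Approximation of what @Data provides for Message, written by hand.
final class MessagePlain {
    private Long id;
    private String msg;
    private Date sendTime;

    public Long getId() { return id; }
    public void setId(Long id) { this.id = id; }

    public String getMsg() { return msg; }
    public void setMsg(String msg) { this.msg = msg; }

    public Date getSendTime() { return sendTime; }
    public void setSendTime(Date sendTime) { this.sendTime = sendTime; }

    // Two messages are equal when all three fields are equal.
    @Override
    public boolean equals(Object o) {
        if (this == o) return true;
        if (!(o instanceof MessagePlain)) return false;
        MessagePlain other = (MessagePlain) o;
        return Objects.equals(id, other.id)
            && Objects.equals(msg, other.msg)
            && Objects.equals(sendTime, other.sendTime);
    }

    @Override
    public int hashCode() {
        return Objects.hash(id, msg, sendTime);
    }

    @Override
    public String toString() {
        return "MessagePlain(id=" + id + ", msg=" + msg + ", sendTime=" + sendTime + ")";
    }
}
```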

4. Create the producer

import com.google.gson.Gson;
import com.google.gson.GsonBuilder;
import com.ligh.domain.Message;
import lombok.extern.slf4j.Slf4j;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.stereotype.Controller;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.ResponseBody;

import java.util.Date;
import java.util.UUID;

// @Controller already registers the class as a Spring bean, so @Component is not needed.
@Slf4j
@Controller
public class KafkaProducer {

    @Autowired
    private KafkaTemplate<String, String> kafkaTemplate;

    private final Gson gson = new GsonBuilder().create();

    // Builds a Message, serializes it to JSON, and sends it to the "ligh" topic.
    // With the broker's default settings the topic is created automatically on first use.
    @RequestMapping("send")
    @ResponseBody
    public String send() {
        Message message = new Message();
        message.setId(System.currentTimeMillis());
        message.setMsg(UUID.randomUUID().toString());
        message.setSendTime(new Date());

        String json = gson.toJson(message);
        log.info("+++++++++++ message = {}", json);
        kafkaTemplate.send("ligh", json);
        return "success";
    }
}

5. Create the consumer

import lombok.extern.slf4j.Slf4j;
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.springframework.kafka.annotation.KafkaListener;
import org.springframework.stereotype.Component;

import java.util.Optional;

@Component
@Slf4j
public class KafkaConsumer {

    // Invoked for each record received on the "ligh" topic.
    @KafkaListener(topics = {"ligh"})
    public void listen(ConsumerRecord<?, ?> record) {
        // The record value can be null, so wrap it before use.
        Optional<?> kafkaMessage = Optional.ofNullable(record.value());
        if (kafkaMessage.isPresent()) {
            Object message = kafkaMessage.get();
            log.info("--------- record = {}", record);
            log.info("--------- message = {}", message);
        }
    }
}

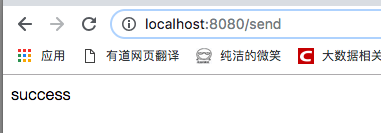

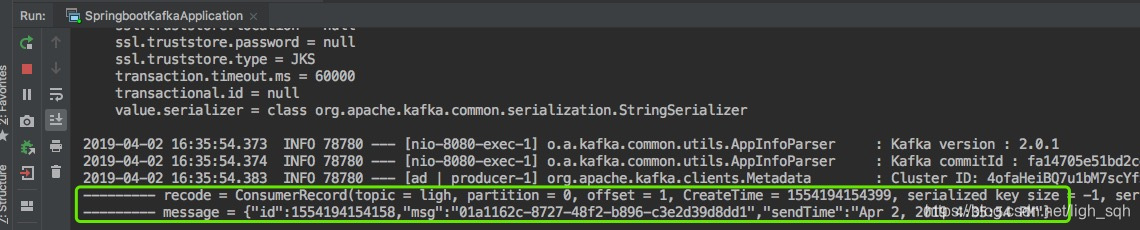

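III. Testing

1. Open localhost:8080/send in a browser to make the producer send a message.

2. Check the application console to confirm that the consumer received the message.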
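The first step can also be scripted instead of using a browser. The following is a minimal sketch using only the JDK's HttpURLConnection, assuming the Spring Boot application from section II is running locally on port 8080. SendTrigger and get are hypothetical names introduced for this example.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.net.HttpURLConnection;
import java.net.URL;
import java.nio.charset.StandardCharsets;

// Hypothetical helper that calls the /send endpoint a few times.
final class SendTrigger {

    /** Performs a GET request and returns the response body as a string. */
    static String get(String url) throws IOException {
        HttpURLConnection conn = (HttpURLConnection) new URL(url).openConnection();
        conn.setRequestMethod("GET");
        conn.setConnectTimeout(2000);
        conn.setReadTimeout(5000);
        try (BufferedReader reader = new BufferedReader(
                new InputStreamReader(conn.getInputStream(), StandardCharsets.UTF_8))) {
            StringBuilder body = new StringBuilder();
            String line;
            while ((line = reader.readLine()) != null) {
                body.append(line);
            }
            return body.toString();
        } finally {
            conn.disconnect();
        }
    }

    public static void main(String[] args) throws IOException {
        // Each call should make the producer publish one message to the "ligh" topic.
        for (int i = 0; i < 3; i++) {
            System.out.println(get("http://localhost:8080/send"));
        }
    }
}
```

Each request should be followed by one pair of consumer log lines in the application console.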

That completes the process, from installing Kafka to integrating it into a Spring Boot application.

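This article describes how to install Kafka on macOS and integrate it into a Spring Boot project. The steps include installing Kafka and ZooKeeper with Homebrew, locating the configuration files, creating a topic, and producing and consuming messages from the command line. It also shows how to add the Kafka dependency to a Spring Boot application, configure the Kafka properties, create a message entity class, and write the producer and consumer logic.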
