1. Register the Atlas hook in hive-site.xml by adding the following property inside the `<configuration>` element:

<property>

<name>hive.exec.post.hooks</name>

<value>org.apache.atlas.hive.hook.HiveHook</value>

</property>
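Step 1 can also be scripted. The following is a minimal Python sketch, not part of Atlas, that registers the hook in an existing hive-site.xml. It assumes the file's root element is `<configuration>`, and it relies on `hive.exec.post.hooks` taking a comma-separated list of hook classes, so an existing value is extended instead of overwritten. The function name `ensure_atlas_hook` is hypothetical.

```python
import xml.etree.ElementTree as ET

HOOK_PROPERTY = "hive.exec.post.hooks"
ATLAS_HOOK = "org.apache.atlas.hive.hook.HiveHook"


def ensure_atlas_hook(xml_text: str) -> str:
    """Return hive-site.xml content with the Atlas post-execution hook registered."""
    root = ET.fromstring(xml_text)  # the <configuration> root element
    for prop in root.findall("property"):
        if (prop.findtext("name") or "").strip() != HOOK_PROPERTY:
            continue
        value_el = prop.find("value")
        if value_el is None:
            value_el = ET.SubElement(prop, "value")
        # The property holds a comma-separated list; keep any existing hooks.
        hooks = [h.strip() for h in (value_el.text or "").split(",") if h.strip()]
        if ATLAS_HOOK not in hooks:
            hooks.append(ATLAS_HOOK)
        value_el.text = ",".join(hooks)
        break
    else:
        # Property not present yet: append a new <property> element.
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = HOOK_PROPERTY
        ET.SubElement(prop, "value").text = ATLAS_HOOK
    return ET.tostring(root, encoding="unicode")
```

Running it twice leaves the file unchanged the second time. ElementTree does not keep XML comments or the XML declaration, so back up the original hive-site.xml before overwriting it with the result.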

2. Add HIVE_AUX_JARS_PATH to hive-env.sh in the conf directory of the Hive installation:

export HIVE_AUX_JARS_PATH=<atlas-package-dir>/hook/hive

On this machine, the line becomes:

export HIVE_AUX_JARS_PATH=/home/dmp/apache-atlas-sources-1.0.0/distro/target/apache-atlas-1.0.0/hook/hive
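A quick sanity check for step 2 is to confirm that the directory HIVE_AUX_JARS_PATH points to exists and actually contains jar files. This is a minimal sketch, not an Atlas tool; the helper names `hook_jars` and `export_line` are hypothetical, and it only checks for `.jar` files without assuming any particular jar names inside hook/hive.

```python
import os
from pathlib import Path


def hook_jars(aux_path: str) -> list[str]:
    """List the .jar files directly under a HIVE_AUX_JARS_PATH directory."""
    p = Path(aux_path)
    if not p.is_dir():
        raise FileNotFoundError(f"HIVE_AUX_JARS_PATH is not a directory: {aux_path}")
    return sorted(f.name for f in p.iterdir() if f.suffix == ".jar")


def export_line(atlas_home: str) -> str:
    """Build the hive-env.sh line pointing at <atlas home>/hook/hive."""
    return f"export HIVE_AUX_JARS_PATH={os.path.join(atlas_home, 'hook', 'hive')}"
```

For example, `hook_jars("/home/dmp/apache-atlas-sources-1.0.0/distro/target/apache-atlas-1.0.0/hook/hive")` should return a non-empty list on the machine described here; an empty list or an exception means the path in hive-env.sh needs fixing.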

3. Run the import-hive.sh script:

/home/dmp/apache-atlas-sources-1.0.0/distro/target/apache-atlas-1.0.0/bin/import-hive.sh

4. If the output looks like the following, the import succeeded:

[root@dmp9 bin]# ./import-hive.sh

Using Hive configuration directory [/home/dmp/apache-hive-1.2.1-bin/conf]

Log file for import is /home/dmp/apache-atlas-sources-1.0.0/distro/target/apache-atlas-1.0.0/logs/import-hive.log

log4j:WARN No such property [maxFileSize] in org.apache.log4j.PatternLayout.

log4j:WARN No such property [maxBackupIndex] in org.apache.log4j.PatternLayout.

Enter username for atlas :- admin

Enter password for atlas :-

Hive Meta Data imported successfully!!!
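When the import is run from automation, the marker line printed at the end of the run can be checked instead of reading the console by hand. A minimal sketch, assuming the script's output has been captured as text; `import_status` is a hypothetical helper, and the two marker strings are copied from the success and failure outputs shown in this article.

```python
SUCCESS_MARKER = "Hive Meta Data imported successfully!!!"
FAILURE_MARKER = "Failed to import Hive Meta Data!!!"


def import_status(output: str) -> str:
    """Classify import-hive.sh console output as 'success', 'failure' or 'unknown'."""
    if FAILURE_MARKER in output:
        return "failure"
    if SUCCESS_MARKER in output:
        return "success"
    # Neither marker: the run was probably interrupted (e.g. at the login prompt).
    return "unknown"
```

Note that import-hive.sh prompts for the Atlas username and password, so the run itself is interactive; this sketch only classifies output that has already been collected.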

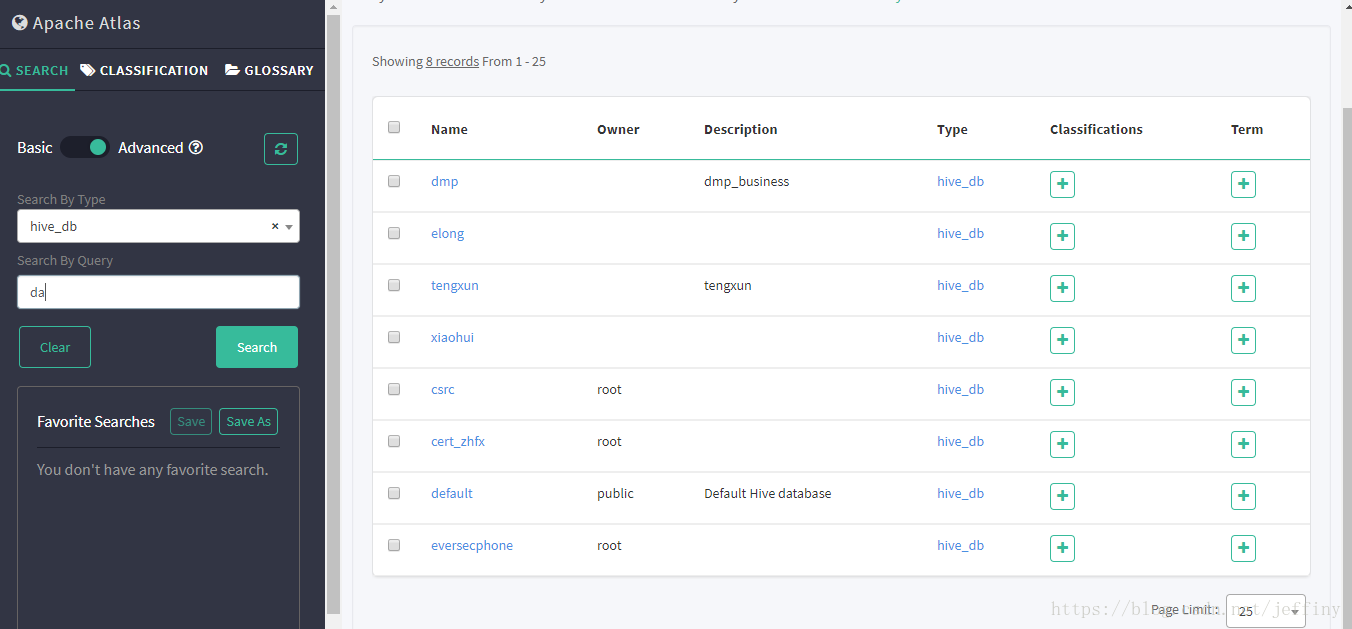

5、刷新页面后可见:

6. Import error:

[root@dmp9 bin]# ./import-hive.sh

Using Hive configuration directory [/home/dmp/apache-hive-1.2.1-bin/conf]

Log file for import is /home/apache-atlas-1.0.0-bin/apache-atlas-1.0.0/logs/import-hive.log

log4j:WARN No such property [maxFileSize] in org.apache.log4j.PatternLayout.

log4j:WARN No such property [maxBackupIndex] in org.apache.log4j.PatternLayout.

Enter username for atlas :- admin

Enter password for atlas :-

Exception in thread "main" java.lang.NoSuchMethodError: org.apache.hadoop.conf.Configuration.addDeprecations([Lorg/apache/hadoop/conf/Configuration$DeprecationDelta;)V

at org.apache.hadoop.hdfs.HdfsConfiguration.addDeprecatedKeys(HdfsConfiguration.java:66)

at org.apache.hadoop.hdfs.HdfsConfiguration.<clinit>(HdfsConfiguration.java:31)

at org.apache.atlas.utils.HdfsNameServiceResolver.init(HdfsNameServiceResolver.java:139)

at org.apache.atlas.utils.HdfsNameServiceResolver.<clinit>(HdfsNameServiceResolver.java:47)

at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.toDbEntity(HiveMetaStoreBridge.java:533)

at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.toDbEntity(HiveMetaStoreBridge.java:517)

at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.registerDatabase(HiveMetaStoreBridge.java:384)

at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.importDatabases(HiveMetaStoreBridge.java:263)

at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.importHiveMetadata(HiveMetaStoreBridge.java:247)

at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.main(HiveMetaStoreBridge.java:168)

Failed to import Hive Meta Data!!!
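The missing method in the error is printed as a JVM method descriptor: `[L...;` denotes an array of the named class, and the trailing `V` means a `void` return type. The sketch below, a hypothetical helper `decode_nosuchmethod`, follows the JVM descriptor grammar to turn such a message into Java-like syntax, which makes it clear which class is expected to provide which method.

```python
# Base-type codes from the JVM method-descriptor grammar.
BASE_TYPES = {
    "B": "byte", "C": "char", "D": "double", "F": "float",
    "I": "int", "J": "long", "S": "short", "Z": "boolean", "V": "void",
}


def _parse_type(desc: str, i: int) -> tuple[str, int]:
    """Parse one type starting at desc[i]; return (Java-style name, next index)."""
    dims = 0
    while desc[i] == "[":  # each '[' adds one array dimension
        dims += 1
        i += 1
    if desc[i] == "L":  # object type: L<internal/name>;
        end = desc.index(";", i)
        # '/' separates packages; '$' usually marks a nested class.
        name = desc[i + 1:end].replace("/", ".").replace("$", ".")
        i = end + 1
    else:
        name = BASE_TYPES[desc[i]]
        i += 1
    return name + "[]" * dims, i


def decode_nosuchmethod(line: str) -> str:
    """Render 'NoSuchMethodError: pkg.Cls.method(params)ret' as a Java-like signature."""
    if "NoSuchMethodError:" not in line:
        raise ValueError("not a NoSuchMethodError line")
    sig = line.split("NoSuchMethodError:", 1)[1].strip()
    qualified, _, rest = sig.partition("(")
    cls, _, method = qualified.rpartition(".")
    params_desc, _, ret_desc = rest.partition(")")
    params, i = [], 0
    while i < len(params_desc):
        t, i = _parse_type(params_desc, i)
        params.append(t)
    ret, _ = _parse_type(ret_desc, 0)
    return f"{ret} {cls}.{method}({', '.join(params)})"
```

Applied to the error above, it yields `void org.apache.hadoop.conf.Configuration.addDeprecations(org.apache.hadoop.conf.Configuration.DeprecationDelta[])`, i.e. the Configuration class loaded at runtime has no `addDeprecations` method taking a `DeprecationDelta` array.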

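7. Analysis: the suspicion is that a wrong jar is being loaded. The class-loading trace (the `[Loaded ...]` lines) shows: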

[Loaded org.apache.hadoop.hdfs.HdfsConfiguration from file:/home/dmp/hadoop-2.7.3/share/hadoop/hdfs/hadoop-hdfs-2.7.3.jar]

[Loaded org.apache.hadoop.conf.Configuration$DeprecationDelta from file:/home/dmp/hadoop-2.7.3/share/hadoop/common/hadoop-common-2.7.3.jar]

Exception in thread "main" [Loaded java.lang.Throwable$WrappedPrintStream from /usr/java/jdk1.8.0_181/jre/lib/rt.jar]

java.lang.NoSuchMethodError: org.apache.hadoop.conf.Configuration.addDeprecations([Lorg/apache/hadoop/conf/Configuration$DeprecationDelta;)V

at org.apache.hadoop.hdfs.HdfsConfiguration.addDeprecatedKeys(HdfsConfiguration.java:66)

at org.apache.hadoop.hdfs.HdfsConfiguration.<clinit>(HdfsConfiguration.java:31)

at org.apache.atlas.utils.HdfsNameServiceResolver.init(HdfsNameServiceResolver.java:139)

at org.apache.atlas.utils.HdfsNameServiceResolver.<clinit>(HdfsNameServiceResolver.java:47)

at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.toDbEntity(HiveMetaStoreBridge.java:533)

at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.registerDatabase(HiveMetaStoreBridge.java:388)

at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.importDatabases(HiveMetaStoreBridge.java:263)

at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.importHiveMetadata(HiveMetaStoreBridge.java:247)

at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.main(HiveMetaStoreBridge.java:168)

[Loaded java.util.IdentityHashMap$IdentityHashMapIterator from /usr/java/jdk1.8.0_181/jre/lib/rt.jar]

[Loaded java.util.IdentityHashMap$KeyIterator from /usr/java/jdk1.8.0_181/jre/lib/rt.jar]

Failed to import Hive Meta Data!!!

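1) hadoop-common-2.7.3.jar, from which Configuration$DeprecationDelta is loaded, does contain org.apache.hadoop.conf.Configuration, but the trace has no line showing Configuration itself being loaded from that jar. A NoSuchMethodError means the Configuration class actually loaded at runtime lacks the method, so it was presumably loaded from a different jar, which would explain why this method cannot be found: org.apache.hadoop.conf.Configuration.addDeprecations([Lorg/apache/hadoop/conf/Configuration$DeprecationDelta;)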

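2) Inspecting the hadoop-hdfs jar shows that it contains the org.apache.hadoop.hdfs.HdfsConfiguration class, which is the caller of addDeprecations in the stack trace (HdfsConfiguration.addDeprecatedKeys).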
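The `[Loaded <class> from <source>]` lines in the trace have the format JDK 8 prints when the JVM runs with the `-verbose:class` option (JDK 9 and later use a different unified-logging format). In a long trace, a small parser makes it easy to look up which jar each class came from. A sketch under those assumptions; `class_sources` is a hypothetical name, and only the first load of each class is kept.

```python
import re

# JDK 8 -verbose:class format; the lines may be interleaved with other output.
LOADED_RE = re.compile(r"\[Loaded (\S+) from (.+?)\]")


def class_sources(log: str) -> dict[str, str]:
    """Map each loaded class to the jar or file it was loaded from (first load wins)."""
    sources: dict[str, str] = {}
    for cls, src in LOADED_RE.findall(log):
        sources.setdefault(cls, src.removeprefix("file:"))
    return sources
```

On the excerpt above, `class_sources(trace).get("org.apache.hadoop.conf.Configuration")` returns `None`, which matches observation 1); the full trace from the start of the run is needed to see which jar supplied that class.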

8. Solution

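Edit import-hive.sh and change the classpath line as follows, so that the Hadoop jars (HADOOP_CP) come before the Hive jars (HIVE_CP):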

CP="${ATLASCPPATH}:${HADOOP_CP}:${HIVE_CP}"
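Why the order matters: for ordinary classpath entries, the JVM's application class loader searches the entries in order and uses the first one that contains a class. The sketch below simulates that lookup. The jar names and their contents are hypothetical, and the "before" order is an assumption used only to illustrate a stale copy of Configuration shadowing the one in hadoop-common-2.7.3.jar.

```python
from typing import Optional

# A classpath is an ordered list of (entry name, classes it contains).
Classpath = list[tuple[str, frozenset[str]]]


def join_cp(*groups: Classpath) -> Classpath:
    """Concatenate classpath groups in order, like CP="${A}:${B}:${C}"."""
    return [entry for group in groups for entry in group]


def resolve(classpath: Classpath, cls: str) -> Optional[str]:
    """Return the first entry containing cls, mimicking in-order classpath search."""
    for entry, classes in classpath:
        if cls in classes:
            return entry
    return None


CONF = "org.apache.hadoop.conf.Configuration"
# Hypothetical contents, for illustration only.
ATLASCPPATH = [("atlas-bridge.jar", frozenset())]
HIVE_CP = [("stale-hadoop-common.jar", frozenset({CONF}))]  # hypothetical old copy
HADOOP_CP = [("hadoop-common-2.7.3.jar", frozenset({CONF, CONF + "$DeprecationDelta"}))]

before = join_cp(ATLASCPPATH, HIVE_CP, HADOOP_CP)  # assumed original order
after = join_cp(ATLASCPPATH, HADOOP_CP, HIVE_CP)   # order from the fix above
```

Here `resolve(before, CONF)` picks the stale jar while `resolve(after, CONF)` picks hadoop-common-2.7.3.jar. If a classpath entry is a `dir/*` wildcard, the Java documentation does not specify the order in which the jars inside that directory are enumerated, so conflicts within a single directory cannot be fixed by reordering CP.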

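This article describes how to configure hive-site.xml, add the HIVE_AUX_JARS_PATH environment variable, and modify the import-hive.sh script to resolve the NoSuchMethodError raised while importing Hive metadata into Apache Atlas, so that the metadata import finally succeeds.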