project-dao接收kafka发来的数据,保存到mysql,再将数据通过kafka分发给es和redis

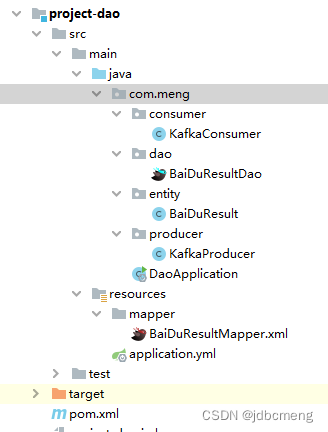

1、project-dao代码结构

2、pom.xml

<parent>

<artifactId>spring-cloud-project</artifactId>

<groupId>com.meng</groupId>

<version>1.0-SNAPSHOT</version>

</parent>

<modelVersion>4.0.0</modelVersion>

<artifactId>project-dao</artifactId>

<properties>

<spring-boot.version>2.3.12.RELEASE</spring-boot.version>

</properties>

<dependencies>

<!--注意:由于 spring-boot-starter-web 默认替我们引入了核心启动器 spring-boot-starter,

因此,当 Spring Boot 项目中的 pom.xml 引入了 spring-boot-starter-web 的依赖后,

就无须在引入 spring-boot-starter 核心启动器的依赖了-->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

<version>${spring-boot.version}</version>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<version>${spring-boot.version}</version>

<scope>test</scope>

</dependency>

<!--kafka-->

<dependency>

<groupId>org.springframework.kafka</groupId>

<artifactId>spring-kafka</artifactId>

<version>${spring-boot.version}</version>

</dependency>

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<version>1.18.16</version>

<scope>compile</scope>

</dependency>

<!--整合mytais-->

<dependency>

<groupId>org.mybatis.spring.boot</groupId>

<artifactId>mybatis-spring-boot-starter</artifactId>

<version>2.1.3</version>

</dependency>

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>5.1.6</version>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>druid</artifactId>

<version>1.1.20</version>

</dependency>

<!--分页插件-->

<dependency>

<groupId>com.github.pagehelper</groupId>

<artifactId>pagehelper-spring-boot-starter</artifactId>

<version>1.2.5</version>

</dependency>

<!--consul-->

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-starter-consul-discovery</artifactId>

<exclusions>

<exclusion>

<artifactId>jackson-annotations</artifactId>

<groupId>com.fasterxml.jackson.core</groupId>

</exclusion>

<exclusion>

<artifactId>jackson-core</artifactId>

<groupId>com.fasterxml.jackson.core</groupId>

</exclusion>

<exclusion>

<artifactId>jackson-databind</artifactId>

<groupId>com.fasterxml.jackson.core</groupId>

</exclusion>

</exclusions>

</dependency>

<!-- <dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-starter-consul-config</artifactId>

</dependency>

这个consul-config我暂时还不清楚什么用,但如果引入这个jar,application.yml文件名就要改名(bootstrap.yml),否则就找不到对应的配置内容

-->

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>1.2.28</version>

</dependency>

</dependencies>

2、application.yml

server:

port: 81

spring:

application:

name: project-dao

cloud:

consul:

host: 192.168.233.137

port: 8500

discovery:

instance-id: ${spring.application.name}:${server.port} #这个id作为唯一识别的id必填

service-name: consul-dao

#开启ip地址注册

prefer-ip-address: true

#实例的请求ip

ip-address: ${spring.cloud.client.ip-address}

heartbeat:

enabled: true #不打开心跳机制,控制台会显示红叉

datasource:

driverClassName: com.mysql.jdbc.Driver

url: jdbc:mysql://192.168.233.137:33306/today_top_news?zeroDateTimeBehavior=convertToNull&useUnicode=true&characterEncoding=utf8&allowMultiQueries=true

username: root

password: 123456

kafka:

bootstrap-servers: 192.168.233.137:9092

consumer:

group-id: testGroupBase # 指定默认消费者group id --> 由于在kafka中,同一组中的consumer不会读取到同一个消息,依靠groud.id设置组名

auto-offset-reset: earliest # smallest和largest才有效,如果smallest重新0开始读取,如果是largest从logfile的offset读取。一般情况下我们都是设置smallest

enable-auto-commit: true # enable.auto.commit:true --> 设置自动提交offset

auto-commit-interval: 100 #如果'enable.auto.commit'为true,则消费者偏移自动提交给Kafka的频率(以毫秒为单位),默认值为5000。

key-deserializer: org.apache.kafka.common.serialization.StringDeserializer # 指定消息key和消息体的编解码方式

value-deserializer: org.apache.kafka.common.serialization.StringDeserializer # 指定消息key和消息体的编解码方式

mybatis:

mapperLocations: classpath*:mapper/*Mapper.xml

# 打印sql语句

logging:

level:

com.meng.user: debug

#pagehelper分页插件

pagehelper:

helper-dialect: mysql

reasonable: true

support-methods-arguments: true

params: count=countSql

3、启动类DaoApplication

@SpringBootApplication

@MapperScan("com.meng.dao")

@EnableDiscoveryClient

public class DaoApplication {

public static void main(String[] args) {

SpringApplication.run(DaoApplication.class , args);

}

}

4、实体类和Dao

实体类BaiDuResult和项目一爬虫里的一样,这里不再粘贴了,后面项目也不粘贴了,dao就一个插入sql

BaiDuResultDao

@Repository

public interface BaiDuResultDao {

int insertSelective(BaiDuResult record);

BaiDuResultMapper.xml

<insert id="insertSelective" parameterType="com.meng.entity.BaiDuResult" >

insert into t_baidu_result

<selectKey resultType="java.lang.Long" order="AFTER" keyProperty="id">

select LAST_INSERT_ID()

</selectKey>

<trim prefix="(" suffix=")" suffixOverrides="," >

<if test="id != null" >

id,

</if>

<if test="title != null" >

title,

</if>

<if test="content != null" >

content,

</if>

<if test="sourceUrl != null" >

source_url,

</if>

<if test="imgUrl != null" >

img_url,

</if>

<if test="createTime != null" >

create_time,

</if>

<if test="updateTime != null" >

update_time,

</if>

<if test="delFlag != null" >

del_flag,

</if>

</trim>

<trim prefix="values (" suffix=")" suffixOverrides="," >

<if test="id != null" >

#{id,jdbcType=BIGINT},

</if>

<if test="title != null" >

#{title,jdbcType=VARCHAR},

</if>

<if test="content != null" >

#{content,jdbcType=VARCHAR},

</if>

<if test="sourceUrl != null" >

#{sourceUrl,jdbcType=VARCHAR},

</if>

<if test="imgUrl != null" >

#{imgUrl,jdbcType=VARCHAR},

</if>

<if test="createTime != null" >

#{createTime,jdbcType=TIMESTAMP},

</if>

<if test="updateTime != null" >

#{updateTime,jdbcType=TIMESTAMP},

</if>

<if test="delFlag != null" >

#{delFlag,jdbcType=TINYINT},

</if>

</trim>

</insert>

selectKey 标签用于插入后返回自增的id,这样发送给es和redis的数据就是有id的了

5、KafkaConsumer用于接收kafka消息

@Configuration

@Slf4j

public class KafkaConsumer {

@Autowired

private BaiDuResultDao baiDuResultDao;

@Autowired

private KafkaProducer kafkaProducer;

@KafkaListener(topics = {"baiDuResultTopic"})

public void listen(ConsumerRecord<String, String> record){

Optional<String> kafkaMessage = Optional.ofNullable(record.value());

if (kafkaMessage.isPresent()) {

String itemStr = kafkaMessage.get();

log.info("---接收到kafka消息-----msg:{}" , itemStr);

if(!StringUtils.isEmpty(itemStr)){

List<BaiDuResult> list = JSONArray.parseArray(itemStr , BaiDuResult.class);

list.forEach((baiDuResult)->{

int i = baiDuResultDao.insertSelective(baiDuResult);

//插入数据库后返回id,发送消息给es和redis

log.info("------发送给es+redis的消息:{}" , baiDuResult);

kafkaProducer.sendMsg("resultMsgTopic" , JSONObject.toJSONString(baiDuResult));

});

}

}

}

}

6、kafkaProducer再将消息发送出去

@Component

public class KafkaProducer {

@Resource

private KafkaTemplate<String , String> kafkaTemplate;

public void sendMsg(String topic , String msg){

kafkaTemplate.send(topic , msg);

}

}

以上,数据持久化项目

下一篇:项目三:project-es搜索

该项目演示了如何使用Spring Boot应用从Kafka消费者接收数据,将其存储到MySQL数据库,然后将处理后的数据通过Kafka发送到Elasticsearch和Redis。配置包括Consul服务发现、MyBatis分页插件以及Kafka监听器的实现。

该项目演示了如何使用Spring Boot应用从Kafka消费者接收数据,将其存储到MySQL数据库,然后将处理后的数据通过Kafka发送到Elasticsearch和Redis。配置包括Consul服务发现、MyBatis分页插件以及Kafka监听器的实现。

9万+

9万+

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?