Video Playback via FFmpeg

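Introduction to FFmpeg

FFmpeg is a suite of open-source programs for recording and converting digital audio and video and for turning them into streams. It is licensed under the LGPL or the GPL. It provides a complete solution for recording, converting, and streaming audio and video. It includes libavcodec, a highly capable audio / video codec library; to ensure portability and codec quality, much of the code in libavcodec was written from scratch.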

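FFmpeg is developed on Linux, but it can also be compiled and run on other operating systems, including Windows and Mac OS X. The project was started by Fabrice Bellard and was maintained mainly by Michael Niedermayer from 2004 to 2015. Many FFmpeg developers come from the MPlayer project, and FFmpeg was for a long time hosted on the MPlayer project's servers. The project name comes from the MPEG video coding standards; the "FF" prefix stands for "Fast Forward".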

Playing Video from the FFmpeg Command Line

FFmpeg ships a ready-made program, ffplay, that plays video from the command line.

>ffplay.exe d:\Titanic.ts

Input #0, mpegts, from 'd:\Titanic.ts':

Duration: 00:00:48.03, start: 1.463400, bitrate: 589 kb/s

Program 1

Metadata:

service_name : Service01

service_provider: FFmpeg

Stream #0:0[0x100]: Video: h264 (High) ([27][0][0][0] / 0x001B), yuv420p(progressive), 640x272 [SAR 1:1 DAR 40:17], 23.98 fps, 23.98 tbr, 90k tbn, 47.95 tbc

Stream #0:1[0x101]: Audio: mp3 ([3][0][0][0] / 0x0003), 48000 Hz, stereo, fltp, 128 kb/s

5.78 A-V: -0.028 fd=8 aq=20KB vq=14KB sq=0B f=0/0 ← status line for the video being played; it updates in real time
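The stream line above reports 640x272 with SAR 1:1 and DAR 40:17. As a small worked example of how those numbers relate, the sketch below derives the display aspect ratio from the frame size and the sample aspect ratio. The names Ratio, gcd64, and display_aspect_ratio are made up for this illustration and are not FFmpeg APIs.

```cpp
#include <cassert>
#include <cstdint>

// A ratio num:den, used here for both sample and display aspect ratios.
struct Ratio {
    int64_t num;
    int64_t den;
};

// Greatest common divisor by Euclid's algorithm (inputs must be positive).
static int64_t gcd64(int64_t a, int64_t b) {
    while (b != 0) {
        int64_t t = a % b;
        a = b;
        b = t;
    }
    return a;
}

// DAR = (width * SAR.num) : (height * SAR.den), reduced to lowest terms.
Ratio display_aspect_ratio(int width, int height, Ratio sar) {
    assert(width > 0 && height > 0 && sar.num > 0 && sar.den > 0);
    int64_t num = static_cast<int64_t>(width) * sar.num;
    int64_t den = static_cast<int64_t>(height) * sar.den;
    int64_t g = gcd64(num, den);
    return Ratio{num / g, den / g};
}
```

Calling display_aspect_ratio(640, 272, Ratio{1, 1}) reduces 640:272 by their common divisor 16, which gives the 40:17 shown by ffplay.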

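Playing Video with FFmpeg + SDL

SDL (Simple DirectMedia Layer) is an open-source, cross-platform multimedia development library written in C. It provides functions for controlling graphics, sound, and input, so developers can build applications for several platforms (Linux, Windows, Mac OS X, and others) with the same or very similar code. Today SDL is used mostly for games, emulators, media players, and other multimedia applications.

We will decode with FFmpeg and then play back with SDL.

Before playback, you can run ffmpeg -formats in a console to list the supported audio / video container formats (muxers / demuxers):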

> ffmpeg -formats

ffmpeg version 4.1 Copyright (c) 2000-2018 the FFmpeg developers

built with gcc 8.2.1 (GCC) 20181017

libavformat 58. 20.100 / 58. 20.100

... ...

File formats:

D. = Demuxing supported

.E = Muxing supported

--

D 3dostr 3DO STR

E 3g2 3GP2 (3GPP2 file format)

E 3gp 3GP (3GPP file format)

D 4xm 4X Technologies

E a64 a64 - video for Commodore 64

D aa Audible AA format files

D aac raw ADTS AAC (Advanced Audio Coding)

DE ac3 raw AC-3

D acm Interplay ACM

D act ACT Voice file format

D adf Artworx Data Format

D adp ADP

D ads Sony PS2 ADS

E adts ADTS AAC (Advanced Audio Coding)

DE adx CRI ADX

... ...

Run ffmpeg -codecs to list the supported codecs:

> ffmpeg.exe -codecs

ffmpeg version 4.1 Copyright (c) 2000-2018 the FFmpeg developers

built with gcc 8.2.1 (GCC) 20181017

libavcodec 58. 35.100 / 58. 35.100

... ...

Codecs:

D..... = Decoding supported

.E.... = Encoding supported

..V... = Video codec

..A... = Audio codec

..S... = Subtitle codec

...I.. = Intra frame-only codec

....L. = Lossy compression

.....S = Lossless compression

-------

D.VI.S 012v Uncompressed 4:2:2 10-bit

D.V.L. 4xm 4X Movie

D.VI.S 8bps QuickTime 8BPS video

.EVIL. a64_multi Multicolor charset for Commodore 64 (encoders: a64multi )

.EVIL. a64_multi5 Multicolor charset for Commodore 64, extended with 5th color (colram) (encoders: a64multi5 )

D.V..S aasc Autodesk RLE

D.VIL. aic Apple Intermediate Codec

DEVI.S alias_pix Alias/Wavefront PIX image

DEVIL. amv AMV Video

D.V.L. anm Deluxe Paint Animation

D.V.L. ansi ASCII/ANSI art

DEV..S apng APNG (Animated Portable Network Graphics) image

DEVIL. asv1 ASUS V1

DEVIL. asv2 ASUS V2

... ...
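To make the six-character legend in the codec listing concrete, here is a minimal sketch that decodes one flag column such as "DEVIL." into named fields. CodecCaps and parse_codec_flags are hypothetical helpers written for this example; they only mirror the legend printed above and are not part of FFmpeg.

```cpp
#include <cassert>
#include <string>

// One field per position of the legend printed by `ffmpeg -codecs`.
struct CodecCaps {
    bool decode = false;      // position 0: 'D'
    bool encode = false;      // position 1: 'E'
    char type = '?';          // position 2: 'V', 'A' or 'S'
    bool intra_only = false;  // position 3: 'I'
    bool lossy = false;       // position 4: 'L'
    bool lossless = false;    // position 5: 'S'
};

// Decode a flag column such as "DEVIL." or "D.V..S".
CodecCaps parse_codec_flags(const std::string& flags) {
    assert(flags.size() == 6);
    CodecCaps caps;
    caps.decode = flags[0] == 'D';
    caps.encode = flags[1] == 'E';
    caps.type = flags[2];
    caps.intra_only = flags[3] == 'I';
    caps.lossy = flags[4] == 'L';
    caps.lossless = flags[5] == 'S';
    return caps;
}
```

For the "DEVIL. amv" row this reports a lossy, intra-only video codec that can be both decoded and encoded; for "D.V..S aasc" it reports a decode-only lossless video codec.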

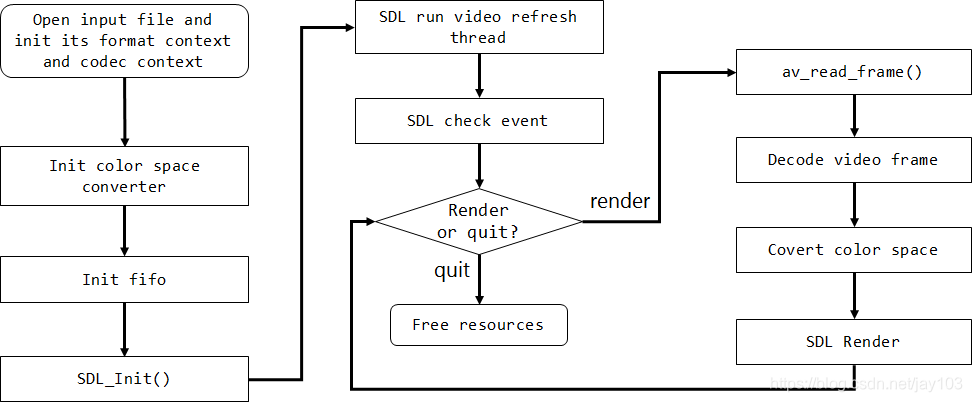

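Playback Flow

Playback Code

Video playback requires the decoded image format to match the image format the renderer expects. Converting between the two is color space conversion (pixel format conversion), which was briefly introduced in the post "Capturing Video via FFmpeg". FFmpeg offers two ways to do the conversion:

- Use swscaler

- Use a filter (AVFilterGraph)

The code in this article is based on FFmpeg 4.1.

- Below is an outline of playback after format conversion with swscaler. The implementations of the individual functions and resource cleanup are omitted.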

g_video_index = open_input_file(video_file, AVMEDIA_TYPE_VIDEO, &g_fmt_ctx, &g_dec_ctx);

RETURN_IF_FALSE(g_video_index >= 0);

hr = init_cvt_frame_and_sws(AV_PIX_FMT_YUV420P, g_dec_ctx, &g_yuv420p_frame, &g_yuv420p_buf, &g_img_cvt_ctx);

GOTO_IF_FAILED(hr);

int frame_rate = ceil(av_q2d(av_guess_frame_rate(g_fmt_ctx, g_fmt_ctx->streams[g_video_index], NULL)));

// SDL IYUV equals YUV420P in ffmpeg

hr = sdl_helper.init(SDL_PIXELFORMAT_IYUV, g_dec_ctx->width, g_dec_ctx->height, frame_rate, sdl_fill_frame);

GOTO_IF_FAILED(hr);

hr = sdl_helper.run();

GOTO_IF_FAILED(hr);

- Below is an outline of playback using a filter. The implementations of the individual functions and resource cleanup are omitted.

g_video_index = open_input_file(video_file, AVMEDIA_TYPE_VIDEO, &g_fmt_ctx, &g_dec_ctx);

RETURN_IF_FALSE(g_video_index >= 0);

sprintf_s(filter_descr, "scale=%d:%d", SCALE_WIDTH, SCALE_HEIGHT);

AVRational stream_time_base = g_fmt_ctx->streams[g_video_index]->time_base;

hr = g_video_filter.init(g_dec_ctx, stream_time_base, filter_descr);

GOTO_IF_FAILED(hr);

hr = init_frame_and_buf(AV_PIX_FMT_YUV420P, SCALE_WIDTH, SCALE_HEIGHT, &g_yuv420p_frame, &g_yuv420p_buf);

GOTO_IF_FAILED(hr);

int frame_rate = ceil(av_q2d(av_guess_frame_rate(g_fmt_ctx, g_fmt_ctx->streams[g_video_index], NULL)));

// SDL IYUV equals YUV420P in ffmpeg

// The filter outputs SCALE_WIDTH x SCALE_HEIGHT frames, so the texture must be created with that size

hr = sdl_helper.init(SDL_PIXELFORMAT_IYUV, SCALE_WIDTH, SCALE_HEIGHT, frame_rate, sdl_fill_frame);

GOTO_IF_FAILED(hr);

hr = sdl_helper.run();

GOTO_IF_FAILED(hr);
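Both outlines rely on the comment "SDL IYUV equals YUV420P": a full-resolution Y plane followed by U and V planes subsampled by two in each direction. The sketch below computes the pitches, plane offsets, and total size of such a tightly packed buffer. It assumes even dimensions and no row padding; Yuv420pLayout and yuv420p_layout are illustrative names, not FFmpeg or SDL APIs.

```cpp
#include <cassert>
#include <cstddef>

// Offsets and pitches of a tightly packed planar YUV 4:2:0 image (Y, then U, then V).
struct Yuv420pLayout {
    std::size_t y_pitch;     // bytes per row of the Y plane
    std::size_t uv_pitch;    // bytes per row of the U and V planes
    std::size_t u_offset;    // start of the U plane inside the buffer
    std::size_t v_offset;    // start of the V plane inside the buffer
    std::size_t total_size;  // total bytes needed for one frame
};

Yuv420pLayout yuv420p_layout(int width, int height) {
    assert(width > 0 && height > 0 && width % 2 == 0 && height % 2 == 0);
    Yuv420pLayout l;
    l.y_pitch = static_cast<std::size_t>(width);
    l.uv_pitch = l.y_pitch / 2;
    const std::size_t y_size = l.y_pitch * static_cast<std::size_t>(height);
    const std::size_t uv_size = l.uv_pitch * static_cast<std::size_t>(height / 2);
    l.u_offset = y_size;
    l.v_offset = y_size + uv_size;
    l.total_size = y_size + 2 * uv_size;
    return l;
}
```

For the 640x272 sample this gives a Y plane of 174080 bytes, two chroma planes of 43520 bytes each, and 261120 bytes in total, that is 1.5 bytes per pixel.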

open_input_file function

See here; it is exactly the same.

init_cvt_frame_and_sws function

See here; it is exactly the same.

Video_filter::init function

Initializes and connects the filter graph.

int Video_filter::init(AVCodecContext *dec_ctx, AVRational stream_time_base, const char *filters_descr)

{

char args[512] = {0};

int hr = -1;

const AVPixelFormat pix_fmts[] = { AV_PIX_FMT_YUV420P, AV_PIX_FMT_NONE };

const AVFilter *buffersrc = avfilter_get_by_name("buffer");

const AVFilter *buffersink = avfilter_get_by_name("buffersink");

AVFilterInOut *outputs = avfilter_inout_alloc();

AVFilterInOut *inputs = avfilter_inout_alloc();

m_filter_graph = avfilter_graph_alloc();

/* buffer video source: the decoded frames from the decoder will be inserted here. */

_snprintf_s(args, sizeof(args),

"video_size=%dx%d:pix_fmt=%d:time_base=%d/%d:pixel_aspect=%d/%d",

dec_ctx->width, dec_ctx->height, dec_ctx->pix_fmt,

stream_time_base.num, stream_time_base.den,

dec_ctx->sample_aspect_ratio.num, dec_ctx->sample_aspect_ratio.den);

hr = avfilter_graph_create_filter(&m_buffersrc_ctx, buffersrc, "in", args, NULL, m_filter_graph);

GOTO_IF_FAILED(hr); // stop at the first failure instead of overwriting hr

/* buffer video sink: to terminate the filter chain. */

hr = avfilter_graph_create_filter(&m_buffersink_ctx, buffersink, "out", NULL, NULL, m_filter_graph);

GOTO_IF_FAILED(hr);

hr = av_opt_set_int_list(m_buffersink_ctx, "pix_fmts", pix_fmts, AV_PIX_FMT_NONE, AV_OPT_SEARCH_CHILDREN);

GOTO_IF_FAILED(hr);

/* Set the endpoints for the filter graph. The filter_graph will

be linked to the graph described by filters_descr. */

outputs->name = av_strdup("in");

outputs->filter_ctx = m_buffersrc_ctx;

outputs->pad_idx = 0;

outputs->next = NULL;

inputs->name = av_strdup("out");

inputs->filter_ctx = m_buffersink_ctx;

inputs->pad_idx = 0;

inputs->next = NULL;

hr = avfilter_graph_parse_ptr(m_filter_graph, filters_descr, &inputs, &outputs, NULL);

GOTO_IF_FAILED(hr);

hr = avfilter_graph_config(m_filter_graph, NULL);

GOTO_IF_FAILED(hr);

hr = 0;

RESOURCE_FREE:

avfilter_inout_free(&inputs);

avfilter_inout_free(&outputs);

return hr;

}
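The function above builds the option string for the "buffer" source with the MSVC-specific _snprintf_s. As a portable sketch of the same step, the helper below formats the string with std::snprintf. Rational and build_buffersrc_args are hypothetical stand-ins (Rational mimics the num/den pair of AVRational), and replacing a zero aspect-ratio denominator with 1 is an assumption of this example, not something the original code does.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdio>
#include <string>

// Stand-in for a num/den pair such as AVRational.
struct Rational {
    int num;
    int den;
};

// Build the option string for the "buffer" source filter.
std::string build_buffersrc_args(int width, int height, int pix_fmt,
                                 Rational time_base, Rational sar) {
    assert(width > 0 && height > 0 && time_base.den != 0);
    if (sar.den == 0)
        sar.den = 1;  // assumption of this sketch: treat an unset ratio as num/1
    char buf[256];
    int n = std::snprintf(buf, sizeof(buf),
                          "video_size=%dx%d:pix_fmt=%d:time_base=%d/%d:pixel_aspect=%d/%d",
                          width, height, pix_fmt,
                          time_base.num, time_base.den, sar.num, sar.den);
    // snprintf returns the untruncated length; anything >= sizeof(buf) was cut off.
    assert(n > 0 && static_cast<std::size_t>(n) < sizeof(buf));
    return std::string(buf, static_cast<std::size_t>(n));
}
```

For the sample stream (640x272, pixel format value 0 for AV_PIX_FMT_YUV420P, a 1/90000 time base, SAR 1:1) this yields "video_size=640x272:pix_fmt=0:time_base=1/90000:pixel_aspect=1/1".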

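SDL_video_helper::init function

It initializes the basic video parameters (width, height, frame rate) and creates the window, the renderer, the streaming texture, and the refresh thread.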

typedef int (*PF_GET_FRAME)(uint8_t** pixel, int* pitch);

int SDL_video_helper::init(

Uint32 format,

int width,

int height,

int frame_rate,

PF_GET_FRAME get_frame_callback)

{

int hr = -1;

m_get_frame_callback = get_frame_callback;

hr = SDL_Init(SDL_INIT_VIDEO | SDL_INIT_TIMER);

RETURN_IF_FAILED(hr);

m_screen = SDL_CreateWindow( "Video Player Window", SDL_WINDOWPOS_UNDEFINED,

SDL_WINDOWPOS_UNDEFINED, width, height, SDL_WINDOW_OPENGL);

RETURN_IF_NULL(m_screen);

m_renderer = SDL_CreateRenderer(m_screen, -1, 0);

RETURN_IF_NULL(m_renderer);

m_texture = SDL_CreateTexture(m_renderer, format, SDL_TEXTUREACCESS_STREAMING, width, height);

RETURN_IF_NULL(m_texture);

m_frame_rate = frame_rate;

m_thread_video_evt = SDL_CreateThread(video_refresh_thread, NULL, (void*)this);

RETURN_IF_NULL(m_thread_video_evt);

return 0;

}

SDL_video_helper::video_refresh_thread function

The thread that requests a screen refresh at the frame rate.

static int video_refresh_thread(void *this_ptr)

{

return ((SDL_video_helper*)this_ptr)->refresh_video_event();

}

int refresh_video_event()

{

m_exit_thread = false;

m_pause_thread = false;

Uint32 interval = 1000 / m_frame_rate;

while (!m_exit_thread) {

if (!m_pause_thread) {

if (!SDL_HasEvent(MY_SDL_REFRESH_EVENT)) {

SDL_Event evt;

evt.type = MY_SDL_REFRESH_EVENT;

SDL_PushEvent(&evt);

}

}

SDL_Delay(interval);

}

SDL_Event evt;

evt.type = MY_SDL_BREAK_EVENT; //Break the run loop

SDL_PushEvent(&evt);

return 0;

}
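The refresh thread sleeps for 1000 / m_frame_rate milliseconds, where the frame rate was rounded up with ceil() in the outline code. The sketch below reproduces that integer arithmetic so the rounding is visible. Rational, round_up_fps, and refresh_interval_ms are illustrative names, and 24000/1001 is an assumed exact rate for the "23.98 fps" sample.

```cpp
#include <cassert>

// Stand-in for a num/den frame rate such as the AVRational from av_guess_frame_rate().
struct Rational {
    int num;
    int den;
};

// Integer equivalent of ceil(num / den) for positive values.
int round_up_fps(Rational rate) {
    assert(rate.num > 0 && rate.den > 0);
    return (rate.num + rate.den - 1) / rate.den;
}

// Delay between refresh events in whole milliseconds (truncating division).
unsigned refresh_interval_ms(int fps) {
    assert(fps > 0);  // guards the division that the thread performs unchecked
    return 1000u / static_cast<unsigned>(fps);
}
```

With 24000/1001 the rate rounds up to 24 and the delay truncates to 41 ms, so the timer fires slightly faster than the source rate. This is fine for a demo; a player that must stay in sync would normally schedule frames from their timestamps instead of a fixed delay.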

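SDL_video_helper::run function

Similar to the classic Windows WindowProc function, it handles the various events / messages.

When it receives MY_SDL_REFRESH_EVENT, it fetches one frame through the callback and presents it with the renderer.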

int SDL_video_helper::run()

{

SDL_Event sdl_event;

uint8_t* pixel = NULL;

int pitch = 0;

while (true) {

SDL_WaitEvent(&sdl_event);

switch (sdl_event.type) {

case MY_SDL_REFRESH_EVENT: {

// The braces give hr its own scope; C++ does not allow a jump to a later case label to skip its initialization.

int hr = m_get_frame_callback(&pixel, &pitch);

if (hr >= 0) {

SDL_UpdateTexture( m_texture, NULL, pixel, pitch );

SDL_RenderClear( m_renderer );

SDL_RenderCopy( m_renderer, m_texture, NULL, NULL);

SDL_RenderPresent( m_renderer );

}

else if (hr == SDL_ERR_EOF)

m_exit_thread = true;

break;

}

case SDL_KEYDOWN:

if (sdl_event.key.keysym.sym == SDLK_SPACE) //Pause

m_pause_thread = !m_pause_thread;

else if (sdl_event.key.keysym.sym == SDLK_ESCAPE)

m_exit_thread = true;

break;

case SDL_QUIT:

m_exit_thread = true;

break;

case MY_SDL_BREAK_EVENT:

return 0;

}

}

return 0;

}

sdl_fill_frame callback (w/ swscaler)

The SDL callback used when the color space is converted with swscaler.

int sdl_fill_frame(uint8_t** pixel, int* pitch)

{

int hr = 0;

std::vector<AVFrame*> decoded_frames;

if (g_cached_frames.empty() && !g_finished) {

hr = decode_av_frame(g_fmt_ctx, g_dec_ctx, g_video_index, decoded_frames, &g_finished);

RETURN_IF_FAILED(hr);

for (size_t i = 0; i < decoded_frames.size(); ++i)

g_cached_frames.push(decoded_frames[i]);

}

if (g_cached_frames.empty())

return g_finished ? SDL_ERR_EOF : SDL_ERR_UNKNOWN;

AVFrame* frame = g_cached_frames.front();

int height = sws_scale(g_img_cvt_ctx, (const uint8_t* const*)frame->data, frame->linesize, 0,

g_dec_ctx->height, g_yuv420p_frame->data, g_yuv420p_frame->linesize);

GOTO_IF_FALSE(height > 0);

*pixel = g_yuv420p_frame->data[0];

*pitch = g_yuv420p_frame->linesize[0];

hr = 0;

RESOURCE_FREE:

av_frame_free(&frame);

g_cached_frames.pop();

return hr;

}

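sdl_fill_frame callback (w/ filter)

The SDL callback used when the color space is converted with a filter. It depends on how a YUV surface is stored in memory; for details see my other article, "RGB and YUV Formats".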

int sdl_fill_frame(uint8_t** pixel, int* pitch)

{

int hr = 0;

std::vector<AVFrame*> decoded_frames;

if (g_cached_frames.empty() && !g_finished) {

hr = decode_av_frame(g_fmt_ctx, g_dec_ctx, g_video_index, decoded_frames, &g_finished);

RETURN_IF_FAILED(hr);

for (size_t i = 0; i < decoded_frames.size(); ++i) {

g_video_filter.do_filter(decoded_frames[i], g_cached_frames);

av_frame_free(&decoded_frames[i]);

}

}

if (g_cached_frames.empty())

return g_finished ? SDL_ERR_EOF : SDL_ERR_UNKNOWN;

AVFrame* frame = g_cached_frames.front();

// Copy plane by plane and row by row: the filtered frame's linesize may be padded and differ from the destination's.

// av_image_copy() is declared in <libavutil/imgutils.h>; g_yuv420p_frame->data is assumed to point into g_yuv420p_buf.

av_image_copy(g_yuv420p_frame->data, g_yuv420p_frame->linesize, (const uint8_t**)frame->data, frame->linesize, AV_PIX_FMT_YUV420P, frame->width, frame->height);

*pixel = g_yuv420p_frame->data[0];

*pitch = g_yuv420p_frame->linesize[0];

av_frame_free(&frame);

g_cached_frames.pop();

return 0;

}
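The callback above has to move three planes from the filtered frame into one contiguous buffer for SDL. Decoder and filter output often pads each row, so the source stride (linesize) can be larger than the visible width. The sketch below shows a stride-aware copy in plain C++, with no FFmpeg dependency; copy_plane and pack_yuv420p are hypothetical helpers, and even frame dimensions are assumed.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Copy one plane row by row, honoring different source and destination strides.
void copy_plane(uint8_t* dst, int dst_stride,
                const uint8_t* src, int src_stride,
                int row_bytes, int rows) {
    assert(row_bytes <= dst_stride && row_bytes <= src_stride);
    for (int y = 0; y < rows; ++y) {
        std::memcpy(dst + static_cast<std::size_t>(y) * dst_stride,
                    src + static_cast<std::size_t>(y) * src_stride,
                    static_cast<std::size_t>(row_bytes));
    }
}

// Pack possibly padded Y/U/V planes into one tightly packed YUV420P buffer.
std::vector<uint8_t> pack_yuv420p(const uint8_t* const data[3], const int linesize[3],
                                  int width, int height) {
    assert(width > 0 && height > 0 && width % 2 == 0 && height % 2 == 0);
    const int cw = width / 2;   // chroma width
    const int ch = height / 2;  // chroma height
    const std::size_t y_size = static_cast<std::size_t>(width) * height;
    const std::size_t c_size = static_cast<std::size_t>(cw) * ch;
    std::vector<uint8_t> out(y_size + 2 * c_size);
    copy_plane(out.data(), width, data[0], linesize[0], width, height);
    copy_plane(out.data() + y_size, cw, data[1], linesize[1], cw, ch);
    copy_plane(out.data() + y_size + c_size, cw, data[2], linesize[2], cw, ch);
    return out;
}
```

In an FFmpeg program the same job can be left to av_image_copy() or av_image_copy_to_buffer() from libavutil/imgutils.h; the hand-written version is shown only to make the role of the strides explicit.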

decode_av_frame function

See here; it is exactly the same.

Video_filter::do_filter function

The filter in this article only performs color space conversion, but the function below works for any filter.

int Video_filter::do_filter(AVFrame* in_frame, std::queue<AVFrame*>& out_frames)

{

int hr = -1;

in_frame->pts = in_frame->best_effort_timestamp;

hr = av_buffersrc_add_frame_flags(m_buffersrc_ctx, in_frame, AV_BUFFERSRC_FLAG_KEEP_REF);

RETURN_IF_FAILED(hr);

AVFrame* filt_frame = NULL;

while (true) {

filt_frame = av_frame_alloc();

hr = av_buffersink_get_frame(m_buffersink_ctx, filt_frame);

if (hr == AVERROR(EAGAIN) || hr == AVERROR_EOF) {

hr = 0; // no more output for now; this is the normal way out of the loop, not an error

break;

}

GOTO_IF_FAILED(hr);

out_frames.push(filt_frame);

filt_frame = NULL;

}

RESOURCE_FREE:

if (NULL != filt_frame)

av_frame_free(&filt_frame);

return hr;

}

Playback under Other Frameworks

See the corresponding articles.

– EOF –
