It turns out the expert's GitHub instructions were just missing one parameter: int4....

I spent several days on this by myself and never got it working. If the expert hadn't told me, I still wouldn't know...

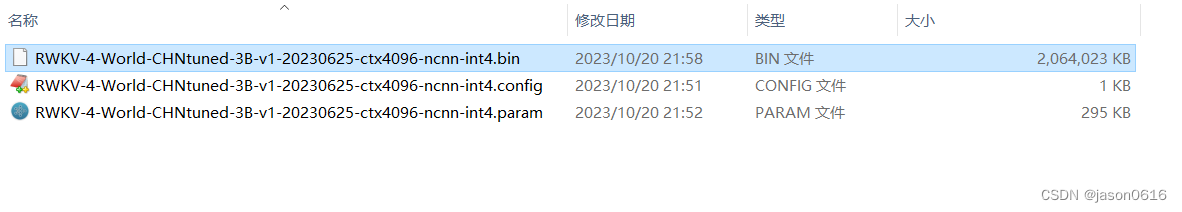

The top one was converted by the expert, probably in fp16.

The bottom one is my own conversion, in fp32.

Convert Model

1. Generate a ChatRWKV weight file with `v2/convert_model.py` (in the ChatRWKV repo) and strategy `cuda fp32` or `cpu fp32`. Note that although we use fp32 here, the real dtype is determined in the following step.

2. Generate a faster-rwkv weight file with `tools/convert_weight.py`.

3. Export the ncnn model with `./export_ncnn <input_faster_rwkv_model_path> <output_path_prefix>`. If you are a Linux user you can download a pre-built `export_ncnn` from Releases, or you can build it yourself.
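The three steps above can be chained in a small Python driver. This is a sketch under assumptions. The flag names for `convert_model.py` (`--in`, `--out`, `--strategy`) and the positional arguments for `tools/convert_weight.py` are assumed, not taken from this post, so check each script's `--help` before running. All file names (`chatrwkv_fp32.pth`, `model.fr`, `rwkv_ncnn`) are hypothetical placeholders.

```python
import shlex
import subprocess
from pathlib import Path


def build_pipeline(chatrwkv_dir: str, faster_rwkv_dir: str,
                   raw_model: str, work_dir: str,
                   strategy: str = "cuda fp32") -> list[list[str]]:
    """Return the argv for each conversion step, in order.

    ASSUMPTION: flag names and argument order are illustrative only;
    verify them against each script's --help.
    """
    work = Path(work_dir)
    chatrwkv_out = str(work / "chatrwkv_fp32.pth")  # hypothetical file name
    fr_out = str(work / "model.fr")                  # hypothetical file name
    ncnn_prefix = str(work / "rwkv_ncnn")            # hypothetical prefix
    return [
        # Step 1: ChatRWKV weight file (fp32 here; real dtype is chosen later).
        ["python3", str(Path(chatrwkv_dir) / "v2" / "convert_model.py"),
         "--in", raw_model, "--out", chatrwkv_out, "--strategy", strategy],
        # Step 2: faster-rwkv weight file, read from step 1's output.
        ["python3", str(Path(faster_rwkv_dir) / "tools" / "convert_weight.py"),
         chatrwkv_out, fr_out],
        # Step 3: export the ncnn model from step 2's output.
        ["./export_ncnn", fr_out, ncnn_prefix],
    ]


def run_pipeline(steps: list[list[str]], dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for argv in steps:
        print(shlex.join(argv))
        if not dry_run:
            subprocess.run(argv, check=True)  # stop at the first failing step
```

Calling `run_pipeline(build_pipeline("ChatRWKV", "faster-rwkv", "rwkv_model.pth", "out"))` only prints the commands. Pass `dry_run=False` once the flags have been checked. Keeping each step's output path explicit makes it obvious which file feeds the next step.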

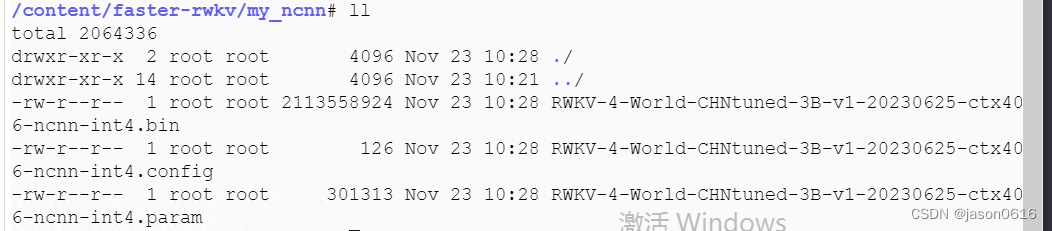

To quantize, you only need to change the last step: append one more argument (e.g. `int4`, `int8`, etc.) after `./export_ncnn <input_faster_rwkv_model_path> <output_path_prefix>`.
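Below is a minimal sketch of building that last command with the optional quantization argument appended. It assumes, as described above, that the argument goes after the output prefix. The file names are hypothetical. The post names only `int4` and `int8`, so the sketch passes the value through without validating it.

```python
import shlex
from typing import Optional


def export_ncnn_argv(model_path: str, output_prefix: str,
                     quant: Optional[str] = None) -> list[str]:
    """Build the export_ncnn command; quant (e.g. "int4", "int8") is optional."""
    argv = ["./export_ncnn", model_path, output_prefix]
    if quant is not None:
        argv.append(quant)  # extra trailing argument selects the quantized dtype
    return argv


print(shlex.join(export_ncnn_argv("model.fr", "rwkv_int4", "int4")))
# → ./export_ncnn model.fr rwkv_int4 int4
```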

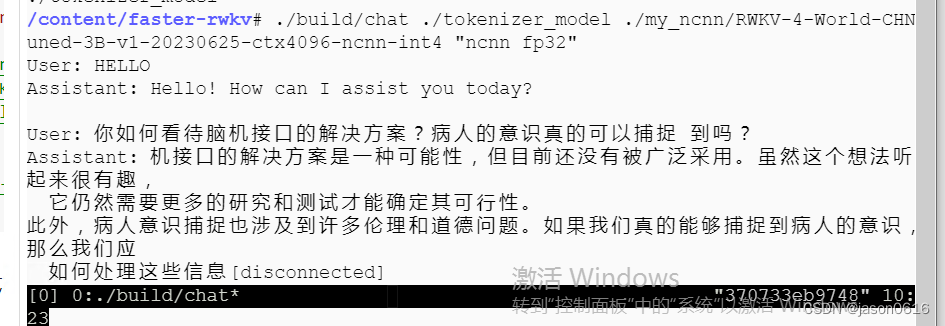

Tested it myself on Ubuntu 22, and it works. Result:

I'm not sure whether it's because I used fp32, but it doesn't feel fast...
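Whether fp32 is really the cause isn't confirmed here. A back-of-envelope estimate shows why it is a plausible suspect. Token-by-token generation reads every weight for each token, so it is often limited by memory bandwidth, and weight size is a rough proxy for that traffic. The sketch below is an approximation. It ignores quantization scales/zero-points and any non-weight overhead, and the parameter count is a hypothetical example, not this model's.

```python
# Approximate storage per weight for each dtype; int4 packs two weights per byte.
# ASSUMPTION: ignores quantization scales/zero-points and non-weight overhead.
BYTES_PER_WEIGHT = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_size_gib(num_params: int, dtype: str) -> float:
    """Approximate weight storage in GiB for a model with num_params weights."""
    return num_params * BYTES_PER_WEIGHT[dtype] / 2**30


if __name__ == "__main__":
    n = 1_500_000_000  # hypothetical 1.5B-parameter model, for illustration only
    for dtype in ("fp32", "fp16", "int8", "int4"):
        print(f"{dtype}: {weight_size_gib(n, dtype):.2f} GiB")
```

By this estimate, an fp32 export moves roughly 2x the bytes of fp16 and 8x those of int4 per generated token. That is consistent with, though not proof of, fp32 feeling slow.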
