SMON(system monitor process)系统监控后台进程,有时候也被叫做system cleanup process,这么叫的原因是它负责完成很多清理(cleanup)任务。但凡学习过Oracle基础知识的技术人员都会或多或少对该background process的功能有所了解。

曾几何时对SMON功能的了解程度可以作为评判一位DBA理论知识的重要因素,至今仍有很多公司在DBA面试中会问到SMON有哪些功能这样的问题。首先这是一道开放式的题目,并不会奢求面试者能够打全(答全几乎是不可能的,即便是在你阅读本篇文章之后),答出多少可以作为知识广度的评判依据(如果面试人特意为这题准备过,那么也很好,说明他已经能系统地考虑问题了),接着还可以就具体的某一个功能说开去,来了解面试者的知识深度,当然这扯远了。

我们所熟知的SMON是个兢兢业业的家伙,它负责完成一些列系统级别的任务。与PMON(Process Monitor)后台进程不同的是,SMON负责完成更多和整体系统相关的工作,这导致它会去做一些不知名的”累活”,当系统频繁产生这些”垃圾任务”,则SMON可能忙不过来。因此在10g中SMON变得有一点懒惰了,如果它在短期内接收到过多的工作通知(SMON: system monitor process posted),那么它可能选择消极怠工以便让自己不要过于繁忙(SMON: Posted too frequently, trans recovery disabled),之后会详细介绍。

SMON的主要作用包括:

1.清理临时段(SMON cleanup temporary segments)

触发场景

很多人错误地理解了这里所说的临时段temporary segments,认为temporary segments是指temporary tablespace临时表空间上的排序临时段(sort segment)。事实上这里的临时段主要指的是永久表空间(permanent tablespace)上的临时段,当然临时表空间上的temporary segments也是由SMON来清理(cleanup)的,但这种清理仅发生在数据库实例启动时(instance startup)。

永久表空间上同样存在临时段,譬如当我们在某个永久表空间上使用create table/index等DDL命令创建某个表/索引时,服务进程一开始会在指定的永久表空间上分配足够多的区间(Extents),这些区间在命令结束之前都是临时的(Temporary Extents),直到表/索引完全建成才将该temporary segment转换为permanent segment。另外当使用drop命令删除某个段时,也会先将该段率先转换为temporary segment,之后再来清理该temporary segment(DROP object converts the segment to temporary and then cleans up the temporary segment)。 常规情况下清理工作遵循谁创建temporary segment,谁负责清理的原则。换句话说,因服务进程rebuild index所产生的temporary segment在rebuild完成后应由服务进程自行负责清理。一旦服务进程在成功清理temporary segment之前就意外终止了,亦或者服务进程在工作过程中遇到了某些ORA-错误导致语句失败,那么SMON都会被要求(posted)负责完成temporary segment的清理工作。

对于永久表空间上的temporary segment,SMON会三分钟清理一次(前提是接到post),如果SMON过于繁忙那么可能temporary segment长期不被清理。temporary segment长期不被清理可能造成一个典型的问题是:在rebuild index online失败后,后续执行的rebuild index命令要求之前产生的temporary segment已被cleanup,如果cleanup没有完成那么就需要一直等下去。在10gR2中我们可以使用dbms_repair.online_index_clean来手动清理online index rebuild的遗留问题:

The dbms_repair.online_index_clean function has been created to cleanup online index rebuilds. Use the dbms_repair.online_index_clean function to resolve the issue. Please note if you are unable to run the dbms_repair.online_index_clean function it is due to the fact that you have not installed the patch for Bug 3805539 or are not running on a release that includes this fix. The fix for this bug is a new function in the dbms_repair package called dbms_repair.online_index_clean, which has been created to cleanup online index [[sub]partition] [re]builds. New functionality is not allowed in patchsets; therefore, this is not available in a patchset but is available in 10gR2. Check your patch list to verify the database is patched for Bug 3805539 using the following command and patch for the bug if it is not listed: opatch lsinventory -detail Cleanup after a failed online index [re]build can be slow to occurpreventing subsequent such operations until the cleanup has occured.

接着我们通过实践来看一下smon是如何清理永久表空间上的temporary segment的:

设置10500事件以跟踪smon进程,这个诊断事件后面会介绍 SQL> alter system set events '10500 trace name context forever,level 10'; System altered. 在第一个会话中执行create table命令,这将产生一定量的Temorary Extents SQL> create table smon as select * from ymon; 在另一个会话中执行对DBA_EXTENTS视图的查询,可以发现产生了多少临时区间 SQL> SELECT COUNT(*) FROM DBA_EXTENTS WHERE SEGMENT_TYPE='TEMPORARY'; COUNT(*) ---------- 117 终止以上create table的session,等待一段时间后观察smon后台进程的trc可以发现以下信息: *** 2011-06-07 21:18:39.817 SMON: system monitor process posted msgflag:0x0200 (-/-/-/-/TMPSDROP/-/-) *** 2011-06-07 21:18:39.818 SMON: Posted, but not for trans recovery, so skip it. *** 2011-06-07 21:18:39.818 SMON: clean up temp segments in slave SQL> SELECT COUNT(*) FROM DBA_EXTENTS WHERE SEGMENT_TYPE='TEMPORARY'; COUNT(*) ---------- 0 可以看到smon通过slave进程完成了对temporary segment的清理

与永久表空间上的临时段不同,出于性能的考虑临时表空间上的Extents并不在操作(operations)完成后立即被释放和归还。相反,这些Temporary Extents会被标记为可用,以便用于下一次的排序操作。SMON仍会清理这些Temporary segments,但这种清理仅发生在实例启动时(instance startup):

For performance issues, extents in TEMPORARY tablespaces are not released ordeallocated once the operation is complete.Instead, the extent is simply marked as available for the next sort operation. SMON cleans up the segments at startup. A sort segment is created by the first statement that used a TEMPORARY tablespacefor sorting, after startup. A sort segment created in a TEMPOARY tablespace is only released at shutdown. The large number of EXTENTS is caused when the STORAGE clause has been incorrectly calculated.

现象

可以通过以下查询了解数据库中Temporary Extent的总数,在一定时间内比较其总数,若有所减少那么说明SMON正在清理Temporary segment

SELECT COUNT(*) FROM DBA_EXTENTS WHERE SEGMENT_TYPE='TEMPORARY';

也可以通过v$sysstat视图中的”SMON posted for dropping temp segment”事件统计信息来了解SMON收到清理要求的情况:

SQL> select name,value from v$sysstat where name like '%SMON%'; NAME VALUE ---------------------------------------------------------------- ---------- total number of times SMON posted 8 SMON posted for undo segment recovery 0 SMON posted for txn recovery for other instances 0 SMON posted for instance recovery 0 SMON posted for undo segment shrink 0 SMON posted for dropping temp segment 1

另外在清理过程中SMON会长期持有Space Transacton(ST)队列锁,其他会话可能因为得不到ST锁而等待超时出现ORA-01575错误:

01575, 00000, "timeout waiting for space management resource" // *Cause: failed to acquire necessary resource to do space management. // *Action: Retry the operation.

如何禁止SMON清理临时段

可以通过设置诊断事件event=’10061 trace name context forever, level 10′禁用SMON清理临时段(disable SMON from cleaning temp segments)。

alter system set events '10061 trace name context forever, level 10';

了解你所不知道的SMON功能(二):合并空闲区间

SMON的作用还包括合并空闲区间(coalesces free extent)

触发场景

早期Oracle采用DMT字典管理表空间,不同于今时今日的LMT本地管理方式,DMT下通过对FET$和UET$2张字典基表的递归操作来管理区间。SMON每5分钟(SMON wakes itself every 5 minutes and checks for tablespaces with default pctincrease != 0)会自发地去检查哪些默认存储参数pctincrease不等于0的字典管理表空间,注意这种清理工作是针对DMT的,而LMT则无需合并。SMON对这些DMT表空间上的连续相邻的空闲Extents实施coalesce操作以合并成一个更大的空闲Extent,这同时也意味着SMON需要维护FET$字典基表。

现象

以下查询可以检查数据库中空闲Extents的总数,如果这个总数在持续减少那么说明SMON正在coalesce free space:

SELECT COUNT(*) FROM DBA_FREE_SPACE;

在合并区间时SMON需要排他地(exclusive)持有ST(Space Transaction)队列锁, 其他会话可能因为得不到ST锁而等待超时出现ORA-01575错误。同时SMON可能在繁琐的coalesce操作中消耗100%的CPU。

如何禁止SMON合并空闲区间

可以通过设置诊断事件event=’10269 trace name context forever, level 10′来禁用SMON合并空闲区间(Don’t do coalesces of free space in SMON)

10269, 00000, "Don't do coalesces of free space in SMON" // *Cause: setting this event prevents SMON from doing free space coalesces alter system set events '10269 trace name context forever, level 10';

了解你所不知道的SMON功能(三):清理obj$基表

SMON的作用还包括清理obj$数据字典基表(cleanup obj$)

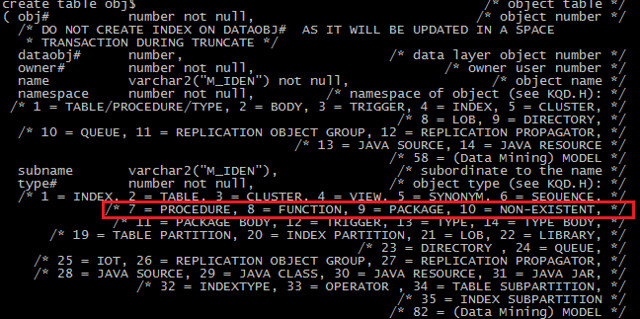

OBJ$字典基表是Oracle Bootstarp启动自举的重要对象之一:

SQL> set linesize 80 ;

SQL> select sql_text from bootstrap$ where sql_text like 'CREATE TABLE OBJ$%';

SQL_TEXT

--------------------------------------------------------------------------------

CREATE TABLE OBJ$("OBJ#" NUMBER NOT NULL,"DATAOBJ#" NUMBER,"OWNER#" NUMBER NOT N

ULL,"NAME" VARCHAR2(30) NOT NULL,"NAMESPACE" NUMBER NOT NULL,"SUBNAME" VARCHAR2(

30),"TYPE#" NUMBER NOT NULL,"CTIME" DATE NOT NULL,"MTIME" DATE NOT NULL,"STIME"

DATE NOT NULL,"STATUS" NUMBER NOT NULL,"REMOTEOWNER" VARCHAR2(30),"LINKNAME" VAR

CHAR2(128),"FLAGS" NUMBER,"OID$" RAW(16),"SPARE1" NUMBER,"SPARE2" NUMBER,"SPARE3

" NUMBER,"SPARE4" VARCHAR2(1000),"SPARE5" VARCHAR2(1000),"SPARE6" DATE) PCTFREE

10 PCTUSED 40 INITRANS 1 MAXTRANS 255 STORAGE ( INITIAL 16K NEXT 1024K MINEXTEN

TS 1 MAXEXTENTS 2147483645 PCTINCREASE 0 OBJNO 18 EXTENTS (FILE 1 BLOCK 121))

触发场景

OBJ$基表是一张低级数据字典表,该表几乎对库中的每个对象(表、索引、包、视图等)都包含有一行记录。很多情况下,这些条目所代表的对象是不存在的对象(non-existent),引起这种现象的一种可能的原因是对象本身已经被从数据库中删除了,但是对象条目仍被保留下来以满足消极依赖机制(negative dependency)。因为这些条目的存在会导致OBJ$表不断膨胀,这时就需要由SMON进程来删除这些不再需要的行。SMON会在实例启动(after startup of DB is started cleanup function again)时以及启动后的每12个小时执行一次清理任务(the cleanup is scheduled to run after startup and then every 12 hours)。

我们可以通过以下演示来了解SMON清理obj$的过程:

SQL> BEGIN

2 FOR i IN 1 .. 5000 LOOP

3 execute immediate ('create synonym gustav' || i || ' for

4 perfstat.sometable');

5 execute immediate ('drop synonym gustav' || i );

6 END LOOP;

7 END;

8 /

PL/SQL procedure successfully completed.

SQL> startup force;

ORACLE instance started.

Total System Global Area 1065353216 bytes

Fixed Size 2089336 bytes

Variable Size 486542984 bytes

Database Buffers 570425344 bytes

Redo Buffers 6295552 bytes

Database mounted.

Database opened.

SQL> select count(*) from user$ u, obj$ o

2 where u.user# (+)=o.owner# and o.type#=10 and not exists

3 (select p_obj# from dependency$ where p_obj# = o.obj#);

COUNT(*)

----------

5000

SQL> /

COUNT(*)

----------

5000

SQL> /

COUNT(*)

----------

4951

SQL> oradebug setospid 18457;

Oracle pid: 8, Unix process pid: 18457, image: oracle@rh2.oracle.com (SMON)

SQL> oradebug event 10046 trace name context forever ,level 1;

Statement processed.

SQL> oradebug tracefile_name;

/s01/admin/G10R2/bdump/g10r2_smon_18457.trc

select o.owner#,

o.obj#,

decode(o.linkname,

null,

decode(u.name, null, 'SYS', u.name),

o.remoteowner),

o.name,

o.linkname,

o.namespace,

o.subname

from user$ u, obj$ o

where u.use r#(+) = o.owner#

and o.type# = :1

and not exists

(select p_obj# from dependency$ where p_obj# = o.obj#)

order by o.obj#

for update

select null

from obj$

where obj# = :1

and type# = :2

and obj# not in

(select p_obj# from dependency$ where p_obj# = obj$.obj#)

delete from obj$ where obj# = :1

/* 删除过程其实较为复杂,可能要删除多个字典基表上的记录 */

现象

我们可以通过以下查询来了解obj$基表中NON-EXISTENT对象的条目总数(type#=10),若这个总数在不断减少说明smon正在执行清理工作

select trunc(mtime), substr(name, 1, 3) name, count(*)

from obj$

where type# = 10

and not exists (select * from dependency$ where obj# = p_obj#)

group by trunc(mtime), substr(name, 1, 3);

select count(*)

from user$ u, obj$ o

where u.user#(+) = o.owner#

and o.type# = 10

and not exists

(select p_obj# from dependency$ where p_obj# = o.obj#);

如何禁止SMON清理obj$基表

我们可以通过设置诊断事件event=’10052 trace name context forever’来禁止SMON清理obj$基表,当我们需要避免SMON因cleanup obj$的相关代码而意外终止或spin从而开展进一步的诊断时可以设置该诊断事件。在Oracle并行服务器或RAC环境中,也可以设置该事件来保证只有特定的某个节点来执行清理工作。

10052, 00000, "don't clean up obj$"

alter system set events '10052 trace name context forever, level 65535';

Problem Description: We are receiving the below warning during db startup:

WARNING: kqlclo() has detected the following :

Non-existent object 37336 NOT deleted because an object

of the same name exists already.

Object name: PUBLIC.USER$

This is caused by the SMON trying to cleanup the SYS.OJB$.

SMON cleans all dropped objects which have a SYS.OBJ$.TYPE#=10.

This can happen very often when you create an object that have the same name as a public synonym.

When SMON is trying to remove non-existent objects and fails because there are duplicates,

multiple nonexistent objects with same name.

This query will returned many objects with same name under SYS schema:

select o.name,u.user# from user$ u, obj$ o where u.user# (+)=o.owner# and o.type#=10

and not exists (select p_obj# from dependency$ where p_obj# = o.obj#);

To cleanup this message:

Take a full backup of the database - this is crucial. If anything goes wrong during this procedure,

your only option would be to restore from backup, so make sure you have a good backup before proceeding.

We suggest a COLD backup. If you plan to use a HOT backup, you will have to restore point in time if any problem happens

Normally DML against dictionary objects is unsupported,

but in this case we know exactly what the type of corruption,

also you are instructing to do this under guidance from Support.

Data dictionary patching must be done by an experienced DBA.

This solution is unsupported.

It means that if there were problems after applying this solution, a database backup must be restored.

1. Set event 10052 at parameter file to disable cleanup of OBJ$ by SMON

EVENT="10052 trace name context forever, level 65535"

2. Startup database in restricted mode

3. Delete from OBJ$, COMMIT

SQL> delete from obj$ where (name,owner#) in ( select o.name,u.user# from user$ u, obj$ o

where u.user# (+)=o.owner# and o.type#=10 and not exists (select p_obj# from

dependency$ where p_obj# = o.obj#) );

SQL> commit;

SQL> Shutdown abort.

4. remove event 10052 from init.ora

5. Restart the database and monitor for the message in the ALERT LOG file

了解你所不知道的SMON功能(四):维护col_usage$字典基表

SMON的作用还包括维护col_usage$列监控统计信息基表。

最早在9i中引入了col_usage$字典基表,其目的在于监控column在SQL语句作为predicate的情况,col_usage$的出现完善了CBO中柱状图自动收集的机制。

create table col_usage$ ( obj# number, /* object number */ intcol# number, /* internal column number */ equality_preds number, /* equality predicates */ equijoin_preds number, /* equijoin predicates */ nonequijoin_preds number, /* nonequijoin predicates */ range_preds number, /* range predicates */ like_preds number, /* (not) like predicates */ null_preds number, /* (not) null predicates */ timestamp date /* timestamp of last time this row was changed */ ) storage (initial 200K next 100k maxextents unlimited pctincrease 0) / create unique index i_col_usage$ on col_usage$(obj#,intcol#) storage (maxextents unlimited) /

在10g中我们默认使用’FOR ALL COLUMNS SIZE AUTO’的柱状图收集模式,而在9i中默认是’SIZE 1′即默认不收集柱状图,这导致许多9i中正常运行的应用程序在10g中CBO执行计划异常,详见<dbms_stats收集模式在9i和10g上的区别>;。’SIZE AUTO’意为由Oracle自动决定是否收集柱状图及柱状图的桶数,Oracle自行判断的依据就来源于col_usage$字典基表,若表上的某一列曾在硬解析(hard parse)过的SQL语句中充当过predicate(通俗的说就是where后的condition)的话,我们认为此列上有收集柱状图的必要,那么col_usage$上就会被加入该列曾充当predicate的记录。当DBMS_STATS.GATHER_TABLE_STATS存储过程以’SIZE AUTO’模式执行时,收集进程会检查col_usage$基表以判断哪些列之前曾充当过predicate,若充当过则说明该列有收集柱状图的价值。

SMON会每15分钟将shared pool中的predicate columns的数据刷新到col_usage$基表中(until periodically about every 15 minutes SMON flush the data into the data dictionary),另外当instance shutdown时SMON会扫描col_usage$并找出已被drop表的相关predicate columns记录,并删除这部分”orphaned”孤儿记录。

我们来具体了解col_usage$的填充过程:

SQL> select * from v$version;

BANNER

----------------------------------------------------------------

Oracle Database 10g Enterprise Edition Release 10.2.0.4.0 - 64bi

PL/SQL Release 10.2.0.4.0 - Production

CORE 10.2.0.4.0 Production

TNS for Linux: Version 10.2.0.4.0 - Production

NLSRTL Version 10.2.0.4.0 - Production

SQL> select * from global_name;

GLOBAL_NAME

--------------------------------------------------------------------------------

www.oracledatabase12g.com

SQL> create table maclean (t1 int);

Table created.

SQL> select object_id from dba_objects where object_name='MACLEAN';

OBJECT_ID

----------

1323013

SQL> select * from maclean where t1=1;

no rows selected

SQL> set linesize 200 pagesize 2000;

注意col_usage$的数据同*_tab_modifications类似,

从查询到数据刷新到col_usage$存在一段时间的延迟,

所以我们立即查询col_usage$将得不到任何记录,

可以手动执行DBMS_STATS.FLUSH_DATABASE_MONITORING_INFO将缓存中的信息刷新到字典上

SQL> select * from col_usage$ where obj#=1323013;

no rows selected

SQL> oradebug setmypid;

Statement processed.

针对FLUSH_DATABASE_MONITORING_INFO填充操作做10046 level 12 trace

SQL> oradebug event 10046 trace name context forever,level 12;

Statement processed.

SQL> exec DBMS_STATS.FLUSH_DATABASE_MONITORING_INFO;

PL/SQL procedure successfully completed.

SQL> select * from col_usage$ where obj#=1323013;

OBJ# INTCOL# EQUALITY_PREDS EQUIJOIN_PREDS NONEQUIJOIN_PREDS RANGE_PREDS LIKE_PREDS NULL_PREDS TIMESTAMP

---------- ---------- -------------- -------------- ----------------- ----------- ---------- ---------- ---------

1323013 1 1 0 0 0 0 0 19-AUG-11

=============10046 trace content====================

lock table sys.col_usage$ in exclusive mode nowait

在测试中可以发现10.2.0.4上DBMS_STATS.FLUSH_DATABASE_MONITORING_INFO存储过程会优先使用

lock in exclusive mode nowait来锁住col_usage$基表,

如果lock失败则会反复尝试1100次,

若仍不能锁住col_usage$表则放弃更新col_usage$上的数据,避免造成锁等待和死锁。

Cksxm.c

Monitor Modification Hash Table Base

modification hash table entry

modification hash table chunk

monitoring column usage element

ksxmlock_1

lock table sys.col_usage$ in exclusive mode

lock table sys.col_usage$ in exclusive mode nowait

update sys.col_usage$

set equality_preds = equality_preds +

decode(bitand(:flag, 1), 0, 0, 1),

equijoin_preds = equijoin_preds +

decode(bitand(:flag, 2), 0, 0, 1),

nonequijoin_preds = nonequijoin_preds +

decode(bitand(:flag, 4), 0, 0, 1),

range_preds = range_preds + decode(bitand(:flag, 8), 0, 0, 1),

like_preds = like_preds + decode(bitand(:flag, 16), 0, 0, 1),

null_preds = null_preds + decode(bitand(:flag, 32), 0, 0, 1),

timestamp = :time

where obj# = :ob jn

and intcol# = :coln

insert into sys.col_usage$

(obj#,

intcol#,

equality_preds,

equijoin_preds,

nonequijoin_preds,

range_preds,

like_preds,

null_preds,

timestamp)

values

(:objn,

:coln,

decode(bitand(:flag, 1), 0, 0, 1),

decode(bitand(:flag, 2), 0, 0, 1),

decode(bitand(:flag, 4), 0, 0, 1),

decode(bitand(:flag, 8), 0, 0, 1),

decode(bitand(:flag, 16), 0, 0, 1),

decode(bitand(:flag, 32), 0, 0, 1),

:time)

使用dbms_stats的’SIZE AUTO’模式收集表上的统计信息会首先参考col_usage$中的predicate columns记录:

SQL> begin

2

3 dbms_stats.gather_table_stats(ownname => 'SYS',

4 tabname => 'MACLEAN',

5 method_opt => 'FOR ALL COLUMNS SIZE AUTO');

6 end;

7 /

PL/SQL procedure successfully completed.

============10046 level 12 trace content======================

SELECT /*+ ordered use_nl(o c cu h) index(u i_user1) index(o i_obj2)

index(ci_obj#) index(cu i_col_usage$)

index(h i_hh_obj#_intcol#) */

C.NAME COL_NAME,

C.TYPE# COL_TYPE,

C.CHARSETFORM COL_CSF,

C.DEFAULT$ COL_DEF,

C.NULL$ COL_NULL,

C.PROPERTY COL_PROP,

C.COL # COL_UNUM,

C.INTCOL# COL_INUM,

C.OBJ# COL_OBJ,

C.SCALE COL_SCALE,

H.BUCKET_CNT H_BCNT,

(T.ROWCNT - H.NULL_CNT) / GREATEST(H.DISTCNT, 1) H_PFREQ,

C.LENGTH COL_LEN,

CU.TIMES TAMP CU_TIME,

CU.EQUALITY_PREDS CU_EP,

CU.EQUIJOIN_PREDS CU_EJP,

CU.RANGE_PREDS CU_RP,

CU.LIKE_PREDS CU_LP,

CU.NONEQUIJOIN_PREDS CU_NEJP,

CU.NULL_PREDS NP

FROM SYS.USE R$ U,

SYS.OBJ$ O,

SYS.TAB$ T,

SYS.COL$ C,

SYS.COL_USAGE$ CU,

SYS.HIST_HEAD$ H

WHERE :B3 = '0'

AND U.NAME = :B2

AND O.OWNER# = U.USER#

AND O.TYPE# = 2

AND O.NAME = :B1

AND O.OBJ# = T.OBJ#

AND O.OBJ# = C.OBJ#

AND C.OBJ# = CU.OBJ#(+)

AND C.INTCOL# = CU.INTCOL#(+)

AND C.OBJ# = H.OBJ#(+)

AND C.INTCOL# = H.INTCOL#(+)

UNION ALL

SELECT /*+

ordered use_nl(c) */

C.KQFCONAM COL_NAME,

C.KQFCODTY COL_TYPE,

DECODE(C.KQFCODTY, 1, 1, 0) COL_CSF,

NULL COL_DEF,

0 COL_NULL,

0 COL_PROP,

C.KQFCOCNO COL_UNUM,

C.KQFCOC NO COL_INUM,

O.KQFTAOBJ COL_OBJ,

DECODE(C.KQFCODTY, 2, -127, 0) COL_SCALE,

H.BUCKET_CNT H_BCNT,

(ST.ROWCNT - NULL_CNT) / GREATEST(H.DISTCNT, 1) H_PFREQ,

DECODE(C.KQFCODTY, 2, 22, C.KQFCOSIZ) COL_LEN,

CU.TIMESTAMP CU_TIME,

CU.EQUALITY_PREDS CU_EP,

CU.EQUIJOIN_PREDS CU_EJP,

CU.RANGE_PREDS CU_RP,

CU.LIKE_PREDS CU_LP,

CU.NONEQUIJOIN_PREDS CU _NEJP,

CU.NULL_PREDS NP

FROM SYS.X$KQFTA O,

SYS.TAB_STATS$ ST,

SYS.X$KQFCO C,

SYS.COL_USAGE$ CU,

SYS.HIST_HEAD$ H

WHERE :B3 != '0'

AND :B2 = 'SYS'

AND O.KQFTANAM = :B1

AND O.KQFTAOBJ = ST.OBJ#(+)

AND O.KQFTAOBJ = C.KQFCOTOB

AND C.KQFCOTOB = CU.OBJ#(+)

AND C.KQFCOCNO = CU.INTCOL#(+)

AND C.KQFCOTOB = H.OBJ#(+)

AND C.KQFCOCNO = H.INTCO L#(+)

现象

根据Metalink Note<Database Shutdown Immediate Takes Forever, Can Only Do Shutdown Abort [ID 332177.1]>:

Database Shutdown Immediate Takes Forever, Can Only Do Shutdown Abort [ID 332177.1] Applies to: Oracle Server - Enterprise Edition - Version: 9.2.0.4.0 This problem can occur on any platform. Symptoms The database is not shutting down for a considerable time when you issue the command : shutdown immediate To shut it down in a reasonable time you have to issue the command shutdown abort To collect some diagnostics before issuing the shutdown immediate command set a trace event as follows: Connect as SYS (/ as sysdba) SQL> alter session set events '10046 trace name context forever,level 12'; SQL> shutdown immediate; In the resultant trace file (within the udump directory) you see something similar to the following :- PARSING IN CURSOR #n delete from sys.col_usage$ c where not exists (select 1 from sys.obj$ o where o.obj# = c.obj# ) ...followed by loads of..... WAIT #2: nam='db file sequential read' ela= 23424 p1=1 p2=4073 p3=1 .... WAIT #2: nam='db file scattered read' ela= 1558 p1=1 p2=44161 p3=8 etc Then eventually WAIT #2: nam='log file sync' ela= 32535 p1=4111 p2=0 p3=0 ...some other SQL....then back to WAIT #2: nam='db file sequential read' ela= 205 p1=1 p2=107925 p3=1 WAIT #2: nam='db file sequential read' ela= 1212 p1=1 p2=107926 p3=1 WAIT #2: nam='db file sequential read' ela= 212 p1=1 p2=107927 p3=1 WAIT #2: nam='db file scattered read' ela= 1861 p1=1 p2=102625 p3=8 etc.... To verify which objects are involved here you can use a couple of the P1 & P2 values from above :- a) a sequential read SELECT owner,segment_name,segment_type FROM dba_extents WHERE file_id=1 AND 107927 BETWEEN block_id AND block_id + blocks b) a scattered read SELECT owner,segment_name,segment_type FROM dba_extents WHERE file_id=1 AND 102625 BETWEEN block_id AND block_id + blocks The output confirms that the objects are SYS.I_COL_USAGE$ (INDEX) and SYS.COL_USAGE$ (TABLE) Finally, issue select count(*) from sys.col_usage$; Cause If the number of entries in sys.col_usage$ is large then you are very probably hitting the issue raised in Bug: 3540022 9.2.0.4.0 RDBMS Base Bug 3221945 Abstract: CLEAN-UP OF ENTRIES IN COL_USAGE$ Base Bug 3221945 9.2.0.3 RDBMS Abstract: ORA-1631 ON COL_USAGE$ Closed as "Not a Bug" However, when a table is dropped, the column usage statistics are not dropped. They are left as they are. When the database is shutdown (in normal mode), then these "orphaned" column usage entries are deleted. The code which does this gets called only during normal shutdown. Unless and until the database is shutdown, the col_usage$ table will continue to grow. Solution To implement the workaround, please execute the following steps: 1. Periodically (eg once a day) run exec DBMS_STATS.FLUSH_DATABASE_MONITORING_INFO; DBMS_STATS.FLUSH_DATABASE_MONITORING_INFO will clean out redundant col_usage$ entries, and when you come to shutdown the database you should not have a huge number of entries left to clean up.

该文档指出了在shutdown instance时SMON会着手清理col_usage$中已被drop表的相关predicate columns的”orphaned”记录,如果在本次实例的生命周期中曾生成大量最后被drop的中间表,那么col_usage$中已经堆积了众多的”orphaned”记录,SMON为了完成cleanup工作需要花费大量时间导致shutdown变慢。这个文档还指出定期执行DBMS_STATS.FLUSH_DATABASE_MONITORING_INFO也可以清理col_usage$中的冗余记录。

我们来观察一下SMON的清理工作:

begin

for i in 1 .. 5000 loop

execute immediate 'create table maclean1' || i ||' tablespace fragment as select 1 t1 from dual';

execute immediate 'select * from maclean1' || i || ' where t1=1';

end loop;

DBMS_STATS.FLUSH_DATABASE_MONITORING_INFO;

for i in 1 .. 5000 loop

execute immediate 'drop table maclean1' || i;

end loop;

end;

/

SQL> purge dba_recyclebin;

DBA Recyclebin purged.

我们可以通过以下查询了解col_usage$上的orphaned记录总数,这也将是在instance shutdown时

SMON所需要清理的数目

select count(*)

from sys.col_usage$ c

where not exists (select /*+ unnest */

1

from sys.obj$ o

where o.obj# = c.obj#);

COUNT(*)

----------

10224

针对SMON做10046 level 12 trace

SQL> oradebug setospid 30225;

Oracle pid: 8, Unix process pid: 30225, image: oracle@rh2.oracle.com (SMON)

SQL> oradebug event 10046 trace name context forever,level 12;

Statement processed.

SQL> shutdown immediate;

=================10046 trace content==================

lock table sys.col_usage$ in exclusive mode nowait

delete from sys.col_usage$ where obj#= :1 and intcol#= :2

delete from sys.col_usage$ c

where not exists (select /*+ unnest */

1

from sys.obj$ o

where o.obj# = c.obj#)

如何禁止SMON维护col_usage$字典基表

1.设置隐藏参数_column_tracking_level(column usage tracking),该参数默认为1即启用column使用情况跟踪。设置该参数为0,将禁用column tracking,该参数可以在session和system级别动态修改:

SQL> col name for a25

SQL> col DESCRIB for a25

SQL> SELECT x.ksppinm NAME, y.ksppstvl VALUE, x.ksppdesc describ

2 FROM SYS.x$ksppi x, SYS.x$ksppcv y

3 WHERE x.inst_id = USERENV ('Instance')

4 AND y.inst_id = USERENV ('Instance')

5 AND x.indx = y.indx

6 AND x.ksppinm LIKE '%_column_tracking_level%';

NAME VALUE DESCRIB

------------------------- ---------- -------------------------

_column_tracking_level 1 column usage tracking

SQL> alter session set "_column_tracking_level"=0 ;

Session altered.

SQL> alter system set "_column_tracking_level"=0 scope=both;

System altered.

2.关闭DML monitoring,可以通过设置隐藏参数_dml_monitoring_enabled(enable modification monitoring)为false实现,disable dml monitoring对CBO的影响较大,所以我们一般推荐上一种方式:

SQL> SELECT monitoring, count(*) from DBA_TABLES group by monitoring;

MON COUNT(*)

--- ----------

NO 79

YES 2206

SQL> alter system set "_dml_monitoring_enabled"=false;

System altered.

SQL> SELECT monitoring, count(*) from DBA_TABLES group by monitoring;

MON COUNT(*)

--- ----------

NO 2285

实际上dba_tables的monitoring列来源于内部参数_dml_monitoring_enabled

SQL> set long 99999

SQL> select text from dba_views where view_name='DBA_TABLES';

TEXT

--------------------------------------------------------------------------------

select u.name, o.name, decode(bitand(t.property,2151678048), 0, ts.name, null),

decode(bitand(t.property, 1024), 0, null, co.name),

decode((bitand(t.property, 512)+bitand(t.flags, 536870912)),

0, null, co.name),

decode(bitand(t.trigflag, 1073741824), 1073741824, 'UNUSABLE', 'VALID'),

decode(bitand(t.property, 32+64), 0, mod(t.pctfree$, 100), 64, 0, null),

decode(bitand(ts.flags, 32), 32, to_number(NULL),

decode(bitand(t.property, 32+64), 0, t.pctused$, 64, 0, null)),

decode(bitand(t.property, 32), 0, t.initrans, null),

decode(bitand(t.property, 32), 0, t.maxtrans, null),

s.iniexts * ts.blocksize,

decode(bitand(ts.flags, 3), 1, to_number(NULL),

s.extsize * ts.blocksize),

s.minexts, s.maxexts,

decode(bitand(ts.flags, 3), 1, to_number(NULL),

s.extpct),

decode(bitand(ts.flags, 32), 32, to_number(NULL),

decode(bitand(o.flags, 2), 2, 1, decode(s.lists, 0, 1, s.lists))),

decode(bitand(ts.flags, 32), 32, to_number(NULL),

decode(bitand(o.flags, 2), 2, 1, decode(s.groups, 0, 1, s.groups))),

decode(bitand(t.property, 32+64), 0,

decode(bitand(t.flags, 32), 0, 'YES', 'NO'), null),

decode(bitand(t.flags,1), 0, 'Y', 1, 'N', '?'),

t.rowcnt,

decode(bitand(t.property, 64), 0, t.blkcnt, null),

decode(bitand(t.property, 64), 0, t.empcnt, null),

t.avgspc, t.chncnt, t.avgrln, t.avgspc_flb,

decode(bitand(t.property, 64), 0, t.flbcnt, null),

lpad(decode(t.degree, 32767, 'DEFAULT', nvl(t.degree,1)),10),

lpad(decode(t.instances, 32767, 'DEFAULT', nvl(t.instances,1)),10),

lpad(decode(bitand(t.flags, 8), 8, 'Y', 'N'),5),

decode(bitand(t.flags, 6), 0, 'ENABLED', 'DISABLED'),

t.samplesize, t.analyzetime,

decode(bitand(t.property, 32), 32, 'YES', 'NO'),

decode(bitand(t.property, 64), 64, 'IOT',

decode(bitand(t.property, 512), 512, 'IOT_OVERFLOW',

decode(bitand(t.flags, 536870912), 536870912, 'IOT_MAPPING', null

))),

decode(bitand(o.flags, 2), 0, 'N', 2, 'Y', 'N'),

decode(bitand(o.flags, 16), 0, 'N', 16, 'Y', 'N'),

decode(bitand(t.property, 8192), 8192, 'YES',

decode(bitand(t.property, 1), 0, 'NO', 'YES')),

decode(bitand(o.flags, 2), 2, 'DEFAULT',

decode(s.cachehint, 0, 'DEFAULT', 1, 'KEEP', 2, 'RECYCLE', NULL)),

decode(bitand(t.flags, 131072), 131072, 'ENABLED', 'DISABLED'),

decode(bitand(t.flags, 512), 0, 'NO', 'YES'),

decode(bitand(t.flags, 256), 0, 'NO', 'YES'),

decode(bitand(o.flags, 2), 0, NULL,

decode(bitand(t.property, 8388608), 8388608,

'SYS$SESSION', 'SYS$TRANSACTION')),

decode(bitand(t.flags, 1024), 1024, 'ENABLED', 'DISABLED'),

decode(bitand(o.flags, 2), 2, 'NO',

decode(bitand(t.property, 2147483648), 2147483648, 'NO',

decode(ksppcv.ksppstvl, 'TRUE', 'YES', 'NO'))),

decode(bitand(t.property, 1024), 0, null, cu.name),

decode(bitand(t.flags, 8388608), 8388608, 'ENABLED', 'DISABLED'),

decode(bitand(t.property, 32), 32, null,

decode(bitand(s.spare1, 2048), 2048, 'ENABLED', 'DISABLED')),

decode(bitand(o.flags, 128), 128, 'YES', 'NO')

from sys.user$ u, sys.ts$ ts, sys.seg$ s, sys.obj$ co, sys.tab$ t, sys.obj$ o,

sys.obj$ cx, sys.user$ cu, x$ksppcv ksppcv, x$ksppi ksppi

where o.owner# = u.user#

and o.obj# = t.obj#

and bitand(t.property, 1) = 0

and bitand(o.flags, 128) = 0

and t.bobj# = co.obj# (+)

and t.ts# = ts.ts#

and t.file# = s.file# (+)

and t.block# = s.block# (+)

and t.ts# = s.ts# (+)

and t.dataobj# = cx.obj# (+)

and cx.owner# = cu.user# (+)

and ksppi.indx = ksppcv.indx

and ksppi.ksppinm = '_dml_monitoring_enabled'

本文详细介绍了 Oracle 数据库系统监控进程 SMON 的多种核心功能,包括清理临时段、合并空闲区间、清理 obj$ 基表、维护 col_usage$ 字典基表、恢复死事务及实例恢复等。并通过实例演示了 SMON 在执行各功能时的行为。

本文详细介绍了 Oracle 数据库系统监控进程 SMON 的多种核心功能,包括清理临时段、合并空闲区间、清理 obj$ 基表、维护 col_usage$ 字典基表、恢复死事务及实例恢复等。并通过实例演示了 SMON 在执行各功能时的行为。

1398

1398

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?