http://komku.blogspot.com/2008/11/install-windows-xp-using-usb-flash-disk.html

Someone in this post (Acer Aspire One Specifications and Windows XP Drivers) asked me to write a tutorial on how to install Windows XP using a USB flash disk/flash drive.

If you want to install Windows XP but your notebook (or PC) has no CD-ROM drive, you can install Windows XP from a USB flash disk/flash drive/thumb drive.

Just follow this guide:

Step 1:

Buy a USB flash drive (at least 2 GB).

To follow this tutorial, you also need a computer (desktop or laptop) that has a CD-ROM (or DVD) drive.

So now you have two computers: one with CD-ROM support and one without (e.g. Acer Aspire One, Asus EEE-PC).

Step 2:

Download this software pack: Komku-SP-usb.exe (1.47 MB).

UPDATE 1:

Anonymous said…

Your download at MediaFire keeps timing out. Are any other hosts available?

Mirror Depositfiles

Mirror Rapidshare

Mirror Easy-Share

Mirror Megaupload

This software pack contains 3 applications:

- BootSect.exe (Boot Sector Manipulation Tool)

- PeToUSB (http://GoCoding.Com)

- usb_prep8 (Prepares Windows XP Setup LocalSource for Copy to USB-Drive)

Step 3:

Double-click Komku-SP-usb.exe.

A window will appear; click Install.
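If you want to make sure the installer unpacked everything before moving on, here is a minimal Python sketch that checks for the three folders the next steps use (PeToUSB, bootsect and usb_prep8 under C:\Komku). It assumes Python 3 is installed on the computer with the CD-ROM drive; `missing_tool_folders` is a hypothetical helper, not part of the software pack.

```python
import os

# Folder names taken from steps 4-7 of this guide.
TOOL_FOLDERS = ("PeToUSB", "bootsect", "usb_prep8")


def missing_tool_folders(root=r"C:\Komku"):
    """Return the tool folders that do not exist under `root` (hypothetical helper)."""
    return [name for name in TOOL_FOLDERS
            if not os.path.isdir(os.path.join(root, name))]


if __name__ == "__main__":
    missing = missing_tool_folders()
    if missing:
        print("Missing folders:", ", ".join(missing))
    else:
        print("All tool folders found.")
```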

Step 4:

Insert your USB flash drive.

When I made this tutorial, I was using a 4 GB Transcend USB flash drive.

Locate the folder C:\Komku\PeToUSB\

and double-click PeToUSB.exe.

The PeToUSB window will appear. Set the options as follows:

- Destination Drive: select USB Removable

- Check Enable Disk Format

- Check Quick Format

- Check Enable LBA (FAT 16x)

- Drive Label: XP-KOMKU (or whatever you want)

Then click Start, and click Yes to continue.

"You are about to repartition and format a disk. Disk: .... All existing volumes and data on that disk will be lost. Are You Sure You Want To Continue?"

Click Yes.

Wait a few seconds...

Click OK, then close the PeToUSB window.
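PeToUSB wipes the whole disk you select, so it's worth double-checking which drive letter belongs to your USB flash drive. A minimal Python sketch, assuming Python 3 on Windows: it asks Windows for every drive letter and its type through the Win32 functions GetLogicalDrives and GetDriveTypeW (via ctypes). The helper names are hypothetical, and some USB flash drives may report themselves as fixed disks, so treat the output as a hint rather than proof.

```python
import ctypes
import string
import sys

# Return codes of the Win32 GetDriveTypeW function.
DRIVE_TYPES = {
    0: "unknown",
    1: "no root directory",
    2: "removable",
    3: "fixed",
    4: "network",
    5: "CD/DVD",
    6: "RAM disk",
}


def drive_type_name(code):
    """Map a GetDriveTypeW return code to a readable name."""
    return DRIVE_TYPES.get(code, "unknown")


def list_drives():
    """Return (letter, type name) pairs for every existing drive; Windows only."""
    if sys.platform != "win32":
        return []
    kernel32 = ctypes.windll.kernel32
    bitmask = kernel32.GetLogicalDrives()  # bit 0 = A:, bit 1 = B:, ...
    drives = []
    for index, letter in enumerate(string.ascii_uppercase):
        if bitmask & (1 << index):
            code = kernel32.GetDriveTypeW(f"{letter}:\\")
            drives.append((letter, drive_type_name(code)))
    return drives


if __name__ == "__main__":
    for letter, kind in list_drives():
        print(f"{letter}: {kind}")
```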

Step 5:

Open a Command Prompt:

Click Start > Run > type cmd > click OK

In the Command Prompt window, go to the directory C:\Komku\bootsect\

How do you do this?

First type cd\ and press Enter.

Then type cd komku\bootsect and press Enter.

The prompt should now show the C:\Komku\bootsect directory.

Don't close the Command Prompt window; go on to step 6.

Step 6:

In the Command Prompt window, type bootsect /nt52 H:

H: is the drive letter of my USB flash drive; yours may be different. Double-check it, because bootsect rewrites the boot code of whichever drive you name.

Then press Enter.

The result: "Successfully updated filesystem bootcode. Bootcode was successfully updated on all targeted volumes."

Don't close the Command Prompt window; go on to step 7.
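Because bootsect rewrites the boot code of whatever drive letter you type, a typo here can hit your system drive instead of the flash drive. The Python sketch below is a hedged illustration of a safety check around step 6, assuming Python 3 on Windows and that BootSect.exe sits in C:\Komku\bootsect\. It normalizes the letter, refuses the system drive (read from the SystemDrive environment variable, falling back to C:), and looks for the "successfully updated" text quoted above. The function names are hypothetical; on Vista or Windows 7 the script would need an administrator Command Prompt, just like bootsect itself.

```python
import os
import string
import subprocess

# Folder from step 5; BootSect.exe is the tool listed in the software pack.
BOOTSECT_EXE = r"C:\Komku\bootsect\bootsect.exe"


def normalize_drive(letter):
    """Turn 'h', 'H' or 'H:' into 'H:'; raise ValueError for anything else."""
    letter = letter.strip().rstrip(":").upper()
    if len(letter) != 1 or letter not in string.ascii_uppercase:
        raise ValueError(f"not a drive letter: {letter!r}")
    return letter + ":"


def bootsect_command(usb_letter, system_drive=None):
    """Build the step 6 command, refusing to target the Windows system drive."""
    target = normalize_drive(usb_letter)
    # %SystemDrive% is normally C:; fall back to C: when it is not set.
    system = normalize_drive(system_drive or os.environ.get("SystemDrive", "C:"))
    if target == system:
        raise ValueError(f"{target} is the system drive, not the USB flash drive")
    return [BOOTSECT_EXE, "/nt52", target]


def bootsect_succeeded(output):
    """Look for the success message quoted in step 6 (case-insensitive)."""
    return "successfully updated" in output.lower()


if __name__ == "__main__":
    # Hypothetical example: H: is my USB letter; replace it with yours.
    result = subprocess.run(bootsect_command("H:"), capture_output=True, text=True)
    print(result.stdout)
    print("Done." if bootsect_succeeded(result.stdout) else "bootsect did not report success.")
```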

Step 7:

Now type cd.. and press Enter.

Then type cd usb_prep8 and press Enter.

Type usb_prep8 again (this time to run the program) and press Enter.

Step 8:

Your Command Prompt window will show a screen ending in "Press any key to continue...".

Press any key.

The usb_prep8 welcome screen will appear:

Prepares Windows XP LocalSource for Copy to USB-Drive:

0) Change Type of USB-Drive, currently [USB-stick]

1) Change XP Setup Source Path, currently []

2) Change Virtual TempDrive, currently [T:]

3) Change Target USB-Drive Letter, currently []

4) Make New Tempimage with XP LocalSource and Copy to USB-Drive

5) Use Existing Tempimage with XP LocalSource and Copy to USB-Drive

F) Change Log File - Simple OR Extended, currently [Simple]

Q) Quit

Enter your choice:_

Now insert your original Windows XP CD (or a Windows XP CD with SATA AHCI drivers integrated) into your CD/DVD drive,

and go back to the Command Prompt window.

Type 1 and press Enter.

A "Browse For Folder" window will appear; select your CD/DVD drive and click OK.

The result: "XP Setup Source Path" changes to G:\ (yours may be different).
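To double-check that option 1 points at a real Windows XP source: a 32-bit XP CD keeps its setup files in an I386 folder, and text-mode setup reads I386\TXTSETUP.SIF. Here is a minimal Python sketch, assuming Python 3 and a hypothetical helper name, that checks for both (case-insensitively, as Windows does):

```python
import os


def looks_like_xp_source(path):
    """True if `path` has an I386 folder containing TXTSETUP.SIF (case-insensitive)."""
    try:
        top = {name.upper(): name for name in os.listdir(path)}
    except OSError:
        return False  # drive not ready, path missing, no permission, ...
    i386 = top.get("I386")
    if i386 is None or not os.path.isdir(os.path.join(path, i386)):
        return False
    inner = {name.upper() for name in os.listdir(os.path.join(path, i386))}
    return "TXTSETUP.SIF" in inner


if __name__ == "__main__":
    # G:\ is my CD/DVD drive; yours may be different.
    print(looks_like_xp_source("G:\\"))
```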

Now for option 2: if the letter T is already assigned to a drive on your computer, you must change it; if not, leave it as it is.

How do you change it?

Type 2 and press Enter.

"Enter Available Virtual DriveLetter"

For example, if you don't have a drive S,

type S and press Enter.

You are back at the usb_prep8 welcome screen.
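Picking a free TempDrive letter is just "first preferred letter not in use". A minimal Python sketch, assuming you pass in the letters you see in My Computer; T comes first because it is usb_prep8's default, and the fallback order after it (S, R, Q, ...) is an arbitrary choice for illustration, not something usb_prep8 does itself:

```python
# Letters tried for usb_prep8's virtual TempDrive, in order. T is usb_prep8's
# default (option 2); the fallback order after it is an arbitrary choice here.
PREFERRED = "TSRQPONMLKJIHGFED"


def pick_temp_drive(used_letters):
    """Return the first preferred letter that is not already in use."""
    used = {letter.strip().rstrip(":").upper() for letter in used_letters}
    for letter in PREFERRED:
        if letter not in used:
            return letter
    raise RuntimeError("no free drive letter left for the TempDrive")


if __name__ == "__main__":
    # Hypothetical example: the letters shown in My Computer.
    print(pick_temp_drive(["C:", "D:", "G:", "H:", "T:"]))  # prints S
```

With T: already taken, it returns S, just like the example above.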

Now type 3 and press Enter.

"Please give Target USB-Drive Letter e.g type U"

Enter Target USB-Drive Letter:

My flash drive letter is H,

so I type H and press Enter.

You are back at the usb_prep8 welcome screen.
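Before pressing 4, options 1, 2 and 3 should point at three different drives, and the target should not be your system drive. usb_prep8 may already catch some of these mistakes itself; the Python sketch below is only a hypothetical checklist of what to look for, using the menu's own option numbers and assuming C: is the system drive unless told otherwise:

```python
def check_usb_prep8_settings(source_path, temp_drive, target_drive, system_drive="C:"):
    """Return a list of problems with the usb_prep8 menu settings (empty if none).

    source_path:  option 1, e.g. "G:\\" (the CD/DVD drive)
    temp_drive:   option 2, e.g. "T:" (the virtual TempDrive)
    target_drive: option 3, e.g. "H" (the USB flash drive)
    """
    def letter(value):
        # First character of the setting, upper-cased; "" if the setting is empty.
        return value.strip()[:1].upper()

    source, temp, target, system = (
        letter(v) for v in (source_path, temp_drive, target_drive, system_drive)
    )
    problems = []
    if not source:
        problems.append("option 1: XP setup source path is empty")
    if not temp:
        problems.append("option 2: TempDrive letter is empty")
    elif temp in (source, target, system):
        problems.append("option 2: TempDrive letter clashes with another drive")
    if not target:
        problems.append("option 3: target USB drive letter is empty")
    elif target == system:
        problems.append("option 3: target is the system drive")
    elif target == source:
        problems.append("options 1 and 3: source and target are the same drive")
    return problems


if __name__ == "__main__":
    # My settings from this guide: source G:\, TempDrive T:, target H.
    print(check_usb_prep8_settings("G:\\", "T:", "H") or "Settings look OK.")
```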

Now type 4 and press Enter to make a new temporary image with the XP LocalSource and copy it to the USB flash drive.

Please wait a few seconds.

"WARNING, ALL DATA ON NON-REMOVABLE DISK DRIVE T: WILL BE LOST! Proceed with Format (Y/N)?"

This warning refers to the virtual TempDrive (T:, or the letter you chose in option 2), not to a real disk. Type Y and press Enter.

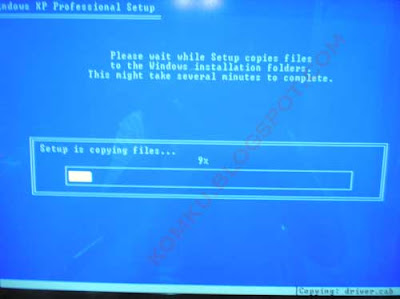

Please wait.

When formatting of the virtual disk is complete, press any key to continue.

Please wait while the LocalSource folder is being made.

When you see "Making of LocalSource folder $WIN_NT$.~LS Ready",

press any key to continue.

"Copy TempDrive Files to USB-Drive in about 15 minutes = Yes OR STOP = End Program = No"

Click Yes and wait.

"Would you like USB-stick to be preferred Boot Drive U: bla... bla..."

Click Yes.

"Would you like to unmount the Virtual Drive ?"

Click Yes, wait a few seconds, and press any key.

Press any key again to close usb_prep8.

Your USB flash drive is now ready.
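If you want to peek at the finished flash drive, a minimal Python sketch can look for the expected top-level entries. Only $WIN_NT$.~LS comes from the usb_prep8 messages above; NTLDR and BOOT.INI are assumptions (the NT 5.x boot code written by bootsect /nt52 loads NTLDR, and the step 9 boot menu would normally live in BOOT.INI). The helper name is hypothetical, and H:\ is just my drive letter:

```python
import os

# $WIN_NT$.~LS is named by usb_prep8 in step 8. NTLDR and BOOT.INI are
# assumptions: bootsect /nt52 boot code loads NTLDR, and the boot menu is
# normally read from BOOT.INI.
EXPECTED = ("$WIN_NT$.~LS", "NTLDR", "BOOT.INI")


def missing_on_usb(usb_root):
    """Return expected entries absent from the root of the USB drive (case-insensitive)."""
    present = {name.upper() for name in os.listdir(usb_root)}
    return [name for name in EXPECTED if name.upper() not in present]


if __name__ == "__main__":
    print(missing_on_usb("H:\\") or "Looks ready to boot.")
```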

Step 9:

Now insert your USB flash drive into your notebook (e.g. Acer Aspire One).

Go into the BIOS setup and set USB HDD (or USB ZIP on some other machines) as the first boot device.

Then boot from your USB flash drive.

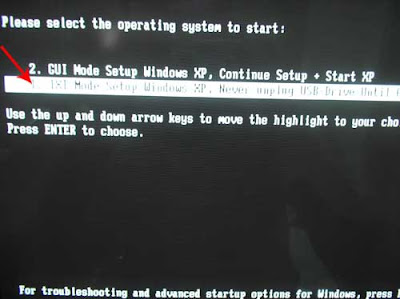

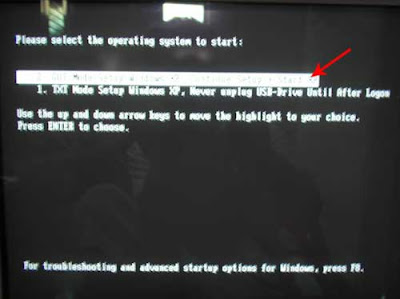

Select "TXT Mode Setup Windows XP, Never unplug USB-Drive Until After Logon".

Once the hard disk is detected, delete all partitions (this erases everything on the disk), create a single partition, and install Windows XP on that partition.

Then wait.

Once text-mode setup is complete, the computer will restart.

This time, select "GUI Mode setup Windows XP, Continue Setup + Start XP".

Continue Windows XP setup, and you're done!

Windows XP is installed.

Remember, you can use this tutorial to install Windows XP on other computers too, not just the Aspire One and Asus EEE-PC.

Good luck!

UPDATE 2:

FAQ

said…

What if I use a 1 GB flash drive? Will it still work?

Yes, it will. :)

Anonymous said…

Hi all,

can somebody tell me how to install XP with this method on a SATA HD?

I finished the tutorial, but when I boot up, the HDD is not shown.

Help me, please.

Follow this guide (Slipstreaming driver using nLite).

FYI, instead of burning the result to a CD, you can save it as an image and mount it with a virtual drive such as Daemon Tools (Download).

Anonymous said…

Trying to restore XPSP3 on Medion akoya MD96910 netbook. Worked fine until 99% of files copied then got: Setup cannot copy the file: iaahci.cat - giving 3 options (retry, skip, quit). Retry didn't work so skipped. Similar message for iaahci.inf iaStor.cat iaStor.inf iaStor.sys. Subsequent XP Boot then failed. I do not understand why the intelSATA drivers (these files) won't load. Will try again with Netbook Bios AHCI mode set to Disable but not confident that will make any difference. Help!

Ref: MD96910 netbook restore. With AHCI mode disabled it works without loading the intelSATA files. I assume these will have to be loaded, just got to figure how to do that now! Thanks for great install guide - brilliant. If you could put a comment about intelSATA it would be great, I cannot be the only person who doesn't understand it.

Allen said…

PeToUSB didn't find any removable flash drive. Please help me.

By the way, I'm using Windows Vista.

Try using XP, or

use another USB port. My friend tried it on his Lenovo: when he plugged his USB flash drive into the left side of his notebook, PeToUSB didn't find any removable flash drive. When he moved it to the right side, it worked (on XP). :)

Read this:

Anonymous said…

Easy and well written; thanks for making our lives easier. I should mention, though, that I first tried it under Windows Vista and the USB flash drive wasn't readable at all. The whole process must be done under Windows XP. Again, thanks, and I wish you all the best.

Anonymous said…

I was having the "No USB Disks" error with PeToUSB as well, with my Samsung U3 (2 GB) and my microSD + reader (4 GB) on 32-bit Vista. The USB drives were all formatted as FAT.

Then I switched to XP, and the USB drive was detected.

I want to do this in Vista...

Ali Jaffer said…

If you're on Windows Vista, try using the "Run as Administrator" function for both formatting the drive and command prompt.

Anonymous said…

Thanks for the instructions, works like a charm every time :D

Got one question though: can you use a partition manager to make partitions after XP has been installed successfully using this method?

Like Obama said... Yes We Can!

For more FAQs, read the comments.

Anonymous said…

Hi guys, this is a great blog. Guess what: I used the same instructions to install Server 2003 sr2, and it works well for my home server.

Thanks to all of you contributors.

UPDATE 3:

Everyone please read this WinToFlash Guide - Install Windows XP from USB Flash drive

UPDATE 4:

NOTE TO ANYBODY WHOSE FLASH DRIVE CANNOT BE DETECTED... If you are on Vista or Windows 7 (like me), you have to right-click PeToUSB.exe, go to Properties > Compatibility tab, and in Windows 7 choose RUN IN COMPATIBILITY MODE and select Windows XP (Service Pack 3).

In Vista, choose a Windows XP compatibility mode in the same way. Also, in Windows 7, Command Prompt will probably give you a "denied" message on one of the commands. Before opening Command Prompt, right-click it (after typing cmd into the Start menu search box) and click "Run as Administrator".

-Jason

"The 11 Year Old Tech Wiz"

UPDATE 5:

Install Windows 7 from USB Flash drive guide

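This article provides a detailed tutorial that guides users through installing Windows XP from a USB flash drive. It covers steps such as preparing the USB flash drive, downloading the necessary software and configuring the BIOS, and it addresses common problems.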
