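Basic steps for implementing a neural network:

- Initialize the network parameters
- Forward propagation
- Compute the loss function
- Backward propagation
- Update the parameters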

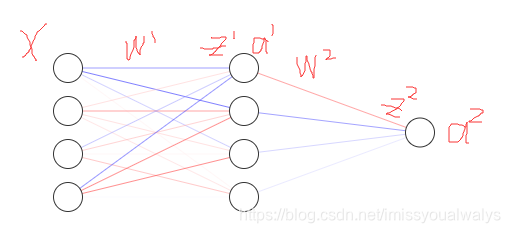

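We start with a two-layer neural network as an example:

The network has two layers (the input layer is usually not counted): layer 0 is the input layer, layer 1 is the hidden layer, and layer 2 is the output layer. The first activation function $g^{[1]}(z^{[1]})$ is $\tanh$, and the second activation function $g^{[2]}(z^{[2]})$ is the sigmoid function.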
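To make the two activations concrete, here is a small sketch. It assumes that the `sigmoid` imported from `planar_utils` in the full code is the standard logistic function $1/(1+e^{-z})$; `tanh_derivative` is a hypothetical helper name. The identity $\tanh'(z) = 1 - \tanh^2(z)$ is the reason `backward_propagation` later multiplies by `1 - np.power(A1, 2)`.

```python
import numpy as np

def sigmoid(z):
    """Logistic sigmoid, g^[2] in this network: maps any real z into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def tanh_derivative(z):
    """Derivative of tanh (g^[1]), written in terms of tanh itself: 1 - tanh(z)^2."""
    return 1.0 - np.tanh(z) ** 2

z = np.linspace(-3.0, 3.0, 7)
print(sigmoid(0.0))   # 0.5
print(np.tanh(0.0))   # 0.0

# Compare the closed-form tanh derivative with a central finite difference
h = 1e-5
numeric = (np.tanh(z + h) - np.tanh(z - h)) / (2 * h)
print(np.max(np.abs(numeric - tanh_derivative(z))))  # a very small number
```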

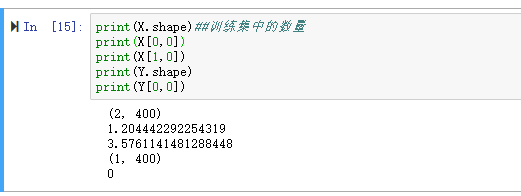

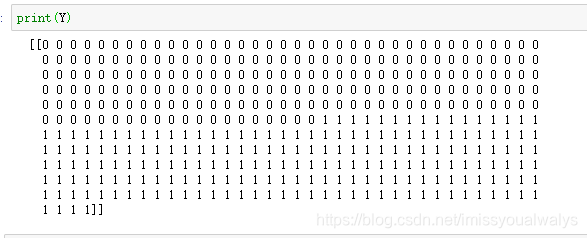

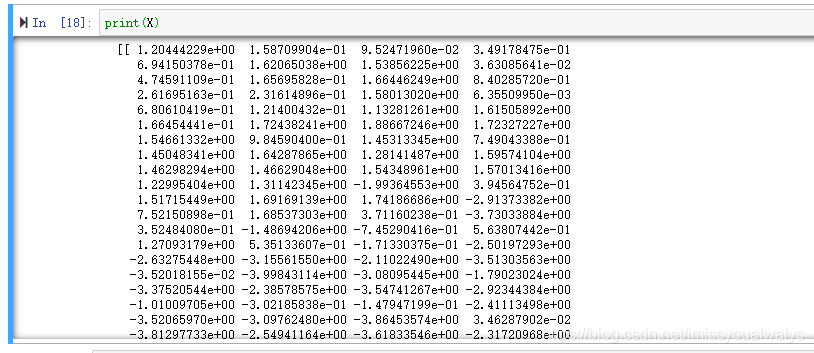

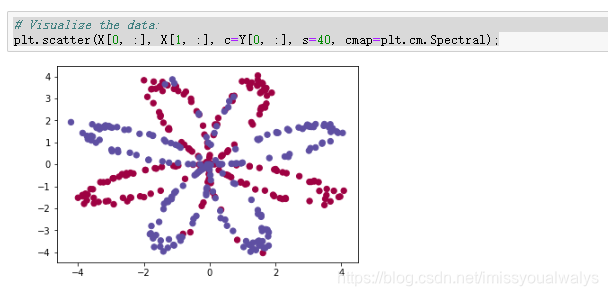

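First, a look at the dataset used for this task.

The input X is a 2×400 array: 2 features and 400 examples, with one example per column. The output Y is a 1×400 array, again holding 400 examples.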
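The "one example per column" layout is easy to get backwards, so here is a minimal sketch of it. The data is a synthetic stand-in (random points with hypothetical labels), not the flower-shaped dataset returned by `load_planar_dataset`; only the shapes match the description.

```python
import numpy as np

# Synthetic stand-in for the real data (an assumption: random points, not the planar dataset)
rng = np.random.default_rng(0)
X = rng.standard_normal((2, 400))              # 2 features x 400 examples
Y = (X[0:1, :] * X[1:2, :] > 0).astype(int)    # hypothetical 0/1 labels, shape (1, 400)

n_features, m = X.shape        # rows are features, columns are examples
print(X.shape, Y.shape, m)     # (2, 400) (1, 400) 400

first_example = X[:, 0:1]      # one example = one column, kept 2-D as shape (2, 1)
print(first_example.shape)     # (2, 1)
```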

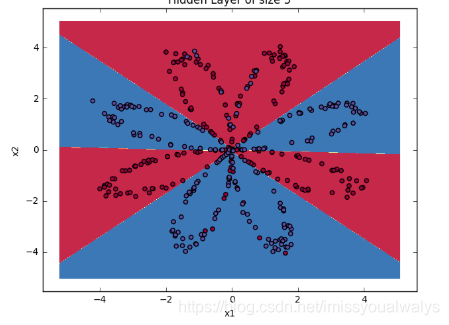

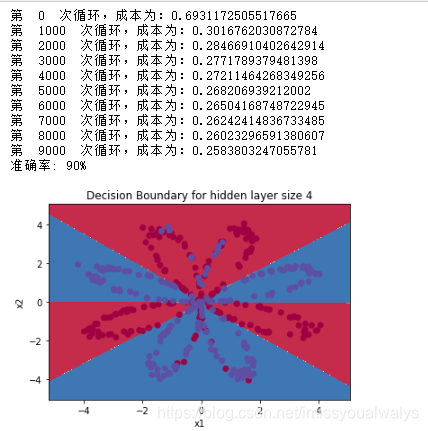

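The values 0 and 1 in Y stand for red and blue, and X holds the point coordinates. The goal is to solve a binary classification problem with a neural network: from a point's coordinates in X, predict whether its label Y is 0 or 1, i.e. whether the point is red or blue. Once trained, the result can be visualized in a way similar to the accompanying figure.

We therefore build a two-layer network with 2 input nodes, a hidden layer whose size we choose ourselves, and 1 output node.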
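The layer sizes follow directly from the data shapes. The sketch below uses a hypothetical helper `layer_sizes` and zero-filled placeholder arrays with the dataset's shapes, and counts the trainable parameters for a hidden size of 4: $4 \times 2 + 4 + 1 \times 4 + 1 = 17$.

```python
import numpy as np

def layer_sizes(X, Y, n_h=4):
    """Hypothetical helper: read n_x and n_y off the data shapes; n_h is a free choice."""
    n_x = X.shape[0]   # input nodes = number of features (rows of X)
    n_y = Y.shape[0]   # output nodes = number of label rows (1 for binary classification)
    return n_x, n_h, n_y

X = np.zeros((2, 400))   # placeholder with the dataset's shape
Y = np.zeros((1, 400))
n_x, n_h, n_y = layer_sizes(X, Y)

# Shapes of W1, b1, W2, b2 for the 2-layer network
shapes = {"W1": (n_h, n_x), "b1": (n_h, 1), "W2": (n_y, n_h), "b2": (n_y, 1)}
n_params = sum(rows * cols for rows, cols in shapes.values())
print((n_x, n_h, n_y), n_params)   # (2, 4, 1) 17
```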

1 Initialize the hidden-layer size and the weight matrices

```python
import numpy as np

def initialize_parameters(X, n_h, Y):
    """
    Initialize the weight matrix and bias between the input and hidden layers,
    and between the hidden and output layers.
    """
    n_x = X.shape[0]  # input layer size (number of features)
    # n_h is the hidden layer size, passed in by the caller (nn_model uses 4)
    n_y = Y.shape[0]  # output layer size

    np.random.seed(1)

    # Small random weights, zero biases
    W1 = np.random.randn(n_h, n_x) * 0.01
    b1 = np.zeros((n_h, 1))
    W2 = np.random.randn(n_y, n_h) * 0.01
    b2 = np.zeros((n_y, 1))

    # The assertions mainly ensure the matrix dimensions are correct
    assert W1.shape == (n_h, n_x)
    assert b1.shape == (n_h, 1)
    assert W2.shape == (n_y, n_h)
    assert b2.shape == (n_y, 1)

    parameters = {"W1": W1,
                  "b1": b1,
                  "W2": W2,
                  "b2": b2}

    return parameters  # returns a dict
```
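`initialize_parameters` draws W1 and W2 from `np.random.randn` instead of filling them with a constant. A commonly given reason is symmetry: if every hidden unit starts with the same weights, they all compute the same activation and receive the same gradient, so they never become different. The sketch below checks this with made-up data and a deliberately constant initialization (0.5 everywhere, an assumption chosen only for the demonstration). The `* 0.01` scaling is usually explained as keeping $Z^{[1]}$ small so that tanh starts out in its non-saturated region.

```python
import numpy as np

# Deliberately symmetric initialization: every hidden unit gets the same weights.
X = np.array([[1.0, -2.0, 0.5],
              [0.3,  1.5, -1.0]])          # 2 features x 3 examples (made-up data)
Y = np.array([[1, 0, 1]])
W1 = np.full((4, 2), 0.5); b1 = np.zeros((4, 1))
W2 = np.full((1, 4), 0.5); b2 = np.zeros((1, 1))

m = X.shape[1]
A1 = np.tanh(W1 @ X + b1)                        # forward pass
A2 = 1.0 / (1.0 + np.exp(-(W2 @ A1 + b2)))
dZ2 = A2 - Y                                     # backward pass (same formulas as the article)
dZ1 = (W2.T @ dZ2) * (1 - A1 ** 2)
dW1 = (dZ1 @ X.T) / m

# All four hidden units produce identical activations and receive identical
# gradients, so gradient descent keeps their weights identical.
print(np.allclose(A1, A1[0]), np.allclose(dW1, dW1[0]))  # True True
```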

2 Forward propagation

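```python
import numpy as np
from planar_utils import sigmoid  # helper module from the course assignment (see the full code)

def forward_propagation(X, parameters):
    """Forward propagation: compute the output A2 from the input X."""
    W1 = parameters["W1"]
    b1 = parameters["b1"]
    W2 = parameters["W2"]
    b2 = parameters["b2"]

    # Forward pass to compute A2
    Z1 = np.dot(W1, X) + b1   # shape (n_h, m); b1 broadcasts across the m columns
    A1 = np.tanh(Z1)
    Z2 = np.dot(W2, A1) + b2  # shape (1, m)
    A2 = sigmoid(Z2)

    # Assert that the output has the expected shape
    assert A2.shape == (1, X.shape[1])

    # Backward propagation needs the values in cache, so store them
    cache = {"Z1": Z1,
             "A1": A1,
             "Z2": Z2,
             "A2": A2}

    return A2, cache
```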
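The vectorized `np.dot(W1, X) + b1` processes all m examples at once; broadcasting adds the `(n_h, 1)` bias to every column. The sketch below, with random made-up parameters and a local `sigmoid` assumed to be the standard logistic function, checks that this gives the same result as feeding the examples through one column at a time.

```python
import numpy as np

rng = np.random.default_rng(1)
n_x, n_h, n_y, m = 2, 4, 1, 5
X = rng.standard_normal((n_x, m))                                      # made-up inputs
W1 = rng.standard_normal((n_h, n_x)) * 0.01; b1 = rng.standard_normal((n_h, 1)) * 0.1
W2 = rng.standard_normal((n_y, n_h)) * 0.01; b2 = rng.standard_normal((n_y, 1)) * 0.1

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Vectorized: one matrix product handles all m columns; the biases broadcast across columns.
A2_vec = sigmoid(W2 @ np.tanh(W1 @ X + b1) + b2)

# Per-example loop: feed each column x of shape (n_x, 1) separately.
A2_loop = np.hstack([
    sigmoid(W2 @ np.tanh(W1 @ X[:, i:i + 1] + b1) + b2) for i in range(m)
])

print(A2_vec.shape, np.allclose(A2_vec, A2_loop))  # (1, 5) True
```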

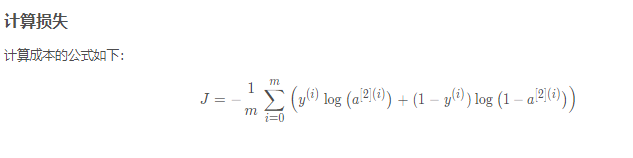

3 Compute the cost function:

The loss is the cross-entropy function.
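Written out for $m$ examples, with $a^{[2](i)}$ the network output and $y^{(i)}$ the label of example $i$, the cross-entropy cost that `compute_cost` evaluates is:

$$
J = -\frac{1}{m}\sum_{i=1}^{m}\left( y^{(i)}\log a^{[2](i)} + \left(1 - y^{(i)}\right)\log\left(1 - a^{[2](i)}\right)\right)
$$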

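```python
import numpy as np

def compute_cost(A2, Y, parameters):
    """Cross-entropy cost averaged over the m examples."""
    m = Y.shape[1]  # number of examples
    # `parameters` is accepted but not used by the plain cross-entropy cost

    # Compute the cost
    logprobs = np.multiply(np.log(A2), Y) + np.multiply(1 - Y, np.log(1 - A2))
    cost = -np.sum(logprobs) / m

    cost = float(np.squeeze(cost))  # make sure cost is a plain Python float
    assert isinstance(cost, float)

    return cost
```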
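A worked example of the same computation on two made-up examples: the first has label 1 and predicted probability 0.8, the second has label 0 and predicted probability 0.1, so the cost is $-(\ln 0.8 + \ln 0.9)/2$.

```python
import numpy as np

A2 = np.array([[0.8, 0.1]])   # predicted probabilities for 2 made-up examples
Y = np.array([[1, 0]])        # true labels
m = Y.shape[1]

logprobs = np.multiply(np.log(A2), Y) + np.multiply(1 - Y, np.log(1 - A2))
cost = float(-np.sum(logprobs) / m)
# By hand: -(ln 0.8 + ln 0.9) / 2 = (0.2231 + 0.1054) / 2 ≈ 0.1643
print(round(cost, 4))   # 0.1643
```

One caveat with this formula: if an entry of `A2` is exactly 0 or 1 in floating point, `np.log` returns `-inf` and the product with a zero label becomes `nan`. A common safeguard is to compute the logs on `np.clip(A2, eps, 1 - eps)` for a small `eps`.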

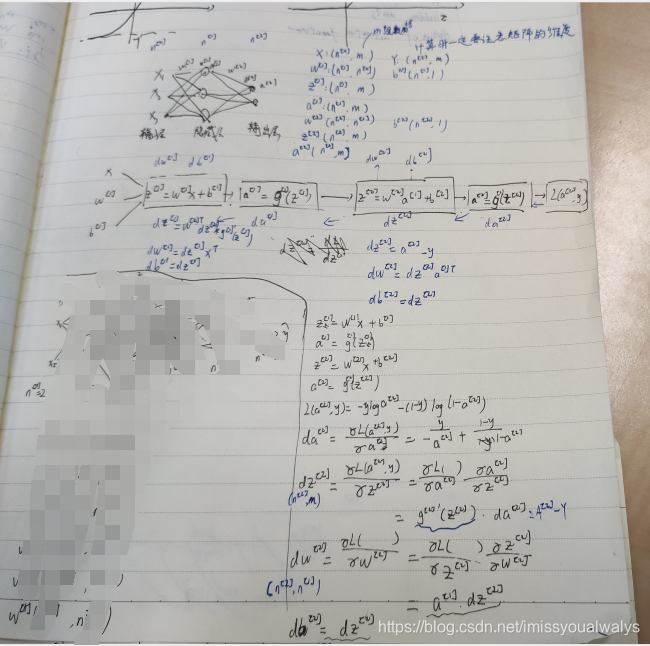

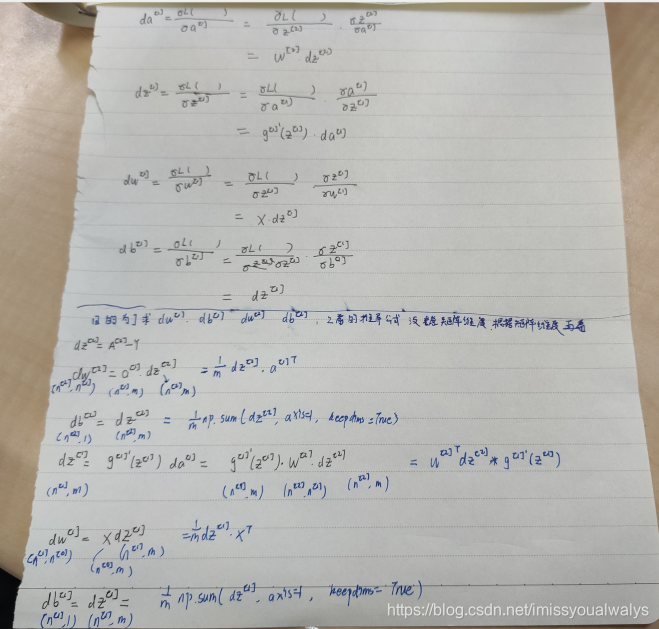

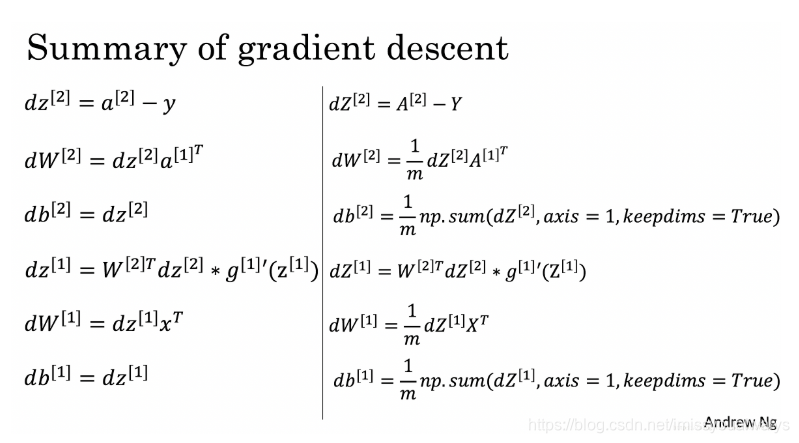

4 Backward propagation:

Derivation:

Formulas:
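The derivation itself is not reproduced in this text. The following formulas are read off directly from `backward_propagation` ($\odot$ is the element-wise product, and $1 - (A^{[1]})^2$ is the tanh derivative). The simple form of $dZ^{[2]}$ comes from combining the sigmoid output with the cross-entropy loss.

$$
\begin{aligned}
dZ^{[2]} &= A^{[2]} - Y \\
dW^{[2]} &= \frac{1}{m}\, dZ^{[2]} A^{[1]T} \\
db^{[2]} &= \frac{1}{m} \sum_{i=1}^{m} dZ^{[2](i)} \\
dZ^{[1]} &= W^{[2]T} dZ^{[2]} \odot \left(1 - \left(A^{[1]}\right)^2\right) \\
dW^{[1]} &= \frac{1}{m}\, dZ^{[1]} X^{T} \\
db^{[1]} &= \frac{1}{m} \sum_{i=1}^{m} dZ^{[1](i)}
\end{aligned}
$$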

```python
import numpy as np

def backward_propagation(parameters, cache, X, Y):
    m = X.shape[1]  # number of examples

    W2 = parameters["W2"]
    A1 = cache["A1"]
    A2 = cache["A2"]

    # Output layer: sigmoid + cross-entropy gives dZ2 = A2 - Y
    dZ2 = A2 - Y
    dW2 = (1 / m) * np.dot(dZ2, A1.T)
    db2 = (1 / m) * np.sum(dZ2, axis=1, keepdims=True)

    # Hidden layer: the tanh derivative is 1 - A1^2
    dZ1 = np.multiply(np.dot(W2.T, dZ2), 1 - np.power(A1, 2))
    dW1 = (1 / m) * np.dot(dZ1, X.T)
    db1 = (1 / m) * np.sum(dZ1, axis=1, keepdims=True)

    grads = {"dW1": dW1,
             "db1": db1,
             "dW2": dW2,
             "db2": db2}

    return grads
```
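A standard way to verify hand-derived gradients like these is gradient checking: compare them with central finite differences of the cost. The sketch below is self-contained, so it re-implements the same forward pass, cost, and backward formulas in compact form under hypothetical names (`forward`, `cost`, `backward`, `gradient_check`), using random made-up parameters and data. A correct backward pass should give a relative difference many orders of magnitude below 1.

```python
import numpy as np

def forward(params, X):
    """Forward pass of the 2-layer tanh/sigmoid network; returns A1 and A2."""
    A1 = np.tanh(params["W1"] @ X + params["b1"])
    A2 = 1.0 / (1.0 + np.exp(-(params["W2"] @ A1 + params["b2"])))
    return A1, A2

def cost(params, X, Y):
    """Cross-entropy cost averaged over the m examples."""
    _, A2 = forward(params, X)
    return -np.mean(Y * np.log(A2) + (1 - Y) * np.log(1 - A2))

def backward(params, X, Y):
    """Analytic gradients (same formulas as backward_propagation), keyed by parameter name."""
    m = X.shape[1]
    A1, A2 = forward(params, X)
    dZ2 = A2 - Y
    dZ1 = (params["W2"].T @ dZ2) * (1 - A1 ** 2)
    return {"W1": dZ1 @ X.T / m, "b1": dZ1.sum(axis=1, keepdims=True) / m,
            "W2": dZ2 @ A1.T / m, "b2": dZ2.sum(axis=1, keepdims=True) / m}

def gradient_check(params, X, Y, eps=1e-6):
    """Relative difference between analytic and central-difference gradients."""
    grads = backward(params, X, Y)
    numeric, analytic = [], []
    for name, P in params.items():
        for idx in np.ndindex(P.shape):
            old = P[idx]
            P[idx] = old + eps
            plus = cost(params, X, Y)
            P[idx] = old - eps
            minus = cost(params, X, Y)
            P[idx] = old                                # restore the entry
            numeric.append((plus - minus) / (2 * eps))  # central difference
            analytic.append(grads[name][idx])
    numeric, analytic = np.array(numeric), np.array(analytic)
    return np.linalg.norm(numeric - analytic) / (np.linalg.norm(numeric) + np.linalg.norm(analytic))

rng = np.random.default_rng(0)
X = rng.standard_normal((2, 10))                # made-up data: 2 features x 10 examples
Y = (rng.random((1, 10)) > 0.5).astype(float)
params = {"W1": rng.standard_normal((4, 2)), "b1": rng.standard_normal((4, 1)),
          "W2": rng.standard_normal((1, 4)), "b2": rng.standard_normal((1, 1))}
err = gradient_check(params, X, Y)
print(err)   # tiny if the analytic gradients are correct
```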

5 Update the parameters

```python
def update_parameters(parameters, grads, learning_rate=1.2):
    """One gradient-descent step: move every parameter against its gradient."""
    W1 = parameters["W1"]
    W2 = parameters["W2"]
    b1 = parameters["b1"]
    b2 = parameters["b2"]

    dW1, dW2 = grads["dW1"], grads["dW2"]
    db1, db2 = grads["db1"], grads["db2"]

    W1 = W1 - learning_rate * dW1
    b1 = b1 - learning_rate * db1
    W2 = W2 - learning_rate * dW2
    b2 = b2 - learning_rate * db2

    parameters = {"W1": W1,
                  "b1": b1,
                  "W2": W2,
                  "b2": b2}

    return parameters
```
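Because every gradient key is the parameter name prefixed with `d`, the same update can be written once for all parameters. This is a sketch with a hypothetical function name `update_parameters_compact` that relies on that naming convention; like the original, it returns a new dict and leaves the input arrays unchanged.

```python
import numpy as np

def update_parameters_compact(parameters, grads, learning_rate=1.2):
    """Hypothetical compact variant: one gradient-descent step for every key.
    Assumes each gradient key is the parameter name prefixed with 'd' (W1 -> dW1)."""
    return {name: value - learning_rate * grads["d" + name]
            for name, value in parameters.items()}

parameters = {"W1": np.array([[1.0, 2.0]]), "b1": np.array([[0.5]])}
grads = {"dW1": np.array([[0.1, -0.2]]), "db1": np.array([[1.0]])}
new = update_parameters_compact(parameters, grads, learning_rate=0.5)
print(new["W1"], new["b1"])   # approximately [[0.95, 2.1]] and [[0.0]]
```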

Putting it all together

```python
def nn_model(X, Y, n_h, num_iterations, print_cost=False):
    np.random.seed(3)  # set the random seed

    parameters = initialize_parameters(X, n_h, Y)

    for i in range(num_iterations):
        A2, cache = forward_propagation(X, parameters)           # forward pass
        cost = compute_cost(A2, Y, parameters)                   # cross-entropy cost
        grads = backward_propagation(parameters, cache, X, Y)    # gradients
        parameters = update_parameters(parameters, grads, learning_rate=0.5)  # gradient-descent step

        if print_cost and i % 1000 == 0:
            print("Iteration", i, "cost: " + str(cost))

    return parameters
```
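To see the whole loop work end to end without the course's helper modules, here is a compact, self-contained sketch of the same forward, cost, backward, and update cycle. Assumptions: the data is two synthetic Gaussian blobs (not the planar dataset), `train` is a hypothetical name, updates are done in place, and the cost is computed on probabilities clipped away from 0 and 1 to avoid `log(0)`. It records the cost every 1000 iterations, the same interval `nn_model` prints at.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train(X, Y, n_h=4, num_iterations=2000, learning_rate=0.5, seed=1):
    """Compact version of nn_model's loop: forward, cost, backward, update."""
    rng = np.random.default_rng(seed)
    n_x, m = X.shape
    W1 = rng.standard_normal((n_h, n_x)) * 0.01; b1 = np.zeros((n_h, 1))
    W2 = rng.standard_normal((1, n_h)) * 0.01;   b2 = np.zeros((1, 1))
    costs = []
    for i in range(num_iterations):
        A1 = np.tanh(W1 @ X + b1)                        # forward propagation
        A2 = sigmoid(W2 @ A1 + b2)
        A2c = np.clip(A2, 1e-12, 1 - 1e-12)              # only for the cost: avoid log(0)
        cost = -np.mean(Y * np.log(A2c) + (1 - Y) * np.log(1 - A2c))
        dZ2 = A2 - Y                                     # backward propagation
        dW2 = dZ2 @ A1.T / m; db2 = dZ2.sum(axis=1, keepdims=True) / m
        dZ1 = (W2.T @ dZ2) * (1 - A1 ** 2)
        dW1 = dZ1 @ X.T / m;  db1 = dZ1.sum(axis=1, keepdims=True) / m
        W1 -= learning_rate * dW1; b1 -= learning_rate * db1   # parameter update
        W2 -= learning_rate * dW2; b2 -= learning_rate * db2
        if i % 1000 == 0:
            costs.append(cost)
    A2 = sigmoid(W2 @ np.tanh(W1 @ X + b1) + b2)
    accuracy = np.mean((A2 > 0.5) == Y)
    return costs, accuracy

# Synthetic data: class 0 around (-2, -2), class 1 around (2, 2)
rng = np.random.default_rng(0)
X = np.hstack([rng.normal(-2.0, 1.0, (2, 100)), rng.normal(2.0, 1.0, (2, 100))])
Y = np.hstack([np.zeros((1, 100)), np.ones((1, 100))])
costs, acc = train(X, Y)
print([round(float(c), 3) for c in costs], acc)
```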

6 Prediction:

Prediction only requires forward propagation.

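```python
def predict(parameters, X):
    """Compute probabilities with forward propagation, then classify as 0/1 with a 0.5 threshold."""
    A2, cache = forward_propagation(X, parameters)

    # np.round with no decimals argument rounds to the nearest integer. Every entry of A2
    # lies in (0, 1), so predictions contains only 0s and 1s. This is binary classification
    # with a single output node, where 0 and 1 each denote one class: values below 0.5
    # become 0 and values above 0.5 become 1.
    predictions = np.round(A2)

    return predictions
```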
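`np.round` rounds exact halves to the nearest even integer, so a probability of exactly 0.5 becomes 0. The sketch below, on a few made-up probabilities, shows that this agrees with an explicit `A2 > 0.5` comparison; the explicit form makes the decision threshold visible and easy to change.

```python
import numpy as np

A2 = np.array([[0.2, 0.5, 0.51, 0.97]])     # made-up output probabilities
by_round = np.round(A2)                      # halves round to even, so 0.5 -> 0.0
by_threshold = (A2 > 0.5).astype(float)      # explicit decision threshold at 0.5
print(by_round, by_threshold)                # both [[0. 0. 1. 1.]]
print(np.array_equal(by_round, by_threshold))  # True
```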

Full code

```python
import numpy as np
import matplotlib.pyplot as plt
from testCases import *
import sklearn
import sklearn.datasets
import sklearn.linear_model
from planar_utils import plot_decision_boundary, sigmoid, load_planar_dataset, load_extra_datasets

# %matplotlib inline  # Jupyter magic: uncomment when running in a notebook

np.random.seed(1)  # set a seed so that the results are consistent

X, Y = load_planar_dataset()


def initialize_parameters(X, n_h, Y):
    # Initialize the input-to-hidden and hidden-to-output weight matrices and biases
    n_x = X.shape[0]  # input layer size
    n_y = Y.shape[0]  # output layer size (n_h, the hidden layer size, is passed in)

    np.random.seed(1)

    W1 = np.random.randn(n_h, n_x) * 0.01
    b1 = np.zeros((n_h, 1))
    W2 = np.random.randn(n_y, n_h) * 0.01
    b2 = np.zeros((n_y, 1))

    assert W1.shape == (n_h, n_x)
    assert b1.shape == (n_h, 1)
    assert W2.shape == (n_y, n_h)
    assert b2.shape == (n_y, 1)

    return {"W1": W1, "b1": b1, "W2": W2, "b2": b2}


def forward_propagation(X, parameters):
    # Forward propagation
    W1, b1 = parameters["W1"], parameters["b1"]
    W2, b2 = parameters["W2"], parameters["b2"]

    Z1 = np.dot(W1, X) + b1
    A1 = np.tanh(Z1)
    Z2 = np.dot(W2, A1) + b2
    A2 = sigmoid(Z2)

    # Assert that the output has the expected shape
    assert A2.shape == (1, X.shape[1])

    # Backward propagation needs these values, so keep them in cache
    cache = {"Z1": Z1, "A1": A1, "Z2": Z2, "A2": A2}
    return A2, cache


def compute_cost(A2, Y, parameters):
    m = Y.shape[1]

    # Cross-entropy cost
    logprobs = np.multiply(np.log(A2), Y) + np.multiply(1 - Y, np.log(1 - A2))
    cost = float(np.squeeze(-np.sum(logprobs) / m))

    assert isinstance(cost, float)
    return cost


def backward_propagation(parameters, cache, X, Y):
    m = X.shape[1]
    W2 = parameters["W2"]
    A1, A2 = cache["A1"], cache["A2"]

    dZ2 = A2 - Y
    dW2 = (1 / m) * np.dot(dZ2, A1.T)
    db2 = (1 / m) * np.sum(dZ2, axis=1, keepdims=True)
    dZ1 = np.multiply(np.dot(W2.T, dZ2), 1 - np.power(A1, 2))
    dW1 = (1 / m) * np.dot(dZ1, X.T)
    db1 = (1 / m) * np.sum(dZ1, axis=1, keepdims=True)

    return {"dW1": dW1, "db1": db1, "dW2": dW2, "db2": db2}


def update_parameters(parameters, grads, learning_rate=1.2):
    # One gradient-descent step on every parameter
    W1 = parameters["W1"] - learning_rate * grads["dW1"]
    b1 = parameters["b1"] - learning_rate * grads["db1"]
    W2 = parameters["W2"] - learning_rate * grads["dW2"]
    b2 = parameters["b2"] - learning_rate * grads["db2"]

    return {"W1": W1, "b1": b1, "W2": W2, "b2": b2}


def nn_model(X, Y, n_h, num_iterations, print_cost=False):
    np.random.seed(3)  # set the random seed

    parameters = initialize_parameters(X, n_h, Y)

    for i in range(num_iterations):
        A2, cache = forward_propagation(X, parameters)
        cost = compute_cost(A2, Y, parameters)
        grads = backward_propagation(parameters, cache, X, Y)
        parameters = update_parameters(parameters, grads, learning_rate=0.5)

        if print_cost and i % 1000 == 0:
            print("Iteration", i, "cost: " + str(cost))

    return parameters


def predict(parameters, X):
    # Compute probabilities using forward propagation, and classify to 0/1 using 0.5 as the threshold
    A2, cache = forward_propagation(X, parameters)
    predictions = np.round(A2)  # entries of A2 lie in (0, 1), so every prediction is 0 or 1
    return predictions


parameters = nn_model(X, Y, n_h=4, num_iterations=10000, print_cost=True)

# Plot the decision boundary
plot_decision_boundary(lambda x: predict(parameters, x.T), X, np.squeeze(Y))
plt.title("Decision Boundary for hidden layer size " + str(4))
plt.show()

predictions = predict(parameters, X)

# This accuracy formula only works because Y and predictions contain only 0s and 1s:
# np.dot(Y, predictions.T) counts positions where both are 1 (only 1*1 gives 1), and
# np.dot(1 - Y, 1 - predictions.T) turns the original 0s into 1s, counting positions where both are 0.
correct = np.dot(Y, predictions.T) + np.dot(1 - Y, 1 - predictions.T)  # 1x1 array
print('Accuracy: %d%%' % (correct.item() / Y.size * 100))
```
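A small worked example of the dot-product accuracy trick, with made-up labels and predictions: `Y · pᵀ` counts the positions where both are 1, `(1 − Y) · (1 − p)ᵀ` counts the positions where both are 0, and their sum is the number of correct predictions. For 0/1 arrays this equals the simpler `np.mean(predictions == Y)`.

```python
import numpy as np

Y = np.array([[1, 0, 1, 1]])             # true labels (made-up)
predictions = np.array([[1, 0, 0, 1]])   # model output after rounding (made-up)

# Y · p^T counts "both 1"; (1 - Y) · (1 - p)^T counts "both 0"
correct = np.dot(Y, predictions.T) + np.dot(1 - Y, 1 - predictions.T)   # shape (1, 1), value 3
acc_dot = correct.item() / Y.size * 100
acc_mean = np.mean(predictions == Y) * 100   # same number, by direct comparison
print(acc_dot, acc_mean)   # 75.0 75.0
```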

Results

Reference blogs:

https://blog.youkuaiyun.com/u013733326/article/details/79827273

http://www.ai-start.com/

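This article walks through building a neural network from scratch in Python, covering the key steps: initializing the parameters, forward propagation, computing the loss function, backward propagation, and updating the parameters. Using a concrete binary classification problem, it shows how to train the network and make predictions.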
