1. Prerequisites

Logstash version: 6.1.1

Elasticsearch version: 6.1.1

Kibana version: 6.1.1

Kafka version: 0.8.2.1

2. Environment setup

Omitted.

3. Add the dependencies to pom.xml

<dependency>
    <groupId>net.logstash.logback</groupId>
    <artifactId>logstash-logback-encoder</artifactId>
    <version>4.11</version>
</dependency>

<dependency>
    <groupId>com.github.danielwegener</groupId>
    <artifactId>logback-kafka-appender</artifactId>
    <version>0.1.0</version>
    <scope>runtime</scope>
</dependency>

4. Configure the appender in logback

<appender name="KafkaAppender" class="com.github.danielwegener.logback.kafka.KafkaAppender">
    <encoder class="com.github.danielwegener.logback.kafka.encoding.LayoutKafkaMessageEncoder">
        <layout class="net.logstash.logback.layout.LogstashLayout">
            <includeContext>true</includeContext>
            <includeCallerData>true</includeCallerData>
            <customFields>{"system":"test"}</customFields>
            <fieldNames class="net.logstash.logback.fieldnames.ShortenedFieldNames"/>
        </layout>
        <charset>UTF-8</charset>
    </encoder>
    <!-- The Kafka topic must match the topic in the Logstash config file; otherwise Kafka will silently ignore you -->
    <topic>abklog_topic</topic>
    <keyingStrategy class="com.github.danielwegener.logback.kafka.keying.HostNameKeyingStrategy" />
    <deliveryStrategy class="com.github.danielwegener.logback.kafka.delivery.AsynchronousDeliveryStrategy" />
    <producerConfig>bootstrap.servers=kafka_ip:kafka_port</producerConfig>
</appender>

<!-- You may also need to add this -->
<logger name="Application_ERROR">
    <appender-ref ref="KafkaAppender"/>
</logger>

<!-- And this as well -->
<root>
    <level value="INFO" />
    <appender-ref ref="KafkaAppender" />
</root>
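Each `<producerConfig>` element carries one `key=value` pair that ends up in the Kafka producer's properties. The sketch below only illustrates that shape; `ProducerConfigSketch` and its `parse` method are hypothetical names, not part of logback-kafka-appender, and the `localhost:9092` address is a placeholder.

```java
import java.util.Properties;

// Hypothetical helper for illustration only; not the appender's actual code.
class ProducerConfigSketch {

    // Turns "key=value" entries into Properties, splitting on the first '=' only
    // so that values containing '=' survive intact.
    static Properties parse(String... entries) {
        Properties props = new Properties();
        for (String entry : entries) {
            String[] kv = entry.split("=", 2);
            if (kv.length != 2) {
                throw new IllegalArgumentException("Expected key=value but got: " + entry);
            }
            props.setProperty(kv[0].trim(), kv[1].trim());
        }
        return props;
    }

    public static void main(String[] args) {
        // Placeholder broker address; replace with the real kafka_ip:kafka_port.
        Properties props = parse("bootstrap.servers=localhost:9092", "acks=1");
        System.out.println(props.getProperty("bootstrap.servers"));
        System.out.println(props.getProperty("acks"));
    }
}
```

Further producer settings would then be added as additional `<producerConfig>` lines, one pair per element.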

5. Configure the Logstash configuration file

input {
    kafka {
        bootstrap_servers => "127.0.0.1:9099"
        # topic_id => "abklog_topic"
        # reset_beginning => false
        topics => ["abklog_topic"]
    }
}

filter {
    grok {
        match => {
            "message" => "(?m)%{TIMESTAMP_ISO8601:timestamp}\s+\[%{DATA:trace},%{DATA:span}\]\s+%{GREEDYDATA:msg}"
        }
    }
    mutate {
        add_field => { "appname" => "api" }
    }
}

output {
    elasticsearch {
        action => "index"
        hosts => "127.0.0.1:9200"
        index => "abk_logs"
    }
}
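To see what the grok expression in the filter is meant to extract, it can help to try an equivalent regular expression outside Logstash. The following is a rough sketch under stated assumptions: the class `GrokSketch`, the simplified ISO 8601 timestamp pattern, and the sample log line are all made up for illustration, and Java's `(?s)` flag stands in for the `(?m)` used in the grok pattern so that `msg` can span several lines.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical stand-in for the grok filter; approximates, not reproduces, grok's patterns.
class GrokSketch {

    // timestamp: simplified ISO 8601; trace/span: lazy match (like DATA); msg: greedy (like GREEDYDATA).
    private static final Pattern LINE = Pattern.compile(
            "(?s)(?<timestamp>\\d{4}-\\d{2}-\\d{2}[T ]\\d{2}:\\d{2}:\\d{2}(?:[.,]\\d+)?(?:Z|[+-]\\d{2}:?\\d{2})?)"
            + "\\s+\\[(?<trace>.*?),(?<span>.*?)\\]"
            + "\\s+(?<msg>.*)");

    // Returns the extracted fields, or an empty map when the line does not match.
    static Map<String, String> parse(String message) {
        Map<String, String> fields = new LinkedHashMap<>();
        Matcher m = LINE.matcher(message);
        if (m.find()) {
            fields.put("timestamp", m.group("timestamp"));
            fields.put("trace", m.group("trace"));
            fields.put("span", m.group("span"));
            fields.put("msg", m.group("msg"));
        }
        return fields;
    }

    public static void main(String[] args) {
        // Invented sample line in the layout the pattern expects.
        String sample = "2018-01-05 10:15:30.123 [abc123,def456] Order created";
        parse(sample).forEach((k, v) -> System.out.println(k + " = " + v));
    }
}
```

When a line does not match, Logstash keeps the event and tags it with `_grokparsefailure`, so checking sample lines this way can save a round trip through the pipeline.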

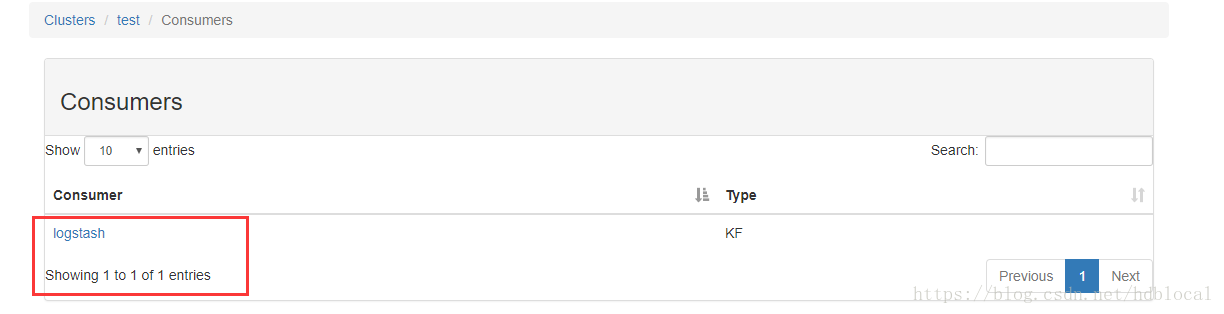

6. Start the project and write some log entries; the log messages are then consumed by Logstash
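One way to confirm that events actually reached Elasticsearch is to search the `abk_logs` index for the `appname` field added by the mutate filter. The snippet below is a minimal sketch, assuming Elasticsearch is reachable at `127.0.0.1:9200` as in the output section; the class `EsSearchSketch` and its `searchUrl` helper are hypothetical names introduced here.

```java
import java.io.BufferedReader;
import java.io.InputStreamReader;
import java.io.UnsupportedEncodingException;
import java.net.HttpURLConnection;
import java.net.URL;
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

// Hypothetical check that queries the index written by the Logstash output.
class EsSearchSketch {

    // Builds a URI search request: /<index>/_search?size=5&q=<query>
    static String searchUrl(String host, String index, String query) {
        try {
            return "http://" + host + "/" + index + "/_search?size=5&q="
                    + URLEncoder.encode(query, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            // UTF-8 is always available on the JVM.
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) throws Exception {
        URL url = new URL(searchUrl("127.0.0.1:9200", "abk_logs", "appname:api"));
        HttpURLConnection conn = (HttpURLConnection) url.openConnection();
        conn.setRequestMethod("GET");
        // Print the raw JSON response; the hits should contain the logged messages.
        try (BufferedReader in = new BufferedReader(
                new InputStreamReader(conn.getInputStream(), StandardCharsets.UTF_8))) {
            String line;
            while ((line = in.readLine()) != null) {
                System.out.println(line);
            }
        }
    }
}
```

The same index can of course be browsed in Kibana; the request above is just a quick check that does not depend on the UI.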

=============================================================================================

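This article describes how to integrate Logstash with Kafka to collect and process log data, covering environment setup, dependency configuration, logback configuration, and Logstash configuration.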
