java.io.IOException: Failed to replace a bad datanode on the existing pipeline due to no more good datanodes being available to try.


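1. On the NameNode host of my Hadoop cluster, I ran the following command to append a local file to the end of an existing HDFS file:

```shell
hadoop fs -appendToFile ./liubei.txt /sanguo/shuguo/text.txt
```

and got the following error: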

java.io.IOException: Failed to replace a bad datanode on the existing pipeline due to no more good datanodes being available to try.

It means that the client could not replace a bad DataNode, because no other good DataNode was available to take its place in the existing pipeline.

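2. Cause

My cluster has only 3 DataNodes, and my default replication factor is 3, so every DataNode in the cluster is already part of the write pipeline. During a write to HDFS, when one DataNode fails, the client tries to keep the replication at 3 by finding another usable DataNode to replace it. But all 3 DataNodes are already in the pipeline, so there is no spare node to swap in, which produces the error "Failed to replace a bad datanode".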
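To make the cause concrete, here is a minimal Python simulation (not HDFS code). It assumes a hypothetical cluster of DataNodes named dn1 to dn3, a replication factor of 3, and a simplified replacement rule that picks any DataNode not already in the pipeline. The exception class `NoGoodDatanodeError` and the function `replace_bad_datanode` are made up for illustration and only stand in for the client's behavior and the IOException above.

```python
class NoGoodDatanodeError(IOError):
    """Hypothetical stand-in for the IOException raised by the HDFS client."""


def replace_bad_datanode(cluster, pipeline, bad):
    """Drop `bad` from the pipeline and add one DataNode that is not already in it.

    Simplified rule (an assumption, not the real HDFS placement logic):
    any cluster node outside the current pipeline counts as a candidate,
    and the replacement is simply appended at the end.
    """
    remaining = [dn for dn in pipeline if dn != bad]
    candidates = [dn for dn in cluster if dn not in pipeline]
    if not candidates:
        raise NoGoodDatanodeError(
            "Failed to replace a bad datanode on the existing pipeline "
            "due to no more good datanodes being available to try.")
    return remaining + [candidates[0]]


if __name__ == "__main__":
    pipeline = ["dn1", "dn2", "dn3"]  # replication 3: every DataNode is in use
    try:
        replace_bad_datanode(["dn1", "dn2", "dn3"], pipeline, bad="dn2")
    except NoGoodDatanodeError as err:
        print("3 DataNodes:", err)
    # With a hypothetical fourth DataNode there is a spare to swap in.
    print("4 DataNodes:", replace_bad_datanode(["dn1", "dn2", "dn3", "dn4"], pipeline, bad="dn2"))
```

With three DataNodes the first call raises the error; adding a hypothetical fourth node dn4 gives the client a spare to swap in.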

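3. How to check your current replication factor

Look at hdfs-site.xml in your Hadoop configuration (under etc/hadoop in the Hadoop installation directory).

If it contains the following lines, your replication factor is 3; the value element holds the replication factor: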

```xml
<property>
  <name>dfs.replication</name>
  <value>3</value>
</property>
```

If there is no such property, Hadoop uses the default of 3 replicas.
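A small Python sketch of this check: it reads `dfs.replication` from hdfs-site.xml content and falls back to 3 when the property is absent. The function name `read_replication` is hypothetical, and it only looks at this one file, whereas Hadoop merges several configuration sources, so treat it as a quick check rather than the effective value.

```python
import xml.etree.ElementTree as ET

DEFAULT_REPLICATION = 3  # used when hdfs-site.xml does not set dfs.replication


def read_replication(xml_text):
    """Return dfs.replication from hdfs-site.xml content, or the default of 3."""
    root = ET.fromstring(xml_text)
    for prop in root.findall("property"):
        if (prop.findtext("name") or "").strip() == "dfs.replication":
            return int((prop.findtext("value") or "").strip())
    return DEFAULT_REPLICATION


if __name__ == "__main__":
    site_xml = """<configuration>
  <property>
    <name>dfs.replication</name>
    <value>2</value>
  </property>
</configuration>"""
    print(read_replication(site_xml))                           # 2
    print(read_replication("<configuration></configuration>"))  # 3
```

For a real file, `ET.parse(path).getroot()` can take the place of `ET.fromstring`. On a machine with Hadoop installed, `hdfs getconf -confKey dfs.replication` prints the value Hadoop actually resolves.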

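4. How to fix the error

Add the following lines to hdfs-site.xml. This is a client-side setting (note the dfs.client prefix), so it needs to be in the hdfs-site.xml used by the machine that runs the write command: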

```xml
<property>
  <name>dfs.client.block.write.replace-datanode-on-failure.policy</name>
  <value>NEVER</value>
</property>
```

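According to the official Apache documentation, NEVER means: never add a new datanode.

In other words, with the policy set to NEVER, the client will not add a new DataNode after a failure; it continues writing with the remaining DataNodes in the pipeline.

In general, for clusters with 3 or fewer DataNodes, it is not recommended to enable this DataNode-replacement feature.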

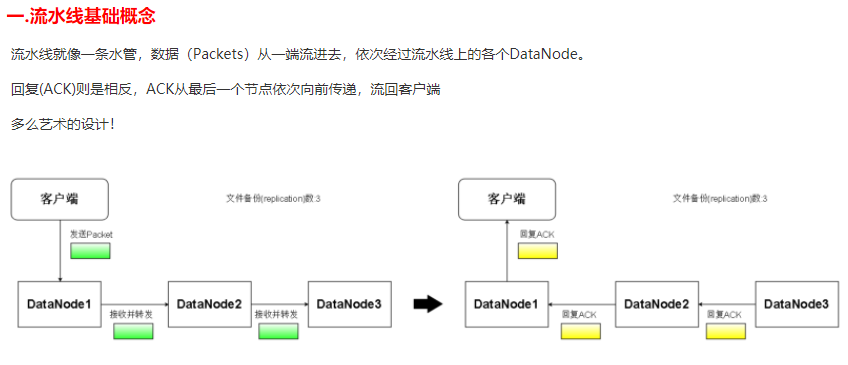
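For comparison with NEVER, the sketch below encodes in Python the three policies as hdfs-default.xml describes them; it is a simplification, not the actual DFSClient code. For DEFAULT the documentation says: with r the replication factor and n the number of DataNodes left in the pipeline, add a new DataNode only if r >= 3 and either floor(r/2) >= n, or r > n and the block is hflushed/appended. The policy is only consulted when `dfs.client.block.write.replace-datanode-on-failure.enable` is true, which is its default. The function name `should_add_datanode` is hypothetical.

```python
def should_add_datanode(policy, r, n, appended_or_hflushed):
    """Whether the client should add a new DataNode after dropping a bad one.

    r: replication factor of the file
    n: number of DataNodes still in the pipeline
    appended_or_hflushed: True if the block is being appended or was hflushed
    Mirrors the policy descriptions in hdfs-default.xml (simplified).
    """
    if policy == "NEVER":
        return False  # never add a new DataNode; keep writing with n nodes
    if policy == "ALWAYS":
        return True   # always add a new DataNode when one is removed
    if policy == "DEFAULT":
        return r >= 3 and (r // 2 >= n or (r > n and appended_or_hflushed))
    raise ValueError(f"unknown policy: {policy}")


if __name__ == "__main__":
    # My case: r = 3, one of 3 DataNodes failed (n = 2), and the write is an append.
    for policy in ("DEFAULT", "NEVER"):
        print(policy, should_add_datanode(policy, r=3, n=2, appended_or_hflushed=True))
```

Plugging in my case shows that DEFAULT asks for a replacement, which cannot be found on a 3-DataNode cluster, while NEVER does not.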

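5. What is a Pipeline?

Pipeline: the path along which the client sends data (Packets) to the DataNodes and receives their acknowledgements (ACKs) back.

A pipeline is made up of several DataNodes chained together, and data flows from the client into the pipeline. If DataNode A is closer to the head of the pipeline (the client end) than DataNode B, then A is said to be upstream of B, and B is downstream of A.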

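Steps for transmitting data along the pipeline:

- The client sends a Packet to the first DataNode in the pipeline. On receiving it, the first DataNode forwards it to the next DataNode, and each downstream DataNode does the same.
- Each DataNode that receives the Packet writes its data to disk.
- After the last DataNode in the pipeline receives the Packet, it sends an ACK to the previous DataNode to confirm receipt, and each upstream DataNode does the same.
- When the client receives the ACK from the first DataNode, the transmission of this Packet has succeeded.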
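These four steps can be traced with a toy Python model. It assumes an in-memory list of hypothetical DataNode names and ignores real networking, checksums and timeouts; the function `send_packet` is made up for illustration. Packets travel downstream node by node, ACKs travel back upstream in reverse order, and the client counts the Packet as delivered only when the ACK from the first DataNode arrives.

```python
def send_packet(pipeline, packet, failed=frozenset()):
    """Toy model of one Packet moving through a write pipeline.

    pipeline: DataNode names; the first element is the head of the pipeline
    failed:   names of DataNodes that crash when the Packet reaches them
    Returns (events, success).
    """
    events = []
    # Steps 1-2: the Packet flows downstream; each DataNode forwards it
    # to the next one (if any) and writes it to its local disk.
    for i, dn in enumerate(pipeline):
        if dn in failed:
            events.append(f"{dn}: failed")
            return events, False  # in this model, no successful ACK reaches the client
        action = "forwarded and wrote to disk" if i < len(pipeline) - 1 else "wrote to disk"
        events.append(f"{dn}: received {packet}, {action}")
    # Step 3: the last DataNode ACKs first, then each upstream node does the same.
    for dn in reversed(pipeline):
        events.append(f"{dn}: ACK {packet}")
    # Step 4: the last event is the first DataNode's ACK, which is what
    # tells the client the Packet was delivered.
    return events, True


if __name__ == "__main__":
    events, ok = send_packet(["dn1", "dn2", "dn3"], "pkt-0")
    for e in events:
        print(e)
    print("client success:", ok)

    events, ok = send_packet(["dn1", "dn2", "dn3"], "pkt-1", failed={"dn2"})
    print(events, ok)
```

When a DataNode in the middle fails, as with `failed={"dn2"}`, this is the point at which the real client drops the bad node from the pipeline and, depending on the replace-datanode-on-failure policy, looks for a replacement.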

For more on pipelines, see this article: https://www.cnblogs.com/lqlqlq/p/12321930.html


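Summary: appending to a file in a Hadoop cluster failed with an IOException because the client could not replace a failed DataNode and no other DataNode was available. The fix is to set dfs.client.block.write.replace-datanode-on-failure.policy to NEVER in hdfs-site.xml so that no new DataNode is added. A pipeline is the path data takes through the DataNodes, with Packets forwarded downstream and ACKs returned upstream.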
