A module that was compiled using NumPy 1.x cannot be run in
NumPy 2.0.2 as it may crash. To support both 1.x and 2.x
versions of NumPy, modules must be compiled with NumPy 2.0.
Some module may need to rebuild instead e.g. with 'pybind11>=2.12'.

If you are a user of the module, the easiest solution will be to
downgrade to 'numpy<2' or try to upgrade the affected module.
We expect that some modules will need time to support NumPy 2.

Traceback (most recent call last):

File "/emotion-recogniton-pytorch-orangepiaipro-main/train_emotion_classifier.py", line 2, in <module>

import torch

File "/usr/local/miniconda3/lib/python3.9/site-packages/torch/__init__.py", line 1382, in <module>

from .functional import * # noqa: F403

File "/usr/local/miniconda3/lib/python3.9/site-packages/torch/functional.py", line 7, in <module>

import torch.nn.functional as F

File "/usr/local/miniconda3/lib/python3.9/site-packages/torch/nn/__init__.py", line 1, in <module>

from .modules import * # noqa: F403

File "/usr/local/miniconda3/lib/python3.9/site-packages/torch/nn/modules/__init__.py", line 35, in <module>

from .transformer import TransformerEncoder, TransformerDecoder, \

File "/usr/local/miniconda3/lib/python3.9/site-packages/torch/nn/modules/transformer.py", line 20, in <module>

device: torch.device = torch.device(torch._C._get_default_device()), # torch.device('cpu'),

/usr/local/miniconda3/lib/python3.9/site-packages/torch/nn/modules/transformer.py:20: UserWarning: Failed to initialize NumPy: _ARRAY_API not found (Triggered internally at /pytorch/torch/csrc/utils/tensor_numpy.cpp:84.)

device: torch.device = torch.device(torch._C._get_default_device()), # torch.device('cpu'),
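The NumPy message above suggests either downgrading to `numpy<2` or upgrading the affected module. Before choosing, it can help to confirm which versions are actually installed. The following is a minimal sketch and not part of the original log: the helper names `installed_version` and `numpy_is_2_or_newer` are hypothetical, and it assumes the installed `torch` build was compiled against NumPy 1.x, as the warning implies. It reads package metadata through `importlib.metadata`, so it does not import `numpy` or `torch` and should not trigger the crash itself.

```python
from importlib import metadata
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version string of a package, or None if it is absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def numpy_is_2_or_newer(numpy_version: str) -> bool:
    """True when the major version is 2 or higher (hypothetical helper)."""
    return int(numpy_version.split(".")[0]) >= 2


if __name__ == "__main__":
    # Report what is installed without importing the packages themselves.
    for name in ("numpy", "torch", "torchvision"):
        print(name, installed_version(name))

    numpy_version = installed_version("numpy")
    if numpy_version is not None and numpy_is_2_or_newer(numpy_version):
        # Assumption: the installed torch wheel was built against NumPy 1.x.
        print("NumPy 2.x detected; consider 'numpy<2' or a newer torch build.")
```

If the check reports NumPy 2.x, `pip install "numpy<2"` is the downgrade that the message itself recommends.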

Traceback (most recent call last):

File "/emotion-recogniton-pytorch-orangepiaipro-main/train_emotion_classifier.py", line 6, in <module>

from torchvision import transforms

File "/usr/local/miniconda3/lib/python3.9/site-packages/torchvision/__init__.py", line 6, in <module>

from torchvision import _meta_registrations, datasets, io, models, ops, transforms, utils

File "/usr/local/miniconda3/lib/python3.9/site-packages/torchvision/models/__init__.py", line 2, in <module>

from .convnext import *

File "/usr/local/miniconda3/lib/python3.9/site-packages/torchvision/models/convnext.py", line 8, in <module>

from ..ops.misc import Conv2dNormActivation, Permute

File "/usr/local/miniconda3/lib/python3.9/site-packages/torchvision/ops/__init__.py", line 1, in <module>

from ._register_onnx_ops import _register_custom_op

File "/usr/local/miniconda3/lib/python3.9/site-packages/torchvision/ops/_register_onnx_ops.py", line 5, in <module>

from torch.onnx import symbolic_opset11 as opset11

File "/usr/local/miniconda3/lib/python3.9/site-packages/torch/onnx/__init__.py", line 57, in <module>

from ._internal.onnxruntime import (

File "/usr/local/miniconda3/lib/python3.9/site-packages/torch/onnx/_internal/onnxruntime.py", line 34, in <module>

import onnx

File "/home/HwHiAiUser/.local/lib/python3.9/site-packages/onnx/__init__.py", line 11, in <module>

from onnx.external_data_helper import load_external_data_for_model, write_external_data_tensors, convert_model_to_external_data

File "/home/HwHiAiUser/.local/lib/python3.9/site-packages/onnx/external_data_helper.py", line 14, in <module>

from .onnx_pb import TensorProto, ModelProto

File "/home/HwHiAiUser/.local/lib/python3.9/site-packages/onnx/onnx_pb.py", line 8, in <module>

from .onnx_ml_pb2 import * # noqa

File "/home/HwHiAiUser/.local/lib/python3.9/site-packages/onnx/onnx_ml_pb2.py", line 33, in <module>

_descriptor.EnumValueDescriptor(

File "/usr/local/miniconda3/lib/python3.9/site-packages/google/protobuf/descriptor.py", line 789, in __new__

_message.Message._CheckCalledFromGeneratedFile()

TypeError: Descriptors cannot be created directly.

If this call came from a _pb2.py file, your generated code is out of date and must be regenerated with protoc >= 3.19.0.

If you cannot immediately regenerate your protos, some other possible workarounds are:

1. Downgrade the protobuf package to 3.20.x or lower.

2. Set PROTOCOL_BUFFERS_PYTHON_IMPLEMENTATION=python (but this will use pure-Python parsing and will be much slower).

More information: https://developers.google.com/protocol-buffers/docs/news/2022-05-06#python-updates
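The two workarounds listed in the protobuf message can be sketched in Python. This is an illustration under assumptions rather than a definitive fix: the function names `exceeds_3_20` and `use_pure_python_protobuf` are hypothetical, and the version check only compares the major and minor components. The environment variable has to be set before `google.protobuf` is first imported in the process, so in a script like `train_emotion_classifier.py` it would need to sit ahead of `import torch`.

```python
import os
from typing import Tuple


def parse_major_minor(version: str) -> Tuple[int, int]:
    """Extract (major, minor) from a version string such as '4.21.0'."""
    parts = version.split(".") + ["0"]  # pad so a bare '4' still parses
    return int(parts[0]), int(parts[1])


def exceeds_3_20(protobuf_version: str) -> bool:
    """True when the version is newer than the 3.20.x line named in workaround 1."""
    return parse_major_minor(protobuf_version) > (3, 20)


def use_pure_python_protobuf() -> str:
    """Apply workaround 2: select the pure-Python protobuf implementation.

    Must run before the first import of google.protobuf; parsing will be slower.
    """
    os.environ["PROTOCOL_BUFFERS_PYTHON_IMPLEMENTATION"] = "python"
    return os.environ["PROTOCOL_BUFFERS_PYTHON_IMPLEMENTATION"]
```

Workaround 1 corresponds to `pip install "protobuf<=3.20.3"` or any other 3.20.x release. The traceback shows `onnx` loading from `/home/HwHiAiUser/.local/lib/python3.9/site-packages` while `protobuf` loads from the miniconda environment, so upgrading `onnx` in the user site-packages may be another option worth trying.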

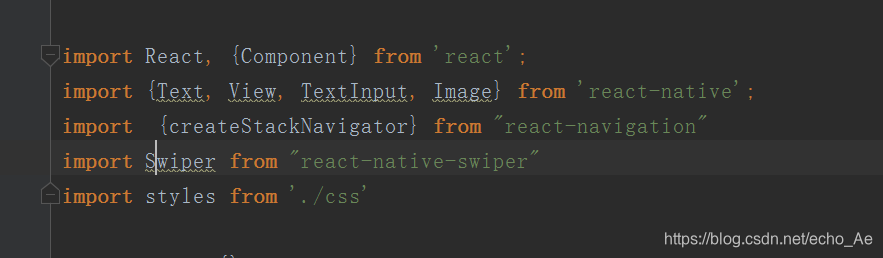

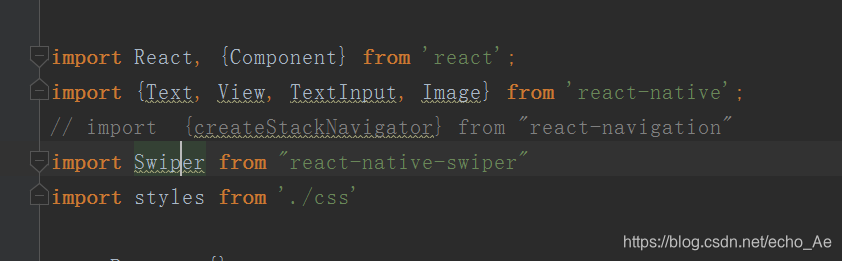

