Installing Flink

The build pom of Hudi 0.9 shows that it is compiled against Flink 1.12.2, so download that version from the official site:

Index of /dist/flink/flink-1.12.2

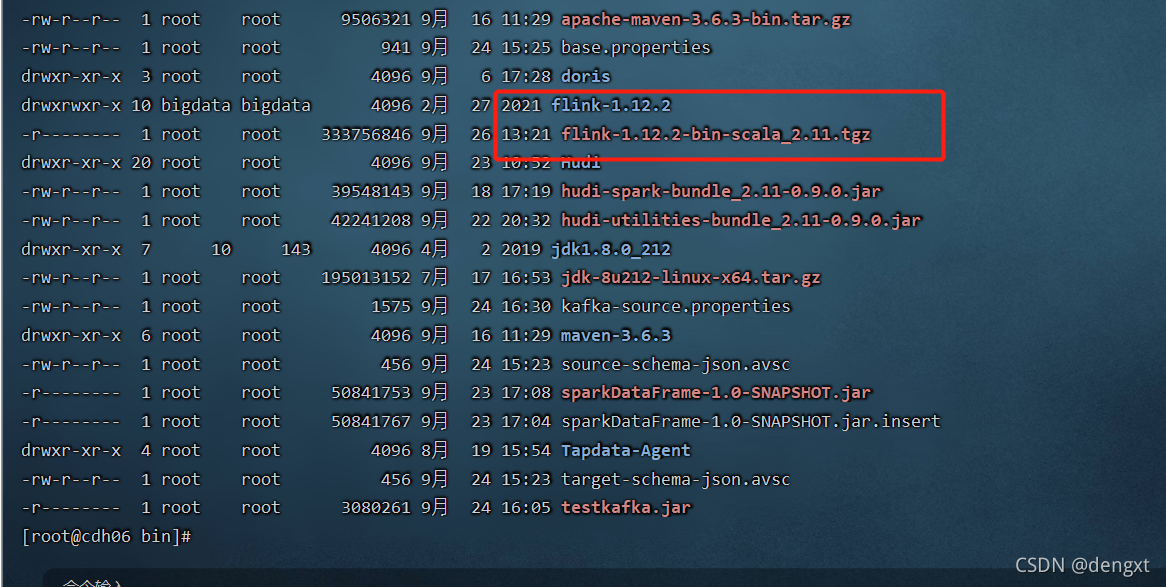
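
As a rough sketch, the download location can be assembled from the version numbers. The Scala version 2.11 is an assumption here, chosen to match the `_2.11` suffix of the Hudi bundle used later in this post; the target directory `/data/software` is taken from the paths shown later. The download and extract commands are left commented out because they need network access.

```shell
# Flink version taken from the Hudi 0.9 pom, as described above.
FLINK_VERSION="1.12.2"
# Assumption: Scala 2.11, to match hudi-flink-bundle_2.11.
SCALA_VERSION="2.11"

FLINK_TGZ="flink-${FLINK_VERSION}-bin-scala_${SCALA_VERSION}.tgz"
FLINK_URL="https://archive.apache.org/dist/flink/flink-${FLINK_VERSION}/${FLINK_TGZ}"
echo "$FLINK_URL"

# To download and extract on the target node, uncomment:
# wget "$FLINK_URL"
# tar -xzf "$FLINK_TGZ" -C /data/software
```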

(1) Upload to the cluster

Since this is only a test run, start with a single node: upload the archive to cdh06 and extract it there.

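Do not configure any Hadoop environment variables for now. Because this cluster runs CDH, let Flink detect the Hadoop environment already present on the system.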
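
If the automatic detection turns out not to be enough, one possible fallback is to export `HADOOP_CLASSPATH` from the `hadoop classpath` command before starting Flink. The helper below is only a sketch: the function name `ensure_hadoop_classpath` is made up for illustration, and it assumes the `hadoop` command is on the `PATH`.

```shell
# Hypothetical helper: set HADOOP_CLASSPATH only when it is not set yet.
ensure_hadoop_classpath() {
  # Respect a value that is already present.
  if [ -n "${HADOOP_CLASSPATH:-}" ]; then
    return 0
  fi
  # Ask the local Hadoop installation for its classpath.
  if command -v hadoop >/dev/null 2>&1; then
    HADOOP_CLASSPATH="$(hadoop classpath)"
    export HADOOP_CLASSPATH
    return 0
  fi
  echo "hadoop command not found; HADOOP_CLASSPATH left unset" >&2
  return 1
}
```

Call `ensure_hadoop_classpath` in the same shell session that later runs `start-cluster.sh`, so that the exported variable is visible to Flink.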

(2) Start the Flink cluster

cd /data/software/flink-1.12.2/bin

[xxx@cdh06 bin]# ./start-cluster.sh
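
To check that the cluster actually came up, one option is to query the JobManager's REST endpoint. This sketch assumes the default REST port 8081 and that it runs on the same node; `REST_HOST` and `REST_PORT` are illustrative variable names, so adjust them if `flink-conf.yaml` says otherwise.

```shell
# Assumption: default REST address of a local standalone cluster.
REST_HOST="${REST_HOST:-localhost}"
REST_PORT="${REST_PORT:-8081}"
OVERVIEW_URL="http://${REST_HOST}:${REST_PORT}/overview"

# /overview returns a short JSON summary of the cluster (task managers, slots).
if command -v curl >/dev/null 2>&1 && curl -sf --max-time 5 "$OVERVIEW_URL"; then
  echo
  echo "Flink REST endpoint is reachable"
else
  echo "Flink REST endpoint not reachable at $OVERVIEW_URL" >&2
fi
```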

(3) Start the Flink SQL client and attach the compiled Hudi bundle jar

[xxx@cdh06 bin]# ./sql-client.sh embedded -j /data/software/Hudi/packaging/hudi-flink-bundle/target/hudi-flink-bundle_2.11-0.9.0.jar

Starting the client this way causes problems in later operations.

Instead, it is recommended to put hudi-flink-bundle_2.11-0.9.0.jar into the flink/lib directory and then start the client:

./sql-client.sh embedded
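
The copy step could look roughly like the following. `install_bundle` is a hypothetical helper written for this post, not part of Flink or Hudi; it only copies the jar and refuses to run when either path is missing.

```shell
# Hypothetical helper: copy a bundle jar into a Flink lib directory.
install_bundle() {
  bundle_jar="$1"
  flink_lib="$2"
  # Fail early instead of silently creating a wrong path.
  if [ ! -f "$bundle_jar" ] || [ ! -d "$flink_lib" ]; then
    echo "missing $bundle_jar or $flink_lib" >&2
    return 1
  fi
  cp "$bundle_jar" "$flink_lib/"
}
```

With the paths used in this post, the call would be `install_bundle /data/software/Hudi/packaging/hudi-flink-bundle/target/hudi-flink-bundle_2.11-0.9.0.jar /data/software/flink-1.12.2/lib`. Jars in `lib` are loaded when the Flink processes start, so a cluster that is already running probably needs `./stop-cluster.sh` followed by `./start-cluster.sh` before the client is launched.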

Startup output:

Setting HADOOP_CONF_DIR=/etc/hadoop/conf because no HADOOP_CONF_DIR or HADOOP_CLASSPATH was set.

Setting HBASE_CONF_DIR=/etc/hbase/conf because no HBASE_CONF_DIR was set.

No default environment specified.

Searching for '/data/software/flink-1.12.2/conf/sql-client-defaults.yaml'...found.

Reading default environment from: file:/data/software/flink-1.12.2/conf/sql-client-defaults.yaml

No session environment specified.

Command history file path: /root/.
