
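Prerequisites: a working k8s cluster with the ingress service installed.

Design:

1. An Ingress rule maps a custom domain to nginx. PHP pages are executed by php-fpm, which uses Redis and then MySQL.

2. An Ingress rule maps a custom domain to Tomcat, which uses MySQL.

Notes:

1. Image pulls fail on a poor network connection, so work somewhere with a good connection (e.g. Wi-Fi).

2. When editing the hosts file on a Windows host, drag it to the desktop, edit it there, and then drag it back. Otherwise the change may not be picked up and name resolution fails.

3. PVCs store the data in dedicated directories on the storage host.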

I. Writing the configuration files

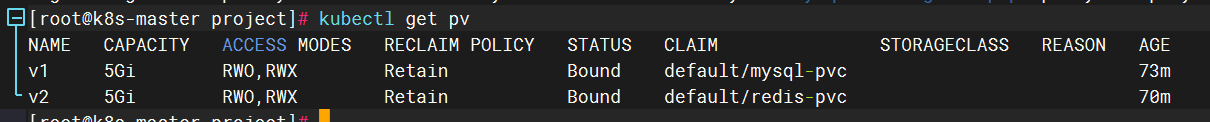

1. Data persistence

Install the NFS utilities on all nodes:

yum install -y nfs-utils

On the storage host:

vim /etc/exports

/data 192.168.58.0/24(rw,sync,no_root_squash,no_subtree_check)
/data/v1 192.168.58.0/24(rw,sync,no_root_squash,no_subtree_check)
/data/v2 192.168.58.0/24(rw,sync,no_root_squash,no_subtree_check)

Then run:

mkdir -p /data/v1 /data/v2
systemctl enable --now nfs-server.service
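The three export entries differ only in the path. Here is a minimal POSIX shell sketch that generates them from one shared option string. The helper name `make_exports` is hypothetical.

```shell
# Option string shared by all export entries (same options as above)
EXPORT_OPTS="rw,sync,no_root_squash,no_subtree_check"

# make_exports SUBNET DIR...: print one /etc/exports line per directory
make_exports() {
  local subnet="$1" dir
  shift
  for dir in "$@"; do
    printf '%s %s(%s)\n' "$dir" "$subnet" "$EXPORT_OPTS"
  done
}
```

For example, `make_exports 192.168.58.0/24 /data /data/v1 /data/v2 > /etc/exports` writes the file. If nfs-server is already running, `exportfs -ra` makes it re-read the file.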

On the cluster master node, define the persistent storage (PVs and PVCs):

vim pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: v1
spec:
  capacity:
    storage: 5Gi              # PV capacity
  accessModes: ["ReadWriteOnce","ReadWriteMany"]
  nfs:
    path: /data/v1            # NFS export that backs this PV
    server: 192.168.58.170    # NFS server address
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: v2
spec:
  capacity:
    storage: 5Gi              # PV capacity
  accessModes: ["ReadWriteOnce","ReadWriteMany"]
  nfs:
    path: /data/v2            # NFS export that backs this PV
    server: 192.168.58.170    # NFS server address

vim pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysql-pvc
spec:
  accessModes: ["ReadWriteMany"]
  resources:
    requests:
      storage: 5Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: redis-pvc
spec:
  accessModes: ["ReadWriteMany"]
  resources:
    requests:
      storage: 5Gi
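Each PVC binds to exactly one PV, so the two 5Gi PVs are taken by mysql-pvc and redis-pvc. The check below is a simplified model of static binding: the PV's capacity must be at least the request, and the PV must offer every requested access mode. Real binding also considers storageClassName, selectors and volume mode. `pv_matches_claim` is a hypothetical helper.

```shell
# pv_matches_claim PV_GI PV_MODES CLAIM_GI CLAIM_MODES
# Simplified static-binding check: capacity >= request, and every requested
# access mode (comma-separated) is offered by the PV.
pv_matches_claim() {
  local pv_gi="$1" pv_modes="$2" claim_gi="$3" claim_modes="$4" mode
  [ "$pv_gi" -ge "$claim_gi" ] || return 1
  for mode in $(printf '%s' "$claim_modes" | tr ',' ' '); do
    case ",$pv_modes," in
      *",$mode,"*) ;;   # mode offered by the PV
      *) return 1 ;;    # requested mode missing
    esac
  done
  return 0
}
```

After applying the manifests, both claims should show STATUS `Bound` in `kubectl get pv,pvc`.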

2. MySQL master-slave replication

vim mysql-m-s/mysql-secrets.yaml

# K8s Secret holding the MySQL passwords (base64-encoded)
apiVersion: v1
kind: Secret
metadata:
  name: mysql-secrets   # Secret name referenced by the Pods
type: Opaque            # generic type for arbitrary key/value pairs
data:
  # Values must be base64-encoded first (e.g. 123.com -> MTIzLmNvbQ==)
  root-password: MTIzLmNvbQ==           # MySQL root password (123.com)
  replication-password: cmVwbDEyMw==    # password of the replication user repl (repl123)
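Secret data values must be base64 strings with no trailing newline. `echo` appends a newline, which silently changes the password. Below is a small helper for producing the values above. The name `b64` is hypothetical.

```shell
# b64 VALUE: base64-encode VALUE for a Secret's data field.
# printf '%s' emits no trailing newline, whereas `echo VALUE | base64` would
# encode "VALUE\n" instead (123.com would become MTIzLmNvbQo=).
b64() {
  printf '%s' "$1" | base64
}
```

Alternatively, `kubectl create secret generic mysql-secrets --from-literal=root-password=123.com --from-literal=replication-password=repl123` does the encoding itself.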

vim mysql-m-s/mysql-configmap.yaml

# K8s ConfigMap holding non-sensitive configuration (config files and scripts)
apiVersion: v1
kind: ConfigMap
metadata:
  name: mysql-config   # ConfigMap name referenced by the Pods
data:
  # --------------------------
  # Master core configuration
  # --------------------------
  master.cnf: |
    [mysqld]
    server-id=1                  # unique server ID (must differ from the slave's)
    log_bin=mysql-bin            # enable the binary log (required for replication)
    skip-host-cache              # disable the host cache (less DNS dependence)
    skip-name-resolve            # disable hostname resolution (faster connections)
    default_authentication_plugin=mysql_native_password   # avoids authentication-plugin compatibility problems
  # --------------------------
  # Slave core configuration
  # --------------------------
  slave.cnf: |
    [mysqld]
    server-id=2                  # unique server ID (must differ from the master's)
    relay_log=mysql-relay-bin    # enable the relay log (stores events received from the master)
    log_bin=mysql-bin            # enable the binary log (allows cascading replication)
    read_only=1                  # read-only slave (root is exempt)
    skip-host-cache
    skip-name-resolve
  # --------------------------
  # Master init script (runs on first start, when the data directory is empty)
  # --------------------------
  init-master.sh: |
    #!/bin/bash
    set -e   # exit immediately if a command fails
    # Wait until MySQL answers (so the script does not run before the server is up)
    until mysqladmin ping -h localhost --silent; do
      echo "Waiting for the master MySQL to start..."
      sleep 2
    done
    # 1. Create the replication user repl (the slave connects to the master as this user)
    mysql -u root -p"$MYSQL_ROOT_PASSWORD" -e "CREATE USER IF NOT EXISTS 'repl'@'%' IDENTIFIED BY '$REPLICATION_PASSWORD';"
    # 2. Grant the replication privilege (replication only)
    mysql -u root -p"$MYSQL_ROOT_PASSWORD" -e "GRANT REPLICATION SLAVE ON *.* TO 'repl'@'%';"
    # 3. Create the application databases
    mysql -u root -p"$MYSQL_ROOT_PASSWORD" -e "CREATE DATABASE IF NOT EXISTS discuz;"
    mysql -u root -p"$MYSQL_ROOT_PASSWORD" -e "CREATE DATABASE IF NOT EXISTS biyesheji;"
    # 4. Create additional users and privileges
    mysql -u root -p"$MYSQL_ROOT_PASSWORD" -e "CREATE USER IF NOT EXISTS 'discuz_user'@'%' IDENTIFIED BY '123.com';"
    mysql -u root -p"$MYSQL_ROOT_PASSWORD" -e "GRANT ALL PRIVILEGES ON discuz.* TO 'discuz_user'@'%';"
    mysql -u root -p"$MYSQL_ROOT_PASSWORD" -e "DROP USER IF EXISTS 'root'@'%';"
    mysql -u root -p"$MYSQL_ROOT_PASSWORD" -e "CREATE USER IF NOT EXISTS 'root'@'%' IDENTIFIED BY '123.com';"
    mysql -u root -p"$MYSQL_ROOT_PASSWORD" -e "GRANT ALL PRIVILEGES ON *.* TO 'root'@'%' WITH GRANT OPTION;"
    mysql -u root -p"$MYSQL_ROOT_PASSWORD" -e "FLUSH PRIVILEGES;"   # reload the privilege tables
    # Record the master's binlog position (starting point for the slave)
    mysql -u root -p"$MYSQL_ROOT_PASSWORD" -e "SHOW MASTER STATUS\G" > /var/lib/mysql/master-status.txt
  # --------------------------
  # Slave init script (runs on first start, when the data directory is empty)
  # --------------------------
  init-slave.sh: |
    #!/bin/bash
    set -e
    # Wait for the slave's own MySQL to start
    until mysqladmin ping -h localhost --silent; do
      echo "Waiting for the slave MySQL to start..."
      sleep 2
    done
    # Wait for the master (with a timeout instead of waiting forever)
    timeout=120
    elapsed=0
    until mysqladmin ping -h mysql-master --silent -u root -p"$MYSQL_ROOT_PASSWORD"; do
      echo "Waiting for the master... (waited $elapsed s)"
      sleep 2
      elapsed=$((elapsed + 2))
      if [ $elapsed -ge $timeout ]; then
        echo "ERROR: the master did not become ready before the timeout!"
        exit 1
      fi
    done
    # 1. Read the replication starting point from the master (retry until the position is valid)
    retry=3
    while [ $retry -gt 0 ]; do
      MASTER_STATUS=$(mysql -u root -p"$MYSQL_ROOT_PASSWORD" -h mysql-master -e "SHOW MASTER STATUS\G")
      MASTER_LOG_FILE=$(echo "$MASTER_STATUS" | grep 'File:' | awk '{print $2}')
      MASTER_LOG_POS=$(echo "$MASTER_STATUS" | grep 'Position:' | awk '{print $2}')
      if [ -n "$MASTER_LOG_FILE" ] && [ "$MASTER_LOG_POS" -gt 0 ]; then
        break   # valid position, stop retrying
      fi
      echo "Retrying to read the master's binlog position... ($retry attempts left)"
      retry=$((retry - 1))
      sleep 5
    done
    # 2. Configure the replication source
    mysql -u root -p"$MYSQL_ROOT_PASSWORD" -e "STOP SLAVE;"
    mysql -u root -p"$MYSQL_ROOT_PASSWORD" -e "CHANGE MASTER TO
      MASTER_HOST='mysql-master',
      MASTER_USER='repl',
      MASTER_PASSWORD='$REPLICATION_PASSWORD',
      MASTER_LOG_FILE='$MASTER_LOG_FILE',
      MASTER_LOG_POS=$MASTER_LOG_POS;"
    # 3. Create the application databases
    mysql -u root -p"$MYSQL_ROOT_PASSWORD" -e "CREATE DATABASE IF NOT EXISTS discuz;"
    mysql -u root -p"$MYSQL_ROOT_PASSWORD" -e "CREATE DATABASE IF NOT EXISTS biyesheji;"
    # 4. Create additional users and privileges
    mysql -u root -p"$MYSQL_ROOT_PASSWORD" -e "CREATE USER IF NOT EXISTS 'discuz_user'@'%' IDENTIFIED BY '123.com';"
    mysql -u root -p"$MYSQL_ROOT_PASSWORD" -e "GRANT ALL PRIVILEGES ON discuz.* TO 'discuz_user'@'%';"
    mysql -u root -p"$MYSQL_ROOT_PASSWORD" -e "DROP USER IF EXISTS 'root'@'%';"
    mysql -u root -p"$MYSQL_ROOT_PASSWORD" -e "CREATE USER IF NOT EXISTS 'root'@'%' IDENTIFIED BY '123.com';"
    mysql -u root -p"$MYSQL_ROOT_PASSWORD" -e "GRANT ALL PRIVILEGES ON *.* TO 'root'@'%' WITH GRANT OPTION;"
    # 5. Start replication and verify that the IO thread is running
    mysql -u root -p"$MYSQL_ROOT_PASSWORD" -e "START SLAVE;"
    if ! mysql -u root -p"$MYSQL_ROOT_PASSWORD" -e "SHOW SLAVE STATUS\G" | grep -q "Slave_IO_Running: Yes"; then
      echo "ERROR: the slave IO thread failed to start!"
      exit 1
    fi
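`init-slave.sh` takes the replication starting point from the vertical (`\G`) output of SHOW MASTER STATUS. The function below does the same parsing on its own. It also rejects an empty or non-numeric position instead of passing it to CHANGE MASTER TO. `parse_master_status` is a hypothetical helper.

```shell
# parse_master_status STATUS: print "FILE POSITION" from SHOW MASTER STATUS\G output
parse_master_status() {
  local file pos
  file=$(printf '%s\n' "$1" | awk '$1 == "File:" {print $2}')
  pos=$(printf '%s\n' "$1" | awk '$1 == "Position:" {print $2}')
  [ -n "$file" ] || return 1
  case $pos in
    ''|*[!0-9]*) return 1 ;;   # empty or non-numeric position
  esac
  [ "$pos" -gt 0 ] || return 1
  printf '%s %s\n' "$file" "$pos"
}
```

For example, `parse_master_status "$(mysql -u root -p"$MYSQL_ROOT_PASSWORD" -h mysql-master -e 'SHOW MASTER STATUS\G')"`.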

vim mysql-m-s/mysql-master.yaml

# --------------------------
# Master Pod
# --------------------------
apiVersion: v1
kind: Pod
metadata:
  name: mysql-master        # master Pod name
  labels:
    app: mysql              # application label (used by the Service)
    role: master            # role label (distinguishes master from slave)
spec:
  containers:
  - name: mysql
    image: mysql:8.0        # base image (MySQL 8.0)
    ports:
    - containerPort: 3306   # MySQL port inside the container
    env:
    # root password from the Secret (key in mysql-secrets)
    - name: MYSQL_ROOT_PASSWORD
      valueFrom:
        secretKeyRef:
          name: mysql-secrets
          key: root-password
    # replication password from the Secret
    - name: REPLICATION_PASSWORD
      valueFrom:
        secretKeyRef:
          name: mysql-secrets
          key: replication-password
    volumeMounts:
    # master config file (from the ConfigMap)
    - name: master-config
      mountPath: /etc/mysql/conf.d/master.cnf   # MySQL reads extra config files from this directory
      subPath: master.cnf                        # mount only master.cnf (keeps the other files in the directory)
    # master init script (from the ConfigMap)
    - name: init-scripts
      mountPath: /docker-entrypoint-initdb.d/init-master.sh   # init-script directory of the MySQL image
      subPath: init-master.sh
    # data directory (persists the MySQL data)
    - name: mysql-master-data
      mountPath: /var/lib/mysql                  # MySQL data path
    # liveness check (restarts the container if MySQL stops responding)
    livenessProbe:
      exec:
        # mysqladmin ping; run through sh so the Secret-sourced $MYSQL_ROOT_PASSWORD is expanded
        command: ["sh", "-c", "mysqladmin ping -u root -p\"$MYSQL_ROOT_PASSWORD\""]
      initialDelaySeconds: 30   # start checking 30 s after startup (gives MySQL time to start)
      periodSeconds: 10         # check every 10 s
  # volumes used by the Pod
  volumes:
  # master config from the ConfigMap
  - name: master-config
    configMap:
      name: mysql-config
  # init script from the ConfigMap (mode 0755 makes it executable)
  - name: init-scripts
    configMap:
      name: mysql-config
      defaultMode: 0755
  # master data volume
  - name: mysql-master-data
    persistentVolumeClaim:
      claimName: mysql-pvc
---
# --------------------------
# Master Service (stable address)
# --------------------------
apiVersion: v1
kind: Service
metadata:
  name: mysql-master   # the slave reaches the master through this name
spec:
  selector:
    app: mysql
    role: master       # selects Pods labeled app=mysql, role=master (the master Pod)
  ports:
  - port: 3306         # port exposed by the Service
    targetPort: 3306   # Pod port (MySQL port in the container)

vim mysql-m-s/mysql-slave.yaml

# --------------------------
# Slave Pod (init-slave.sh runs automatically and configures replication)
# Same layout as the master; passwords stay in the Secret
# --------------------------
apiVersion: v1
kind: Pod
metadata:
  name: mysql-slave         # Pod name, used by kubectl exec/logs
  labels:
    app: mysql              # application label, matched by the Service selector
    role: slave             # role label: distinguishes master from slave and helps select all slave Pods
spec:
  containers:
  - name: mysql             # container name
    image: mysql:8.0        # must match the master's version (avoids replication compatibility issues)
    ports:
    - containerPort: 3306   # MySQL port inside the container (MySQL default)
    env:                    # passwords come from the Secret instead of plain text
    # 1. root password: must match the master's (later changes on the master replicate to the slave)
    - name: MYSQL_ROOT_PASSWORD
      valueFrom:
        secretKeyRef:
          name: mysql-secrets   # Secret name (must exist first; created from mysql-secrets.yaml)
          key: root-password    # key holding the root password
    # 2. password of the replication user repl: must match the REPLICATION_PASSWORD that
    #    init-master.sh uses on the master; a mismatch breaks replication
    - name: REPLICATION_PASSWORD
      valueFrom:
        secretKeyRef:
          name: mysql-secrets
          key: replication-password   # key holding the replication password
    volumeMounts:           # ConfigMap files and the data volume
    # 1. slave config file (slave.cnf)
    - name: slave-config
      mountPath: /etc/mysql/conf.d/slave.cnf   # MySQL loads the .cnf files in this directory
      subPath: slave.cnf                       # mount only slave.cnf; keeps the other default config files
    # 2. slave init script: the MySQL image runs the .sh files in /docker-entrypoint-initdb.d
    #    on first start (empty data directory)
    - name: init-scripts
      mountPath: /docker-entrypoint-initdb.d/init-slave.sh
      subPath: init-slave.sh                   # mount only init-slave.sh
    # 3. data directory
    - name: slave-data
      mountPath: /var/lib/mysql                # MySQL data path (tables and logs)
    # liveness probe: restart the container if MySQL stops responding
    livenessProbe:
      exec:
        # mysqladmin ping; run through sh so the Secret-sourced $MYSQL_ROOT_PASSWORD is expanded
        command: ["sh", "-c", "mysqladmin ping -u root -p\"$MYSQL_ROOT_PASSWORD\""]
      initialDelaySeconds: 30   # wait 30 s after start so MySQL can come up (avoids false failures)
      periodSeconds: 10         # check every 10 s
  volumes:                  # volumes used by the Pod
  # 1. slave config file from the ConfigMap
  - name: slave-config
    configMap:
      name: mysql-config        # the ConfigMap defined earlier (must exist)
  # 2. init script from the ConfigMap; defaultMode makes it executable
  - name: init-scripts
    configMap:
      name: mysql-config
      defaultMode: 0755         # rwxr-xr-x
  # 3. slave data volume: emptyDir is temporary (data is lost when the Pod is deleted);
  #    use a PV/PVC in production
  - name: slave-data
    emptyDir: {}                # testing only; in production use the persistentVolumeClaim below
    # production example (create the PVC first):
    # persistentVolumeClaim:
    #   claimName: mysql-slave-pvc   # PVC name
---
# --------------------------
# Slave Service (stable entry point to the slave inside the cluster)
# --------------------------
apiVersion: v1
kind: Service
metadata:
  name: mysql-slave         # Service name; clients reach the slave as -h mysql-slave
spec:
  selector:                 # must match the slave Pod's labels
    app: mysql
    role: slave
  ports:
  - port: 3306              # port exposed by the Service inside the cluster
    targetPort: 3306        # Pod port (the containerPort, i.e. MySQL)
  type: ClusterIP           # default type: reachable only inside the cluster
  # For temporary outside access (e.g. debugging) switch to NodePort; avoid exposing it long-term:
  # type: NodePort
  # and add nodePort: 30006 under the ports entry (must be within the NodePort range, default 30000-32767)


3. PHP runtime (php-fpm)

vim php/php-configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: php-config
data:
  www.conf: |
    [www]
    user = nginx
    group = nginx
    listen = 0.0.0.0:9000
    pm = dynamic
    pm.max_children = 5
    pm.start_servers = 2
    pm.min_spare_servers = 1
    pm.max_spare_servers = 3
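With `pm = dynamic` and `start_servers` set explicitly, php-fpm refuses to start unless 0 < pm.min_spare_servers <= pm.start_servers <= pm.max_spare_servers <= pm.max_children. The sketch below checks the values above (5, 2, 1, 3). `check_pm_dynamic` is a hypothetical helper.

```shell
# check_pm_dynamic MAX_CHILDREN START MIN_SPARE MAX_SPARE
# Succeeds when 0 < min_spare <= start <= max_spare <= max_children.
check_pm_dynamic() {
  local max_children="$1" start="$2" min_spare="$3" max_spare="$4"
  [ "$min_spare" -gt 0 ] &&
    [ "$min_spare" -le "$start" ] &&
    [ "$start" -le "$max_spare" ] &&
    [ "$max_spare" -le "$max_children" ]
}
```

Inside the container, `php-fpm82 -t` runs php-fpm's own configuration test.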

vim php/php-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  # Deployment name
  name: php-fpm-deployment
  # label identifying the Deployment
  labels:
    app: php-fpm
spec:
  # replica count; adjust to the load
  replicas: 1
  # selector matching the Pods this Deployment manages
  selector:
    matchLabels:
      app: php-fpm
  # Pod template
  template:
    metadata:
      # Pod labels; must match the selector
      labels:
        app: php-fpm
    spec:
      # containers
      containers:
      - name: php-fpm
        # base image: latest Alpine
        image: alpine:latest
        # build the PHP-FPM environment on top of Alpine at startup
        command: ["/bin/sh", "-c"]
        args:
        - |
          # update the package index and install the PHP components
          apk update && apk add \
            php82 \
            php82-fpm \
            php82-mysqli \
            php82-xml \
            php82-json \
            php82-pdo \
            php82-pdo_mysql \
            php82-tokenizer \
            php82-pecl-redis && \
          # create the nginx user and group with ID 101 to match Nginx
          addgroup -g 101 -S nginx && \
          adduser -u 101 -S -G nginx nginx && \
          # symlink so that the php-fpm command is available
          ln -s /usr/sbin/php-fpm82 /usr/bin/php-fpm && \
          # create the PHP runtime directory and set its ownership
          mkdir -p /var/run/php && \
          chown -R nginx:nginx /var/run/php && \
          # enable the Redis extension
          echo "extension=redis.so" > /etc/php82/conf.d/redis.ini && \
          # start PHP-FPM in the foreground
          php-fpm82 --nodaemonize --force-stderr
        # container port
        ports:
        - containerPort: 9000
        # volume mounts
        volumeMounts:
        - name: php-config-volume                    # must match a name under volumes
          mountPath: /etc/php82/php-fpm.d/www.conf   # path inside the container
          subPath: www.conf                          # mount only www.conf from the ConfigMap
        - name: discuz-volume
          mountPath: /var/www/html                   # Discuz directory in the container (maps to the host directory)
      # volumes
      volumes:
      - name: php-config-volume
        configMap:
          name: php-config                           # the ConfigMap defined above
      - name: discuz-volume
        hostPath:
          path: /root/project/discuz                 # Discuz directory on the host (files and subdirectories)
          type: Directory                            # must already exist (no empty directory is created over it)
---
# Service: network entry point for the Pod
apiVersion: v1
kind: Service
metadata:
  name: php-fpm-service   # Service name
spec:
  selector:
    app: php-fpm          # selects the PHP-FPM Pods
  ports:
  - port: 9000            # port exposed by the Service (in-cluster)
    targetPort: 9000      # container port
  type: ClusterIP         # reachable only inside the cluster (default type)

4. Nginx service

vim nginx/nginx-configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-config   # ConfigMap name referenced by the Deployment
data:
  # Nginx default server configuration (same as the original default.conf)
  default.conf: |
    server {
        listen 80;
        listen [::]:80;
        server_name localhost;
        root /usr/share/nginx/html;
        index index.php index.html index.htm;
        # PHP requests
        location ~ \.php$ {
            fastcgi_pass php-fpm-service:9000;   # php-fpm Service; the short name resolves within the same namespace
            fastcgi_index index.php;
            fastcgi_param SCRIPT_FILENAME /var/www/html$fastcgi_script_name;
            include fastcgi_params;
        }
    }
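nginx and php-fpm see the same Discuz files under different paths. nginx serves them from `root /usr/share/nginx/html`. `SCRIPT_FILENAME` tells php-fpm to look under `/var/www/html`, where the php-fpm container mounts the same hostPath. The sketch below shows that mapping. The helper names are hypothetical, and `$fastcgi_script_name` is simplified to the URI path without the query string.

```shell
NGINX_ROOT=/usr/share/nginx/html   # root in default.conf (nginx container)
PHP_ROOT=/var/www/html             # SCRIPT_FILENAME prefix (php-fpm container)

# Drop the query string, roughly what $uri / $fastcgi_script_name contain
uri_path() {
  printf '%s\n' "${1%%[?]*}"
}

# File nginx serves for a static request
static_file() {
  printf '%s%s\n' "$NGINX_ROOT" "$(uri_path "$1")"
}

# SCRIPT_FILENAME that php-fpm receives for a .php request
script_filename() {
  printf '%s%s\n' "$PHP_ROOT" "$(uri_path "$1")"
}
```

Because both paths point at `/root/project/discuz` on the node, a file that nginx can see is also the one php-fpm executes.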

vim nginx/nginx-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx                  # Deployment name
spec:
  replicas: 1                  # replica count; adjust as needed
  selector:
    matchLabels:
      app: nginx               # selector label matching the Pods
  template:
    metadata:
      labels:
        app: nginx             # Pod label; matches the selector
    spec:
      containers:
      - name: nginx            # container name
        image: alpine:latest   # base image
        command: ["/bin/sh", "-c"]
        args:
        - |
          #!/bin/sh
          apk add --no-cache nginx;
          pkill nginx || true;
          rm -f /etc/nginx/http.d/default.conf;
          mkdir -p /run/nginx;
          chown nginx:nginx /run/nginx;
          chmod 755 /run/nginx;
          nginx -t;
          nginx -g "daemon off;";
        ports:
        - containerPort: 80    # container port
        volumeMounts:
        - name: nginx-config-volume   # config volume
          mountPath: /etc/nginx/http.d/default.conf
          subPath: default.conf
        - name: discuz-volume         # site files
          mountPath: /usr/share/nginx/html
          readOnly: true
        resources:
          requests:
            memory: "64Mi"
            cpu: "250m"
          limits:
            memory: "128Mi"
            cpu: "500m"
        livenessProbe:
          httpGet:
            path: /
            port: 80
          initialDelaySeconds: 30
          periodSeconds: 10
          failureThreshold: 3
        readinessProbe:
          tcpSocket:
            port: 80
          initialDelaySeconds: 20
          periodSeconds: 5
      volumes:
      - name: nginx-config-volume     # config volume (from the ConfigMap)
        configMap:
          name: nginx-config
          items:
          - key: default.conf
            path: default.conf
      - name: discuz-volume           # site files
        hostPath:
          path: /root/project/discuz
          type: DirectoryOrCreate     # create the directory if it is missing
      # nodeSelector removed so that label mismatches cannot block scheduling
      dnsPolicy: ClusterFirst
      restartPolicy: Always
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 0
    type: RollingUpdate
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-service          # Service name
  namespace: default
spec:
  selector:
    app: nginx                 # selects the Nginx Pods
  ports:
  - port: 80
    targetPort: 80
    nodePort: 30080            # fixed NodePort so the port does not change
  type: NodePort

5. Redis master-slave replication

vim redis/redis-configmap.yaml

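apiVersion: v1
kind: ConfigMap
metadata:
  name: redis-config
data:
  redis.conf: |
    # listen on all addresses (other services in the cluster must reach Redis)
    bind 0.0.0.0
    # disable protected mode (a password is set below, so remote connections are allowed)
    protected-mode no
    # Redis port (matches the port exposed by the container)
    port 6379
    # do not daemonize (the container must run Redis in the foreground or it exits)
    daemonize no
    # data directory (matches the data volume mount path)
    dir /data
    # access password (basic authentication)
    requirepass 123456
    # password for authenticating to a master (only used if this node becomes a replica)
    masterauth 123456
  redis-slave.conf: |
    bind 0.0.0.0
    protected-mode no
    port 6379
    daemonize no
    dir /data
    requirepass 123456
    # password the replica uses to authenticate to the master
    masterauth 123456
    # replicate from the master Service
    slaveof redis-master 6379
    # the replica is read-only
    slave-read-only yes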
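Replication only authenticates if the replica's `masterauth` equals the master's `requirepass`. Below is a small consistency check that reads directives from the two config texts. `conf_get` is a hypothetical helper that returns the first argument of a directive.

```shell
# conf_get KEY TEXT: print the first argument of directive KEY in redis.conf-style text
# (comment lines start with '#', so their first field never equals KEY)
conf_get() {
  printf '%s\n' "$2" | awk -v k="$1" '$1 == k { print $2; exit }'
}
```

For example, `conf_get requirepass "$(cat redis.conf)"` and `conf_get masterauth "$(cat redis-slave.conf)"` should print the same value.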

vim redis/redis-deployment.yaml

# -------------- 1. Master Deployment --------------
apiVersion: apps/v1
kind: Deployment
metadata:
  name: redis-master              # master Deployment name
spec:
  replicas: 1                     # exactly one master
  selector:
    matchLabels:
      app: redis
      role: master                # master label, used by the Service
  template:
    metadata:
      labels:
        app: redis
        role: master
    spec:
      containers:
      - name: redis
        image: redis:7.2-alpine   # same image as the original setup
        ports:
        - containerPort: 6379     # Redis port, matches the config
        volumeMounts:
        # master config file (redis.conf) from the ConfigMap
        - name: redis-config
          mountPath: /etc/redis/redis.conf   # config file path
          subPath: redis.conf                # mount only this file, not the whole directory
        # data volume (Redis data)
        - name: redis-master-data
          mountPath: /data                   # matches "dir /data" in the config
        # start Redis with the master config
        command: ["redis-server", "/etc/redis/redis.conf"]
      volumes:
      # the existing ConfigMap redis-config
      - name: redis-config
        configMap:
          name: redis-config                 # must match the ConfigMap name
      # data volume
      - name: redis-master-data
        persistentVolumeClaim:
          claimName: redis-pvc
---
# -------------- 2. Replica Deployment --------------
apiVersion: apps/v1
kind: Deployment
metadata:
  name: redis-slave               # replica Deployment name
spec:
  replicas: 1                     # number of replicas; adjust as needed
  selector:
    matchLabels:
      app: redis
      role: slave                 # replica label, used by the Service
  template:
    metadata:
      labels:
        app: redis
        role: slave
    spec:
      securityContext:
        fsGroup: 999              # Pod-level: applies to all mounted volumes
      containers:
      - name: redis
        image: redis:7.2-alpine   # same image as the master (avoids version mismatches)
        ports:
        - containerPort: 6379
        securityContext:
          runAsUser: 999
          runAsGroup: 999
        volumeMounts:
        # replica config file (redis-slave.conf) from the ConfigMap
        - name: redis-config
          mountPath: /etc/redis/redis.conf   # same path as on the master, so the start command is identical
          subPath: redis-slave.conf          # replica-specific config file
        # data volume (Redis data)
        - name: redis-slave-data
          mountPath: /data                   # matches "dir /data" in the config
        # start Redis; subPath already selects the replica config
        command: ["redis-server", "/etc/redis/redis.conf"]
      volumes:
      # the same ConfigMap (nothing new needed)
      - name: redis-config
        configMap:
          name: redis-config
      # replica data volume
      - name: redis-slave-data
        emptyDir: {}
---
# -------------- 3. Master Service (writes) --------------
apiVersion: v1
kind: Service
metadata:
  name: redis-master    # must match "slaveof redis-master 6379" in the replica config
spec:
  selector:
    app: redis
    role: master        # selects only the master Pod
  ports:
  - port: 6379
    targetPort: 6379    # container port
  type: ClusterIP       # in-cluster access (use NodePort/LoadBalancer if outside access is needed)
---
# -------------- 4. Replica Service (reads) --------------
apiVersion: v1
kind: Service
metadata:
  name: redis-slave     # replica Service, for clients that read data
spec:
  selector:
    app: redis
    role: slave         # selects only the replica Pods
  ports:
  - port: 6379
    targetPort: 6379
  type: ClusterIP       # load-balances across all replicas
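The replica finds the master through the Service name `redis-master`. Inside the cluster, a Service resolves as `<service>.<namespace>.svc.<cluster domain>`. The short name works from Pods in the same namespace because of the DNS search list. This sketch assumes the common default cluster domain `cluster.local`. `svc_fqdn` is a hypothetical helper.

```shell
# svc_fqdn SERVICE [NAMESPACE] [CLUSTER_DOMAIN]
# Defaults: namespace "default", cluster domain "cluster.local" (assumed, check your cluster)
svc_fqdn() {
  printf '%s.%s.svc.%s\n' "$1" "${2:-default}" "${3:-cluster.local}"
}
```

From a Pod in another namespace, for example, `redis-cli -h "$(svc_fqdn redis-master default)" -a 123456 ping` would be needed instead of the short name.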

6. Tomcat service

vim tomcat/shangcheng-deployment.yaml

# --------------------------
# Tomcat Deployment
# The Pod is scheduled onto a worker node (the master is avoided automatically)
# --------------------------
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tomcat-shangcheng
spec:
  replicas: 1
  selector:
    matchLabels:
      app: tomcat
  template:
    metadata:
      labels:
        app: tomcat
    spec:
      # No node affinity: the scheduler places the Pod on a worker node
      # (the master's control-plane taint keeps ordinary Pods off it by default)
      containers:
      - name: tomcat
        image: tomcat:8
        ports:
        - containerPort: 8080
        volumeMounts:
        # host directory (every node needs this directory and its files)
        - name: tomcat-data
          mountPath: /usr/local/tomcat
          readOnly: false
        # start command
        command: ["/bin/bash", "-c"]
        args:
        - ln -s /usr/local/tomcat/bin/startup.sh /usr/local/bin/tomcat_start; ln -s /usr/local/tomcat/bin/shutdown.sh /usr/local/bin/tomcat_stop; /usr/local/tomcat/bin/startup.sh && tail -f /usr/local/tomcat/logs/catalina.out;
      volumes:
      - name: tomcat-data
        hostPath:
          path: /root/project/tomcat8   # must exist, with its files, on every node
          type: Directory               # the directory must already exist (no empty directory is created)
---
# --------------------------
# Tomcat Service
# --------------------------
apiVersion: v1
kind: Service
metadata:
  name: tomcat-shangcheng-svc
spec:
  selector:
    app: tomcat
  ports:
  - port: 8080
    targetPort: 8080
    nodePort: 30081   # an unused NodePort
  type: NodePort
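Both NodePorts, 30080 for nginx and 30081 for Tomcat, must lie in the cluster's NodePort range and must not collide. The range is 30000-32767 by default and is set by the kube-apiserver flag `--service-node-port-range`. Here is a quick sketch. `valid_nodeport` is a hypothetical helper, and it assumes the default range.

```shell
# valid_nodeport PORT: succeed if PORT is a number in the default NodePort range
valid_nodeport() {
  case $1 in
    ''|*[!0-9]*) return 1 ;;   # empty or non-numeric
  esac
  [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}
```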

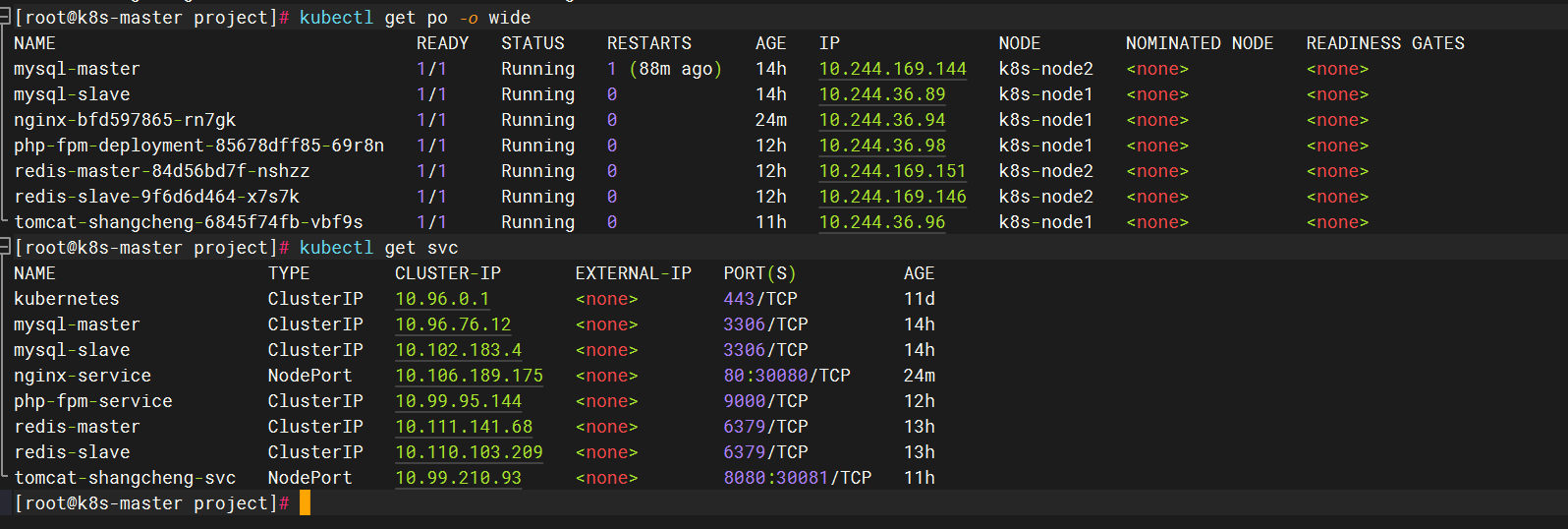

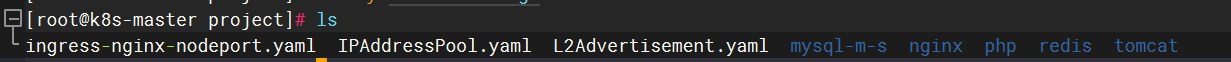

7. Commands

# persistent storage (PV/PVC)
kubectl apply -f pv.yaml
kubectl apply -f pvc.yaml
# MySQL master-slave replication
kubectl apply -f mysql-m-s/mysql-secrets.yaml
kubectl apply -f mysql-m-s/mysql-configmap.yaml
kubectl apply -f mysql-m-s/mysql-master.yaml
kubectl apply -f mysql-m-s/mysql-slave.yaml
# PHP runtime
kubectl apply -f php/php-configmap.yaml
kubectl apply -f php/php-deployment.yaml
# nginx
kubectl apply -f nginx/nginx-configmap.yaml
kubectl apply -f nginx/nginx-deployment.yaml
# Redis cache
kubectl apply -f redis/redis-configmap.yaml
kubectl apply -f redis/redis-deployment.yaml
# Tomcat
kubectl apply -f tomcat/shangcheng-deployment.yaml
# import the shop's database dump
cp /root/project/tomcat/tomcat8/webapps/biyesheji/biyesheji.sql /root/project/tomcat
kubectl exec -i mysql-master -- mysql -u root -p123.com biyesheji < /root/project/tomcat/biyesheji.sql

Check that replication works. Slave_IO_Running and Slave_SQL_Running should both show Yes:

kubectl exec -it mysql-slave -- mysql -u root -p123.com -e "SHOW SLAVE STATUS\G"
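Replication is healthy only when both threads report Yes. Right after START SLAVE, the IO thread can still show `Connecting`. The sketch below checks the `\G` output for both lines. `replication_ok` is a hypothetical helper.

```shell
# replication_ok STATUS: succeed only if both replication threads report Yes
# in the vertical (\G) output of SHOW SLAVE STATUS
replication_ok() {
  printf '%s\n' "$1" | grep -Eq '^[[:space:]]*Slave_IO_Running: Yes$' &&
    printf '%s\n' "$1" | grep -Eq '^[[:space:]]*Slave_SQL_Running: Yes$'
}
```

Usage: `replication_ok "$(kubectl exec mysql-slave -- mysql -u root -p123.com -e 'SHOW SLAVE STATUS\G')" && echo OK`. Leave out `-t` so that the output contains no carriage returns.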

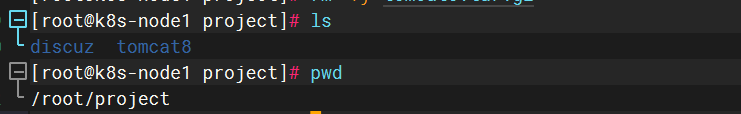

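8. On every node

Each node needs these two directories (they back the hostPath volumes):

/root/project/discuz
/root/project/tomcat8

Then give the Discuz files to UID/GID 101, the nginx user created in the php-fpm container:

cd /root/project/discuz
chown -R 101:101 ./

Open the site at 192.168.58.180:30080.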

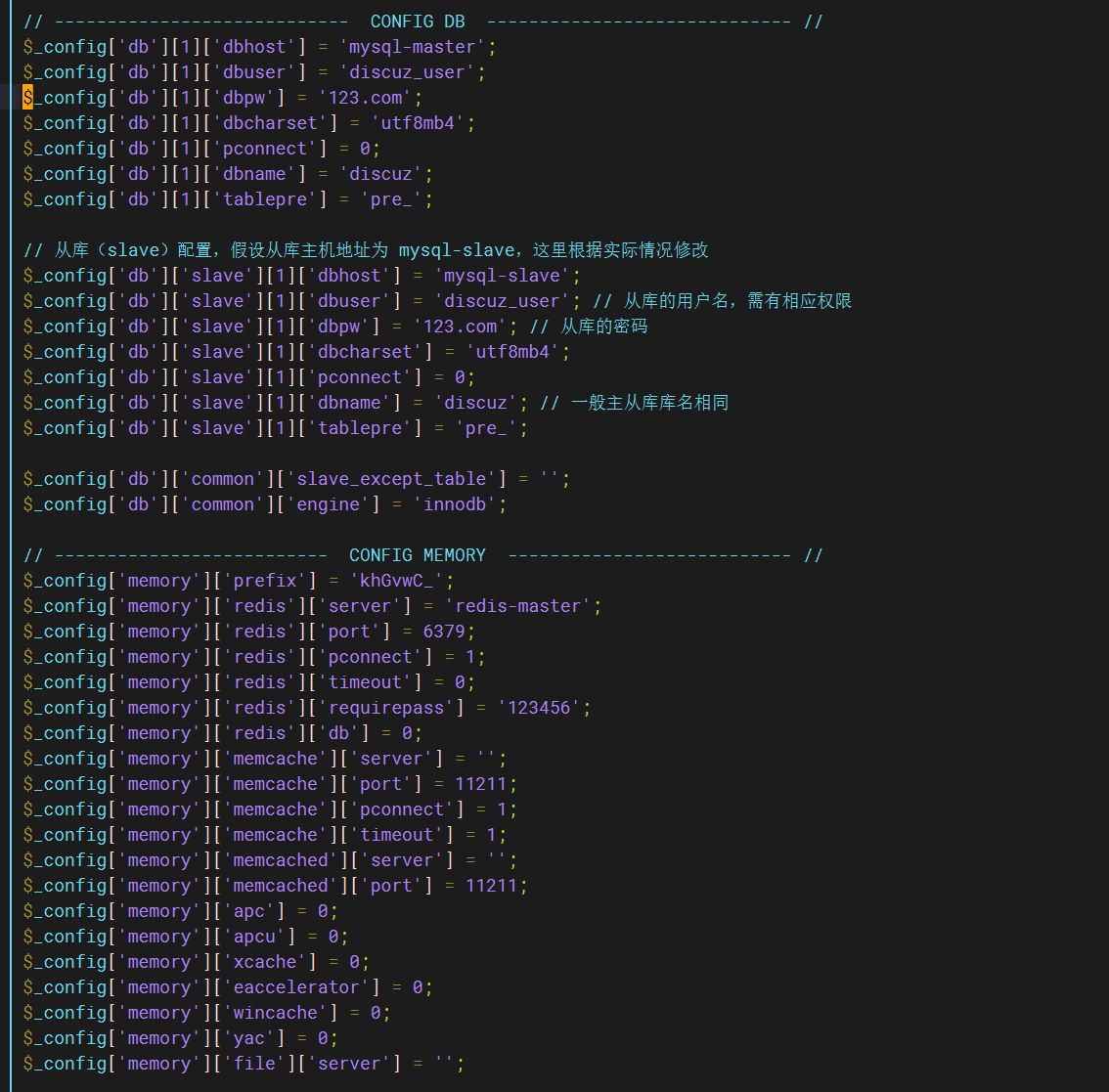

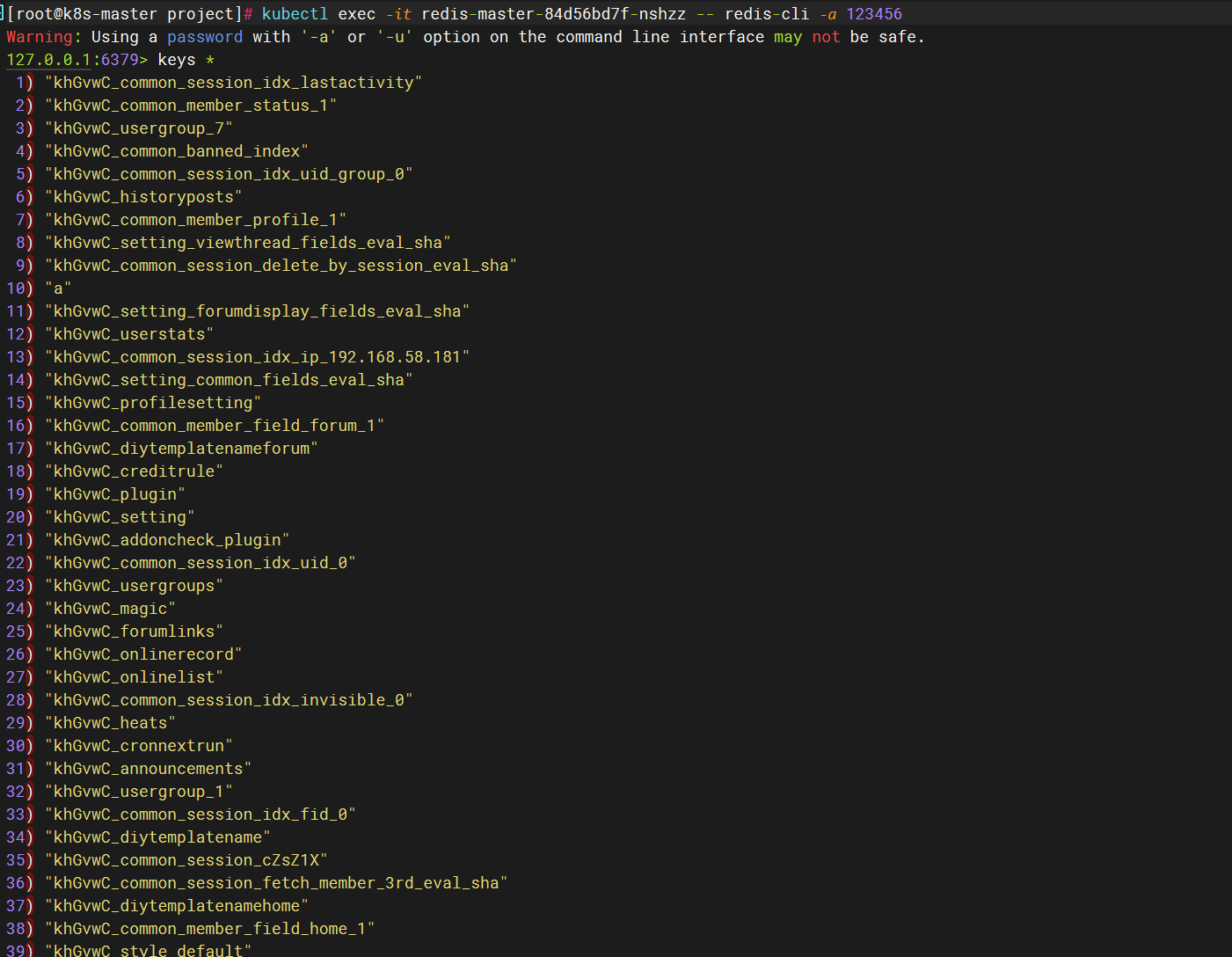

9. Edit the Discuz configuration to add Redis caching and read/write splitting

vim /root/project/discuz/config/config_global.php

#Add the slave database to enable read/write splitting
#Change the Redis settings
#Set the Redis server to redis-master and the password to 123456

Check Redis (the Pod name suffix differs per deployment; see kubectl get pods):

kubectl exec -it redis-master-84d56bd7f-nshzz -- redis-cli -a 123456

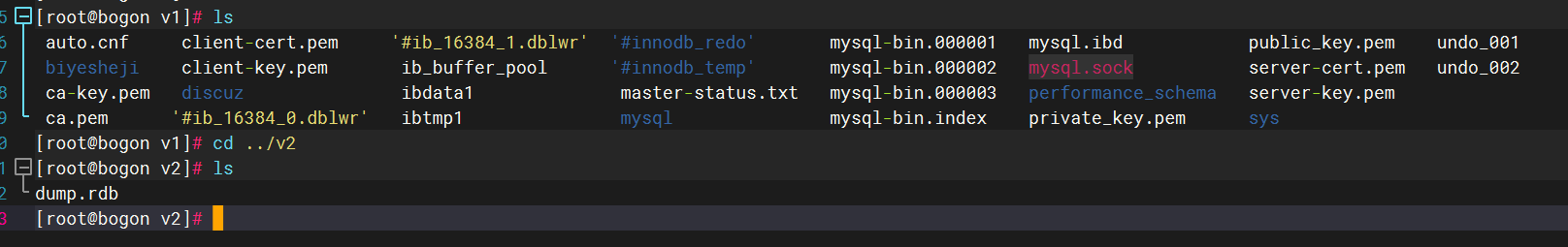

Check on the storage host:

10. Modify the shop application files

vim /root/project/tomcat8/webapps/biyesheji/index.jsp

<%--
  Created by IntelliJ IDEA.
  User: baiyuhong
  Date: 2018/10/21
  Time: 18:43
  To change this template use File | Settings | File Templates.
--%>
<%
    response.sendRedirect(request.getContextPath()+"/fore/foreIndex"); // on startup, redirect to the storefront home page
%>

vim /root/project/tomcat8/webapps/biyesheji/WEB-INF/classes/jdbc.properties

#Change the database host to mysql-master and the password to 123.com
jdbc.driver=com.mysql.cj.jdbc.Driver
jdbc.jdbcUrl=jdbc:mysql://mysql-master:3306/biyesheji?useUnicode=true&characterEncoding=utf-8&allowMultiQueries=true&useSSL=false&serverTimezone=GMT%2b8&allowPublicKeyRetrieval=true
jdbc.user=root
jdbc.password=123.com
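The application reaches MySQL through the Service name in the JDBC URL. That means `mysql-master` only resolves from inside the cluster. The sketch below splits such a URL into host, port and database. `parse_jdbc_url` is a hypothetical helper, and it handles only the `jdbc:mysql://host[:port]/db[?params]` form.

```shell
# parse_jdbc_url URL: print "host port database" for jdbc:mysql://host[:port]/db[?params]
parse_jdbc_url() {
  local rest hostport host port db
  rest=${1#jdbc:mysql://}     # mysql-master:3306/biyesheji?...
  hostport=${rest%%/*}        # mysql-master:3306
  db=${rest#*/}               # biyesheji?...
  db=${db%%[?]*}              # biyesheji
  host=${hostport%%:*}
  case $hostport in
    *:*) port=${hostport#*:} ;;
    *)   port=3306 ;;         # MySQL default port when none is given
  esac
  printf '%s %s %s\n' "$host" "$port" "$db"
}
```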

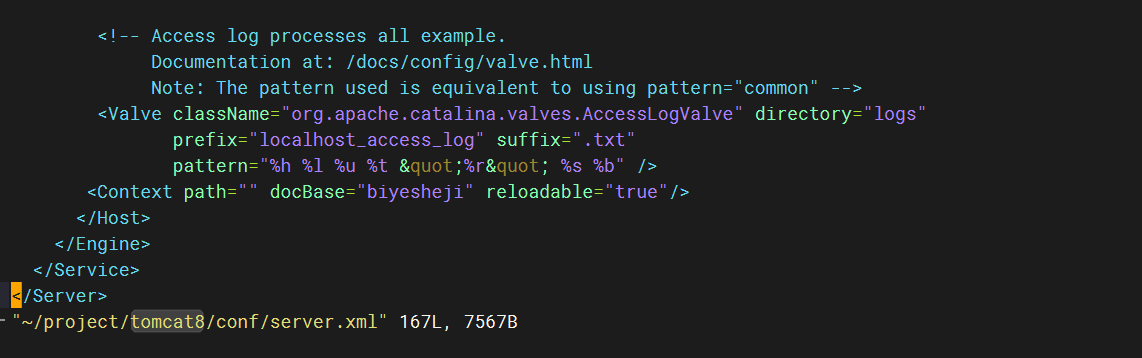

vim /root/project/tomcat8/conf/server.xml

#Add an entry so that the site is reached without appending the biyesheji path

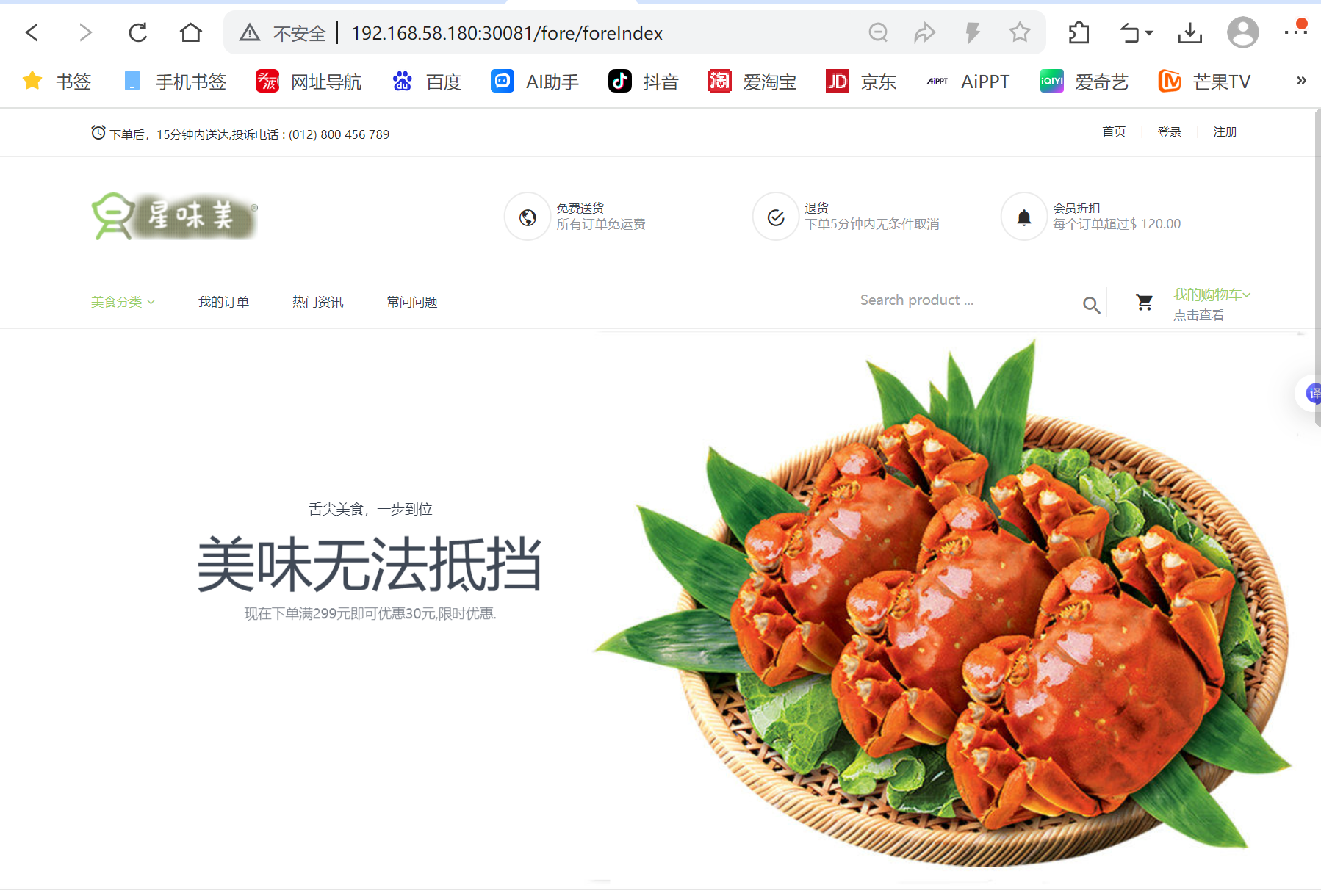

Open the site at 192.168.58.180:30081.

II. Access by domain name

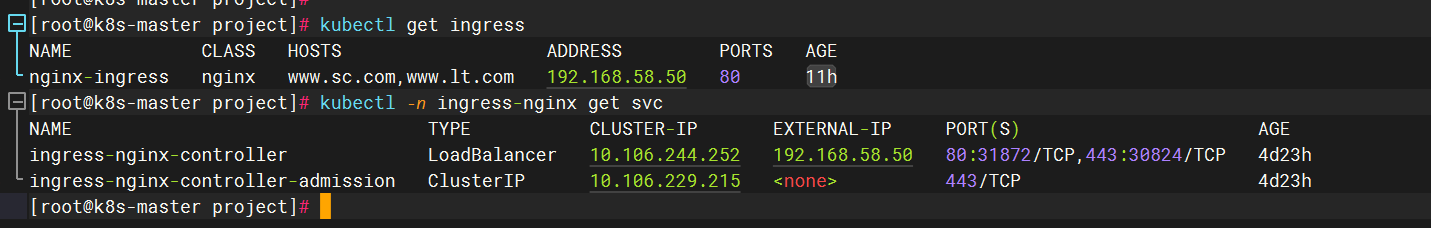

1. Configure the Ingress rules

vim IPAddressPool.yaml

apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: planip-pool       # must match the pool name referenced by the L2Advertisement
  namespace: metallb-system
spec:
  addresses:
  - 192.168.58.50-192.168.58.60   # custom address range
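The pool 192.168.58.50-192.168.58.60 gives MetalLB 11 addresses to hand out to LoadBalancer Services. One of them would go to the ingress controller's Service, assuming that Service is of type LoadBalancer. The sketch below counts the addresses in an inclusive IPv4 start-end range. The helper names are hypothetical.

```shell
# ip_to_int A.B.C.D: IPv4 address as an integer
ip_to_int() {
  local old_ifs="$IFS"
  IFS=.
  set -- $1                  # split the address on dots into $1..$4
  IFS=$old_ifs
  echo $(( (($1 * 256 + $2) * 256 + $3) * 256 + $4 ))
}

# range_size START-END: number of addresses in an inclusive IPv4 range
range_size() {
  local start="${1%-*}" end="${1#*-}"
  echo $(( $(ip_to_int "$end") - $(ip_to_int "$start") + 1 ))
}
```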

vim L2Advertisement.yaml

apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: planip-pool
  namespace: metallb-system
spec:
  ipAddressPools:
  - planip-pool   # must match the IPAddressPool name above

vim ingress-nginx-nodeport.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress                     # an Ingress resource
metadata:
  name: nginx-ingress             # resource name
spec:
  ingressClassName: nginx         # handled by the nginx ingress controller
  rules:
  - host: www.sc.com              # domain for this content
    http:
      paths:
      - backend:
          service:
            name: tomcat-shangcheng-svc   # backend Service; the Ingress must be in the Service's namespace
            port:
              number: 8080        # Service port
        path: /                   # path to match
        pathType: Prefix          # prefix match
  - host: www.lt.com              # domain for this content
    http:
      paths:
      - backend:
          service:
            name: nginx-service   # nginx Service
            port:
              number: 80          # Service port
        path: /                   # path to match
        pathType: Prefix          # prefix match
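The Ingress routes by Host header. `www.sc.com` goes to tomcat-shangcheng-svc:8080, and `www.lt.com` goes to nginx-service:80. Below is a toy model of that table for reasoning about requests. `route_for_host` is hypothetical, and this is not how ingress-nginx is implemented.

```shell
# route_for_host HOST: print the backend Service:port the Ingress rules select
route_for_host() {
  case $1 in
    www.sc.com) echo "tomcat-shangcheng-svc:8080" ;;
    www.lt.com) echo "nginx-service:80" ;;
    *) return 1 ;;   # no rule matches; ingress-nginx answers from its default backend (404)
  esac
}
```

You can test the real rules before editing the hosts file with `curl -H 'Host: www.lt.com' http://<EXTERNAL-IP>/`. Here `<EXTERNAL-IP>` is the address MetalLB assigned to the ingress controller Service. One way to find it is `kubectl get svc -n ingress-nginx`, assuming the controller runs in the `ingress-nginx` namespace.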

Commands:

kubectl apply -f IPAddressPool.yaml
kubectl apply -f L2Advertisement.yaml
kubectl apply -f ingress-nginx-nodeport.yaml

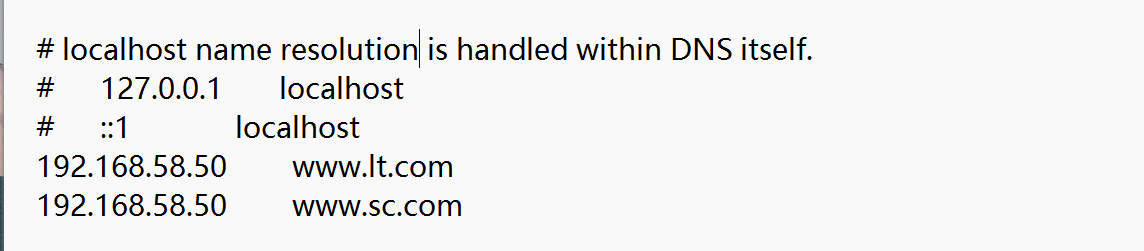

2. Edit the hosts file on the Windows host

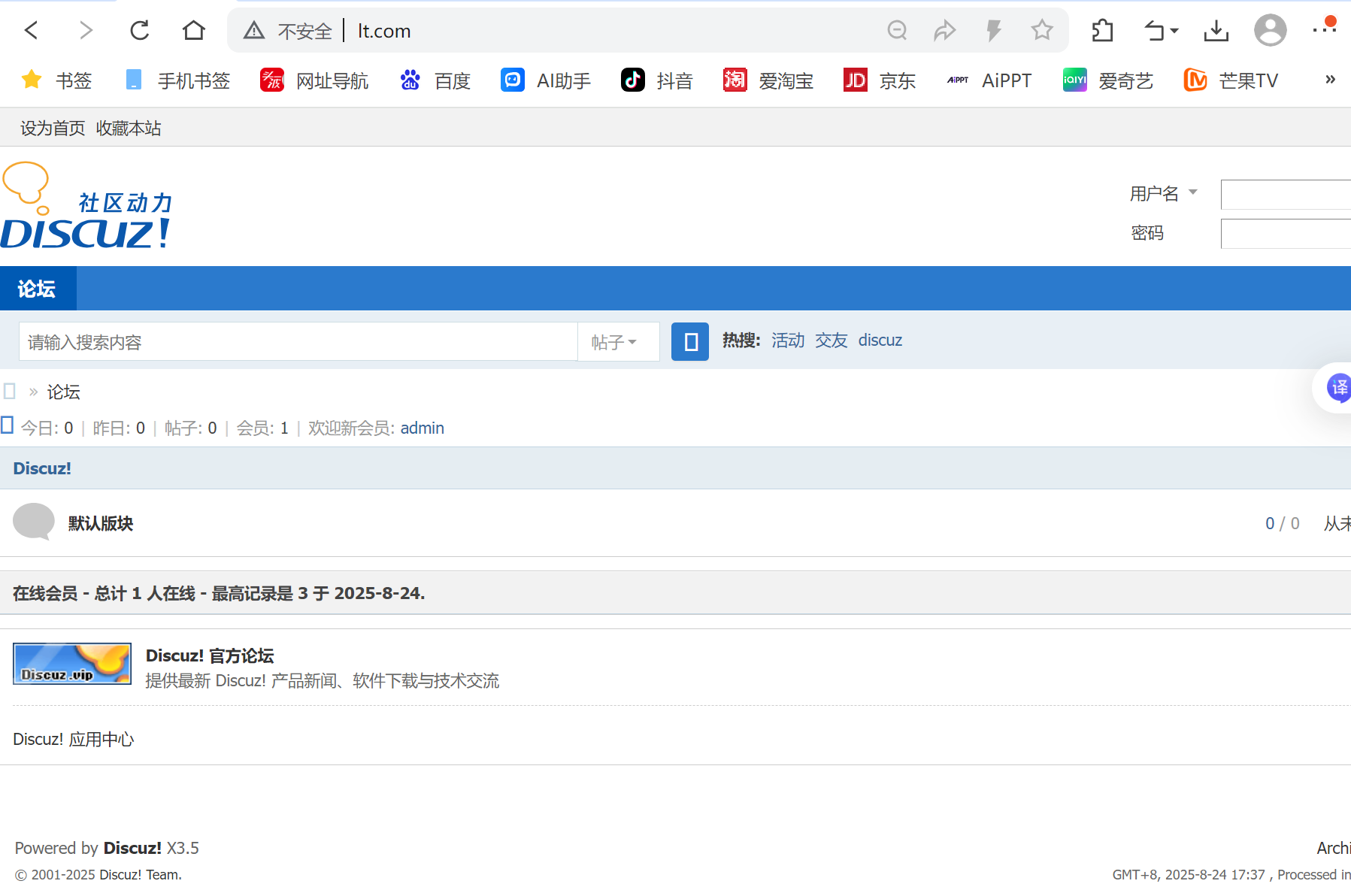

3. Access the sites

Open www.lt.com in a browser.

Open www.sc.com in a browser.
