Caused by: org.apache.flink.util.SerializedThrowable: Stream load failed because of error, db: s_rpt, table: rpt_rt_dau_dim, label: 26639e7c-856e-477f-896f-d2ba3bb37f66,

responseBody: {

"Status": "INTERNAL_ERROR",

"Message": "The size of this batch exceed the max size [104857600] of json type data data [ 106097396 ]. Set ignore_json_size to skip the check, although it may lead huge memory consuming."

}

errorLog: null

at com.starrocks.data.load.stream.DefaultStreamLoader.send(DefaultStreamLoader.java:289) ~[flink-connector-starrocks-1.2.7_flink-1.12_2.11.jar:?]

at com.starrocks.data.load.stream.DefaultStreamLoader.lambda$send$2(DefaultStreamLoader.java:120) ~[flink-connector-starrocks-1.2.7_flink-1.12_2.11.jar:?]

at java.util.concurrent.FutureTask.run(FutureTask.java:266) ~[?:1.8.0_362]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) ~[?:1.8.0_362]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) ~[?:1.8.0_362]

... 1 more

2025-06-10 01:14:52,776 INFO com.starrocks.connector.flink.manager.StarRocksSinkManagerV2 [] - Stream load manager flush

2025-06-10 01:14:52,776 ERROR com.starrocks.connector.flink.manager.StarRocksSinkManagerV2 [] - catch exception, wait rollback

com.starrocks.data.load.stream.exception.StreamLoadFailException: Stream load failed because of error, db: s_rpt, table: rpt_rt_dau_dim, label: 26639e7c-856e-477f-896f-d2ba3bb37f66,

responseBody: {

"Status": "INTERNAL_ERROR",

"Message": "The size of this batch exceed the max size [104857600] of json type data data [ 106097396 ]. Set ignore_json_size to skip the check, although it may lead huge memory consuming."

}

errorLog: null

at com.starrocks.data.load.stream.DefaultStreamLoader.send(DefaultStreamLoader.java:289) ~[flink-connector-starrocks-1.2.7_flink-1.12_2.11.jar:?]

at com.starrocks.data.load.stream.DefaultStreamLoader.lambda$send$2(DefaultStreamLoader.java:120) ~[flink-connector-starrocks-1.2.7_flink-1.12_2.11.jar:?]

at java.util.concurrent.FutureTask.run(FutureTask.java:266) ~[?:1.8.0_362]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) ~[?:1.8.0_362]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) ~[?:1.8.0_362]

at java.lang.Thread.run(Thread.java:750) [?:1.8.0_362]

2025-06-10 01:14:52,776 ERROR com.starrocks.connector.flink.table.sink.StarRocksDynamicSinkFunctionV2 [] - Failed to flush when closing

java.lang.RuntimeException: com.starrocks.data.load.stream.exception.StreamLoadFailException: Stream load failed because of error, db: s_rpt, table: rpt_rt_dau_dim, label: 26639e7c-856e-477f-896f-d2ba3bb37f66,

responseBody: {

"Status": "INTERNAL_ERROR",

"Message": "The size of this batch exceed the max size [104857600] of json type data data [ 106097396 ]. Set ignore_json_size to skip the check, although it may lead huge memory consuming."

}

errorLog: null

at com.starrocks.connector.flink.manager.StarRocksSinkManagerV2.AssertNotException(StarRocksSinkManagerV2.java:367) ~[flink-connector-starrocks-1.2.7_flink-1.12_2.11.jar:?]

at com.starrocks.connector.flink.manager.StarRocksSinkManagerV2.flush(StarRocksSinkManagerV2.java:299) ~[flink-connector-starrocks-1.2.7_flink-1.12_2.11.jar:?]

at com.starrocks.connector.flink.table.sink.StarRocksDynamicSinkFunctionV2.close(StarRocksDynamicSinkFunctionV2.java:184) ~[flink-connector-starrocks-1.2.7_flink-1.12_2.11.jar:?]

at org.apache.flink.api.common.functions.util.FunctionUtils.closeFunction(FunctionUtils.java:41) ~[roi-flinksql-custom-1.0-SNAPSHOT.jar:?]

at org.apache.flink.streaming.api.operators.AbstractUdfStreamOperator.dispose(AbstractUdfStreamOperator.java:117) ~[flink-dist_2.11-1.12.3.jar:1.12.3]

at org.apache.flink.streaming.runtime.tasks.StreamTask.disposeAllOperators(StreamTask.java:791) ~[flink-dist_2.11-1.12.3.jar:1.12.3]

at org.apache.flink.streaming.runtime.tasks.StreamTask.runAndSuppressThrowable(StreamTask.java:770) [flink-dist_2.11-1.12.3.jar:1.12.3]

at org.apache.flink.streaming.runtime.tasks.StreamTask.cleanUpInvoke(StreamTask.java:689) [flink-dist_2.11-1.12.3.jar:1.12.3]

at org.apache.flink.streaming.runtime.tasks.StreamTask.invoke(StreamTask.java:593) [flink-dist_2.11-1.12.3.jar:1.12.3]

at org.apache.flink.runtime.taskmanager.Task.doRun(Task.java:755) [flink-dist_2.11-1.12.3.jar:1.12.3]

at org.apache.flink.runtime.taskmanager.Task.run(Task.java:570) [flink-dist_2.11-1.12.3.jar:1.12.3]

at java.lang.Thread.run(Thread.java:750) [?:1.8.0_362]

Caused by: com.starrocks.data.load.stream.exception.StreamLoadFailException: Stream load failed because of error, db: s_rpt, table: rpt_rt_dau_dim, label: 26639e7c-856e-477f-896f-d2ba3bb37f66,

responseBody: {

"Status": "INTERNAL_ERROR",

"Message": "The size of this batch exceed the max size [104857600] of json type data data [ 106097396 ]. Set ignore_json_size to skip the check, although it may lead huge memory consuming."

}

errorLog: null

at com.starrocks.data.load.stream.DefaultStreamLoader.send(DefaultStreamLoader.java:289) ~[flink-connector-starrocks-1.2.7_flink-1.12_2.11.jar:?]

at com.starrocks.data.load.stream.DefaultStreamLoader.lambda$send$2(DefaultStreamLoader.java:120) ~[flink-connector-starrocks-1.2.7_flink-1.12_2.11.jar:?]

at java.util.concurrent.FutureTask.run(FutureTask.java:266) ~[?:1.8.0_362]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) ~[?:1.8.0_362]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) ~[?:1.8.0_362]

... 1 more

2025-06-10 01:14:52,778 WARN org.apache.flink.runtime.taskmanager.Task [] - Sink: Sink(table=[default_catalog.default_database.sink_rt_dau_pvreport2sr], fields=[day, EXPR$1, client_act_time, is_client_act, ts, EXPR$5]) (1/1)#0 (6b68924ad80786daa5913d36d18c04f2) switched from RUNNING to FAILED.

java.io.IOException: Could not perform checkpoint 1 for operator Sink: Sink(table=[default_catalog.default_database.sink_rt_dau_pvreport2sr], fields=[day, EXPR$1, client_act_time, is_client_act, ts, EXPR$5]) (1/1)#0.

at org.apache.flink.streaming.runtime.tasks.StreamTask.triggerCheckpointOnBarrier(StreamTask.java:963) ~[flink-dist_2.11-1.12.3.jar:1.12.3]

at org.apache.flink.streaming.runtime.io.CheckpointBarrierHandler.notifyCheckpoint(CheckpointBarrierHandler.java:115) ~[flink-dist_2.11-1.12.3.jar:1.12.3]

at org.apache.flink.streaming.runtime.io.SingleCheckpointBarrierHandler.processBarrier(SingleCheckpointBarrierHandler.java:156) ~[flink-dist_2.11-1.12.3.jar:1.12.3]

at org.apache.flink.streaming.runtime.io.CheckpointedInputGate.handleEvent(CheckpointedInputGate.java:180) ~[flink-dist_2.11-1.12.3.jar:1.12.3]

at org.apache.flink.streaming.runtime.io.CheckpointedInputGate.pollNext(CheckpointedInputGate.java:157) ~[flink-dist_2.11-1.12.3.jar:1.12.3]

at org.apache.flink.streaming.runtime.io.StreamTaskNetworkInput.emitNext(StreamTaskNetworkInput.java:179) ~[flink-dist_2.11-1.12.3.jar:1.12.3]

at org.apache.flink.streaming.runtime.io.StreamOneInputProcessor.processInput(StreamOneInputProcessor.java:65) ~[flink-dist_2.11-1.12.3.jar:1.12.3]

at org.apache.flink.streaming.runtime.tasks.StreamTask.processInput(StreamTask.java:396) ~[flink-dist_2.11-1.12.3.jar:1.12.3]

at org.apache.flink.streaming.runtime.tasks.mailbox.MailboxProcessor.runMailboxLoop(MailboxProcessor.java:191) ~[flink-dist_2.11-1.12.3.jar:1.12.3]

at org.apache.flink.streaming.runtime.tasks.StreamTask.runMailboxLoop(StreamTask.java:617) ~[flink-dist_2.11-1.12.3.jar:1.12.3]

at org.apache.flink.streaming.runtime.tasks.StreamTask.invoke(StreamTask.java:581) ~[flink-dist_2.11-1.12.3.jar:1.12.3]

at org.apache.flink.runtime.taskmanager.Task.doRun(Task.java:755) [flink-dist_2.11-1.12.3.jar:1.12.3]

at org.apache.flink.runtime.taskmanager.Task.run(Task.java:570) [flink-dist_2.11-1.12.3.jar:1.12.3]

at java.lang.Thread.run(Thread.java:750) [?:1.8.0_362]

Suppressed: java.lang.RuntimeException: com.starrocks.data.load.stream.exception.StreamLoadFailException: Stream load failed because of error, db: s_rpt, table: rpt_rt_dau_dim, label: 26639e7c-856e-477f-896f-d2ba3bb37f66,

responseBody: {

"Status": "INTERNAL_ERROR",

"Message": "The size of this batch exceed the max size [104857600] of json type data data [ 106097396 ]. Set ignore_json_size to skip the check, although it may lead huge memory consuming."

}

errorLog: null

at com.starrocks.connector.flink.manager.StarRocksSinkManagerV2.AssertNotException(StarRocksSinkManagerV2.java:367) ~[flink-connector-starrocks-1.2.7_flink-1.12_2.11.jar:?]

at com.starrocks.connector.flink.manager.StarRocksSinkManagerV2.flush(StarRocksSinkManagerV2.java:299) ~[flink-connector-starrocks-1.2.7_flink-1.12_2.11.jar:?]

at com.starrocks.connector.flink.table.sink.StarRocksDynamicSinkFunctionV2.close(StarRocksDynamicSinkFunctionV2.java:184) ~[flink-connector-starrocks-1.2.7_flink-1.12_2.11.jar:?]

at org.apache.flink.api.common.functions.util.FunctionUtils.closeFunction(FunctionUtils.java:41) ~[roi-flinksql-custom-1.0-SNAPSHOT.jar:?]

at org.apache.flink.streaming.api.operators.AbstractUdfStreamOperator.dispose(AbstractUdfStreamOperator.java:117) ~[flink-dist_2.11-1.12.3.jar:1.12.3]

at org.apache.flink.streaming.runtime.tasks.StreamTask.disposeAllOperators(StreamTask.java:791) ~[flink-dist_2.11-1.12.3.jar:1.12.3]

at org.apache.flink.streaming.runtime.tasks.StreamTask.runAndSuppressThrowable(StreamTask.java:770) ~[flink-dist_2.11-1.12.3.jar:1.12.3]

at org.apache.flink.streaming.runtime.tasks.StreamTask.cleanUpInvoke(StreamTask.java:689) ~[flink-dist_2.11-1.12.3.jar:1.12.3]

at org.apache.flink.streaming.runtime.tasks.StreamTask.invoke(StreamTask.java:593) ~[flink-dist_2.11-1.12.3.jar:1.12.3]

at org.apache.flink.runtime.taskmanager.Task.doRun(Task.java:755) [flink-dist_2.11-1.12.3.jar:1.12.3]

at org.apache.flink.runtime.taskmanager.Task.run(Task.java:570) [flink-dist_2.11-1.12.3.jar:1.12.3]

at java.lang.Thread.run(Thread.java:750) [?:1.8.0_362]

Caused by: com.starrocks.data.load.stream.exception.StreamLoadFailException: Stream load failed because of error, db: s_rpt, table: rpt_rt_dau_dim, label: 26639e7c-856e-477f-896f-d2ba3bb37f66,

responseBody: {

"Status": "INTERNAL_ERROR",

"Message": "The size of this batch exceed the max size [104857600] of json type data data [ 106097396 ]. Set ignore_json_size to skip the check, although it may lead huge memory consuming."

}

errorLog: null

at com.starrocks.data.load.stream.DefaultStreamLoader.send(DefaultStreamLoader.java:289) ~[flink-connector-starrocks-1.2.7_flink-1.12_2.11.jar:?]

at com.starrocks.data.load.stream.DefaultStreamLoader.lambda$send$2(DefaultStreamLoader.java:120) ~[flink-connector-starrocks-1.2.7_flink-1.12_2.11.jar:?]

at java.util.concurrent.FutureTask.run(FutureTask.java:266) ~[?:1.8.0_362]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) ~[?:1.8.0_362]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) ~[?:1.8.0_362]

... 1 more

Caused by: org.apache.flink.runtime.checkpoint.CheckpointException: Could not complete snapshot 1 for operator Sink: Sink(table=[default_catalog.default_database.sink_rt_dau_pvreport2sr], fields=[day, EXPR$1, client_act_time, is_client_act, ts, EXPR$5]) (1/1)#0. Failure reason: Checkpoint was declined.

at org.apache.flink.streaming.api.operators.StreamOperatorStateHandler.snapshotState(StreamOperatorStateHandler.java:241) ~[flink-dist_2.11-1.12.3.jar:1.12.3]

at org.apache.flink.streaming.api.operators.StreamOperatorStateHandler.snapshotState(StreamOperatorStateHandler.java:162) ~[flink-dist_2.11-1.12.3.jar:1.12.3]

at org.apache.flink.streaming.api.operators.AbstractStreamOperator.snapshotState(AbstractStreamOperator.java:371) ~[flink-dist_2.11-1.12.3.jar:1.12.3]

at org.apache.flink.streaming.runtime.tasks.SubtaskCheckpointCoordinatorImpl.checkpointStreamOperator(SubtaskCheckpointCoordinatorImpl.java:686) ~[flink-dist_2.11-1.12.3.jar:1.12.3]

at org.apache.flink.streaming.runtime.tasks.SubtaskCheckpointCoordinatorImpl.buildOperatorSnapshotFutures(SubtaskCheckpointCoordinatorImpl.java:607) ~[flink-dist_2.11-1.12.3.jar:1.12.3]

at org.apache.flink.streaming.runtime.tasks.SubtaskCheckpointCoordinatorImpl.takeSnapshotSync(SubtaskCheckpointCoordinatorImpl.java:572) ~[flink-dist_2.11-1.12.3.jar:1.12.3]

at org.apache.flink.streaming.runtime.tasks.SubtaskCheckpointCoordinatorImpl.checkpointState(SubtaskCheckpointCoordinatorImpl.java:298) ~[flink-dist_2.11-1.12.3.jar:1.12.3]

at org.apache.flink.streaming.runtime.tasks.StreamTask.lambda$performCheckpoint$9(StreamTask.java:1004) ~[flink-dist_2.11-1.12.3.jar:1.12.3]

at org.apache.flink.streaming.runtime.tasks.StreamTaskActionExecutor$1.runThrowing(StreamTaskActionExecutor.java:50) ~[flink-dist_2.11-1.12.3.jar:1.12.3]

at org.apache.flink.streaming.runtime.tasks.StreamTask.performCheckpoint(StreamTask.java:988) ~[flink-dist_2.11-1.12.3.jar:1.12.3]

at org.apache.flink.streaming.runtime.tasks.StreamTask.triggerCheckpointOnBarrier(StreamTask.java:947) ~[flink-dist_2.11-1.12.3.jar:1.12.3]

... 13 more

Caused by: org.apache.flink.util.SerializedThrowable: com.starrocks.data.load.stream.exception.StreamLoadFailException: Stream load failed because of error, db: s_rpt, table: rpt_rt_dau_dim, label: 26639e7c-856e-477f-896f-d2ba3bb37f66,

responseBody: {

"Status": "INTERNAL_ERROR",

"Message": "The size of this batch exceed the max size [104857600] of json type data data [ 106097396 ]. Set ignore_json_size to skip the check, although it may lead huge memory consuming."

}

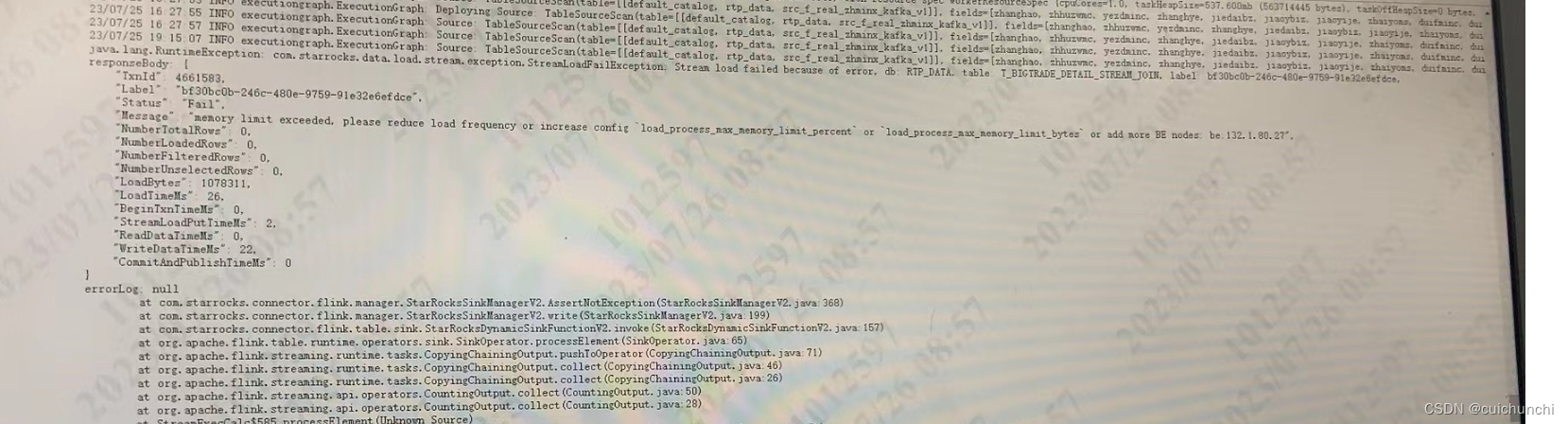
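The two numbers in the `Message` field of the log above can be read off directly. The limit, 104857600 bytes, is exactly 100 MiB, and the rejected batch is about 1.18 MiB over it. A minimal sketch of that arithmetic follows; the helper name `parse_json_size_error` is hypothetical, and the regular expression is written against this one message and is not a documented format.

```python
import re

# Message text copied verbatim from the responseBody in the log above.
MESSAGE = (
    "The size of this batch exceed the max size [104857600] of json type data "
    "data [ 106097396 ]. Set ignore_json_size to skip the check, although it "
    "may lead huge memory consuming."
)


def parse_json_size_error(message):
    """Return (limit_bytes, actual_bytes) from the size-check message, or None."""
    # Hypothetical pattern: first bracketed number is the limit, second is the batch size.
    match = re.search(r"max size \[\s*(\d+)\s*\].*?data \[\s*(\d+)\s*\]", message)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


MIB = 1024 * 1024
limit_bytes, actual_bytes = parse_json_size_error(MESSAGE)
overage_bytes = actual_bytes - limit_bytes

print(f"limit:   {limit_bytes} bytes = {limit_bytes / MIB:.2f} MiB")
print(f"actual:  {actual_bytes} bytes = {actual_bytes / MIB:.2f} MiB")
print(f"overage: {overage_bytes} bytes = {overage_bytes / MIB:.2f} MiB")
```

The overage is small relative to the limit, which suggests the batch only just crossed the threshold when it was flushed at the checkpoint.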

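While writing to StarRocks in real time, the import failed. The Message field in the log above gives the immediate reason: the JSON batch was 106097396 bytes, over the 104857600-byte limit, and the message warns that skipping the check with ignore_json_size may consume a large amount of memory. Configuration parameters such as sink.properties.timeout, sink.connect.timeout-ms, and heartbeat.timeout were checked, but the BE memory settings were not pinned down. It is worth consulting the StarRocks memory management documentation before tuning the configuration.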
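Two directions follow from the error message: keep each flushed batch under the JSON size limit, or disable the check. The sketch below renders a candidate set of sink options as a Flink SQL `WITH` clause. It rests on several assumptions: `render_with_clause` is a hypothetical helper, the 64 MiB value is illustrative only, and passing `ignore_json_size` through the `sink.properties.` prefix is assumed to work like other Stream Load properties. The buffer threshold may also not be a strict cap on the serialized request body, so it is worth verifying the behavior against the connector version in use (1.2.7 in the log). Connection options such as URLs and credentials are omitted.

```python
JSON_SIZE_LIMIT = 104857600  # limit reported in the error message (100 MiB)


def render_with_clause(options):
    """Hypothetical helper: format a dict of options as a Flink SQL WITH clause."""
    lines = [f"  '{key}' = '{value}'" for key, value in options.items()]
    return "WITH (\n" + ",\n".join(lines) + "\n)"


sink_options = {
    "connector": "starrocks",
    "sink.properties.format": "json",
    "sink.properties.strip_outer_array": "true",
    # Direction 1 (assumed value): flush well before a batch reaches the limit.
    "sink.buffer-flush.max-bytes": str(64 * 1024 * 1024),
    # Direction 2 (assumed pass-through, costs BE memory): uncomment to skip the check.
    # "sink.properties.ignore_json_size": "true",
}

# Sanity check: the chosen flush threshold leaves headroom below the limit.
assert int(sink_options["sink.buffer-flush.max-bytes"]) < JSON_SIZE_LIMIT

print(render_with_clause(sink_options))
```

Lowering the flush threshold is the more conservative choice, since the error message itself warns that skipping the size check can lead to heavy memory use.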