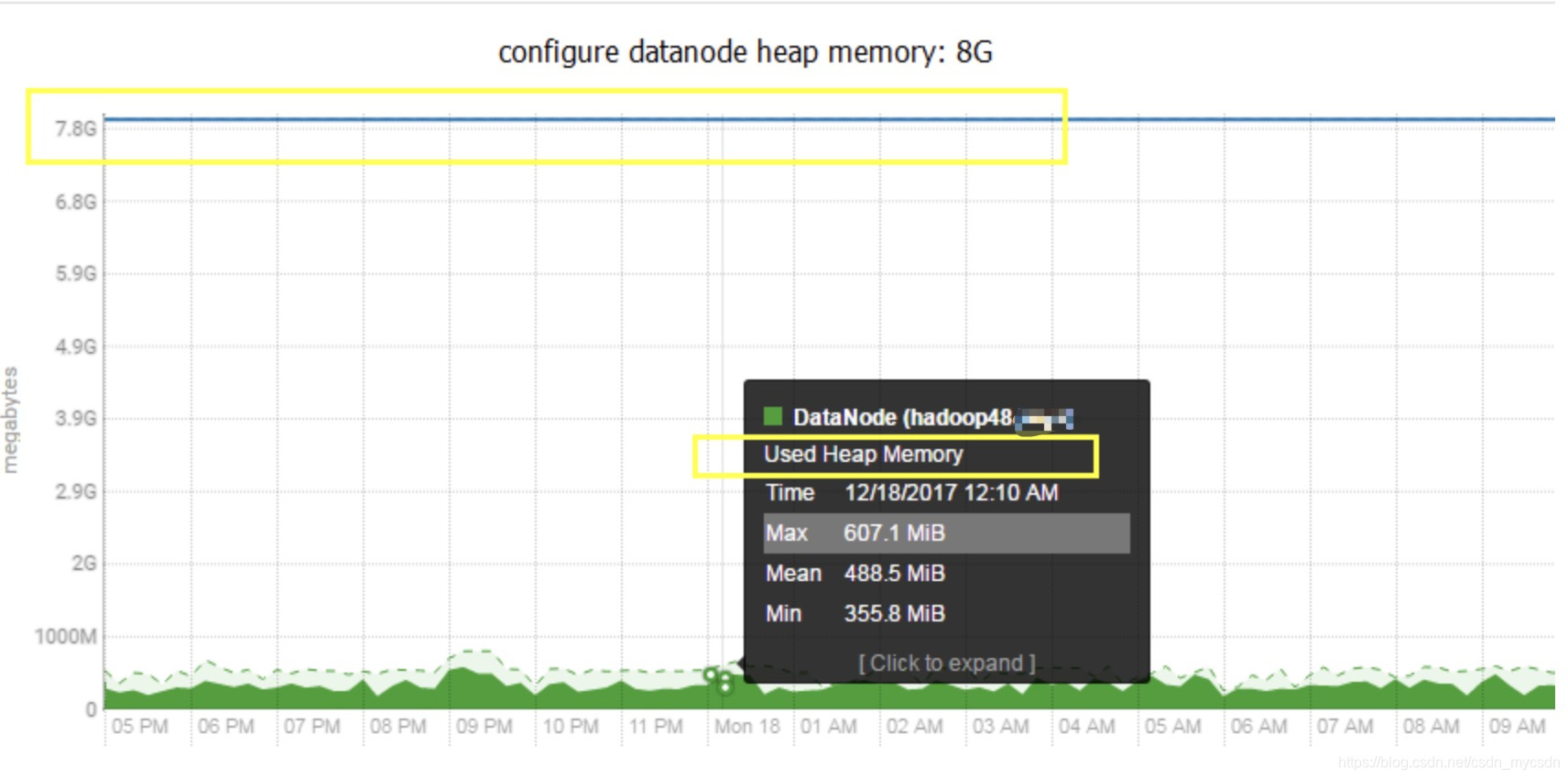

DataNode memory usage as shown in CDH

DataNode error log

2017-12-17 23:58:14,422 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: PacketResponder: BP-1437036909-192.168.17.36-1509097205664:blk_1074725940_987917, type=HAS_DOWNSTREAM_IN_PIPELINE terminating

2017-12-17 23:58:31,425 ERROR org.apache.hadoop.hdfs.server.datanode.DataNode: DataNode is out of memory. Will retry in 30 seconds.

java.lang.OutOfMemoryError: unable to create new native thread

at java.lang.Thread.start0(Native Method)

at java.lang.Thread.start(Thread.java:714)

at org.apache.hadoop.hdfs.server.datanode.DataXceiverServer.run(DataXceiverServer.java:154)

at java.lang.Thread.run(Thread.java:745)

2017-12-17 23:59:01,426 ERROR org.apache.hadoop.hdfs.server.datanode.DataNode: DataNode is out of memory. Will retry in 30 seconds.

java.lang.OutOfMemoryError: unable to create new native thread

at java.lang.Thread.start0(Native Method)

at java.lang.Thread.start(Thread.java:714)

at org.apache.hadoop.hdfs.server.datanode.DataXceiverServer.run(DataXceiverServer.java:154)

at java.lang.Thread.run(Thread.java:745)

2017-12-17 23:59:05,520 ERROR org.apache.hadoop.hdfs.server.datanode.DataNode: DataNode is out of memory. Will retry in 30 seconds.

java.lang.OutOfMemoryError: unable to create new native thread

at java.lang.Thread.start0(Native Method)

at java.lang.Thread.start(Thread.java:714)

at org.apache.hadoop.hdfs.server.datanode.DataXceiverServer.run(DataXceiverServer.java:154)

at java.lang.Thread.run(Thread.java:745)

2017-12-17 23:59:31,429 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: Receiving BP-1437036909-
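The frames show the failure happening in `Thread.start()`, called from `DataXceiverServer.run()`. To see how many threads the DataNode actually holds and which limits apply to it, you can read the process's entries under `/proc` directly. The script below is a minimal sketch. It assumes a Linux host, that it runs as root or as the DataNode's user, and that the DataNode PID is passed as the only argument. The script name and function names are hypothetical and not part of CDH.

```bash
#!/usr/bin/env bash
# dn_thread_check.sh (hypothetical name): report thread usage and thread-related
# limits for one process. Usage: dn_thread_check.sh <pid>   (Linux only)
set -euo pipefail

# Threads in the process, from the "Threads:" line of /proc/<pid>/status.
thread_count() {
  awk '/^Threads:/ { print $2 }' "$1"
}

# Soft RLIMIT_NPROC from /proc/<pid>/limits.
# Line format: "Max processes   <soft>   <hard>   processes"
nproc_soft_limit() {
  awk '/^Max processes/ { print $3 }' "$1"
}

# Number of memory mappings (one line each in /proc/<pid>/maps);
# this is what vm.max_map_count caps.
map_count() {
  wc -l < "$1" | tr -d ' '
}

main() {
  local pid=$1
  local user
  user=$(ps -o user= -p "$pid" | tr -d ' ')
  echo "PID $pid (user $user)"
  echo "  threads in process:       $(thread_count "/proc/$pid/status")"
  # nproc is enforced per user, so count threads of every process the user owns.
  echo "  threads owned by $user:   $(ps -L -u "$user" --no-headers | wc -l)"
  echo "  nproc soft limit:         $(nproc_soft_limit "/proc/$pid/limits")"
  echo "  memory maps in process:   $(map_count "/proc/$pid/maps")"
  echo "  kernel.threads-max:       $(cat /proc/sys/kernel/threads-max)"
  echo "  kernel.pid_max:           $(cat /proc/sys/kernel/pid_max)"
  echo "  vm.max_map_count:         $(cat /proc/sys/vm/max_map_count)"
}

# Only run when a PID is given, so the functions can also be sourced and tested.
if [ "$#" -ge 1 ]; then
  main "$1"
fi
```

For example: `bash dn_thread_check.sh "$(pgrep -f org.apache.hadoop.hdfs.server.datanode.DataNode | head -n 1)"`. This assumes the DataNode's main class appears on its command line. If the user's thread count is close to the nproc soft limit, or the map count is close to `vm.max_map_count`, an OS limit rather than the heap is the likely culprit.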

Why does this error occur?

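According to CDH, the DataNode's peak memory usage was only 607.1 MB (in production, 2 GB of memory is plenty for a DataNode). So why is an out-of-memory error thrown? Despite its name, `java.lang.OutOfMemoryError: unable to create new native thread` does not mean the Java heap is full. It means the JVM asked the operating system for a new thread and the operating system failed to create it. As the stack trace shows, `DataXceiverServer` starts a new thread for each incoming connection. A busy DataNode can therefore run into the operating system's limits on threads, processes or memory mappings while its heap is still mostly empty. The most likely cause is that these system parameters were not configured correctly. The fix: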

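1. Raise the system-wide limits on threads (`kernel.threads-max`), process IDs (`kernel.pid_max`) and memory map areas per process (`vm.max_map_count`), then reload the settings (as root):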

echo "kernel.threads-max=196605" >> /etc/sysctl.conf

echo "kernel.pid_max=196605" >> /etc/sysctl.conf

echo "vm.max_map_count=393210" >> /etc/sysctl.conf

sysctl -p

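2. Raise the per-user limits on open files (`nofile`) and processes (`nproc`, which on Linux also counts threads) in `/etc/security/limits.conf`: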

`* soft nofile 196605`

`* hard nofile 196605`

`* soft nproc 196605`

`* hard nproc 196605`
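After applying both steps, confirm that the new values are actually in effect. `limits.conf` is applied by PAM when a session starts, and a running process keeps the limits it was started with. The DataNode therefore has to be restarted after the change, and a process launched by a service manager rather than a login session may not pick the file up at all. The sketch below checks the live values against the targets used above. It assumes Linux and a DataNode PID passed as the argument. The script name, function names and the `SYSCTL_ROOT` override are hypothetical, and the override exists only to make the checks testable.

```bash
#!/usr/bin/env bash
# verify_limits.sh (hypothetical name): check that the kernel parameters from
# step 1 and the nproc/nofile limits from step 2 apply to a running process.
# Usage: verify_limits.sh <datanode-pid>
set -euo pipefail

# Root of the sysctl tree (hypothetical override, defaults to /proc/sys).
SYSCTL_ROOT=${SYSCTL_ROOT:-/proc/sys}

# Read a sysctl by key: kernel.threads-max -> $SYSCTL_ROOT/kernel/threads-max
sysctl_value() {
  cat "$SYSCTL_ROOT/$(printf '%s' "$1" | tr . /)"
}

# Print OK/LOW for one value against a minimum; return 1 if it is too low.
# "unlimited" counts as OK.
check_min() {
  local name=$1 actual=$2 min=$3
  if [ "$actual" = "unlimited" ] || [ "$actual" -ge "$min" ]; then
    echo "OK   $name = $actual"
  else
    echo "LOW  $name = $actual (expected >= $min)"
    return 1
  fi
}

main() {
  local pid=$1 failed=0
  local limits="/proc/$pid/limits"
  check_min kernel.threads-max "$(sysctl_value kernel.threads-max)" 196605 || failed=1
  check_min kernel.pid_max     "$(sysctl_value kernel.pid_max)"     196605 || failed=1
  check_min vm.max_map_count   "$(sysctl_value vm.max_map_count)"   393210 || failed=1
  # Soft limits of the running process: the "Max processes" soft value is
  # field 3, the "Max open files" soft value is field 4.
  check_min "nproc (pid $pid)"  "$(awk '/^Max processes/ {print $3}' "$limits")"  196605 || failed=1
  check_min "nofile (pid $pid)" "$(awk '/^Max open files/ {print $4}' "$limits")" 196605 || failed=1
  return "$failed"
}

# Only run when a PID is given, so the functions can also be sourced and tested.
if [ "$#" -ge 1 ]; then
  main "$1"
fi
```

Run it after restarting the DataNode, for example `bash verify_limits.sh <datanode-pid>`. It prints one line per setting and exits with a non-zero status if any value is below its target.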

Analysis of a CDH DataNode out-of-memory error


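This article analyzes why a DataNode in a CDH cluster threw an out-of-memory error even though its actual memory usage was far below the configured limit. It records the error log in detail and gives a solution that adjusts kernel parameters and per-user limits so that the DataNode runs stably.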

